Publications

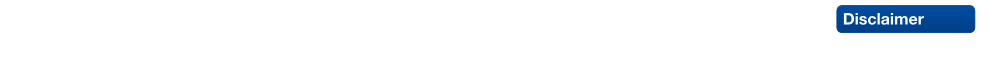

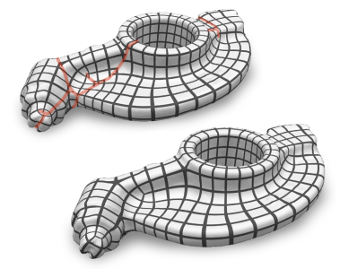

Reduced-Order Shape Optimization Using Offset Surfaces

Proceedings of the 2015 SIGGRAPH Conference

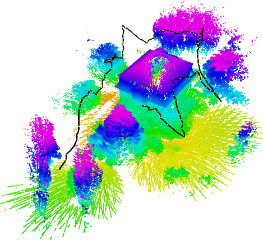

Given the 2-manifold surface of a 3d object, we propose a novel method for computing an offset surface with varying thickness such that the solid volume between the surface and its offset satisfies a set of prescribed constraints while also minimizing a given objective functional. Since both the constraints and the objective functional can easily be adjusted to specific application requirements, our method provides a flexible and powerful tool for shape optimization. We use manifold harmonics to derive a reduced-order formulation of the optimization problem, which guarantees a smooth offset surface and speeds up the computation independently of the input mesh resolution without affecting the quality of the result. The constrained optimization problem can be solved in a numerically robust manner with commodity solvers. Furthermore, the method allows us to optimize an inner and an outer offset simultaneously in order to increase the degrees of freedom. We demonstrate our method on a number of examples in which we control the physical mass properties of rigid objects for the purpose of 3d printing.
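The mass properties mentioned above (mass and center of mass) are the kind of quantities such constraints and objectives are built from. The following is a minimal sketch, assuming a watertight triangle mesh with outward-facing (counter-clockwise) triangles and uniform density; it uses the standard divergence-theorem decomposition into signed tetrahedra and is not the paper's reduced-order optimization. The helper `shell_properties` is a hypothetical illustration of how the solid between an outer surface and an inner offset combines.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mass_properties(vertices: Sequence[Vec3],
                    faces: Sequence[Tuple[int, int, int]],
                    density: float = 1.0) -> Tuple[float, Vec3]:
    """Return (mass, center_of_mass) of the solid bounded by a closed mesh.

    Assumes a watertight mesh whose triangles are ordered counter-clockwise
    when seen from outside (outward normals).
    """
    volume = 0.0
    cx = cy = cz = 0.0
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        # Signed volume of the tetrahedron (origin, a, b, c): a . (b x c) / 6.
        det = (a[0] * (b[1] * c[2] - b[2] * c[1])
               - a[1] * (b[0] * c[2] - b[2] * c[0])
               + a[2] * (b[0] * c[1] - b[1] * c[0]))
        v = det / 6.0
        volume += v
        # Accumulate volume-weighted tetrahedron centroids (origin + a + b + c) / 4.
        cx += v * (a[0] + b[0] + c[0]) / 4.0
        cy += v * (a[1] + b[1] + c[1]) / 4.0
        cz += v * (a[2] + b[2] + c[2]) / 4.0
    return density * volume, (cx / volume, cy / volume, cz / volume)


def shell_properties(outer: Tuple[float, Vec3],
                     inner: Tuple[float, Vec3]) -> Tuple[float, Vec3]:
    """Mass and center of mass of the solid between an outer and an inner surface."""
    (m_o, c_o), (m_i, c_i) = outer, inner
    m = m_o - m_i
    com = tuple((m_o * p_o - m_i * p_i) / m for p_o, p_i in zip(c_o, c_i))
    return m, com
```

Because every quantity is a sum over triangles, moving the inner offset changes mass and center of mass smoothly, which is what makes such terms usable inside a constrained optimizer.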

@article{musialski-2015-souos,
title = "Reduced-Order Shape Optimization Using Offset Surfaces",
author = "Przemyslaw Musialski and Thomas Auzinger and Michael Birsak
and Michael Wimmer and Leif Kobbelt",
year = "2015",
abstract = "Given the 2-manifold surface of a 3d object, we propose a
novel method for the computation of an offset surface with
varying thickness such that the solid volume between the
surface and its offset satisfies a set of prescribed
constraints and at the same time minimizes a given objective
functional. Since the constraints as well as the objective
functional can easily be adjusted to specific application
requirements, our method provides a flexible and powerful
tool for shape optimization. We use manifold harmonics to
derive a reduced-order formulation of the optimization
problem which guarantees a smooth offset surface and speeds
up the computation independently from the input mesh
resolution without affecting the quality of the result. The
constrained optimization problem can be solved in a
numerically robust manner with commodity solvers.
Furthermore, the method allows to simultaneously optimize an
inner and an outer offset in order to increase the degrees
of freedom. We demonstrate our method in a number of
examples where we control the physical mass properties of
rigid objects for the purpose of 3d printing.",
pages = "to appear--9",
month = aug,
number = "4",
event = "ACM SIGGRAPH 2015",
journal = "ACM Transactions on Graphics (ACM SIGGRAPH 2015)",
volume = "34",
location = "Los Angeles, CA, USA",
keywords = "reduced-order models, shape optimization, computational
geometry, geometry processing, physical mass properties",
URL = "http://www.cg.tuwien.ac.at/research/publications/2015/musialski-2015-souos/",
}

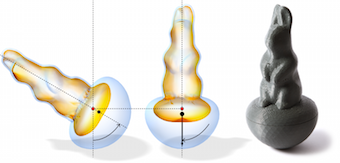

BendFields: Regularized Curvature Fields from Rough Concept Sketches

Designers frequently draw curvature lines to convey the bending of smooth surfaces in concept sketches. We present a method to extrapolate curvature lines in a rough concept sketch, recovering the intended 3D curvature field and surface normal at each pixel of the sketch. This 3D information allows us to enrich the sketch with 3D-looking shading and texturing. We first introduce the concept of regularized curvature lines that model the lines designers draw over curved surfaces, encompassing curvature lines and their extension as geodesics over flat or umbilical regions. We build on this concept to define the orthogonal cross field that assigns two regularized curvature lines to each point of a 3D surface. Our algorithm first estimates the projection of this cross field in the drawing, which is nonorthogonal due to foreshortening. We formulate this estimation as a scattered interpolation of the strokes drawn in the sketch, which makes our method robust to the sketchy lines that are typical of design sketches. Our interpolation relies on a novel smoothness energy that we derive from our definition of regularized curvature lines. Optimizing this energy subject to the stroke constraints produces a dense nonorthogonal 2D cross field, which we then lift to 3D by imposing orthogonality. Thus, one central concept of our approach is the generalization of existing cross field algorithms to the nonorthogonal case. We demonstrate our algorithm on a variety of concept sketches with various levels of sketchiness. We also compare our approach with existing work that takes clean vector drawings as input.
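For orientation, the orthogonal case that BendFields generalizes is commonly handled in the cross-field literature by encoding a cross (four directions θ + kπ/2) as the complex number exp(4iθ), so that the four equivalent directions map to the same value and crosses can be averaged without matching branches. The sketch below shows only that standard orthogonal representation; it is an illustrative assumption and not the nonorthogonal formulation or smoothness energy introduced in the paper.

```python
import cmath
import math
from typing import Sequence


def cross_to_complex(theta: float) -> complex:
    """Encode an orthogonal cross (directions theta + k*pi/2) as exp(4i*theta)."""
    return cmath.exp(4j * theta)


def complex_to_cross(z: complex) -> float:
    """Recover a representative direction angle in (-pi/4, pi/4]."""
    return cmath.phase(z) / 4.0


def average_crosses(thetas: Sequence[float]) -> float:
    """Average orthogonal crosses; the 4-fold encoding removes the branch ambiguity."""
    z = sum(cross_to_complex(t) for t in thetas) / len(thetas)
    return complex_to_cross(z)
```

For example, crosses at 10° and 80° describe directions that differ by only 20° modulo 90°, and their average is the cross at 0°. A nonorthogonal cross cannot be captured by a single angle in this way, which is one reason the paper needs a generalized formulation.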

@Article{IBB15,
author = "Iarussi, Emmanuel and Bommes, David and Bousseau, Adrien",
title = "BendFields: Regularized Curvature Fields from Rough Concept Sketches",
journal = "ACM Transactions on Graphics",
year = "2015",
url = "http://www-sop.inria.fr/reves/Basilic/2015/IBB15"
}

Visual landmark recognition from Internet photo collections: A large-scale evaluation


Quantized Global Parametrization

Global surface parametrization often requires the use of cuts or charts due to non-trivial topology. In recent years, research has focused on so-called seamless parametrizations, where the transition functions across the cuts are rigid transformations with a rotation by some multiple of 90 degrees. Of particular interest, e.g. for quadrilateral meshing, paneling, or texturing, are those instances where, in addition, the translational part of these transitions is integral (or, more generally, quantized). We show that finding even an arbitrary valid quantization (one that does not imply parametric degeneracies), let alone an optimal one, is a complex combinatorial problem. We present a novel method that solves it, i.e. that finds valid as well as high-quality quantizations. It is based on an original approach to quickly construct solutions to linear Diophantine equation systems, exploiting the specific geometric nature of the parametrization problem. We thereby outperform the state of the art by a wide margin, sometimes by several orders of magnitude.
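To make "linear Diophantine equation" concrete: such an equation asks for integer unknowns, and its textbook building block is the single equation a·x + b·y = c, which is solvable exactly when gcd(a, b) divides c. The sketch below solves only that single-equation case with the extended Euclidean algorithm; the paper's method handles whole systems and exploits the geometry of the parametrization, which this example does not attempt.

```python
from typing import Optional, Tuple


def extended_gcd(a: int, b: int) -> Tuple[int, int, int]:
    """Return (g, x, y) with a*x + b*y == g == gcd(a, b) and g >= 0."""
    if b == 0:
        return (abs(a), 1 if a >= 0 else -1, 0)
    g, x, y = extended_gcd(b, a % b)
    # From b*x + (a % b)*y == g and a % b == a - (a // b)*b.
    return (g, y, x - (a // b) * y)


def solve_linear_diophantine(a: int, b: int, c: int) -> Optional[Tuple[int, int]]:
    """Find one integer solution of a*x + b*y == c, or None if none exists."""
    g, x, y = extended_gcd(a, b)
    if g == 0:
        return (0, 0) if c == 0 else None
    if c % g != 0:
        return None  # c must be a multiple of gcd(a, b)
    k = c // g
    return (x * k, y * k)
```

For instance, 3x + 5y = 7 yields (14, -7), while 4x + 6y = 5 has no integer solution because gcd(4, 6) = 2 does not divide 5.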

Real-Time Isosurface Extraction with View-Dependent Level of Detail and Applications

Volumetric scalar datasets are common in many scientific, engineering, and medical applications, where they originate from measurements or simulations. Furthermore, they can represent geometric scene content, e.g. as distance or density fields. Isosurfaces are often extracted, either for indirect volume visualization in the former case or simply to obtain a polygonal representation in the latter. However, even moderately sized volume datasets can result in complex isosurfaces that are challenging to recompute in real time, e.g. when the user modifies the isovalue or when the data itself is dynamic. In this paper, we present a GPU-friendly algorithm for the extraction of isosurfaces, which provides adaptive level-of-detail rendering with view-dependent tessellation. It is based on a longest edge bisection scheme where the resulting tetrahedral cells are subdivided into four hexahedra, which then form the domain for the subsequent isosurface extraction step. Our algorithm generates meshes with good triangle quality even for highly nonlinear scalar data. In contrast to previous methods, it does not require any stitching between regions of different levels of detail. As all computation is performed at run time and no preprocessing is required, the algorithm naturally supports dynamic data and allows us to change isovalues at any time.
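The refinement step named above, longest edge bisection, splits a tetrahedron at the midpoint of its longest edge into two children of equal volume. The following is a minimal CPU sketch of that single step; the tie-breaking rule (first longest edge found) is an assumption, and the subsequent split of each tetrahedron into four hexahedra as well as the GPU-side level-of-detail selection are not shown.

```python
from itertools import combinations
from typing import Tuple

Vec3 = Tuple[float, float, float]
Tet = Tuple[Vec3, Vec3, Vec3, Vec3]


def _dist2(p: Vec3, q: Vec3) -> float:
    return sum((a - b) ** 2 for a, b in zip(p, q))


def bisect_longest_edge(tet: Tet) -> Tuple[Tet, Tet]:
    """Split a tetrahedron at the midpoint of its longest edge into two children."""
    # Vertex index pair of the longest edge (first one wins on ties).
    i, j = max(combinations(range(4), 2),
               key=lambda e: _dist2(tet[e[0]], tet[e[1]]))
    mid = tuple((a + b) / 2.0 for a, b in zip(tet[i], tet[j]))
    # Each child replaces one endpoint of the bisected edge by the midpoint.
    child_a = tuple(mid if k == j else v for k, v in enumerate(tet))
    child_b = tuple(mid if k == i else v for k, v in enumerate(tet))
    return child_a, child_b


def volume(tet: Tet) -> float:
    """Unsigned tetrahedron volume |det[b-a, c-a, d-a]| / 6."""
    a, b, c, d = tet
    u = [b[k] - a[k] for k in range(3)]
    v = [c[k] - a[k] for k in range(3)]
    w = [d[k] - a[k] for k in range(3)]
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return abs(det) / 6.0
```

In a complete scheme, the tie-breaking must be consistent between neighboring cells so that shared faces are split the same way; that is what lets bisection hierarchies avoid stitching between detail levels.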

@article{SBD2015,
title = {Real-Time Isosurface Extraction with View-Dependent Level of Detail and Applications},
author = {Manuel Scholz and Jan Bender and Carsten Dachsbacher},
year = {2015},
volume = {34},
pages = {103--115},
number = {1},
doi = {10.1111/cgf.12462},
issn = {1467-8659},
journal = {Computer Graphics Forum},
url = {http://dx.doi.org/10.1111/cgf.12462}
}

Position-Based Simulation Methods in Computer Graphics

The physically-based simulation of mechanical effects has been an important research topic in computer graphics for more than two decades. Classical methods in this field discretize Newton's second law and determine different forces to simulate various effects like stretching, shearing, and bending of deformable bodies or pressure and viscosity of fluids, to mention just a few. Given these forces, velocities and finally positions are determined by a numerical integration of the resulting accelerations.

In recent years, position-based simulation methods have become popular in the graphics community. In contrast to classical simulation approaches, these methods compute the position changes in each simulation step directly, based on the solution of a quasi-static problem. Therefore, position-based approaches are fast, stable, and controllable, which makes them well suited for use in interactive environments. However, these methods are generally not as accurate as force-based methods, but they still provide visual plausibility. Hence, the main application areas of position-based simulation are virtual reality, computer games, and special effects in movies and commercials.

In this tutorial we first introduce the basic concept of position-based dynamics. Then we present different solvers and compare them with the classical implicit Euler method. We discuss approaches to improve the convergence of these solvers. Moreover, we show how position-based methods are applied to simulate hair, cloth, volumetric deformable bodies, rigid body systems and fluids. We also demonstrate how complex effects like anisotropy or plasticity can be simulated and introduce approaches to improve the performance. Finally, we give an outlook and discuss open problems.
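The basic concept of position-based dynamics can be illustrated with its most common constraint: keeping two particles at a rest distance d. Instead of computing a spring force, the solver moves the particles directly along the constraint gradient, weighted by their inverse masses. The sketch below shows one Gauss-Seidel projection of C(p1, p2) = |p1 − p2| − d; the optional `stiffness` factor is an assumption of this sketch rather than a parameter taken from the tutorial.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_distance_constraint(p1: Vec3, p2: Vec3, w1: float, w2: float,
                                rest_length: float,
                                stiffness: float = 1.0) -> Tuple[Vec3, Vec3]:
    """One projection of C(p1, p2) = |p1 - p2| - rest_length = 0.

    w1 and w2 are inverse masses; a value of 0 pins that particle in place.
    """
    diff = [a - b for a, b in zip(p1, p2)]
    length = sum(x * x for x in diff) ** 0.5
    w_sum = w1 + w2
    if length == 0.0 or w_sum == 0.0:
        return p1, p2  # degenerate: direction undefined or both particles pinned
    n = [x / length for x in diff]  # unit constraint gradient w.r.t. p1
    s = stiffness * (length - rest_length) / w_sum
    new_p1 = tuple(a - w1 * s * ni for a, ni in zip(p1, n))
    new_p2 = tuple(b + w2 * s * ni for b, ni in zip(p2, n))
    return new_p1, new_p2
```

In a full time step, positions are first predicted from velocities (x* = x + Δt·v), all constraints are projected for a few iterations, and velocities are then updated as (x_new − x) / Δt. The number of iterations trades accuracy for speed, which is where the solver improvements discussed in the tutorial come in.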

@inproceedings{BMM2015,
title = "Position-Based Simulation Methods in Computer Graphics",
author = "Jan Bender and Matthias M{\"u}ller and Miles Macklin",
year = "2015",
booktitle = "EUROGRAPHICS 2015 Tutorials",
publisher = "Eurographics Association",
location = "Zurich, Switzerland"
}

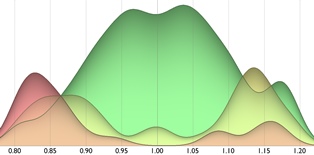

Biternion Nets: Continuous Head Pose Regression from Discrete Training Labels

TL;DR: By doing the obvious thing of encoding an angle φ as (cos φ, sin φ), we can do cool things and simplify data labeling requirements.

While head pose estimation has been studied for some time, continuous head pose estimation is still an open problem. Most approaches either cannot deal with the periodicity of angular data or require very fine-grained regression labels. We introduce biternion nets, a CNN-based approach that can be trained on very coarse regression labels and still estimate fully continuous 360° head poses. We show state-of-the-art results on several publicly available datasets. Finally, we demonstrate how easy it is to record and annotate a new dataset with coarse orientation labels in order to obtain continuous head pose estimates using our biternion nets.
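The encoding from the TL;DR can be sketched directly: an angle φ becomes the 2-vector (cos φ, sin φ), a raw 2-D network output is projected back onto the unit circle, and `atan2` recovers the angle. This removes the 0°/360° discontinuity because nearby angles map to nearby vectors. The cosine-distance cost below (1 − cos of the angle difference) is an illustrative assumption; the specific loss functions used in the paper are not reproduced here.

```python
import math
from typing import Tuple

Biternion = Tuple[float, float]


def encode(phi: float) -> Biternion:
    """Encode an angle (radians) as a biternion (cos phi, sin phi)."""
    return (math.cos(phi), math.sin(phi))


def normalize(v: Biternion) -> Biternion:
    """Project a raw 2-D network output onto the unit circle."""
    n = math.hypot(v[0], v[1])
    return (v[0] / n, v[1] / n)


def decode(q: Biternion) -> float:
    """Recover the angle in (-pi, pi] from a (not necessarily unit) biternion."""
    return math.atan2(q[1], q[0])


def cosine_loss(pred: Biternion, target: Biternion) -> float:
    """1 - cos(phi_pred - phi_target), computed as 1 minus a dot product."""
    p = normalize(pred)
    return 1.0 - (p[0] * target[0] + p[1] * target[1])
```

With this cost, predicting 359° for a target of 1° costs almost nothing, whereas a naive regression on the raw angle would report an error of 358°.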

@inproceedings{Beyer2015BiternionNets,
author = {Lucas Beyer and Alexander Hermans and Bastian Leibe},
title = {Biternion Nets: Continuous Head Pose Regression from Discrete Training Labels},
booktitle = {Pattern Recognition},
publisher = {Springer},
series = {Lecture Notes in Computer Science},
volume = {9358},
pages = {157--168},
year = {2015},
isbn = {978-3-319-24946-9},
doi = {10.1007/978-3-319-24947-6_13},
ee = {http://lucasb.eyer.be/academic/biternions/biternions_gcpr15.pdf},
}

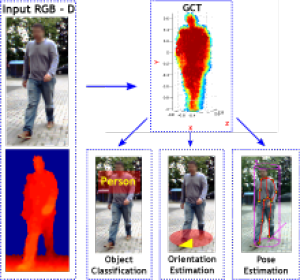

A Fixed-Dimensional 3D Shape Representation for Matching Partially Observed Objects in Street Scenes

In this paper, we present an object-centric, fixed-dimensional 3D shape representation for robust matching of partially observed object shapes, which is an important component of object categorization from 3D data. A major problem when working with RGB-D data from stereo, Kinect, or laser sensors is that the 3D information is typically quite noisy. For that reason, we accumulate shape information over time and register it in a common reference frame. Matching the resulting shapes requires a strategy for dealing with partial observations. We therefore investigate several distance functions and kernels that implement different such strategies and compare their matching performance in quantitative experiments. We show that the resulting representation achieves good results for a large variety of vision tasks, such as multi-class classification, person orientation estimation, and articulated body pose estimation, where robust 3D shape matching is essential.
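"Fixed-dimensional" means that every object is mapped to the same number of cells, so two shapes can be compared element by element. One simple strategy for partial observations is to compare only the cells that were observed in both shapes. The sketch below illustrates that idea; the cell encoding (1.0 occupied, 0.0 observed free, `None` unobserved) and the mean-squared comparison are hypothetical choices, not one of the specific distance functions or kernels evaluated in the paper.

```python
from typing import Optional, Sequence

# Hypothetical cell encoding: 1.0 = occupied, 0.0 = observed free, None = unobserved.
Cell = Optional[float]


def masked_distance(a: Sequence[Cell], b: Sequence[Cell]) -> float:
    """Mean squared difference over the cells observed in both shapes."""
    if len(a) != len(b):
        raise ValueError("shapes must share the same fixed-dimensional grid")
    diffs = [(x - y) ** 2 for x, y in zip(a, b) if x is not None and y is not None]
    if not diffs:
        return float("inf")  # no common observations: treat as incomparable
    return sum(diffs) / len(diffs)
```

Normalizing by the number of shared cells keeps the distance comparable between heavily and lightly observed objects, at the price of becoming unreliable when the overlap is very small.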

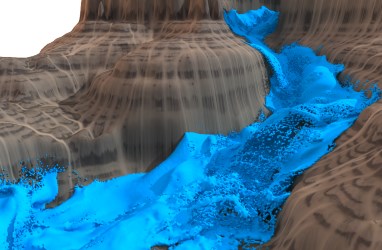

Divergence-Free Smoothed Particle Hydrodynamics

In this paper we introduce an efficient and stable implicit SPH method for the physically-based simulation of incompressible fluids. In the area of computer graphics the most efficient SPH approaches focus solely on the correction of the density error to prevent volume compression. However, the continuity equation for incompressible flow also demands a divergence-free velocity field which is neglected by most methods. Although a few methods consider velocity divergence, they are either slow or have a perceivable density fluctuation.

Our novel method uses an efficient combination of two pressure solvers which enforce low volume compression (below 0.01%) and a divergence-free velocity field. This can be seen as enforcing incompressibility both on position level and velocity level. The first part is essential for realistic physical behavior while the divergence-free state increases the stability significantly and reduces the number of solver iterations. Moreover, it allows larger time steps which yields a considerable performance gain since particle neighborhoods have to be updated less frequently. Therefore, our divergence-free SPH (DFSPH) approach is significantly faster and more stable than current state-of-the-art SPH methods for incompressible fluids. We demonstrate this in simulations with millions of fast moving particles.
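Both the density error and the velocity divergence that DFSPH corrects are evaluated with SPH kernel sums. As a reference point, the sketch below computes the standard summation density ρ_i = Σ_j m_j W(|x_i − x_j|, h) with Monaghan's 3D cubic spline kernel (smoothing length h, support 2h). The kernel choice and the brute-force O(n²) neighbor loop are assumptions made for brevity; the paper's pressure solvers and neighborhood search are not shown.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cubic_spline_kernel(r: float, h: float) -> float:
    """Monaghan's 3-D cubic spline kernel with smoothing length h (support 2h)."""
    q = r / h
    sigma = 1.0 / (math.pi * h ** 3)  # normalizes the kernel to integrate to 1
    if q < 1.0:
        return sigma * (1.0 - 1.5 * q ** 2 + 0.75 * q ** 3)
    if q < 2.0:
        return sigma * 0.25 * (2.0 - q) ** 3
    return 0.0


def densities(positions: Sequence[Vec3], masses: Sequence[float], h: float) -> List[float]:
    """Summation density for every particle (brute force, O(n^2))."""
    rho = []
    for xi in positions:
        total = 0.0
        for xj, mj in zip(positions, masses):
            total += mj * cubic_spline_kernel(math.dist(xi, xj), h)
        rho.append(total)
    return rho
```

The relative density error (ρ_i − ρ_0) / ρ_0 computed from such sums is the quantity that the "below 0.01%" compression bound refers to; larger time steps reduce how often the neighbor sets feeding these sums must be rebuilt.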

@INPROCEEDINGS{Bender2015,
author = {Jan Bender and Dan Koschier},
title = {Divergence-Free Smoothed Particle Hydrodynamics},
booktitle = {Proceedings of the 2015 ACM SIGGRAPH/Eurographics Symposium on Computer Animation},
year = {2015},
publisher = {ACM},
doi = {10.1145/2786784.2786796}
}

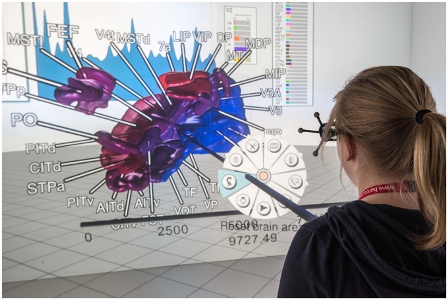

Integrating Visualizations into Modeling NEST Simulations

Modeling large-scale spiking neural networks that show realistic biological behavior in their dynamics is a complex and tedious task. Since these networks consist of millions of interconnected neurons, their simulation produces an immense amount of data. In recent years, it has become possible to simulate even larger networks. However, solutions that help researchers understand the simulation's complex emergent behavior by means of visualization are still lacking. While developing tools to partially fill this gap, we encountered the challenge of integrating these tools easily into the neuroscientists' daily workflow. To understand what makes this so challenging, we looked into the workflows of our collaborators and analyzed how they use the visualizations to solve their daily problems. We identified two major issues: first, the focus of the analysis process can change rapidly, which requires switching to the visualization tool that assists in the current problem domain. Second, because simulations produce heterogeneous data, researchers want to relate the different data to each other in order to investigate them effectively. Since a monolithic application that processes and visualizes all data modalities and reflects all combinations of possible workflows in a holistic way is most likely impossible to develop and maintain, a more feasible approach is a software architecture that offers specialized visualization tools that run simultaneously and can be linked together to reflect the current workflow. To this end, we have developed a software architecture that allows neuroscientists to integrate visualization tools more closely into their modeling tasks. In addition, it forms the basis for semantic linking of different visualizations to reflect the current workflow. In this paper, we present this architecture and substantiate the usefulness of our approach with common use cases we encountered in our collaborative work.

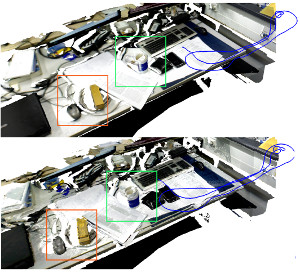

Multi-band Hough Forests for Detecting Humans with Reflective Safety Clothing from Mobile Machinery

We address the problem of human detection from heavy mobile machinery and robotic equipment operating at industrial working sites. Exploiting the fact that workers are typically obliged to wear high-visibility clothing with reflective markers, we propose a new recognition algorithm that specifically incorporates the highly discriminative features of the safety garments in the detection process. Termed Multi-band Hough Forest, our detector fuses the input from active near-infrared (NIR) and RGB color vision to learn a human appearance model that not only allows us to detect and localize industrial workers, but also to estimate their body orientation. We further propose an efficient pipeline for automated generation of training data with high-quality body part annotations that are used in training to increase detector performance. We report a thorough experimental evaluation on challenging image sequences from a real-world production environment, where persons appear in a variety of upright and non-upright body positions.
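Hough-forest detectors localize objects by letting image patches cast weighted votes for the object center, using offsets stored in the leaves reached by each patch; the detection is the strongest peak in the vote accumulator. The sketch below shows only that generic voting step. The vote format `(patch_position, offset, weight)` and the grid cell size are assumptions, and the multi-band NIR/RGB features and body-orientation estimation of the paper are not modeled.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Vec2 = Tuple[float, float]
Vote = Tuple[Vec2, Vec2, float]  # (patch position, offset to center, weight)


def hough_vote(votes: Iterable[Vote], cell: float = 4.0) -> Tuple[Tuple[int, int], float]:
    """Accumulate weighted center votes on a grid and return the strongest cell.

    Raises ValueError if no votes are given.
    """
    acc: Dict[Tuple[int, int], float] = defaultdict(float)
    for (px, py), (dx, dy), w in votes:
        cx, cy = px + dx, py + dy  # center predicted by this patch
        acc[(int(cx // cell), int(cy // cell))] += w
    if not acc:
        raise ValueError("no votes")
    best = max(acc, key=acc.get)
    return best, acc[best]
```

Because each patch votes independently, occluded or unusually posed workers can still be found as long as enough visible patches, such as those on reflective markers, agree on a center.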

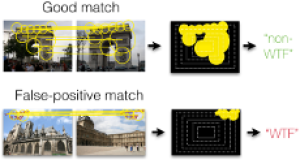

Fixing WTFs: Detecting Image Matches caused by Watermarks, Timestamps, and Frames in Internet Photos

An increasing number of photos in Internet photo collections come with watermarks, timestamps, or frames (hereafter called WTFs) embedded in the image content. In image retrieval, such WTFs often cause false-positive matches. In image clustering, these false-positive matches can cause clusters of different buildings to be merged into one. This harms applications like landmark recognition or large-scale structure-from-motion, which rely on clean building clusters. We propose a simple but highly effective detector for such false-positive matches. Given a matching image pair with an estimated homography, we first determine similar regions in both images. Exploiting the fact that WTFs typically appear near the border, we build a spatial histogram of the similar regions and apply a binary classifier to decide whether the match is due to a WTF. Based on a large-scale dataset of WTFs we collected from Internet photo collections, we show that our approach is general enough to recognize a large variety of watermarks, timestamps, and frames, and that it is efficient enough for large-scale applications. In addition, we show that our method fixes the problems that WTFs cause in image clustering applications. The source code is publicly available and easy to integrate into existing retrieval and clustering systems.
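The spatial histogram step can be sketched as follows: the locations of similar regions are binned on a coarse grid over the image, and the normalized histogram becomes a feature vector for the binary classifier. Matches caused by WTFs concentrate their mass in the border bins, while genuine building matches tend to spread toward the center. The point-based input, the 4×4 grid, and the normalization are assumptions of this sketch; the paper's region definition and classifier are not reproduced.

```python
from typing import List, Sequence, Tuple


def spatial_histogram(points: Sequence[Tuple[float, float]], width: float, height: float,
                      bins: int = 4) -> List[float]:
    """Normalized bins x bins histogram of similar-region locations (row-major)."""
    hist = [0.0] * (bins * bins)
    for x, y in points:
        # Map to a bin index, clamping points that lie exactly on the far border.
        bx = min(int(x / width * bins), bins - 1)
        by = min(int(y / height * bins), bins - 1)
        hist[by * bins + bx] += 1.0
    total = sum(hist)
    return [v / total for v in hist] if total else hist
```

A fixed-length vector like this is cheap to compute per match, which fits the goal of running the check on every candidate pair in large-scale retrieval.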

Disentangling the Impact of Social Groups on Response Times and Movement Dynamics in Evacuations

Crowd evacuations are paradigmatic examples for collective behaviour, as interactions between individuals lead to the overall movement dynamics. Approaches assuming that all individuals interact in the same way have significantly improved our understanding of pedestrian crowd evacuations. However, this scenario is unlikely, as many pedestrians move in social groups that are based on friendship or kinship. We test how the presence of social groups affects the egress time of individuals and crowds in a representative crowd evacuation experiment. Our results suggest that the presence of social groups increases egress times and that this is largely due to differences at two stages of evacuations. First, individuals in social groups take longer to show a movement response at the start of evacuations, and, second, they take longer to move into the vicinity of the exits once they have started to move towards them. Surprisingly, there are no discernible time differences between the movement of independent individuals and individuals in groups directly in front of the exits. We explain these results and discuss their implications. Our findings elucidate behavioural differences between independent individuals and social groups in evacuations. Such insights are crucial for the control of crowd evacuations and for planning mass events.

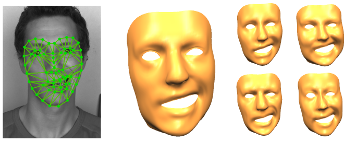

Data Driven 3D Face Tracking Based on a Facial Deformation Model

We introduce a new markerless 3D face tracking approach for 2D video streams captured by a single consumer-grade camera. Our approach is based on tracking 2D features in the video and matching them with the projections of the corresponding feature points of a deformable 3D model. In this way, we estimate the initial shape and pose of the face. To make tracking and reconstruction more robust, we add a smoothness prior for pose changes as well as for deformations of the face. Our major contribution lies in the formulation of the smooth deformation prior, which we derive from a large database of previously captured facial animations showing different (dynamic) facial expressions of a fairly large number of subjects. We split these animation sequences into snippets of fixed length, which we use to predict the facial motion based on previous frames. In order to keep the deformation model compact and independent of the individual physiognomy, we represent it by deformation gradients (instead of vertex positions) and apply a principal component analysis in deformation gradient space to extract the major modes of facial deformation. Since the facial deformation is optimized during tracking, it is particularly easy to transfer it to other physiognomies and thereby retarget the facial expressions. We demonstrate the effectiveness of our technique on a number of examples.
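A per-triangle deformation gradient is the 3×3 matrix F that maps a rest triangle onto its deformed pose, independent of where the triangle sits on a particular face. The sketch below uses one common construction from the deformation-transfer literature (two edges plus a normal scaled by the inverse square root of its length) and then applies PCA via SVD to the flattened gradients of many frames. The frame construction, array shapes, and function names are assumptions; the paper's exact parametrization and prior are not reproduced.

```python
import numpy as np


def _frame(v1: np.ndarray, v2: np.ndarray, v3: np.ndarray) -> np.ndarray:
    """3x3 frame of a triangle: two edges plus a normal scaled like an edge."""
    e1 = v2 - v1
    e2 = v3 - v1
    n = np.cross(e1, e2)
    n = n / np.sqrt(np.linalg.norm(n))  # so uniform scaling s yields F = s * I
    return np.column_stack([e1, e2, n])


def deformation_gradient(rest: np.ndarray, deformed: np.ndarray) -> np.ndarray:
    """F with F @ frame(rest) == frame(deformed); inputs are 3x3 (one vertex per row)."""
    return _frame(*deformed) @ np.linalg.inv(_frame(*rest))


def pca_modes(gradients: np.ndarray, k: int):
    """PCA on per-frame gradients of shape (frames, triangles, 3, 3).

    Returns the mean vector and the top-k principal modes (rows).
    """
    X = gradients.reshape(len(gradients), -1)
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:k]
```

Translating a triangle leaves F unchanged, which is why gradients are more physiognomy-independent than raw vertex positions. Reconstructing positions from edited gradients requires an additional least-squares solve, which is omitted here.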

VMV 2015 Honorable Mention

ACTUI: Using Commodity Mobile Devices to Build Active Tangible User Interfaces

We present the prototype design for a novel user interface, which extends the concept of tangible user interfaces from mostly specialized hardware components and studio deployment to commodity mobile devices in daily life. Our prototype enables mobile devices to be components of a tangible interface where each device can serve both as a touch-sensing display and as a tangible item for interaction. The only necessary modification is the attachment of a conductive 2D touch pattern on each device. Compared to existing approaches, our Active Commodity Tangible User Interfaces (ACTUI) can display graphical output directly on their built-in displays, paving the way to a plethora of innovative applications where the diverse combination of local and global active display areas can significantly enhance the flexibility and effectiveness of the interaction. We explore two exemplary application scenarios in which we demonstrate the potential of ACTUI.

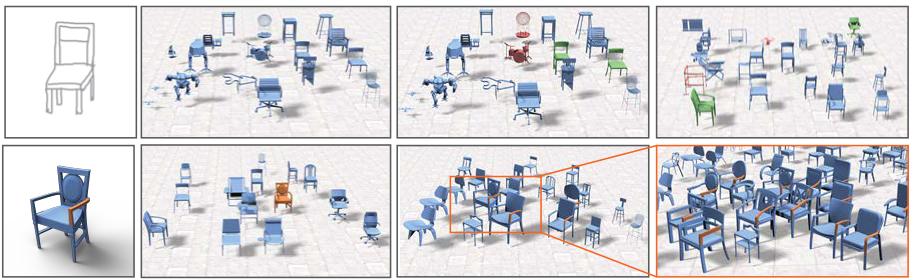

Active Exploration of Large 3D Model Repositories

With broader availability of large-scale 3D model repositories, the need for efficient and effective exploration becomes more and more urgent. Existing model retrieval techniques do not scale well with the size of the database since often a large number of very similar objects are returned for a query, and the possibilities to refine the search are quite limited. We propose an interactive approach where the user feeds an active learning procedure by labeling either entire models or parts of them as “like” or “dislike” such that the system can automatically update an active set of recommended models. To provide an intuitive user interface, candidate models are presented based on their estimated relevance for the current query. From the methodological point of view, our main contribution is to exploit not only the similarity between a query and the database models but also the similarities among the database models themselves. We achieve this by an offline pre-processing stage, where global and local shape descriptors are computed for each model and a sparse distance metric is derived that can be evaluated efficiently even for very large databases. We demonstrate the effectiveness of our method by interactively exploring a repository containing over 100K models.
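The key idea of using similarities among the database models themselves, and not only between query and models, can be illustrated with a simple relevance-feedback ranking: each unlabeled model is scored by its mean similarity to the models marked "like" minus its mean similarity to those marked "dislike". This scoring rule and the dense similarity matrix are hypothetical simplifications; the paper's active learning procedure and sparse distance metric are not reproduced.

```python
from typing import List, Sequence, Set


def recommend(sim: Sequence[Sequence[float]], liked: Set[int], disliked: Set[int],
              k: int = 5) -> List[int]:
    """Rank unlabeled models by similarity to liked minus similarity to disliked ones.

    sim[i][j] is a precomputed similarity between database models i and j.
    """
    def mean_sim(i: int, group: Set[int]) -> float:
        return sum(sim[i][j] for j in group) / len(group) if group else 0.0

    labeled = liked | disliked
    candidates = [i for i in range(len(sim)) if i not in labeled]
    candidates.sort(key=lambda i: mean_sim(i, liked) - mean_sim(i, disliked),
                    reverse=True)
    return candidates[:k]
```

Since the similarity matrix is computed offline, each round of user feedback only re-scores existing entries, which keeps the interaction responsive; storing the matrix sparsely is what makes this feasible for very large repositories.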

@ARTICLE{6951464,
author={L. {Gao} and Y. {Cao} and Y. {Lai} and H. {Huang} and L. {Kobbelt} and S. {Hu}},
journal={IEEE Transactions on Visualization and Computer Graphics},
title={Active Exploration of Large 3D Model Repositories},
year={2015},
volume={21},
number={12},
pages={1390--1402},
}

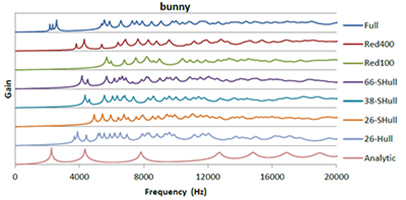

Level-of-Detail Modal Analysis for Real-time Sound Synthesis

Modal sound synthesis is a promising approach for real-time physically-based sound synthesis. A modal analysis is used to compute characteristic vibration modes from the geometry and material properties of scene objects. These modes allow an efficient sound synthesis at run-time, but the analysis is computationally expensive and thus typically computed in a pre-processing step. In interactive applications, however, objects may be created or modified at run-time. Unless the new shapes are known upfront, the modal data cannot be pre-computed and thus a modal analysis has to be performed at run-time. In this paper, we present a system to compute modal sound data at run-time for interactive applications. We evaluate the computational requirements of the modal analysis to determine the computation time for objects of different complexity. Based on these limits, we propose using different levels-of-detail for the modal analysis, using different geometric approximations that trade speed for accuracy, and evaluate the errors introduced by lower-resolution results. Additionally, we present an asynchronous architecture to distribute and prioritize modal analysis computations.
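Once a modal analysis has produced the vibration modes, run-time synthesis is cheap: the sound is a sum of exponentially damped sinusoids, one per mode. The sketch below shows that standard synthesis step. The `(frequency, damping, amplitude)` tuple format is an assumption; in practice, frequencies come from the eigenfrequencies of the analysis, amplitudes depend on where the object is struck, and the level-of-detail selection and asynchronous scheduling described above are not shown.

```python
import math
from typing import List, Sequence, Tuple

Mode = Tuple[float, float, float]  # (frequency in Hz, damping in 1/s, amplitude)


def synthesize(modes: Sequence[Mode], duration: float,
               sample_rate: int = 44100) -> List[float]:
    """Sum of exponentially damped sinusoids, one per vibration mode."""
    n = int(duration * sample_rate)
    out = [0.0] * n
    for freq, damping, amp in modes:
        for i in range(n):
            t = i / sample_rate
            out[i] += amp * math.exp(-damping * t) * math.sin(2.0 * math.pi * freq * t)
    return out
```

The cost grows linearly with the number of modes, so a coarser level of detail that yields fewer or less accurate modes mainly affects the expensive analysis, not this synthesis loop.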

@inproceedings {vriphys.20151335,
booktitle = {Workshop on Virtual Reality Interaction and Physical Simulation},
editor = {Fabrice Jaillet and Florence Zara and Gabriel Zachmann},
title = {{Level-of-Detail Modal Analysis for Real-time Sound Synthesis}},
author = {Rausch, Dominik and Hentschel, Bernd and Kuhlen, Torsten W.},
year = {2015},
publisher = {The Eurographics Association},
ISBN = {978-3-905674-98-9},
DOI = {10.2312/vriphys.20151335},
pages = {61--70}
}

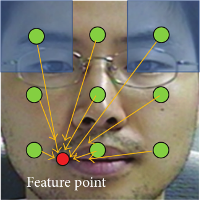

Nonparametric Facial Feature Localization Using Segment-Based Eigenfeatures

We present a nonparametric facial feature localization method that uses relative directional information between regularly sampled image segments and facial feature points. Instead of using an iterative parameter optimization technique or a search algorithm, our method finds the locations of facial feature points from a weighted concentration of directional vectors that originate at the image segments and point to the expected facial feature positions. Each directional vector is computed as a linear combination of eigendirectional vectors, which are obtained by a principal component analysis of training facial segments in the histogram of oriented gradients (HOG) feature space. Our method localizes facial feature points quickly and accurately, since it exploits statistical reasoning over all the training data and requires neither extracting local patterns at the estimated feature positions nor any iterative parameter optimization or search algorithm. In addition, we can reduce the storage size of the trained model by controlling the energy-preserving level of the HOG pattern space.
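The voting scheme described above can be sketched in two steps: each segment reconstructs a directional vector as a mean plus a linear combination of eigendirectional vectors, and the feature position is the weighted mean of the points these vectors point to. In this simplified sketch, the 2-D eigendirectional basis, the coefficients (which in the paper derive from the segment's HOG projection), and the weights are all hypothetical inputs.

```python
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]


def directional_vector(mean: Vec2, eigvecs: Sequence[Vec2],
                       coeffs: Sequence[float]) -> Vec2:
    """Reconstruct a direction as mean + sum_i c_i * e_i (hypothetical 2-D basis)."""
    dx = mean[0] + sum(c * e[0] for c, e in zip(coeffs, eigvecs))
    dy = mean[1] + sum(c * e[1] for c, e in zip(coeffs, eigvecs))
    return (dx, dy)


def localize(segment_centers: Sequence[Vec2], directions: Sequence[Vec2],
             weights: Sequence[float]) -> Vec2:
    """Weighted concentration of the points that the segments vote for."""
    total = sum(weights)
    x = sum(w * (c[0] + d[0])
            for c, d, w in zip(segment_centers, directions, weights)) / total
    y = sum(w * (c[1] + d[1])
            for c, d, w in zip(segment_centers, directions, weights)) / total
    return (x, y)
```

Because the estimate is a closed-form weighted average, there is no iteration and no search, which matches the speed argument in the abstract; truncating the eigenbasis is what reduces the model's storage size.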

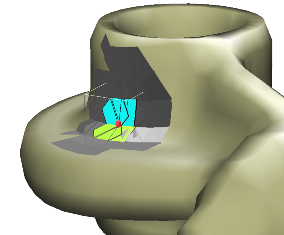

Accurate Contact Modeling for Multi-rate Single-point Haptic Rendering of Static and Deformable Environments

Common approaches for the haptic rendering of complex scenarios employ multi-rate simulation schemes. Here, the collision queries or the simulation of a complex deformable object are often performed asynchronously on a lower frequency, while some kind of intermediate contact representation is used to simulate interactions on the haptic rate. However, this can produce artifacts in the haptic rendering when the contact situation quickly changes and the intermediate representation is not able to reflect the changes due to the lower update rate. We address this problem utilizing a novel contact model. It facilitates the creation of contact representations that are accurate for a large range of motions and multiple simulation time-steps. We handle problematic convex contact regions using a local convex decomposition and special constraints for convex areas. We combine our accurate contact model with an implicit temporal integration scheme to create an intermediate mechanical contact representation, which reflects the dynamic behavior of the simulated objects. Moreover, we propose a new iterative solving scheme for the involved constrained dynamics problems. We increase the robustness of our method using techniques from trust region-based optimization. Our approach can be combined with standard methods for the modeling of deformable objects or constraint-based approaches for the modeling of, for instance, friction or joints. We demonstrate its benefits with respect to the simulation accuracy and the quality of the rendered haptic forces in multiple scenarios.

Best Paper Award!

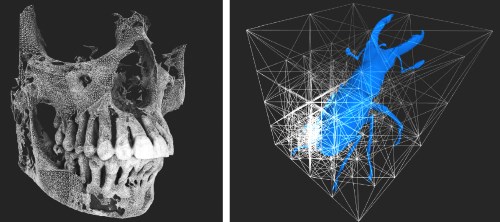

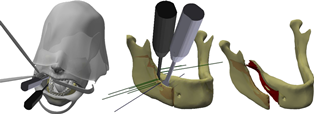

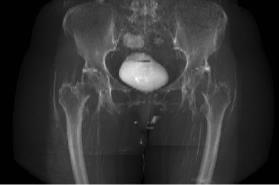

Bimanual Haptic Simulation of Bone Fracturing for the Training of the Bilateral Sagittal Split Osteotomy

In this work we present a haptic training simulator for a maxillofacial procedure comprising the controlled breaking of the lower mandible. To our knowledge the haptic simulation of fracture is seldom addressed, especially when a realistic breaking behavior is required. Our system combines bimanual haptic interaction with a simulation of the bone based on well-founded methods from fracture mechanics. The system resolves the conflict between simulation complexity and haptic real-time constraints by employing a dedicated multi-rate simulation and a special solving strategy for the occurring mechanical equations. Furthermore, we present remeshing-free methods for collision detection and visualization which are tailored for an efficient treatment of the topological changes induced by the fracture. The methods have been successfully implemented and tested in a simulator prototype using real pathological data and a semi-immersive VR-system with two haptic devices. We evaluated the computational efficiency of our methods and show that a stable and responsive haptic simulation of the fracturing has been achieved.

A Framework for Developing Flexible Virtual-Reality-centered Annotation Systems

The act of note-taking is an essential part of the data analysis process. It has been realized in the form of various annotation systems that have been discussed in many publications. Unfortunately, the focus usually lies on high-level functionality, such as interaction metaphors and display strategies. We argue that it is worthwhile to also consider software engineering aspects. Annotation systems often share similar functionality that can potentially be factored into reusable components, with the goal of speeding up the creation of new annotation systems. At the same time, however, VR-centered annotation systems are subject not only to application-specific requirements but also to those arising from differences between the various VR platforms, such as desktop VR setups or CAVEs. As a result, it is usually necessary to build application-specific VR-centered annotation systems from scratch instead of reusing existing components.

To improve this situation, we present a framework that provides reusable and adaptable building blocks to facilitate the creation of flexible annotation systems for VR applications. We discuss aspects ranging from data representation and persistence to the integration of new data types and interaction metaphors, especially in the context of multi-platform applications. To underpin the benefits of such an approach and promote the proposed concepts, we describe how the framework was applied to several of our own projects.

Proceedings of the Workshop on Formal Methods in Human Computer Interaction (FoMHCI)

Conference Proceedings

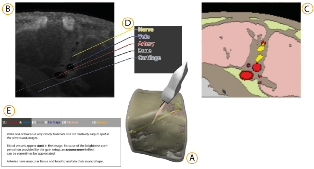

Simulation-based Ultrasound Training Supported by Annotations, Haptics and Linked Multimodal Views

When learning ultrasound (US) imaging, trainees must learn how to recognize structures, interpret textures and shapes, and simultaneously register the 2D ultrasound images to their mental 3D anatomical models. Alleviating the cognitive load imposed by these tasks should free cognitive resources and thereby improve the learning process. We argue that the cognitive load required to mentally rotate the models to match the images is too large and therefore negatively impacts learning. We present a 3D visualization tool that allows the user to naturally move a 2D slice and navigate around a 3D anatomical model. The slice is displayed in place to facilitate the registration of the 2D slice in its 3D context. Two copies of the slice are also shown outside the model: the first is a simple rendered image showing the outlines of the structures, and the second is a simulated ultrasound image. Haptic cues are also provided to help users maneuver around the 3D model in the virtual space. With the additional display of annotations and information about the most important structures, the tool is expected to complement the didactic material available for training in ultrasound procedures.

Comparison and Evaluation of Viewpoint Quality Estimation Algorithms for Immersive Virtual Environments

The knowledge of which places in a virtual environment are interesting or informative can be used to improve user interfaces and to create virtual tours. Viewpoint Quality Estimation algorithms approximate this information by calculating quality scores for viewpoints. However, even though several such algorithms exist and have also been used, e.g., in virtual tour generation, they have never been comparatively evaluated on virtual scenes. In this work, we introduce three new Viewpoint Quality Estimation algorithms, and compare them against each other and six existing metrics, by applying them to two different virtual scenes. Furthermore, we conducted a user study to obtain a quantitative evaluation of viewpoint quality. The results reveal strengths and limitations of the metrics on actual scenes, and provide recommendations on which algorithms to use for real applications.

@InProceedings{Freitag2015,

Title = {{Comparison and Evaluation of Viewpoint Quality Estimation Algorithms for Immersive Virtual Environments}},

Author = {Freitag, Sebastian and Weyers, Benjamin and B\"{o}nsch, Andrea and Kuhlen, Torsten W.},

Booktitle = {ICAT-EGVE 2015 - International Conference on Artificial Reality and Telexistence and Eurographics Symposium on Virtual Environments},

Year = {2015},

Pages = {53-60},

Doi = {10.2312/egve.20151310}

}
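To make the notion of a viewpoint quality score concrete, here is a minimal sketch of viewpoint entropy, a well-known metric from the literature that rates a view by how evenly its projected area is spread over the visible faces and the background. We do not claim it is one of the six metrics evaluated in the paper; the per-face projected areas are assumed inputs that a renderer would supply, and `viewpoint_entropy` and `best_viewpoint` are hypothetical helper names.

```python
import math


def viewpoint_entropy(face_areas, background_area):
    """Shannon entropy (in bits) of the relative projected areas of a view.

    face_areas:      projected screen-space area of each visible face
    background_area: screen area not covered by any face
    """
    areas = [background_area] + list(face_areas)
    total = sum(areas)
    if total <= 0:
        raise ValueError("total projected area must be positive")
    entropy = 0.0
    for a in areas:
        if a > 0:  # zero-area terms contribute nothing (0 * log 0 := 0)
            p = a / total
            entropy -= p * math.log2(p)
    return entropy


def best_viewpoint(candidates):
    """Return the candidate name with the highest entropy.

    candidates: dict mapping name -> (face_areas, background_area)
    """
    return max(candidates, key=lambda name: viewpoint_entropy(*candidates[name]))
```

For example, a view in which the background and three faces each cover 10 units of area scores log2(4) = 2 bits, whereas a view dominated by a single large face scores lower, so `best_viewpoint` prefers the former.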

Haptic 3D Surface Representation of Table-Based Data for People With Visual Impairments

Article 24 of the UN Convention on the Rights of Persons with Disabilities states that “States Parties shall ensure inclusive education at all levels of education and life long learning.” This article focuses on the inclusion of people with visual impairments in learning processes that involve complex table-based data. Gaining insight into and understanding of complex data is a highly demanding task for people with visual impairments. Especially for table-based data, the classic approaches of braille-based output devices and printing concepts are limited. Haptic perception requires sequential information processing rather than the parallel processing used by the visual system, which makes it difficult to gain a quick overview of, and deeper insight into, the data through touch. Nevertheless, neuroscientific research has identified strong dependencies between haptic perception and the cognitive processing of visual sensing. Based on these findings, we developed a haptic 3D surface representation of classic diagrams and charts, such as bar graphs and pie charts. In a qualitative evaluation study, we identified certain advantages of our relief-type 3D chart approach. Finally, we present an education model for German schools that includes a 3D printing approach to help integrate students with visual impairments.

Verified Stochastic Methods in Geographic Information System Applications With Uncertainty

Modern localization techniques are based on the Global Positioning System (GPS). In general, the accuracy of the measurement depends on various uncertain parameters. In addition, despite its relevance, a number of localization approaches fail to model uncertainty in geographic information system (GIS) applications. This paper describes a new verified method for uncertain GPS localization in GPS and GIS application scenarios, based on Dempster-Shafer theory (DST) with two-dimensional and interval-valued basic probability assignments. The main benefit our approach offers for GIS applications is a workflow concept using DST-based models that are embedded into an ontology-based semantic querying mechanism accompanied by 3D visualization techniques. This workflow provides interactive means of querying uncertain GIS models semantically and provides visual feedback.
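As background on how DST combines evidence, the following is a minimal sketch of Dempster's classical rule of combination for point-valued mass functions, together with belief and plausibility, which bound the probability of a hypothesis from below and above. It is not the paper's verified method: the paper works with two-dimensional, interval-valued basic probability assignments and verified arithmetic, which this sketch does not attempt. The frame of discernment (here, hypothetical location cells "A", "B", "C") and the function names are illustrative assumptions.

```python
from itertools import product


def combine(m1, m2):
    """Dempster's rule for two mass functions over the same frame.

    m1, m2: dict mapping frozenset (focal element) -> mass; masses sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass that would fall on the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: the sources are incompatible")
    # Renormalize so that the non-conflicting mass sums to 1.
    return {s: m / (1.0 - conflict) for s, m in combined.items()}


def belief(m, hypothesis):
    """Lower bound: total mass of focal elements contained in the hypothesis."""
    return sum(v for s, v in m.items() if s <= hypothesis)


def plausibility(m, hypothesis):
    """Upper bound: total mass of focal elements intersecting the hypothesis."""
    return sum(v for s, v in m.items() if s & hypothesis)
```

For instance, combining `{A: 0.6, {A,B}: 0.4}` with `{B: 0.5, {A,B,C}: 0.5}` yields a conflict of 0.3, and the location "A" ends up with the interval [Bel, Pl] = [3/7, 5/7].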

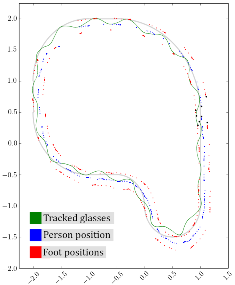

Low-Cost Vision-Based Multi-Person Foot Tracking for CAVE Systems with Under-Floor Projection

In this work, we present an approach for tracking the feet of multiple users in CAVE-like systems with under-floor projection. It is based on low-cost consumer cameras, does not require users to wear additional equipment, and can be installed without modifying existing components. If the brightness of the floor projection does not contain too much variation, the feet of several people can be successfully and precisely tracked and assigned to individuals. The tracking data can be used to enable or enhance user interfaces like Walking-in-Place or torso-directed steering, provide audio feedback for footsteps, and improve the immersive experience for multiple users.

BlowClick: A Non-Verbal Vocal Input Metaphor for Clicking

In contrast to the widespread 6-DOF pointing devices, freehand user interfaces in Immersive Virtual Environments (IVE) are non-intrusive. However, defining trigger signals for gesture interfaces is challenging. Mechanical devices, dedicated trigger gestures, and speech recognition are common options, but each comes with its own drawbacks. In this paper, we present an alternative approach that allows users to trigger events precisely and with low latency using microphone input. In contrast to speech recognition, the user only blows into the microphone. The audio signature of such blow events can be recognized quickly and precisely. The results of a user study show that the proposed method allows users to successfully complete a standard selection task and performs better than expected against a standard interaction device, the Flystick.

Cirque des Bouteilles: The Art of Blowing on Bottles

Making music by blowing on bottles is fun but challenging. We introduce a novel 3D user interface to play songs on virtual bottles. For this purpose the user blows into a microphone and the stream of air is recreated in the virtual environment and redirected to virtual bottles she is pointing to with her fingers. This is easy to learn and subsequently opens up opportunities for quickly switching between bottles and playing groups of them together to form complex melodies. Furthermore, our interface enables the customization of the virtual environment, by means of moving bottles, changing their type or filling level.

MRI Visualisation by Digitally Reconstructed Radiographs

Visualising volumetric medical images such as computed tomography and magnetic resonance imaging (MRI) on picture archiving and communication systems (PACS) clients is often achieved by browsing images in sagittal, coronal or axial views, or by three-dimensional (3D) rendering. The latter technique requires fine thresholding for MRI. In contrast, computing virtual radiograph images, also referred to as digitally reconstructed radiographs (DRR), provides a complete overview of the 3D data in a single two-dimensional (2D) image. It therefore appears to be a powerful alternative for MRI visualisation and preview in PACS. This study describes a method to compute DRR from T1-weighted MRI. After segmentation of the background, a histogram distribution analysis is performed and each foreground MRI voxel is labeled as one of three tissues: cortical bone (the principal absorber of X-rays), muscle, or fat. An intensity level is attributed to each voxel according to the Hounsfield scale, which is linearly related to the X-ray attenuation coefficient. Each DRR pixel is computed as the accumulation of the new intensities of the MRI dataset along the corresponding X-ray. The method has been tested on 16 T1-weighted MRI sets. Anterior-posterior and lateral DRR have been computed with reasonable quality and without any manual tissue segmentation.

@Article{Serrurier2015,

Title = {{MRI} {V}isualisation by {D}igitally {R}econstructed {R}adiographs},

Author = {Antoine Serrurier and Andrea B\"{o}nsch and Robert Lau and Thomas M. Deserno (n\'{e} Lehmann)},

Journal = {Proceedings of SPIE 9418, Medical Imaging 2015: PACS and Imaging Informatics: Next Generation and Innovations},

Year = {2015},

Pages = {94180I-94180I-7},

Volume = {9418},

Doi = {10.1117/12.2081845},

Url = {http://rasimas.imib.rwth-aachen.de/output_publications.php}

}
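The labeling and accumulation steps described in the abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: fixed caller-supplied thresholds (`t_bone`, `t_fat`) stand in for the histogram analysis, the Hounsfield values are approximate textbook figures, the rays are parallel along one volume axis (a real DRR would cast perspective rays from a point source), and all function names are hypothetical. It relies on T1 contrast, where cortical bone appears dark and fat bright, and assumes the background mask has already been computed.

```python
# Approximate Hounsfield units per tissue class (typical textbook values).
HU = {"air": -1000.0, "fat": -100.0, "muscle": 40.0, "bone": 1000.0}


def label_voxel(intensity, t_bone, t_fat):
    """Classify a foreground T1 voxel: bone is dark, fat is bright."""
    if intensity < t_bone:
        return "bone"
    if intensity < t_fat:
        return "muscle"
    return "fat"


def hu_to_mu(hu, mu_water=1.0):
    """Invert HU = 1000 * (mu - mu_water) / mu_water to get attenuation."""
    return mu_water * (1.0 + hu / 1000.0)


def drr_ap(volume, background, t_bone, t_fat):
    """Accumulate attenuation along parallel rays in the y direction.

    volume[z][y][x]:     MRI intensities
    background[z][y][x]: True where the voxel was segmented as background
    Returns image[z][x].
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    image = [[0.0] * nx for _ in range(nz)]
    for z in range(nz):
        for x in range(nx):
            total = 0.0
            for y in range(ny):  # march along the ray
                if background[z][y][x]:
                    tissue = "air"
                else:
                    tissue = label_voxel(volume[z][y][x], t_bone, t_fat)
                total += hu_to_mu(HU[tissue])
            image[z][x] = total
    return image
```

Because air maps to zero attenuation and bone to roughly twice that of water, rays crossing bone dominate the projection, which is what gives a DRR its radiograph-like appearance.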

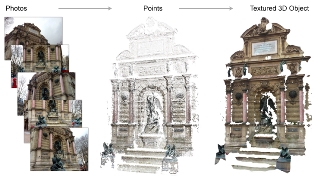

Surface-Reconstructing Growing Neural Gas: A Method for Online Construction of Textured Triangle Meshes

In this paper we propose surface-reconstructing growing neural gas (SGNG), a learning-based artificial neural network that iteratively constructs a triangle mesh from a set of sample points lying on an object's surface. From these input points, SGNG automatically approximates the shape and the topology of the original surface. It furthermore assigns suitable textures to the triangles if images of the surface are available that are registered to the points.

By expressing topological neighborhood via triangles, and by learning visibility from the input data, SGNG constructs a triangle mesh entirely during online learning and does not need any post-processing to close untriangulated holes or to assign suitable textures without occlusion artifacts. Thus, SGNG is well suited for long-running applications that require an iterative pipeline where scanning, reconstruction and visualization are executed in parallel.

Results indicate that SGNG improves upon its predecessors and achieves similar or even better performance in terms of smaller reconstruction errors and better reconstruction quality than existing state-of-the-art reconstruction algorithms. If the input points are updated repeatedly during reconstruction, SGNG performs even faster than existing techniques.

Best Paper Award!

Person Attribute Recognition with a Jointly-trained Holistic CNN Model

This paper addresses the problem of human visual attribute recognition, i.e., the prediction of a fixed set of semantic attributes given an image of a person. Previous work often considered the different attributes independently from each other, without taking advantage of possible dependencies between them. In contrast, we propose a method to jointly train a CNN model for all attributes that can take advantage of those dependencies, considering as input only the image without additional external pose, part or context information. We report detailed experiments examining the contribution of individual aspects, which yields beneficial insights for other researchers. Our holistic CNN achieves superior performance on two publicly available attribute datasets improving on methods that additionally rely on pose-alignment or context. To support further evaluations, we present a novel dataset, based on realistic outdoor video sequences, that contains more than 27,000 pedestrians annotated with 10 attributes. Finally, we explore design options to embrace the N/A labels inherently present in this task.

@InProceedings{PARSE27k,

author = {Patrick Sudowe and Hannah Spitzer and Bastian Leibe},

title = {{Person Attribute Recognition with a Jointly-trained Holistic CNN Model}},

booktitle = {ICCV'15 ChaLearn Looking at People Workshop},

year = {2015},

}
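The abstract mentions design options for the N/A labels that are inherent to this task. One common option, sketched below under that assumption, is to train all attributes jointly with a binary cross-entropy loss that simply masks out N/A entries so they contribute no gradient signal. This is not necessarily one of the options the paper evaluates; the label encoding (1, 0, `None`) and the name `masked_bce` are hypothetical.

```python
import math


def masked_bce(logits, labels):
    """Mean binary cross-entropy over attributes whose label is known.

    logits: raw scores, one per attribute
    labels: 1 (present), 0 (absent) or None (N/A, ignored)
    """
    total, count = 0.0, 0
    for z, y in zip(logits, labels):
        if y is None:
            continue  # N/A: excluded from the joint loss
        # Numerically stable form of -[y*log(s(z)) + (1-y)*log(1-s(z))].
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
        count += 1
    return total / count if count else 0.0
```

Averaging only over known labels keeps the loss scale comparable between images with many and few annotated attributes.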

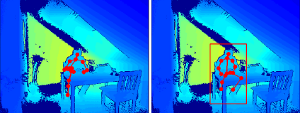

A Semantic Occlusion Model for Human Pose Estimation from a Single Depth Image

Human pose estimation from depth data has made significant progress in recent years and commercial sensors estimate human poses in real time. However, state-of-the-art methods fail in many situations when the humans are partially occluded by objects. In this work, we introduce a semantic occlusion model that is incorporated into a regression forest approach for human pose estimation from depth data. The approach exploits the context information of occluding objects like a table to predict the locations of occluded joints. In our experiments on synthetic and real data, we show that our occlusion model increases the joint estimation accuracy and outperforms the commercial Kinect 2 SDK for occluded joints.

Sequence-Level Object Candidates Based on Saliency for Generic Object Recognition on Mobile Systems

In this paper, we propose a novel approach for generating generic object candidates for object discovery and recognition in continuous monocular video. Such candidates have recently become a popular alternative to exhaustive window-based search as basis for classification. Contrary to previous approaches, we address the candidate generation problem at the level of entire video sequences instead of at the single image level. We propose a processing pipeline that starts from individual region candidates and tracks them over time. This enables us to group candidates for similar objects and to automatically filter out inconsistent regions. For generating the per-frame candidates, we introduce a novel multi-scale saliency approach that achieves a higher per-frame recall with fewer candidates than current state-of-the-art methods. Taken together, those two components result in a significant reduction of the number of object candidates compared to frame level methods, while keeping a consistently high recall.

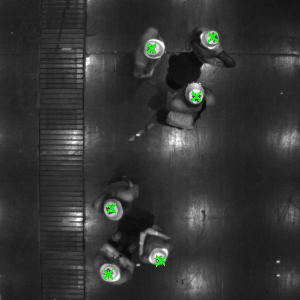

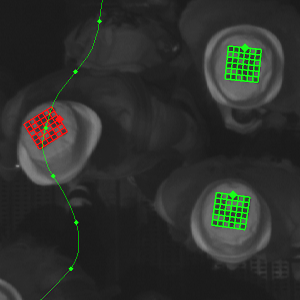

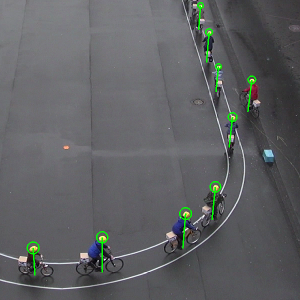

Robust Marker-Based Tracking for Measuring Crowd Dynamics

We present a system to conduct laboratory experiments with thousands of pedestrians. Each participant is equipped with an individual marker to enable us to perform precise tracking and identification. We propose a novel rotation invariant marker design which guarantees a minimal Hamming distance between all used codes. This increases the robustness of pedestrian identification. We present an algorithm to detect these markers, and to track them through a camera network. With our system we are able to capture the movement of the participants in great detail, resulting in precise trajectories for thousands of pedestrians. The acquired data is of great interest in the field of pedestrian dynamics. It can also potentially help to improve multi-target tracking approaches, by allowing better insights into the behaviour of crowds.
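The idea of rotation-aware codes with a guaranteed minimum Hamming distance can be illustrated with a small sketch. It is a hypothetical construction, not the paper's marker design: codes are assumed to be n x n bit grids, two codes count as far apart only if every 90-degree rotation of one differs from the other in at least `d` cells, each code must also differ from its own rotations by at least `d` cells (so its orientation can be recovered), and the greedy exhaustive search is only practical for small grids.

```python
from itertools import product


def rotate(grid):
    """Rotate a square grid (tuple of tuples) by 90 degrees clockwise."""
    n = len(grid)
    return tuple(tuple(grid[n - 1 - c][r] for c in range(n)) for r in range(n))


def rotations(grid):
    """The grid and its three non-trivial rotations."""
    out = [grid]
    for _ in range(3):
        out.append(rotate(out[-1]))
    return out


def hamming(a, b):
    return sum(x != y for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def rot_distance(a, b):
    """Smallest Hamming distance between a and any rotation of b."""
    return min(hamming(a, r) for r in rotations(b))


def self_distance(a):
    """Smallest distance between a and its own non-trivial rotations."""
    return min(hamming(a, r) for r in rotations(a)[1:])


def select_codes(n, d):
    """Greedily collect n x n codes that stay >= d apart under rotation."""
    chosen = []
    for bits in product((0, 1), repeat=n * n):
        grid = tuple(tuple(bits[i * n:(i + 1) * n]) for i in range(n))
        if self_distance(grid) < d:
            continue  # orientation would be ambiguous
        if all(rot_distance(grid, c) >= d for c in chosen):
            chosen.append(grid)
    return chosen
```

With a minimum distance of `d`, a decoder that picks the nearest code over all rotations can correct up to (d - 1) // 2 misread cells, which is where the robustness of identification comes from.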

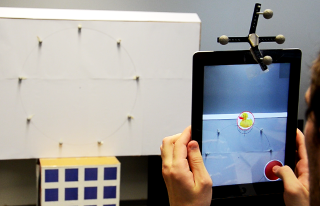

Influence of Temporal Delay and Display Update Rate in an Augmented Reality Application Scenario

In mobile augmented reality (AR) applications, highly complex computing tasks such as position tracking and 3D rendering compete for limited processing resources. This leads to unavoidable system latency in the form of temporal delay and reduced display update rates. In this paper we present a user study on the influence of these system parameters in an AR point'n'click scenario. Our experiment was conducted in a lab environment to collect quantitative data (user performance as well as user-perceived ease of use). We show that temporal delay and update rate both affect user performance and experience, but that users are much more sensitive to longer temporal delay than to lower update rates. Moreover, we found that the effects of temporal delay and update rate are not independent: with longer temporal delay, changes in update rate tend to have less impact on ease of use. Furthermore, in some cases user performance can actually increase when the update rate is reduced to make it compatible with the latency. Our findings indicate that in the development of mobile AR applications, more emphasis should be put on delay reduction than on update rate improvement, and that increasing the update rate does not necessarily improve user performance and experience if the temporal delay is significantly higher than the update interval.

@inproceedings{Li:2015:ITD:2836041.2836070,

author = {Li, Ming and Arning, Katrin and Vervier, Luisa and Ziefle, Martina and Kobbelt, Leif},

title = {Influence of Temporal Delay and Display Update Rate in an Augmented Reality Application Scenario},

booktitle = {Proceedings of the 14th International Conference on Mobile and Ubiquitous Multimedia},

series = {MUM '15},

year = {2015},

isbn = {978-1-4503-3605-5},

location = {Linz, Austria},

pages = {278--286},

numpages = {9},

url = {http://doi.acm.org/10.1145/2836041.2836070},

doi = {10.1145/2836041.2836070},

acmid = {2836070},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {display update rate, ease of use, latency, mobile augmented reality, perception tolerance, point'n'click, temporal delay, user study},

}

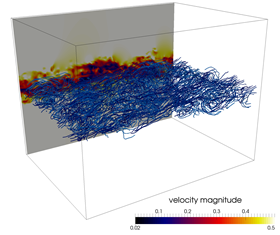

Packet-Oriented Streamline Tracing on Modern SIMD Architectures

The advection of integral lines is an important computational kernel in vector field visualization. We investigate how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a baseline, we formulate a streamline tracing algorithm that facilitates auto-vectorization by an optimizing compiler. We analyze this algorithm and propose two different optimizations. Our results show that particle tracing does not per se benefit from SIMD computation. Based on a careful analysis of the auto-vectorized code, we propose an optimized data access routine and a re-packing scheme which increases average SIMD efficiency. We evaluate our approach on three different turbulent flow fields. Our optimized approaches increase integration performance by up to 5.6x over our baseline measurement. We conclude with a discussion of current limitations and aspects for future work.

@INPROCEEDINGS{Hentschel2015,

author = {Bernd Hentschel and Jens Henrik G{\"o}bbert and Michael Klemm and Paul Springer and Andrea Schnorr and Torsten W. Kuhlen},

title = {{P}acket-{O}riented {S}treamline {T}racing on {M}odern {SIMD} {A}rchitectures},

booktitle = {Proceedings of the Eurographics Symposium on Parallel Graphics and Visualization},

year = {2015},

pages = {43--52},

abstract = {The advection of integral lines is an important computational kernel in vector field visualization. We investigate how this kernel can profit from vector (SIMD) extensions in modern CPUs. As a baseline, we formulate a streamline tracing algorithm that facilitates auto-vectorization by an optimizing compiler. We analyze this algorithm and propose two different optimizations. Our results show that particle tracing does not per se benefit from SIMD computation. Based on a careful analysis of the auto-vectorized code, we propose an optimized data access routine and a re-packing scheme which increases average SIMD efficiency. We evaluate our approach on three different, turbulent flow fields. Our optimized approaches increase integration performance up to 5.6x over our baseline measurement. We conclude with a discussion of current limitations and aspects for future work.}

}
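The packet idea can be illustrated in plain Python, with the caveat that Python cannot show actual SIMD execution. The sketch below only mirrors the data layout and control flow: a fixed number of lanes (`width`, standing in for the SIMD width) is stored as a structure of arrays and advanced in lockstep, and after each step finished lanes are compacted out and refilled with new seeds so that the packet stays full. The explicit Euler integrator, the compaction-and-refill policy, and all names are assumptions for illustration; the paper's integrator and re-packing scheme may differ.

```python
def trace_packets(seeds, velocity, inside, h=0.1, max_steps=100, width=4):
    """Trace one streamline per seed, advancing `width` lanes in lockstep.

    seeds:    list of (x, y) start points
    velocity: function (x, y) -> (u, v), the vector field (assumed given)
    inside:   function (x, y) -> bool, the domain test
    Returns a list of polylines (lists of points) in seed order.
    """
    lines = [[tuple(s)] for s in seeds]
    pending = list(range(len(seeds)))[::-1]  # stack; pop() yields seed 0 first

    # Structure-of-arrays packet state: one entry per active lane.
    ids, xs, ys, steps = [], [], [], []

    def refill():
        # Fill empty lanes with seeds that have not been traced yet.
        while len(ids) < width and pending:
            i = pending.pop()
            ids.append(i)
            xs.append(seeds[i][0])
            ys.append(seeds[i][1])
            steps.append(0)

    refill()
    while ids:
        # Explicit Euler step, the same operation applied to every lane.
        for k in range(len(ids)):
            u, v = velocity(xs[k], ys[k])
            xs[k] += h * u
            ys[k] += h * v
            steps[k] += 1
            lines[ids[k]].append((xs[k], ys[k]))
        # Re-pack: keep only lanes that are still active ...
        keep = [k for k in range(len(ids))
                if inside(xs[k], ys[k]) and steps[k] < max_steps]
        ids[:] = [ids[k] for k in keep]
        xs[:] = [xs[k] for k in keep]
        ys[:] = [ys[k] for k in keep]
        steps[:] = [steps[k] for k in keep]
        # ... and top the packet up with fresh seeds.
        refill()
    return lines
```

Without the refill step, a packet whose lanes terminate at different times would keep iterating with mostly idle lanes, which is the loss of SIMD efficiency that re-packing aims to avoid.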

Gaze Guiding zur Unterstützung der Bedienung technischer Systeme - Gaze Guiding to Support the Operation of Technical Systems

Avoiding operating errors is of central importance, especially in safety-critical systems. To make it easier to recall previously learned skills for operating and controlling technical systems, and thereby to avoid errors, so-called refresher interventions are used. So far, these have typically been elaborate simulation-based training sessions that refresh previously learned skills through repeated execution, so that the skills can be recalled correctly in rarely occurring critical situations. This work shows how the goal of recall can also be achieved without refreshers, by means of a gaze guiding component that is embedded in the visual user interface used to operate the technical process and supports skill retrieval through targeted, context-dependent overlays and fade-ins. The effect of this concept is currently being investigated in a larger DFG-funded study.

An Integrative Tool Chain for Collaborative Virtual Museums in Immersive Virtual Environments

Various conceptual approaches for the creation and presentation of virtual museums exist. However, little work concentrates on collaboration in virtual museums. Supporting collaboration in virtual museums provides various benefits for the visit as well as for the preparation and creation of virtual exhibits. This paper addresses one major problem of collaboration in virtual museums: visitor awareness. We use a Cave Automatic Virtual Environment (CAVE) to visualize generated virtual museums, offering simple awareness through co-location. Furthermore, the use of smartphones during the visit enables visitors to create comments or to access exhibit-related metadata. Thus, the main contribution of this ongoing work is a workflow that enables an integrated deployment of generic virtual museums into a CAVE, which will be demonstrated by deploying the virtual Leopold Fleischhacker Museum.

Crowdsourcing and Knowledge Co-creation in Virtual Museums

This paper gives an overview of crowdsourcing practices in virtual museums. Engaged nonprofessionals and specialists support curators in creating digital 2D or 3D exhibits, in exhibition and tour planning, and in enhancing metadata using the Virtual Museum and Cultural Object Exchange Format (ViMCOX). ViMCOX provides the semantic structure of exhibitions and complete museums and includes new features, such as room and outdoor design, interactions with artwork, path planning, and dissemination and presentation of contents. Application examples show the impact of crowdsourcing in the Museo de Arte Contemporaneo in Santiago de Chile and in the virtual museum depicting the life and work of the Jewish sculptor Leopold Fleischhacker. A further use case is devoted to crowd-based support for the restoration of high-quality 3D shapes.

"Workshop on Formal Methods in Human Computer Interaction."

This workshop aims to gather active researchers and practitioners in the field of formal methods in the context of user interfaces, interaction techniques, and interactive systems. The main objectives are to look at how the definition and use of formal methods for interactive systems have evolved since the last book on the field, published nearly 20 years ago, and to identify important themes for the next decade of research. The workshop aims to offer an exchange platform for scientists who are interested in the formal modeling and description of interaction, user interfaces, and interactive systems, and to discuss existing formal modeling methods in this area. Participants will be asked to present their perspectives, concepts, and techniques for formal modeling using one of two case studies: the control of a nuclear power plant and an air traffic management arrival manager.

FILL: Formal Description of Executable and Reconfigurable Models of Interactive Systems

This paper presents the Formal Interaction Logic Language (FILL) as a modeling approach for describing user interfaces in an executable way. In the context of the workshop on Formal Methods in Human Computer Interaction, this work first introduces FILL's architectural structure, its visual representation, and its transformation to reference nets, a special type of Petri net, and then discusses FILL in the context of the two use cases proposed by the workshop. In doing so, this work shows how FILL can be used to model automation as part of the user interface model, and how formal reconfiguration can be used to implement user-based automation given a formal user interface model.

3DUIdol - 6th Annual 3DUI Contest

The 6th annual IEEE 3DUI contest focuses on Virtual Music Instruments (VMIs) and on 3D user interfaces for playing them. The contest is part of the IEEE 2015 3DUI Symposium held in Arles, France. It is open to anyone interested in 3D User Interfaces (3DUIs), from researchers to students, enthusiasts, and professionals. The purpose of the contest is to stimulate innovative and creative solutions to challenging 3DUI problems. Due to the recent explosion of affordable and portable 3D devices, this year's contest will be judged live at 3DUI by selected 3DUI experts during on-site presentations at the conference. Contestants are therefore required to bring their systems for live judging and for attendees to experience.

Poster: Scalable Metadata In- and Output for Multi-platform Data Annotation Applications

Metadata input and output are important steps in the data annotation process. However, selecting techniques that effectively facilitate these steps is non-trivial, especially for applications that have to run on multiple virtual reality platforms. Not all techniques are applicable to or available on every system, so workflows must be adapted on a per-system basis. Here, we describe a metadata handling system based on Android's Intent system that automatically adapts workflows and thereby makes manual adaptation unnecessary.

Poster: Vision-based Multi-Person Foot Tracking for CAVE Systems with Under-Floor Projection

In this work, we present an approach for tracking the feet of multiple users in CAVE-like systems with under-floor projection. It is based on low-cost consumer cameras, does not require users to wear additional equipment, and can be installed without modifying existing components. If the brightness of the floor projection does not contain too much variation, the feet of several people can be reliably tracked and assigned to individuals.

Poster: Effects and Applicability of Rotation Gain in CAVE-like Environments

In this work, we report on a pilot study we conducted, and on a study design, to examine the effects and applicability of rotation gain in CAVE-like virtual environments. The results of the study will give recommendations for the maximum levels of rotation gain that are reasonable in algorithms for enlarging the virtual field of regard or redirected walking.

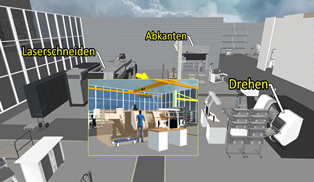

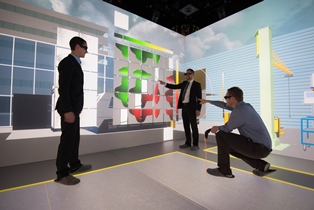

Poster: flapAssist: How the Integration of VR and Visualization Tools Fosters the Factory Planning Process

Virtual Reality (VR) systems are of growing importance for decision support in the context of the digital factory, especially in factory layout planning. While current solutions focus either on virtual walkthroughs or on the visualization of more abstract information, no solution that provides both currently exists. To close this gap, we present a holistic VR application, called Factory Layout Planning Assistant (flapAssist). It is meant to serve as a platform for planning the layout of factories, while also providing a wide range of analysis features. By being scalable from desktops to CAVEs and providing a link to a central integration platform, flapAssist integrates well into established factory planning workflows.

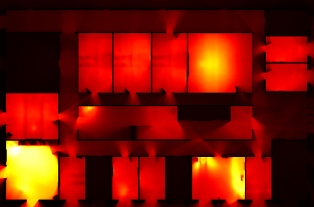

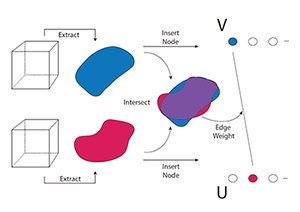

Poster: Tracking Space-Filling Structures in Turbulent Flows

We present a novel approach for tracking space-filling features, i.e. a set of features which covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted, bi-partite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.

@INPROCEEDINGS{Schnorr2015,

author = {Andrea Schnorr and Jens-Henrik Goebbert and Torsten W. Kuhlen and Bernd Hentschel},

title = {{T}racking {S}pace-{F}illing {S}tructures in {T}urbulent {F}lows},

booktitle = Proc # { the } # LDAV,

year = {2015},

pages = {143--144},

abstract = {We present a novel approach for tracking space-filling features, i.e. a set of features which covers the entire domain. In contrast to previous work, we determine the assignment between features from successive time steps by computing a globally optimal, maximum-weight, maximal matching on a weighted, bi-partite graph. We demonstrate the method's functionality by tracking dissipation elements (DEs), a space-filling structure definition from turbulent flow analysis. The ability to track DEs over time enables researchers from fluid mechanics to extend their analysis beyond the assessment of static flow fields to time-dependent settings.},

doi = {10.1109/LDAV.2015.7348089},

keywords = {Feature Tracking, Weighted, Bi-Partite Matching, Flow Visualization, Dissipation Elements}

}
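To illustrate why a globally optimal matching matters, here is a minimal sketch that finds a maximum-weight matching on a tiny bipartite graph by exhaustive search. It is only practical for a handful of features; a real tracker would use a polynomial-time method such as the Hungarian algorithm, and we do not know which solver the paper uses. The edge weights (for example, the overlap between a feature at time t and a feature at time t+1) and the name `max_weight_matching` are assumptions. With strictly positive weights, any maximum-weight matching is automatically maximal, since an additional free edge would only increase the total.

```python
def max_weight_matching(weights):
    """Exhaustive maximum-weight matching on a small bipartite graph.

    weights: dict mapping (left, right) -> positive weight, e.g. the overlap
             between a feature at time t and one at time t+1.
    Returns (total_weight, sorted list of matched (left, right) pairs).
    """
    lefts = sorted({l for l, _ in weights})
    best = (0.0, [])

    def search(i, used, total, pairs):
        nonlocal best
        if total > best[0]:  # every partial assignment is a valid matching
            best = (total, list(pairs))
        if i == len(lefts):
            return
        left = lefts[i]
        search(i + 1, used, total, pairs)  # option: leave `left` unmatched
        for (a, r), w in weights.items():
            if a == left and r not in used:
                used.add(r)
                pairs.append((left, r))
                search(i + 1, used, total + w, pairs)
                pairs.pop()
                used.discard(r)

    search(0, set(), 0.0, [])
    return best[0], sorted(best[1])
```

For weights a-x = 3, a-y = 2 and b-x = 2, a greedy choice of the heaviest edge a-x leaves b unmatched (total 3), whereas the global optimum pairs a-y and b-x (total 4).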

Methodology for Generating Individualized Trajectories from Experiments

Traffic research has reached a point where trajectories are available for microscopic analysis. The next step will be trajectories that are connected to human factors, i.e., information about the agent. In pedestrian dynamics, a first step has been taken by using video recordings to generate precise trajectories. We go one step further and present two experiments in which ID markers are used to produce individualized trajectories: a large-scale experiment on pedestrian dynamics and an experiment on single-file bicycle traffic. The camera set-up has to be chosen carefully when using ID markers: it has to facilitate reading out the markers while still capturing the whole experiment. We propose two set-ups to address this problem and report on experiments conducted with them.

Imalytics Preclinical: Interactive Analysis of Biomedical Volume Data

A software tool is presented for interactive segmentation of volumetric medical data sets. To allow interactive processing of large data sets, segmentation operations and rendering are GPU-accelerated. Special adjustments are provided to overcome GPU-imposed constraints such as limited memory and host-device bandwidth. A general and efficient undo/redo mechanism is implemented using GPU-accelerated compression of the multiclass segmentation state. A broadly applicable set of interactive segmentation operations is provided, which can be combined to solve the quantification task of many types of imaging studies. A fully GPU-accelerated ray casting method for multiclass segmentation rendering is implemented which is well-balanced with respect to delay, frame rate, worst-case memory consumption, scalability, and image quality. The performance of segmentation operations and rendering is measured on high-resolution example data sets, showing that GPU acceleration greatly improves performance. Compared to a reference marching cubes implementation, the rendering was found to be superior with respect to rendering delay and worst-case memory consumption, while providing sufficiently high frame rates for interactive visualization and comparable image quality.
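The undo/redo idea, keeping compressed snapshots of the multiclass label state, can be sketched on the CPU with run-length encoding. This is a stand-in under stated assumptions, not the tool's GPU compression: the label volume is assumed to be flattened into a list of class IDs, one full compressed snapshot is stored per edit, and the class and function names are hypothetical. Run-length encoding suits segmentation masks because labels tend to form long runs of the same class.

```python
def rle_encode(labels):
    """Compress a flat list of class labels into (label, run_length) pairs."""
    runs = []
    for v in labels:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [tuple(r) for r in runs]


def rle_decode(runs):
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out


class UndoStack:
    """Undo/redo history of compressed segmentation snapshots."""

    def __init__(self, initial):
        self.undo_states = [rle_encode(initial)]
        self.redo_states = []

    def commit(self, labels):
        self.undo_states.append(rle_encode(labels))
        self.redo_states.clear()  # a new edit invalidates the redo history

    def undo(self):
        if len(self.undo_states) > 1:  # never drop the initial state
            self.redo_states.append(self.undo_states.pop())
        return rle_decode(self.undo_states[-1])

    def redo(self):
        if self.redo_states:
            self.undo_states.append(self.redo_states.pop())
        return rle_decode(self.undo_states[-1])
```

Storing full compressed snapshots keeps undo and redo trivially correct for any segmentation operation, at the cost of one compression pass per edit.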

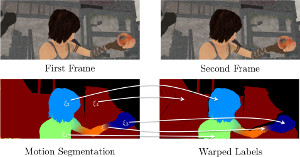

Efficient Dense Rigid-Body Motion Segmentation and Estimation in RGB-D Video

Motion is a fundamental grouping cue in video. Many current approaches to motion segmentation in monocular or stereo image sequences either rely on sparse interest points or are dense but computationally demanding. We propose an efficient expectation-maximization (EM) framework for the dense 3D segmentation of moving rigid parts in RGB-D video. Our approach segments images into pixel regions that undergo coherent 3D rigid-body motion. Our formulation treats background and foreground objects equally and makes no assumptions about the motion of the camera or the objects other than rigidity. While our EM formulation is not restricted to a specific image representation, we supplement it with an efficient image representation and registration method for rapid segmentation of RGB-D video. In experiments, we demonstrate that our approach recovers segmentation and 3D motion with good precision.

@string{ijcv="International Journal of Computer Vision"}

@article{stueckler-ijcv15,

author = {J. Stueckler and S. Behnke},

title = {Efficient Dense Rigid-Body Motion Segmentation and Estimation in RGB-D Video},

journal = ijcv,

month = jan,

year = {2015},

doi = {10.1007/s11263-014-0796-3},

publisher = {Springer US},

}
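The EM alternation described in the abstract above can be illustrated on a much smaller problem. The sketch below is a hypothetical 2D toy version, not the authors' method, and it rests on several assumptions:

- It takes given point correspondences instead of dense RGB-D images.
- It models each of the K rigid motions as a rotation angle plus a translation.
- It uses an isotropic Gaussian residual model with an assumed noise level `sigma`.
- It needs an initial guess for the motions.

The E-step computes soft assignments of points to motions. The M-step refits each motion by weighted 2D Procrustes alignment.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Motion = Tuple[float, float, float]  # (rotation angle in rad, tx, ty)


def apply_motion(m: Motion, p: Point) -> Point:
    """Rotate p by the motion's angle, then translate it."""
    theta, tx, ty = m
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def fit_rigid_weighted(src: Sequence[Point], dst: Sequence[Point],
                       w: Sequence[float]) -> Motion:
    """Closed-form weighted 2D Procrustes: the rotation and translation
    minimising sum_i w_i * |R src_i + t - dst_i|^2."""
    sw = sum(w) or 1e-12
    pcx = sum(wi * p[0] for wi, p in zip(w, src)) / sw
    pcy = sum(wi * p[1] for wi, p in zip(w, src)) / sw
    qcx = sum(wi * q[0] for wi, q in zip(w, dst)) / sw
    qcy = sum(wi * q[1] for wi, q in zip(w, dst)) / sw
    num = den = 0.0
    for wi, p, q in zip(w, src, dst):
        ax, ay = p[0] - pcx, p[1] - pcy
        bx, by = q[0] - qcx, q[1] - qcy
        num += wi * (ax * by - ay * bx)  # weighted cross terms (sine part)
        den += wi * (ax * bx + ay * by)  # weighted dot terms (cosine part)
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return (theta, qcx - (c * pcx - s * pcy), qcy - (s * pcx + c * pcy))


def em_rigid_segmentation(src: Sequence[Point], dst: Sequence[Point],
                          motions: Sequence[Motion], sigma: float = 0.05,
                          iters: int = 10) -> Tuple[List[Motion], List[int]]:
    """Alternate soft assignment (E-step) and weighted refitting (M-step)."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    motions = list(motions)
    k = len(motions)
    priors = [1.0 / k] * k
    resp: List[List[float]] = []
    for _ in range(iters):
        # E-step: responsibilities from Gaussian residuals, normalised in log space.
        resp = []
        for p, q in zip(src, dst):
            logs = []
            for m, pi in zip(motions, priors):
                x, y = apply_motion(m, p)
                r2 = (x - q[0]) ** 2 + (y - q[1]) ** 2
                logs.append(math.log(pi + 1e-12) - r2 / (2.0 * sigma ** 2))
            top = max(logs)
            ws = [math.exp(l - top) for l in logs]
            z = sum(ws)
            resp.append([wj / z for wj in ws])
        # M-step: refit every motion and its mixing weight.
        motions = [fit_rigid_weighted(src, dst, [r[j] for r in resp]) for j in range(k)]
        priors = [sum(r[j] for r in resp) / len(resp) for j in range(k)]
    labels = [max(range(k), key=r.__getitem__) for r in resp]
    return motions, labels
```

The hard labels are read off the final responsibilities. The soft weights themselves carry the uncertainty near motion boundaries.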

Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess - A Concept for the Integration of Virtual Reality Applications to Improve the Information Exchange within the Factory Planning Process

Factory planning is a highly heterogeneous process that involves various expert groups at the same time. In this context, communication between the different expert groups poses a major challenge. One reason for this is the differing domain knowledge of the individual groups. However, since decisions made within one domain usually affect others, it is essential to make these domain interactions visible to all involved experts in order to improve the overall planning process. In this paper, we present a concept that facilitates the integration of two separate virtual reality and visualization analysis tools for different application domains of the planning process. The concept was developed in the context of the Virtual Production Intelligence and aims to make domain interactions visible, so that the aforementioned challenges can be mitigated.

@Article{Pick2015,

Title = {Ein Konzept zur Integration von Virtual Reality Anwendungen zur Verbesserung des Informationsaustauschs im Fabrikplanungsprozess},

Author = {S. Pick and S. Gebhardt and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher and T. {Al Khawli} and U. Eppelt and H. Voet and J. Utsch},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {139--152}

}

Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses - An Approach to the Software Integration of Virtual Reality Applications, Using the Example of the Factory Planning Process

The integration of independent applications is a complex task from a software engineering perspective. Nonetheless, it entails significant benefits, especially in the context of Virtual Reality (VR) supported factory planning, e.g., for communicating interdependencies between different domains. To emphasize this aspect, we integrated two independent VR and visualization applications into a holistic planning solution. Special focus was put on parallelization and interaction aspects, while more general requirements of such an integration process were also considered. In summary, we present technical solutions for effectively integrating several VR applications into a holistic solution, illustrated by the integration of two factory-planning applications. The effectiveness of the approach is demonstrated by performance measurements.

@Article{Gebhardt2015,

Title = {Ein Ansatz zur Softwaretechnischen Integration von Virtual Reality Anwendungen am Beispiel des Fabrikplanungsprozesses},

Author = {S. Gebhardt and S. Pick and B. Hentschel and T. W. Kuhlen and R. Reinhard and C. Büscher},

Journal = {Tagungsband 12. Paderborner Workshop Augmented \& Virtual Reality in der Produktentstehung},

Year = {2015},

Pages = {153--166}

}

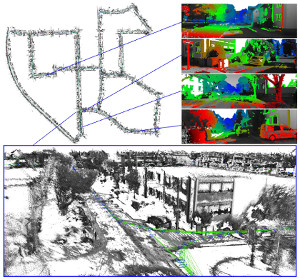

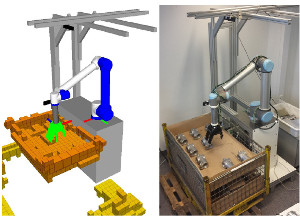

NimbRo Explorer: Semi-Autonomous Exploration and Mobile Manipulation in Rough Terrain

Fully autonomous exploration and mobile manipulation in rough terrain are still beyond the state of the art; robotics challenges and competitions are held to facilitate and benchmark research in this direction. One example is the DLR SpaceBot Cup 2013, for which we developed an integrated robot system to semi-autonomously perform planetary exploration and manipulation tasks. Our robot explores, maps, and navigates in previously unknown, uneven terrain using a 3D laser scanner and an omnidirectional RGB-D camera. We developed manipulation capabilities for object retrieval and pick-and-place tasks. Many parts of the mission can be performed autonomously. In addition, we developed teleoperation interfaces on different levels of shared autonomy, which allow for specifying missions, monitoring mission progress, and reconfiguring on the fly. To handle network communication interruptions and latencies between the robot and the operator station, we implemented a robust network layer for the ROS middleware. The integrated system was demonstrated at the DLR SpaceBot Cup 2013. In addition, we conducted systematic experiments to evaluate the performance of our approaches.

@article{stueckler15_jfr_explorer,

author={J. Stueckler and M. Schwarz and M. Schadler and A. Topalidou-Kyniazopoulou and S. Behnke},

title={NimbRo Explorer: Semi-Autonomous Exploration and Mobile Manipulation in Rough Terrain},

journal={Journal of Field Robotics},

note={published online},

year={2015},

}

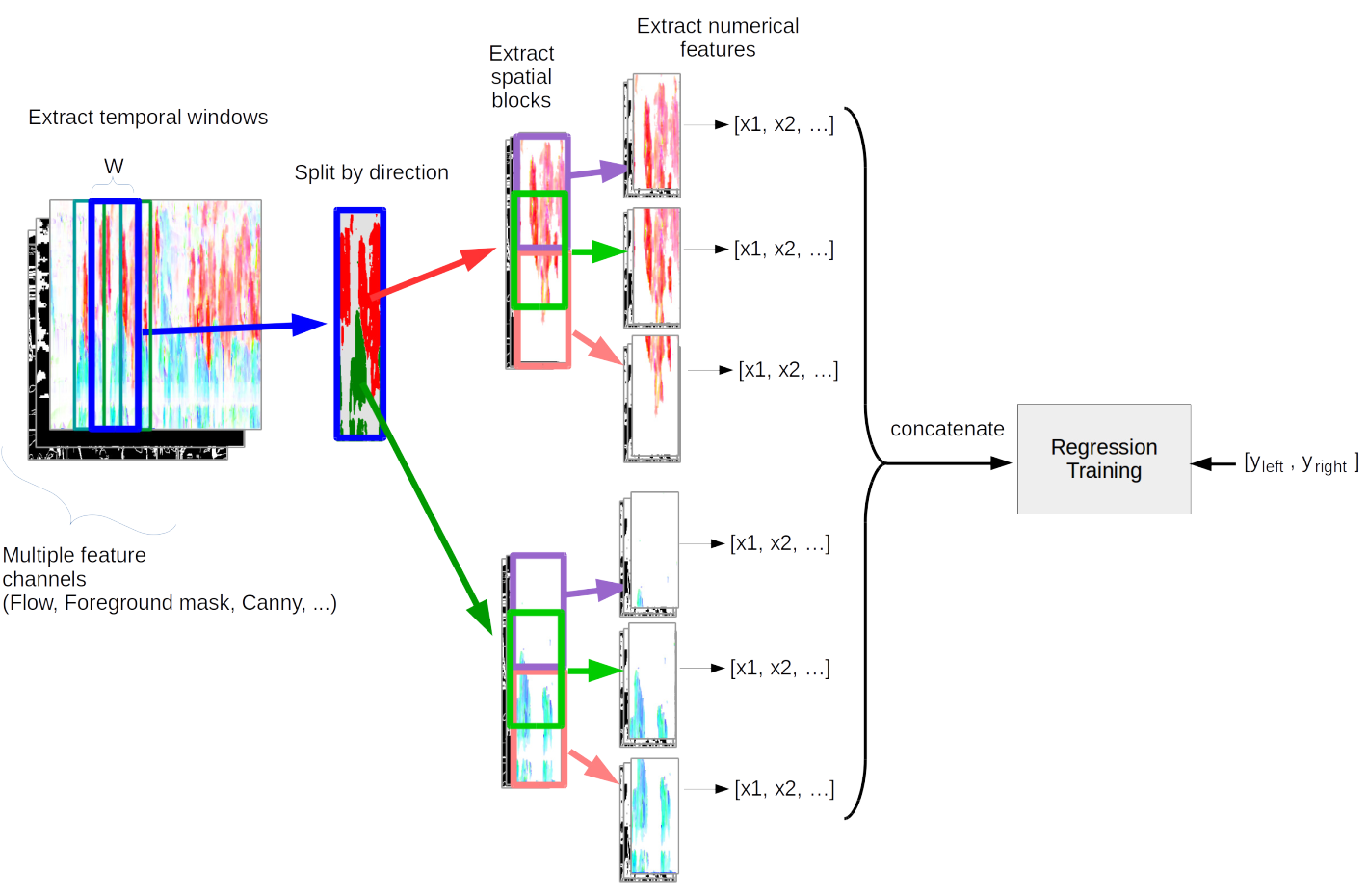

Pedestrian Line Counting by Probabilistic Combination of Flow and Appearance Information

In this thesis we examine the task of estimating how many pedestrians cross a given line in a surveillance video, in the presence of high occlusion and dense crowds. We show that a prior, blob-based pedestrian line counting method fails on our newly annotated private dataset, which is more challenging than those used in the literature.

We propose a new spatiotemporal slice-based method that works with simple low-level features based on optical flow, background subtraction, and edge detection, and show that it produces good results on the new dataset. Furthermore, presumably owing to the very simple and general nature of the features we use, the method also performs well on the popular UCSD vidd dataset without additional hyperparameter tuning, which indicates the robustness of our approach.

We design new evaluation measures that generalize the precision and recall used in information retrieval and binary classification to continuous, instantaneous pedestrian flow estimates, and we argue that they are better suited to this task than the measures currently in use.

We also examine the relation between pedestrian region counting and line counting by comparing the output of a region counting method with the counts we derive from line counting. Finally, we report a negative result: a probabilistic method for combining the outputs of line and region counters does not yield the hoped-for mutual improvement of the counters.
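This abstract does not give the thesis's exact measures. One plausible way to generalize precision and recall to continuous per-frame flow values treats the frame-wise minimum of estimated and true flow as the "correctly counted" mass. The sketch below shows that approach purely as a hypothetical illustration; the function name and this particular construction are assumptions. For binary 0/1 sequences it reduces to the usual precision and recall.

```python
from typing import Sequence, Tuple


def soft_precision_recall(est: Sequence[float], gt: Sequence[float]) -> Tuple[float, float]:
    """Hypothetical continuous precision/recall for per-frame pedestrian flow.

    The overlap in each frame is min(estimate, ground truth); precision divides
    the total overlap by the total estimated flow, recall by the total true flow.
    """
    if len(est) != len(gt):
        raise ValueError("sequences must have equal length")
    if any(v < 0 for v in est) or any(v < 0 for v in gt):
        raise ValueError("flow values must be non-negative")
    overlap = sum(min(e, g) for e, g in zip(est, gt))
    total_est = sum(est)
    total_gt = sum(gt)
    precision = overlap / total_est if total_est > 0 else 1.0
    recall = overlap / total_gt if total_gt > 0 else 1.0
    return precision, recall
```

This simple version matches frame by frame, so it penalizes an estimate that is correct but off by one frame. Handling such temporal offsets is one reason dedicated measures may be preferable.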

Sequence-Discriminative Training of Recurrent Neural Networks

We investigate sequence-discriminative training of long short-term memory (LSTM) recurrent neural networks using the maximum mutual information (MMI) criterion. We show that although recurrent neural networks already make use of the whole observation sequence and can incorporate more contextual information than feed-forward networks, their performance can be improved with sequence-discriminative training. Experiments are performed on two publicly available handwriting recognition tasks containing English and French handwriting. On the English corpus, we obtain a relative improvement in word error rate (WER) of over 11% with MMI training compared to cross-entropy training. On the French corpus, we observe that it is necessary to interpolate the MMI objective function with cross-entropy.

@InProceedings { voigtlaender2015:seq,

author= {Voigtlaender, Paul and Doetsch, Patrick and Wiesler, Simon and Schlüter, Ralf and Ney, Hermann},

title= {Sequence-Discriminative Training of Recurrent Neural Networks},

booktitle= {IEEE International Conference on Acoustics, Speech, and Signal Processing},

year= 2015,

pages= {2100--2104},

address= {Brisbane, Australia},

month= apr,

booktitlelink= {http://icassp2015.org/}

}
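For reference, the MMI criterion in its common form can be written as follows, together with one way of interpolating it with cross-entropy. This is a generic formulation given as a sketch and is not necessarily the exact objective used in the paper. The symbols are:

- $x_r$: the $r$-th training image sequence
- $W_r$: its reference transcription
- $\kappa$: a scaling factor for the optical model
- $s_{r,t}$: the frame-level target label
- $\lambda$: the interpolation weight, a hypothetical hyperparameter

$$
\mathcal{F}_{\mathrm{MMI}}(\theta) = \sum_{r=1}^{R} \log \frac{p_\theta(x_r \mid W_r)^{\kappa}\, P(W_r)}{\sum_{W} p_\theta(x_r \mid W)^{\kappa}\, P(W)}
$$

$$
\mathcal{L}(\theta) = -\mathcal{F}_{\mathrm{MMI}}(\theta) + \lambda\, \mathcal{L}_{\mathrm{CE}}(\theta),
\qquad
\mathcal{L}_{\mathrm{CE}}(\theta) = -\sum_{r=1}^{R} \sum_{t=1}^{T_r} \log p_\theta(s_{r,t} \mid x_r)
$$

$$
\Delta_{\mathrm{rel}} = \frac{\mathrm{WER}_{\mathrm{CE}} - \mathrm{WER}_{\mathrm{MMI}}}{\mathrm{WER}_{\mathrm{CE}}}
$$

In this notation, a relative improvement of over 11% means $\Delta_{\mathrm{rel}} > 0.11$.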

Multi-Layered Mapping and Navigation for Autonomous Micro Aerial Vehicles

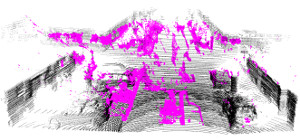

Micro aerial vehicles, such as multirotors, are particularly well suited for the autonomous monitoring, inspection, and surveillance of buildings, e.g., for maintenance or disaster management. Key prerequisites for the fully autonomous operation of micro aerial vehicles are real-time obstacle detection and the planning of collision-free trajectories. In this article, we propose a complete system with a multimodal sensor setup for omnidirectional obstacle perception, consisting of a 3D laser scanner, two stereo camera pairs, and ultrasonic distance sensors. Detected obstacles are aggregated in egocentric local multiresolution grid maps. Local maps are efficiently merged in order to simultaneously build global maps of the environment and localize within them. For autonomous navigation, we generate trajectories in a multi-layered approach: from mission planning, through global and local trajectory planning, to reactive obstacle avoidance. We evaluate our approach and the involved components in simulation and on the real autonomous micro aerial vehicle. Finally, we present the results of a complete mission for autonomously mapping a building and its surroundings.

@article{droeschel15-jfr-mod,

author={D. Droeschel and M. Nieuwenhuisen and M. Beul and J. Stueckler and D. Holz and S. Behnke},

title={Multi-Layered Mapping and Navigation for Autonomous Micro Aerial Vehicles},

journal={Journal of Field Robotics},

year={2015},

note={published online},

}
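An egocentric multiresolution grid uses fine cells close to the vehicle and coarser cells farther away. The sketch below expresses this idea as a lookup from a point in vehicle-centred coordinates to a (level, cell) key. In this hypothetical layout, the cell size doubles from one level to the next and every level spans the same number of cells. Memory therefore stays constant per level while the covered range grows exponentially. The 0.25 m base size, 8 cells per half-width and 4 levels are illustrative assumptions, not the parameters of the published system.

```python
import math
from typing import Optional, Tuple

CellKey = Tuple[int, int, int]  # (level, ix, iy)


def multires_cell(x: float, y: float, base_size: float = 0.25,
                  half_cells: int = 8, levels: int = 4) -> Optional[CellKey]:
    """Map a point in vehicle-centred coordinates (metres) to the finest grid
    level whose square extent contains it; None if beyond the outermost level."""
    d = max(abs(x), abs(y))  # Chebyshev distance: each level is a square around the vehicle
    for level in range(levels):
        size = base_size * (2 ** level)  # cell edge length doubles per level
        if d < half_cells * size:
            return (level, math.floor(x / size), math.floor(y / size))
    return None
```

With these values, each level holds (2 × 8)² = 256 cells. The levels reach 2 m, 4 m, 8 m and 16 m from the vehicle.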

Dense Continuous-Time Tracking and Mapping with Rolling Shutter RGB-D Cameras

We propose a dense continuous-time tracking and mapping method for RGB-D cameras. We parametrize the camera trajectory using continuous B-splines and optimize the trajectory through dense, direct image alignment. Our method also directly models rolling shutter in both RGB and depth images within the optimization, which improves tracking and reconstruction quality for low-cost CMOS sensors. A continuous trajectory representation has a number of advantages over a discrete-time representation (e.g., camera poses at the frame interval). With splines, fewer variables need to be optimized than with a discrete representation, since the trajectory can be represented with fewer control points than frames. Splines also naturally include smoothness constraints on the derivatives of the trajectory estimate. Finally, the continuous trajectory representation makes it possible to compensate for rolling shutter effects, since a pose estimate is available at any exposure time of an image. Our approach demonstrates superior tracking and reconstruction quality compared to approaches with discrete-time or global shutter assumptions.

@string{iccv="IEEE International Conference on Computer Vision (ICCV)"}

@inproceedings{kerl15iccv,

author = {C. Kerl and J. Stueckler and D. Cremers},

title = {Dense Continuous-Time Tracking and Mapping with Rolling Shutter {RGB-D} Cameras},

booktitle = iccv,

year = {2015},

address = {Santiago, Chile},

}
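A continuous-time trajectory makes rolling shutter tractable because the pose can be queried at any time. The sketch below illustrates this by evaluating a uniform cubic B-spline at an arbitrary time and giving each image row its own exposure time. It is a simplification under stated assumptions, not the authors' implementation:

- Only the camera position is interpolated; the rotation part of the pose is omitted.
- The control points are uniformly spaced in time with spacing `dt`.
- `line_delay`, the time between the exposure of consecutive rows, is a hypothetical sensor parameter.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cubic_bspline_weights(u: float) -> Tuple[float, float, float, float]:
    """Uniform cubic B-spline basis values for local parameter u in [0, 1)."""
    return ((1 - u) ** 3 / 6.0,
            (3 * u ** 3 - 6 * u ** 2 + 4) / 6.0,
            (-3 * u ** 3 + 3 * u ** 2 + 3 * u + 1) / 6.0,
            u ** 3 / 6.0)


def spline_position(ctrl: Sequence[Vec3], t0: float, dt: float, t: float) -> Vec3:
    """Camera position at time t. Segment i covers times [t0 + i*dt, t0 + (i+1)*dt)
    and blends control points i..i+3."""
    s = (t - t0) / dt
    i = math.floor(s)
    if i < 0 or i + 3 >= len(ctrl):
        raise ValueError("t lies outside the time range covered by the control points")
    b = cubic_bspline_weights(s - i)
    x, y, z = (sum(b[k] * ctrl[i + k][d] for k in range(4)) for d in range(3))
    return (x, y, z)


def row_time(frame_time: float, row: int, line_delay: float) -> float:
    """Rolling shutter: each image row is exposed line_delay seconds after the previous one."""
    return frame_time + row * line_delay
```

Each row gets its own timestamp, so the top and bottom rows of a frame are associated with different positions. Only four control points influence any time instant, so the number of optimization variables grows with the number of control points rather than with the number of frames.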

Motion Cooperation: Smooth Piece-Wise Rigid Scene Flow from RGB-D Images

We propose a novel joint registration and segmentation approach to estimate scene flow from RGB-D images. Instead of assuming the scene to be composed of a number of independent rigidly moving parts, we use non-binary labels to capture non-rigid deformations at transitions between the rigid parts of the scene. Thus, the velocity of any point can be computed as a linear combination (interpolation) of the estimated rigid motions, which provides better results than traditional sharp piecewise segmentations. Within a variational framework, the smooth segments of the scene and their corresponding rigid velocities are alternately refined until convergence. A K-means-based segmentation is employed for initialization, and the number of regions is subsequently adapted during the optimization process to capture an arbitrary number of independently moving objects. We evaluate our approach on both synthetic and real RGB-D images that contain varied and large motions. The experiments show that our method estimates the scene flow more accurately than the most recent works in the field, while also providing a meaningful segmentation of the scene based on 3D motion.

@inproceedings{jaimez15_mocoop,

author= {M. Jaimez and M. Souiai and J. Stueckler and J. Gonzalez-Jimenez and D. Cremers},

title = {Motion Cooperation: Smooth Piece-Wise Rigid Scene Flow from RGB-D Images},

booktitle = {Proc. of the Int. Conference on 3D Vision (3DV)},

month = oct,

year = 2015,

}
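The interpolation of rigid motions mentioned in the abstract above can be written as $\mathbf{v}(\mathbf{p}) = \sum_i w_i(\mathbf{p})\,(\boldsymbol{\omega}_i \times \mathbf{p} + \mathbf{t}_i)$, where the non-binary labels $w_i$ sum to one. The sketch below evaluates this expression for a single point. It assumes each rigid motion is given as an angular velocity $\boldsymbol{\omega}_i$ and a linear velocity $\mathbf{t}_i$. The function names and this twist parametrization are illustrative assumptions rather than the paper's exact formulation.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Twist = Tuple[Vec3, Vec3]  # (angular velocity omega, linear velocity t)


def cross(a: Vec3, b: Vec3) -> Vec3:
    """3D cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rigid_point_velocity(twist: Twist, p: Vec3) -> Vec3:
    """Velocity of point p under one rigid motion: omega x p + t."""
    omega, t = twist
    w = cross(omega, p)
    return (w[0] + t[0], w[1] + t[1], w[2] + t[2])


def blended_velocity(p: Vec3, twists: Sequence[Twist], weights: Sequence[float]) -> Vec3:
    """Scene flow at p as a convex combination of the rigid-motion velocities."""
    if len(twists) != len(weights):
        raise ValueError("need one weight per rigid motion")
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to one")
    v = [0.0, 0.0, 0.0]
    for twist, w in zip(twists, weights):
        rv = rigid_point_velocity(twist, p)
        for d in range(3):
            v[d] += w * rv[d]
    return (v[0], v[1], v[2])
```

With one-hot weights, this reduces to a sharp piecewise rigid segmentation. Fractional weights at segment borders yield the smooth transitions that the abstract describes.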

Reconstructing Street-Scenes in Real-Time From a Driving Car