Publications

4D-StOP: Panoptic Segmentation of 4D LiDAR using Spatio-temporal Object Proposal Generation and Aggregation

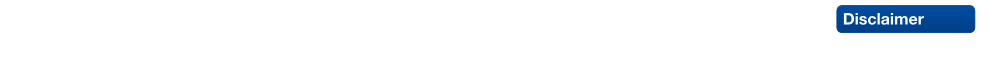

In this work, we present a new paradigm, called 4D-StOP, to tackle the task of 4D Panoptic LiDAR Segmentation. 4D-StOP first generates spatio-temporal proposals using voting-based center predictions, where each point in the 4D volume votes for a corresponding center. These tracklet proposals are further aggregated using learned geometric features. The tracklet aggregation method effectively generates a video-level 4D scene representation over the entire space-time volume. This is in contrast to existing end-to-end trainable state-of-the-art approaches which use spatio-temporal embeddings that are represented by Gaussian probability distributions. Our voting-based tracklet generation method followed by geometric feature-based aggregation generates significantly improved panoptic LiDAR segmentation quality when compared to modeling the entire 4D volume using Gaussian probability distributions. 4D-StOP achieves a new state-of-the-art when applied to the SemanticKITTI test dataset with a score of 63.9 LSTQ, which is a large (+7%) improvement compared to current best-performing end-to-end trainable methods. The code and pre-trained models are available at: https://github.com/LarsKreuzberg/4D-StOP
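The voting idea in the abstract above can be pictured with a toy sketch. It is not the 4D-StOP implementation: the offsets are supplied by hand rather than predicted by a network, and the greedy radius-based grouping, the function name `group_votes`, and the `radius` parameter are assumptions made purely for illustration.

```python
import math


def group_votes(points, offsets, radius=0.5):
    """Group points whose center votes fall close together.

    points, offsets: equally long lists of coordinate tuples;
    the vote of a point is point + offset.
    Returns one cluster label per point.
    """
    votes = [tuple(p + o for p, o in zip(pt, off))
             for pt, off in zip(points, offsets)]
    centers = []  # running mean vote of each cluster (hypothetical grouping rule)
    counts = []   # number of votes collected by each cluster
    labels = []
    for v in votes:
        for k, c in enumerate(centers):
            if math.dist(v, c) <= radius:
                n = counts[k]
                # Update the running mean of the cluster center.
                centers[k] = tuple((ci * n + vi) / (n + 1) for ci, vi in zip(c, v))
                counts[k] = n + 1
                labels.append(k)
                break
        else:
            # No existing cluster is close enough: open a new one.
            centers.append(v)
            counts.append(1)
            labels.append(len(centers) - 1)
    return labels
```

Points that vote for nearly the same center receive the same label, regardless of how far apart the points themselves are.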

EMBER: Exact Mesh Booleans via Efficient & Robust Local Arrangements

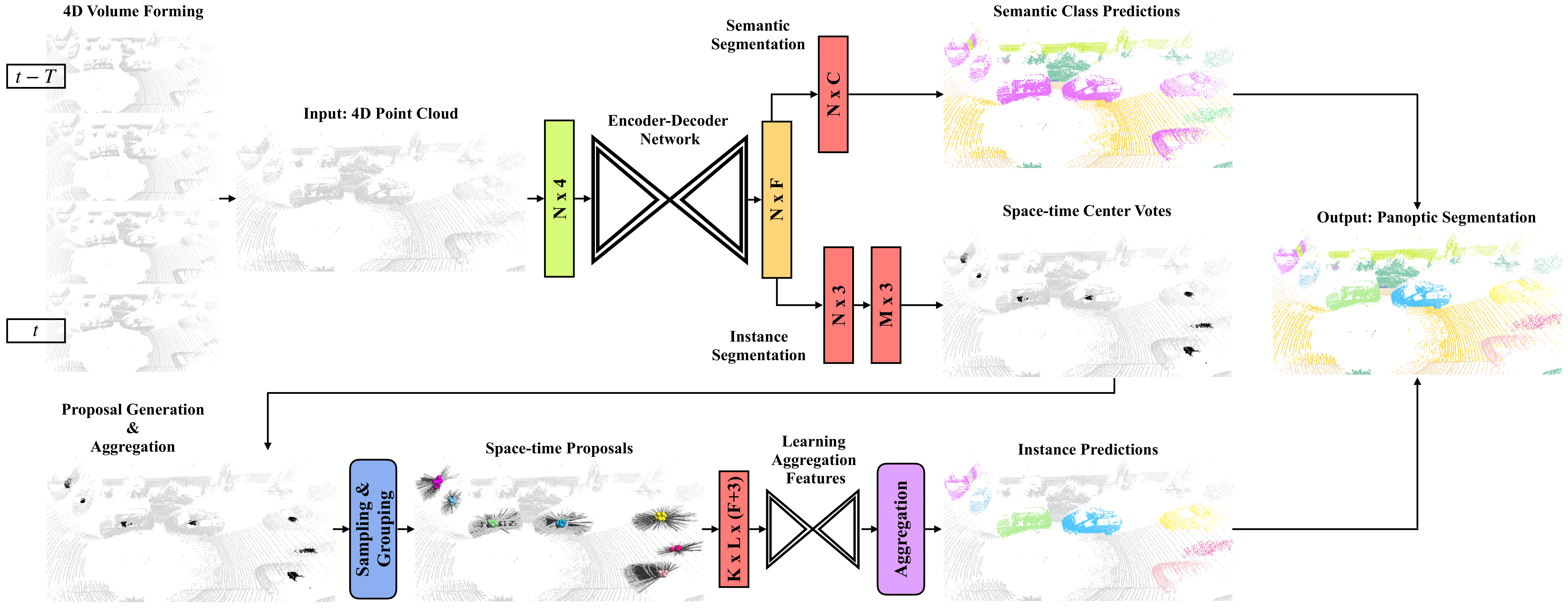

Boolean operators are an essential tool in a wide range of geometry processing and CAD/CAM tasks. We present a novel method, EMBER, to compute Boolean operations on polygon meshes which is exact, reliable, and highly performant at the same time. Exactness is guaranteed by using a plane-based representation for the input meshes along with recently introduced homogeneous integer coordinates. Reliability and robustness emerge from a formulation of the algorithm via generalized winding numbers and mesh arrangements. High performance is achieved by avoiding the (pre-)construction of a global acceleration structure. Instead, our algorithm performs an adaptive recursive subdivision of the scene’s bounding box while generating and tracking all required data on the fly. By leveraging a number of early-out termination criteria, we can avoid the generation and inspection of regions that do not contribute to the output. With a careful implementation and a work-stealing multi-threading architecture, we are able to compute Boolean operations between meshes with millions of triangles at interactive rates. We run an extensive evaluation on the Thingi10K dataset to demonstrate that our method outperforms state-of-the-art algorithms, even inexact ones like QuickCSG, by orders of magnitude.

If you are interested in a binary implementation including various additional features, please contact the authors. Contact: trettner@shapedcode.com

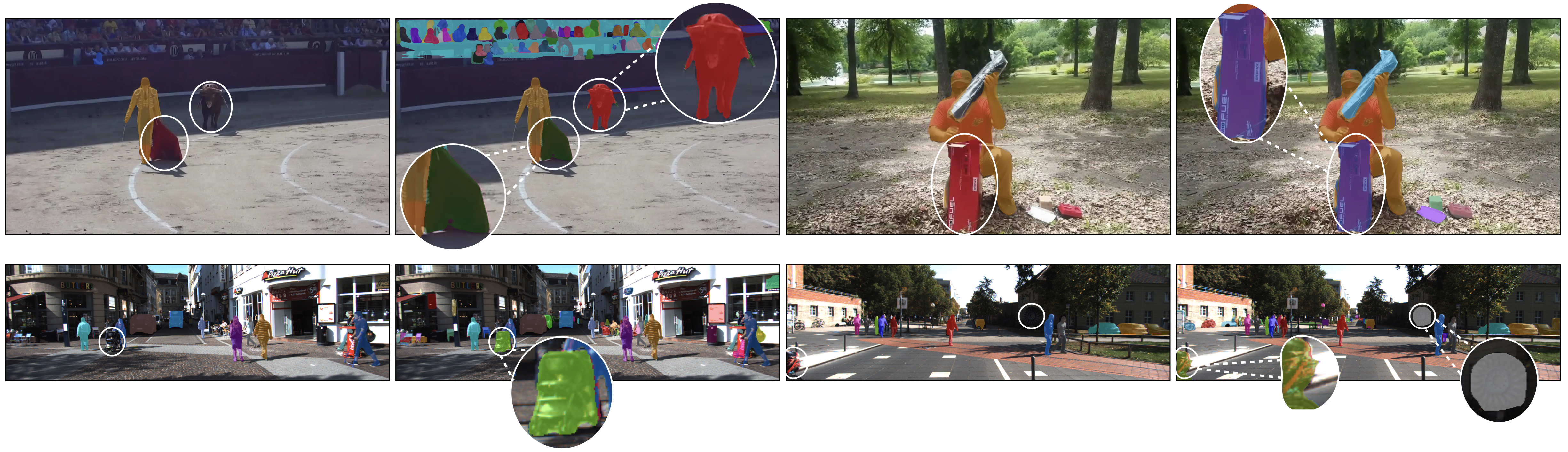

HODOR: High-level Object Descriptors for Object Re-segmentation in Video Learned from Static Images

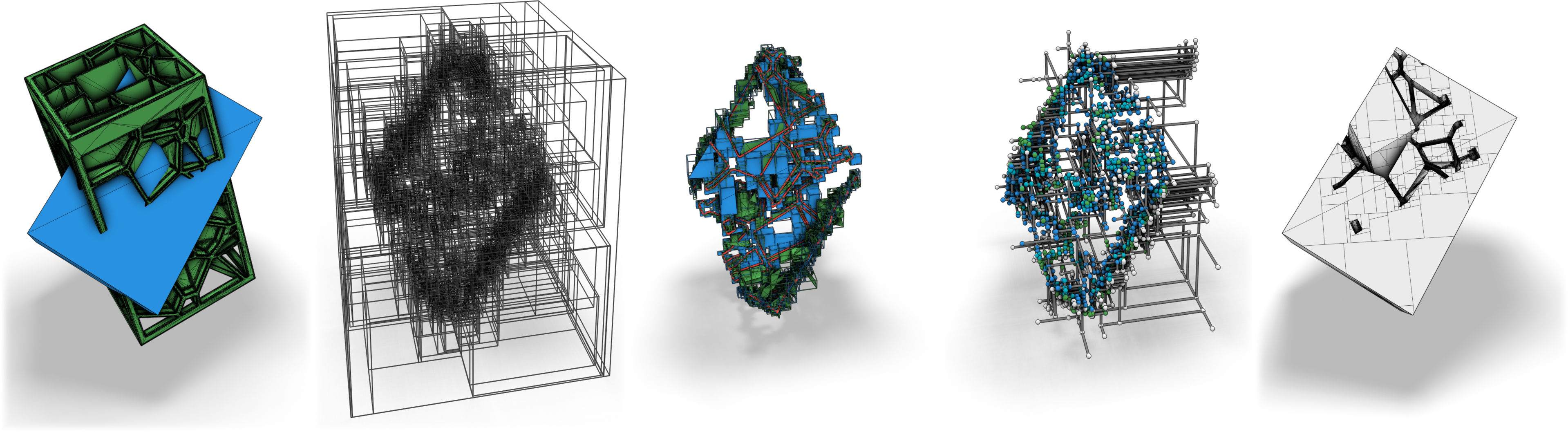

Existing state-of-the-art methods for Video Object Segmentation (VOS) learn low-level pixel-to-pixel correspondences between frames to propagate object masks across video. This requires a large amount of densely annotated video data, which is costly to annotate, and largely redundant since frames within a video are highly correlated. In light of this, we propose HODOR: a novel method that tackles VOS by effectively leveraging annotated static images for understanding object appearance and scene context. We encode object instances and scene information from an image frame into robust high-level descriptors which can then be used to re-segment those objects in different frames. As a result, HODOR achieves state-of-the-art performance on the DAVIS and YouTube-VOS benchmarks compared to existing methods trained without video annotations. Without any architectural modification, HODOR can also learn from video context around single annotated video frames by utilizing cyclic consistency, whereas other methods rely on dense, temporally consistent annotations.

@inproceedings{Athar22CVPR,

title = {{HODOR: High-level Object Descriptors for Object Re-segmentation in Video Learned from Static Images}},

author = {Athar, Ali and Luiten, Jonathon and Hermans, Alexander and Ramanan, Deva and Leibe, Bastian},

booktitle = {{IEEE Conference on Computer Vision and Pattern Recognition (CVPR'22)}},

year = {2022}

}

Opening up Open World Tracking

Tracking and detecting any object, including ones never-seen-before during model training, is a crucial but elusive capability of autonomous systems. An autonomous agent that is blind to never-seen-before objects poses a safety hazard when operating in the real world and yet this is how almost all current systems work. One of the main obstacles towards advancing tracking any object is that this task is notoriously difficult to evaluate. A benchmark that would allow us to perform an apples-to-apples comparison of existing efforts is a crucial first step towards advancing this important research field. This paper addresses this evaluation deficit and lays out the landscape and evaluation methodology for detecting and tracking both known and unknown objects in the open-world setting. We propose a new benchmark, TAO-OW: Tracking Any Object in an Open World, analyze existing efforts in multi-object tracking, and construct a baseline for this task while highlighting future challenges. We hope to open a new front in multi-object tracking research that will bring us a step closer to intelligent systems that can operate safely in the real world.

@inproceedings{liu2022opening,

title={Opening up Open-World Tracking},

author={Liu, Yang and Zulfikar, Idil Esen and Luiten, Jonathon and Dave, Achal and Ramanan, Deva and Leibe, Bastian and O{\v{s}}ep, Aljo{\v{s}}a and Leal-Taix{\'e}, Laura},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

year={2022}

}

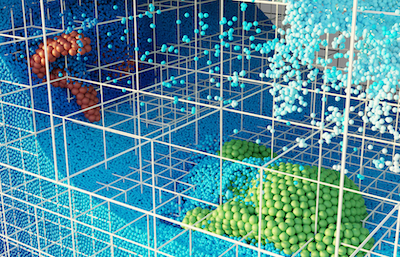

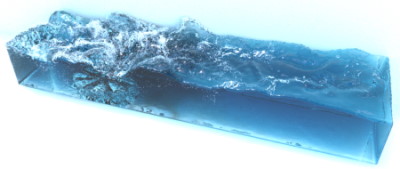

Fast Octree Neighborhood Search for SPH Simulations

We present a new octree-based neighborhood search method for SPH simulation. A speedup of up to 1.9x is observed in comparison to state-of-the-art methods which rely on uniform grids. While our method focuses on maximizing performance in fixed-radius SPH simulations, we show that it can also be used in scenarios where the particle support radius is not constant thanks to the adaptive nature of the octree acceleration structure.

Neighborhood search methods typically consist of an acceleration structure that prunes the space of possible particle neighbor pairs, followed by direct distance comparisons between the remaining particle pairs. Previous works have focused on minimizing the number of comparisons. However, in an effort to minimize the actual computation time, we find that distance comparisons exhibit very high throughput on modern CPUs. By permitting more comparisons than strictly necessary, the time spent on preparing and searching the acceleration structure can be reduced, yielding a net positive speedup. The choice of an octree acceleration structure, instead of the uniform grid typically used in fixed-radius methods, ensures balanced computational tasks. This both benefits parallelism and provides consistently high computational intensity for the distance comparisons. We present a detailed account of high-level considerations that, together with low-level decisions, enable high throughput for performance-critical parts of the algorithm.

Finally, we demonstrate the high performance of our algorithm on a number of large-scale fixed-radius SPH benchmarks and show in experiments with a support radius ratio up to 3 that our method is also effective in multi-resolution SPH simulations.
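To make the two stages named above concrete (an acceleration structure that prunes candidate pairs, then direct distance comparisons), here is a minimal sketch of the uniform-grid baseline that fixed-radius methods typically use. It is not the octree method of the paper; the function name `grid_neighbors` and the choice of a cell edge equal to the search radius are assumptions for illustration.

```python
import math
from collections import defaultdict
from itertools import product


def grid_neighbors(positions, radius):
    """Return, for each particle, the sorted indices of all other particles within `radius`."""
    # Acceleration structure: hash every particle into a cubic cell of edge length `radius`.
    cells = defaultdict(list)
    for i, p in enumerate(positions):
        cells[tuple(math.floor(c / radius) for c in p)].append(i)

    neighbors = [[] for _ in positions]
    for i, p in enumerate(positions):
        cx, cy, cz = (math.floor(c / radius) for c in p)
        # Pruning: only the 27 surrounding cells can contain neighbors.
        for dx, dy, dz in product((-1, 0, 1), repeat=3):
            for j in cells.get((cx + dx, cy + dy, cz + dz), ()):
                # Direct distance comparison on the remaining candidates.
                if j != i and math.dist(p, positions[j]) <= radius:
                    neighbors[i].append(j)
        neighbors[i].sort()
    return neighbors
```

Enlarging the cells would admit more candidate pairs per query while making the structure cheaper to build, which is the kind of trade-off the abstract describes.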

@ARTICLE{ FWL+22,

author= {Jos{\'{e}} Antonio Fern{\'{a}}ndez-Fern{\'{a}}ndez and Lukas Westhofen and Fabian L{\"{o}}schner and Stefan Rhys Jeske and Andreas Longva and Jan Bender },

title= {{Fast Octree Neighborhood Search for SPH Simulations}},

year= {2022},

journal= {ACM Transactions on Graphics (SIGGRAPH Asia)},

publisher= {ACM},

volume = {41},

number = {6},

pages= {13}

}

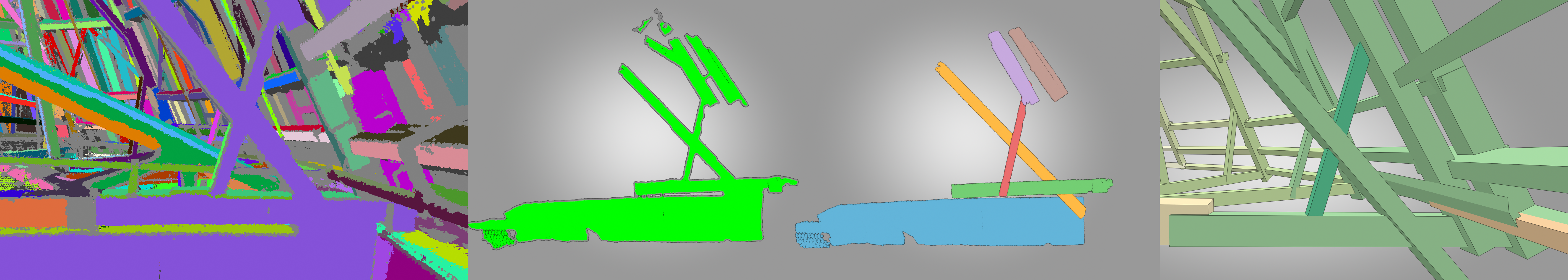

Scan2FEM: From Point Clouds to Structured 3D Models Suitable for Simulation

Preservation of cultural heritage is important to prevent singular objects or sites of cultural importance from decaying. One aspect of preservation is the creation of a digital twin. In case of a catastrophic event, this twin can be used to support repairs or reconstruction, in order to stay faithful to the original object or site. Certain activities in prolongation of such an object’s lifetime may involve adding or replacing structural support elements to prevent a collapse. We propose an automatic method that is capable of transforming a point cloud into a geometric representation that is suitable for structural analysis. We robustly find cuboids and their connections in a point cloud to approximate the wooden beam structure contained inside. We export the necessary information to perform structural analysis, on the example of the timber attic of the UNESCO World Heritage Aachen Cathedral. We provide evaluation of the resulting cuboids’ quality and show how a user can interactively refine the cuboids in order to improve the approximated model, and consequently the simulation results.

@inproceedings {10.2312:gch.20221215,

booktitle = {Eurographics Workshop on Graphics and Cultural Heritage},

editor = {Ponchio, Federico and Pintus, Ruggero},

title = {{Scan2FEM: From Point Clouds to Structured 3D Models Suitable for Simulation}},

author = {Selman, Zain and Musto, Juan and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISSN = {2312-6124},

ISBN = {978-3-03868-178-6},

DOI = {10.2312/gch.20221215}

}

TinyAD: Automatic Differentiation in Geometry Processing Made Simple

Non-linear optimization is essential to many areas of geometry processing research. However, when experimenting with different problem formulations or when prototyping new algorithms, a major practical obstacle is the need to figure out derivatives of objective functions, especially when second-order derivatives are required. Deriving and manually implementing gradients and Hessians is both time-consuming and error-prone. Automatic differentiation techniques address this problem, but can introduce a diverse set of obstacles themselves, e.g. limiting the set of supported language features, imposing restrictions on a program's control flow, incurring a significant run time overhead, or making it hard to exploit sparsity patterns common in geometry processing. We show that for many geometric problems, in particular on meshes, the simplest form of forward-mode automatic differentiation is not only the most flexible, but also actually the most efficient choice. We introduce TinyAD: a lightweight C++ library that automatically computes gradients and Hessians, in particular of sparse problems, by differentiating small (tiny) sub-problems. Its simplicity enables easy integration; no restrictions on, e.g., looping and branching are imposed. TinyAD provides the basic ingredients to quickly implement first and second order Newton-style solvers, allowing for flexible adjustment of both problem formulations and solver details. By showcasing compact implementations of methods from parametrization, deformation, and direction field design, we demonstrate how TinyAD lowers the barrier to exploring non-linear optimization techniques. This enables not only fast prototyping of new research ideas, but also improves replicability of existing algorithms in geometry processing. TinyAD is available to the community as an open source library.
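For readers unfamiliar with the technique, forward-mode automatic differentiation can be sketched with dual numbers that carry a value together with its gradient. The sketch below is a generic Python illustration, not the TinyAD API (TinyAD is a C++ library): the class `Dual` and the helpers `sin` and `gradient` are hypothetical names, and only first derivatives are tracked, whereas TinyAD also computes Hessians.

```python
import math


class Dual:
    """A value together with its gradient with respect to the input variables."""

    def __init__(self, val, grad):
        self.val = val
        self.grad = list(grad)

    @staticmethod
    def _lift(x, n):
        # Treat plain numbers as constants with a zero gradient.
        return x if isinstance(x, Dual) else Dual(x, [0.0] * n)

    def __add__(self, other):
        other = Dual._lift(other, len(self.grad))
        return Dual(self.val + other.val,
                    [a + b for a, b in zip(self.grad, other.grad)])

    __radd__ = __add__

    def __mul__(self, other):
        other = Dual._lift(other, len(self.grad))
        # Product rule: (u v)' = u' v + u v'
        return Dual(self.val * other.val,
                    [a * other.val + self.val * b
                     for a, b in zip(self.grad, other.grad)])

    __rmul__ = __mul__


def sin(x):
    # Chain rule: sin(u)' = cos(u) u'
    return Dual(math.sin(x.val), [math.cos(x.val) * g for g in x.grad])


def gradient(f, point):
    """Evaluate f at `point` and return (value, gradient)."""
    n = len(point)
    # Seed variable i with the i-th unit vector as its gradient.
    args = [Dual(v, [1.0 if i == j else 0.0 for j in range(n)])
            for i, v in enumerate(point)]
    out = f(*args)
    return out.val, out.grad
```

Because derivatives propagate through ordinary operators, the differentiated function may use loops and branches freely, for example `gradient(lambda x, y: x * y + sin(x), [2.0, 3.0])`.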

- Best Paper Award (1st place) at SGP 2022

- Graphics Replicability Stamp

@article{schmidt2022tinyad,

title={{TinyAD}: Automatic Differentiation in Geometry Processing Made Simple},

author={Schmidt, Patrick and Born, Janis and Bommes, David and Campen, Marcel and Kobbelt, Leif},

year={2022},

journal={Computer Graphics Forum},

volume={41},

number={5},

}

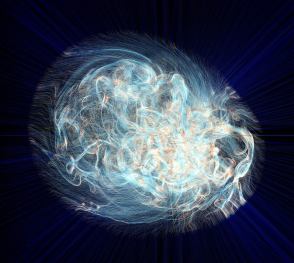

A Survey on SPH Methods in Computer Graphics

Throughout the past decades, the graphics community has spent major resources on the research and development of physics simulators on the mission to computer-generate behaviors achieving outstanding visual effects or to make the virtual world indistinguishable from reality. The variety and impact of recent research based on Smoothed Particle Hydrodynamics (SPH) demonstrates the concept's importance as one of the most versatile tools for the simulation of fluids and solids. With this survey, we offer an overview of the developments and still-active research on physics simulation methodologies based on SPH that has not been addressed in previous SPH surveys. Following an introduction about typical SPH discretization techniques, we provide an overview of the most used incompressibility solvers and present novel insights regarding their relation and conditional equivalence. The survey further covers recent advances in implicit and particle-based boundary handling and sampling techniques. While SPH is best known in the context of fluid simulation, we discuss modern concepts to augment the range of simulatable physical characteristics including turbulence, highly viscous matter, deformable solids, as well as rigid body contact handling. Besides the purely numerical approaches, simulation techniques aided by machine learning are on the rise. Thus, the survey discusses recent data-driven approaches and the impact of differentiable solvers on artist control. Finally, we provide context for discussion by outlining existing problems and opportunities to open up new research directions.

@article {KBST2022,

journal = {Computer Graphics Forum},

title = {{A Survey on SPH Methods in Computer Graphics}},

author = {Koschier, Dan and Bender, Jan and Solenthaler, Barbara and Teschner, Matthias},

year = {2022},

volume ={41},

number = {2},

publisher = {The Eurographics Association and John Wiley & Sons Ltd.},

ISSN = {1467-8659},

DOI = {10.1111/cgf.14508}

}

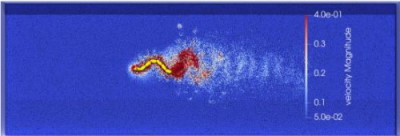

Gazebo Fluids: SPH-based simulation of fluid interaction with articulated rigid body dynamics

Physical simulation is an indispensable component of robotics simulation platforms that serves as the basis for a plethora of research directions. Looking strictly at robotics, the common characteristic of the most popular physics engines, such as ODE, DART, MuJoCo, bullet, SimBody, PhysX or RaiSim, is that they focus on solving articulated rigid-body problems with collisions and contacts, while paying less attention to other physical phenomena. This restriction limits the range of addressable simulation problems, rendering applications such as soft robotics, cloth simulation, simulation of viscoelastic materials, and fluid dynamics, especially surface swimming, infeasible. In this work, we present Gazebo Fluids, an open-source extension of the popular Gazebo robotics simulator that enables the interaction of articulated rigid body dynamics with particle-based fluid and deformable solid simulation. We implement fluid dynamics and highly viscous and elastic material simulation capabilities based on the Smoothed Particle Hydrodynamics method. We demonstrate the practical impact of this extension for previously infeasible application scenarios in a series of experiments, showcasing one of the first self-propelled robot swimming simulations with SPH in a robotics simulator.

@InProceedings{ABA22,

author = {Emmanouil Angelidis and Jan Bender and Jonathan Arreguit and Lars Gleim and Wei Wang and Cristian Axenie and Alois Knoll and Auke Ijspeert},

booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title = {Gazebo Fluids: SPH-based simulation of fluid interaction with articulated rigid body dynamics},

organization={IEEE},

year = {2022}

}

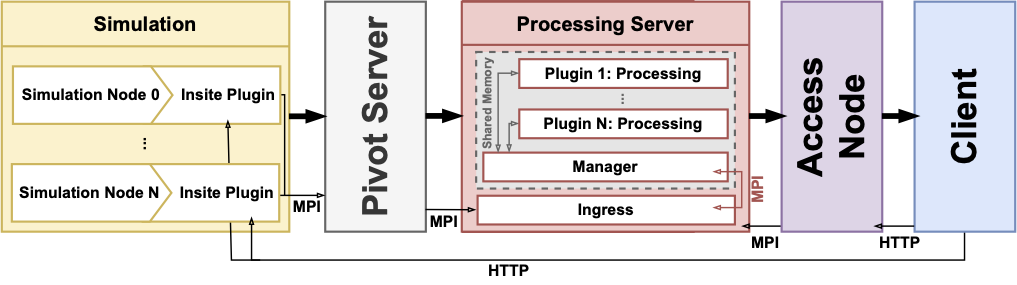

Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations

Neuronal network simulators are central to computational neuroscience, enabling the study of the nervous system through in-silico experiments. Through the utilization of high-performance computing resources, these simulators are capable of simulating increasingly complex and large networks of neurons today. Yet, the increased capabilities introduce a challenge to the analysis and visualization of the simulation results. In this work, we propose a pipeline for in-transit analysis and visualization of data produced by neuronal network simulators. The pipeline is able to couple with simulators, enabling querying, filtering, and merging data from multiple simulation instances. Additionally, the architecture allows user-defined plugins that perform analysis tasks in the pipeline. The pipeline applies traditional REST API paradigms and utilizes data formats such as JSON to provide easy access to the generated data for visualization and further processing. We present and assess the proposed architecture in the context of neuronal network simulations generated by the NEST simulator.

@InProceedings{10.1007/978-3-031-23220-6_20,

author="Kr{\"u}ger, Marcel and Oehrl, Simon and Demiralp, Ali C. and Spreizer, Sebastian and Bruchertseifer, Jens and Kuhlen, Torsten W. and Gerrits, Tim and Weyers, Benjamin",

editor="Anzt, Hartwig and Bienz, Amanda and Luszczek, Piotr and Baboulin, Marc",

title="Insite: A Pipeline Enabling In-Transit Visualization and Analysis for Neuronal Network Simulations",

booktitle="High Performance Computing. ISC High Performance 2022 International Workshops",

year="2022",

publisher="Springer International Publishing",

address="Cham",

pages="295--305",

isbn="978-3-031-23220-6"

}

Performance Assessment of Diffusive Load Balancing for Distributed Particle Advection

Particle advection is the approach for the extraction of integral curves from vector fields. Efficient parallelization of particle advection is a challenging task due to the problem of load imbalance, in which processes are assigned unequal workloads, causing some of them to idle while the others are still computing. Various approaches to load balancing exist, yet they all involve trade-offs such as increased inter-process communication, or the need for central control structures. In this work, we present two local load balancing methods for particle advection based on the family of diffusive load balancing. Each process has access to the blocks of its neighboring processes, which enables dynamic sharing of the particles based on a metric defined by the workload of the neighborhood. The approaches are assessed in terms of strong and weak scaling as well as load imbalance. We show that the methods reduce the total run-time of advection and are promising with regard to scaling as they operate locally on isolated process neighborhoods.
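As background on the family of methods mentioned above, the classic first-order diffusion scheme can be sketched in a few lines. This is a generic textbook-style illustration, not either of the two methods proposed in the paper: the function name `diffuse`, the diffusion factor `alpha`, and the treatment of load as a continuous quantity (rather than discrete particles) are all assumptions.

```python
def diffuse(loads, neighbors, alpha=0.25, steps=1):
    """Run `steps` rounds of first-order diffusive load balancing.

    loads:     work currently held by each process
    neighbors: symmetric adjacency list; neighbors[i] lists the processes
               that process i may exchange work with
    alpha:     fraction of each pairwise load difference moved per round
               (hypothetical parameter; too large a value causes oscillation)
    """
    loads = list(loads)
    for _ in range(steps):
        new = list(loads)
        for i, nbrs in enumerate(neighbors):
            for j in nbrs:
                # Work flows from the more loaded to the less loaded process.
                new[i] += alpha * (loads[j] - loads[i])
        loads = new
    return loads
```

Each process only needs the loads of its direct neighbors, so no central control structure is involved, and with a symmetric neighbor list the total amount of work is preserved.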

Pseudodynamic analysis of heart tube formation in the mouse reveals strong regional variability and early left–right asymmetry

Understanding organ morphogenesis requires a precise geometrical description of the tissues involved in the process. The high morphological variability in mammalian embryos hinders the quantitative analysis of organogenesis. In particular, the study of early heart development in mammals remains a challenging problem due to imaging limitations and complexity. Here, we provide a complete morphological description of mammalian heart tube formation based on detailed imaging of a temporally dense collection of mouse embryonic hearts. We develop strategies for morphometric staging and quantification of local morphological variations between specimens. We identify hot spots of regionalized variability and identify Nodal-controlled left–right asymmetry of the inflow tracts as the earliest signs of organ left–right asymmetry in the mammalian embryo. Finally, we generate a three-dimensional+t digital model that allows co-representation of data from different sources and provides a framework for the computer modeling of heart tube formation.

@article{esteban2022pseudodynamic,

author = {Esteban, Isaac and Schmidt, Patrick and Desgrange, Audrey and Raiola, Morena and Temi{\~n}o, Susana and Meilhac, Sigol\`{e}ne M. and Kobbelt, Leif and Torres, Miguel},

title = {Pseudo-dynamic analysis of heart tube formation in the mouse reveals strong regional variability and early left-right asymmetry},

year = {2022},

journal = {Nature Cardiovascular Research},

volume = 1,

number = 5

}

Astray: A Performance-Portable Geodesic Ray Tracer

Geodesic ray tracing is the numerical method to compute the motion of matter and radiation in spacetime. It enables visualization of the geometry of spacetime and is an important tool to study the gravitational fields in the presence of astrophysical phenomena such as black holes. Although the method is largely established, solving the geodesic equation remains a computationally demanding task. In this work, we present Astray, a high-performance geodesic ray tracing library capable of running on a single computer or a cluster of computers equipped with compute or graphics processing units. The library is able to visualize any spacetime given its metric tensor and contains optimized implementations of a wide range of spacetimes, including commonly studied ones such as Schwarzschild and Kerr. The performance of the library is evaluated on standard consumer hardware as well as a compute cluster through strong and weak scaling benchmarks. The results indicate that the system is capable of reaching interactive frame rates with increasing use of high-performance computing resources. We further introduce a user interface capable of remote rendering on a cluster for interactive visualization of spacetimes.

@inproceedings {10.2312:vmv.20221208,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Astray: A Performance-Portable Geodesic Ray Tracer}},

author = {Demiralp, Ali Can and Krüger, Marcel and Chao, Chu and Kuhlen, Torsten W. and Gerrits, Tim},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221208}

}

Studying the Effect of Tissue Properties on Radiofrequency Ablation by Visual Simulation Ensemble Analysis

Radiofrequency ablation is a minimally invasive, needle-based medical treatment to ablate tumors by heating due to absorption of radiofrequency electromagnetic waves. To ensure the complete target volume is destroyed, radiofrequency ablation simulations are required for treatment planning. However, the choice of tissue properties used as parameters during simulation induces a high uncertainty, as the tissue properties are strongly patient-dependent. To capture this uncertainty, a simulation ensemble can be created. Understanding the dependency of the simulation outcome on the input parameters helps to create improved simulation ensembles by focusing on the main sources of uncertainty and, thus, reducing computation costs. We present an interactive visual analysis tool for radiofrequency ablation simulation ensembles to target this objective. Spatial 2D and 3D visualizations allow for the comparison of ablation results of individual simulation runs and for the quantification of differences. Simulation runs can be interactively selected based on a parallel coordinates visualization of the parameter space. A 3D parameter space visualization allows for the analysis of the ablation outcome when altering a selected tissue property for the three tissue types involved in the ablation process. We discuss our approach with domain experts working on the development of new simulation models and demonstrate the usefulness of our approach for analyzing the influence of different tissue properties on radiofrequency ablations.

Honorable Mention Award!

@inproceedings {10.2312:vcbm.20221187,

booktitle = {Eurographics Workshop on Visual Computing for Biology and Medicine},

editor = {Renata G. Raidou and Björn Sommer and Torsten W. Kuhlen and Michael Krone and Thomas Schultz and Hsiang-Yun Wu},

title = {{Studying the Effect of Tissue Properties on Radiofrequency Ablation by Visual Simulation Ensemble Analysis}},

author = {Heimes, Karl and Evers, Marina and Gerrits, Tim and Gyawali, Sandeep and Sinden, David and Preusser, Tobias and Linsen, Lars},

year = {2022},

publisher = {The Eurographics Association},

ISSN = {2070-5786},

ISBN = {978-3-03868-177-9},

DOI = {10.2312/vcbm.20221187}

}

Multifaceted Visual Analysis of Oceanographic Simulation Ensemble Data

The analysis of multirun oceanographic simulation data imposes various challenges ranging from visualizing multifield spatio-temporal data over properly identifying and depicting vortices to visually representing uncertainties. We present an integrated interactive visual analysis tool that enables us to overcome these challenges by employing multiple coordinated views of different facets of the data at different levels of aggregation.

@ARTICLE {9495240,

author = {H. Rave and J. Fincke and S. Averkamp and B. Tangerding and L. P. Wehrenberg and T. Gerrits and K. Huesmann and S. Leistikow and L. Linsen},

journal = {IEEE Computer Graphics and Applications},

title = {Multifaceted Visual Analysis of Oceanographic Simulation Ensemble Data},

year = {2022},

volume = {42},

number = {04},

issn = {1558-1756},

pages = {80-88},

abstract = {The analysis of multirun oceanographic simulation data imposes various challenges ranging from visualizing multifield spatio-temporal data over properly identifying and depicting vortices to visually representing uncertainties. We present an integrated interactive visual analysis tool that enables us to overcome these challenges by employing multiple coordinated views of different facets of the data at different levels of aggregation.},

keywords = {visualization;data visualization;data models;uncertainty;salinity (geophysical);correlation;rendering (computer graphics)},

doi = {10.1109/MCG.2021.3098096},

publisher = {IEEE Computer Society},

address = {Los Alamitos, CA, USA},

month = {jul}

}

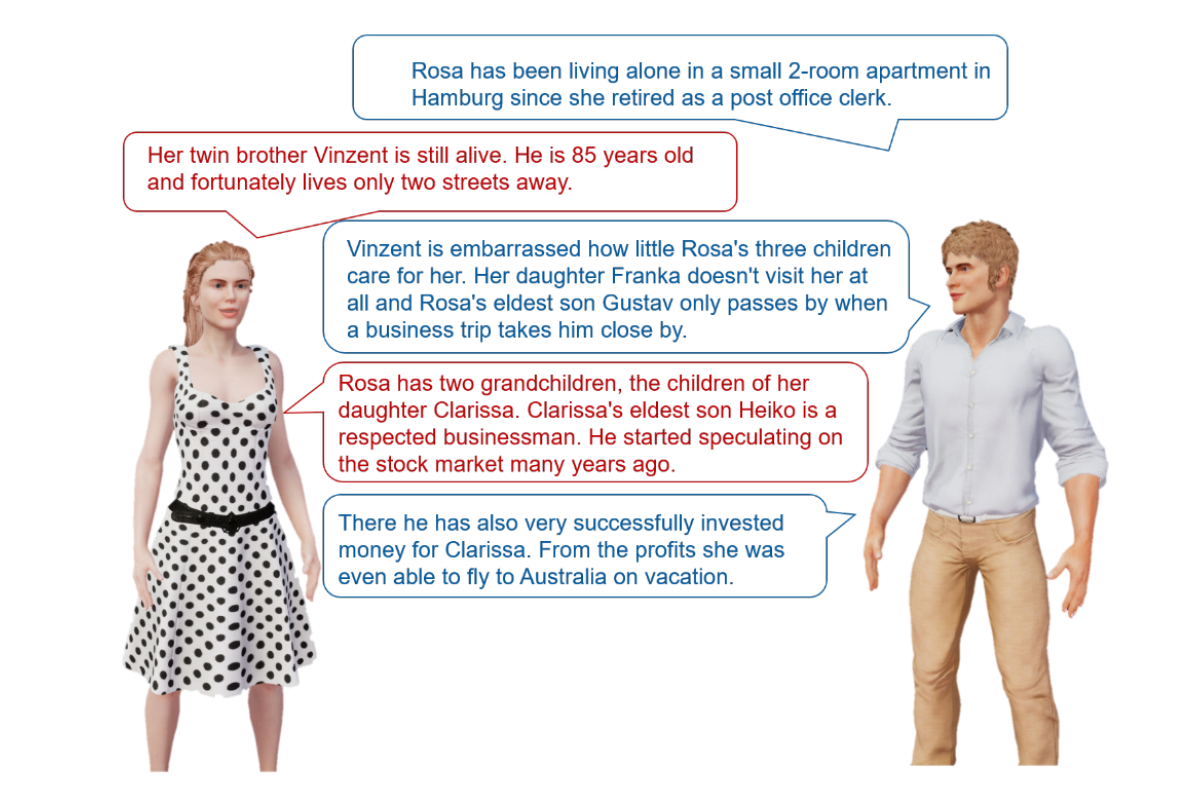

Verbal Interactions with Embodied Conversational Agents

Embedding virtual humans into virtual reality (VR) applications can fulfill diverse needs. These so-called embodied conversational agents (ECAs) can simply enliven the virtual environments, act for example as training partners, tutors, or therapists, or serve as advanced (emotional) user interfaces to control immersive systems. The latter case is of special interest since we as human users are particularly good at interpreting other humans. ECAs can enhance their verbal communication with non-verbal behavior and thereby make communication more efficient. For example, backchannels, like nodding or signaling not understanding, can be used to give feedback while a user is speaking. Furthermore, gestures, gaze, posture, proxemics, and many more non-verbal behaviors can be applied. Additionally, turn-taking can be streamlined when the ECA understands when to take over the turn and signals willingness to yield it once done. While many of these aspects are already under investigation from very different disciplines, operationalizing those into versatile, virtually embodied human-computer interfaces remains an open challenge.

To this end, I conducted several studies investigating acoustical effects of ECAs' speech, both with regard to the auralization in the virtual environment and the speech signals used. Furthermore, I want to find guidelines for expressing both turn-taking and various backchannels that make interactions with such advanced embodied interfaces more efficient and pleasant, both when the ECA is speaking and during listening. Additionally, measuring social presence (i.e., the feeling of being there and interacting with a "real" person) is an important instrument for this kind of research, since I want to facilitate exactly those subconscious processes of understanding other humans, which we as humans are particularly good at. Therefore, I want to investigate objective measures for social presence.

@inproceedings{Ehret2022a,

author = {Ehret, Jonathan},

booktitle = {Doctoral Consortium at the 22nd ACM International Conference on Intelligent Virtual Agents},

title = {{Doctoral Consortium : Verbal Interactions with Embodied Conversational Agents}},

year = {2022}

}

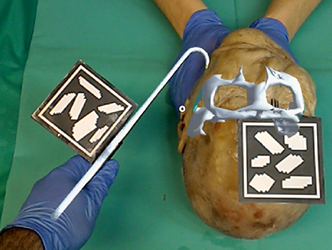

Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study

**Background:** Although nearly one-third of the world’s disease burden requires surgical care, only a small proportion of digital health applications are directly used in the surgical field. In the coming decades, the application of augmented reality (AR) with a new generation of optical-see-through head-mounted displays (OST-HMDs) like the HoloLens (Microsoft Corp) has the potential to bring digital health into the surgical field. However, for the application to be performed on a living person, proof of performance must first be provided due to regulatory requirements. In this regard, cadaver studies could provide initial evidence.

**Objective:** The goal of the research was to develop an open-source system for AR-based surgery on human cadavers using freely available technologies.

**Methods:** We tested our system using an easy-to-understand scenario in which fractured zygomatic arches of the face had to be repositioned with visual and auditory feedback to the investigators using a HoloLens. Results were verified with postoperative imaging and assessed in a blinded fashion by 2 investigators. The developed system and scenario were qualitatively evaluated by consensus interview and individual questionnaires.

**Results:** The development and implementation of our system was feasible and could be realized in the course of a cadaver study. The AR system was found helpful by the investigators for spatial perception in addition to the combination of visual as well as auditory feedback. The surgical end point could be determined metrically as well as by assessment.

**Conclusions:** The development and application of an AR-based surgical system using freely available technologies to perform OST-HMD–guided surgical procedures in cadavers is feasible. Cadaver studies are suitable for OST-HMD–guided interventions to measure a surgical end point and provide an initial data foundation for future clinical trials. The availability of free systems for researchers could be helpful for a possible translation process from digital health to AR-based surgery using OST-HMDs in the operating theater via cadaver studies.

@article{puladi2022augmented,

title={Augmented Reality-Based Surgery on the Human Cadaver Using a New Generation of Optical Head-Mounted Displays: Development and Feasibility Study},

author={Puladi, Behrus and Ooms, Mark and Bellgardt, Martin and Cesov, Mark and Lipprandt, Myriam and Raith, Stefan and Peters, Florian and M{\"o}hlhenrich, Stephan Christian and Prescher, Andreas and H{\"o}lzle, Frank and others},

journal={JMIR Serious Games},

volume={10},

number={2},

pages={e34781},

year={2022},

publisher={JMIR Publications Inc., Toronto, Canada}

}

Quantitative Mapping of Keratin Networks in 3D

Mechanobiology requires precise quantitative information on processes taking place in specific 3D microenvironments. Connecting the abundance of microscopical, molecular, biochemical, and cell mechanical data with defined topologies has turned out to be extremely difficult. Establishing such structural and functional 3D maps needed for biophysical modeling is a particular challenge for the cytoskeleton, which consists of long and interwoven filamentous polymers coordinating subcellular processes and interactions of cells with their environment. To date, useful tools are available for the segmentation and modeling of actin filaments and microtubules but comprehensive tools for the mapping of intermediate filament organization are still lacking. In this work, we describe a workflow to model and examine the complete 3D arrangement of the keratin intermediate filament cytoskeleton in canine, murine, and human epithelial cells both in vitro and in vivo. Numerical models are derived from confocal Airyscan high-resolution 3D imaging of fluorescence-tagged keratin filaments. They are interrogated and annotated at different length scales using different modes of visualization including immersive virtual reality. In this way, information is provided on network organization at the subcellular level including mesh arrangement, density, and isotropic configuration as well as details on filament morphology such as bundling, curvature, and orientation. We show that the comparison of these parameters helps to identify, in quantitative terms, similarities and differences of keratin network organization in epithelial cell types defining subcellular domains, notably basal, apical, lateral, and perinuclear systems. The described approach and the presented data are pivotal for generating mechanobiological models that can be experimentally tested.

@article {Windoffer2022,

article_type = {journal},

title = {{Quantitative Mapping of Keratin Networks in 3D}},

author = {Windoffer, Reinhard and Schwarz, Nicole and Yoon, Sungjun and Piskova, Teodora and Scholkemper, Michael and Stegmaier, Johannes and Bönsch, Andrea and Di Russo, Jacopo and Leube, Rudolf},

editor = {Coulombe, Pierre},

volume = 11,

year = 2022,

month = {feb},

pub_date = {2022-02-18},

pages = {e75894},

citation = {eLife 2022;11:e75894},

doi = {10.7554/eLife.75894},

url = {https://doi.org/10.7554/eLife.75894},

journal = {eLife},

issn = {2050-084X},

publisher = {eLife Sciences Publications, Ltd},

}

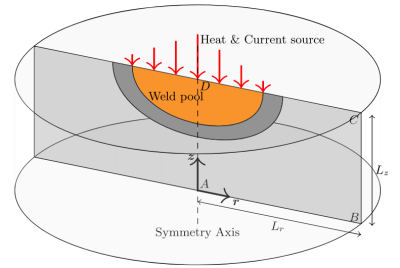

Quantitative Evaluation of SPH in TIG Spot Welding

While the application of the Smoothed Particle Hydrodynamics (SPH) method for the modeling of welding processes has become increasingly popular in recent years, little is yet known about the quantitative predictive capability of this method. We propose a novel SPH model for the simulation of the tungsten inert gas (TIG) spot welding process and conduct a thorough comparison between our SPH implementation and two Finite Element Method (FEM) based models. In order to be able to quantitatively compare the results of our SPH simulation method with grid based methods we additionally propose an improved particle to grid interpolation method based on linear least-squares with an optional hole-filling pass which accounts for missing particles. We show that SPH is able to yield excellent results, especially given the observed deviations between the investigated FEM methods and as such, we validate the accuracy of the method for an industrially relevant engineering application.

@Article{JSS+22,

author = {Stefan Rhys Jeske and Marek Sebastian Simon and Oleksii Semenov and Jan Kruska and Oleg Mokrov and Rahul Sharma and Uwe Reisgen and Jan Bender},

journal = {Computational Particle Mechanics},

title = {Quantitative evaluation of {SPH} in {TIG} spot welding},

year = {2022},

month = {apr},

doi = {10.1007/s40571-022-00465-x},

publisher = {Springer Science and Business Media {LLC}},

}

Application and Benchmark of SPH for Modeling the Impact in Thermal Spraying

The properties of a thermally sprayed coating, such as its durability or thermal conductivity, depend on its microstructure, which is in turn directly related to the particle impact process. To simulate this process we present a 3D Smoothed Particle Hydrodynamics (SPH) model, which represents the molten droplet as an incompressible fluid, while a semi-implicit Enthalpy-Porosity method is applied for modeling the phase change during solidification. In addition, we present an implicit correction for SPH simulations, based on well-known approaches, from which we can observe improved performance and simulation stability. We apply our SPH method to the impact and solidification of Al2O3 droplets onto a substrate and perform a comprehensive quantitative comparison of our method with the commercial software Ansys Fluent using the Volume of Fluid (VOF) approach, while taking identical physical effects into consideration. The results are evaluated in depth and we discuss the applicability of either method for the simulation of thermal spray deposition. We also evaluate the droplet spread factor given varying initial droplet diameters and compare these results with an analytic expression from previous literature. We show that SPH is an excellent method for solving this free surface problem accurately and efficiently.

@Article{JBB+22,

author = {Stefan Rhys Jeske and Jan Bender and Kirsten Bobzin and Hendrik Heinemann and Kevin Jasutyn and Marek Simon and Oleg Mokrov and Rahul Sharma and Uwe Reisgen},

journal = {Computational Particle Mechanics},

title = {Application and benchmark of {SPH} for modeling the impact in thermal spraying},

year = {2022},

month = {jan},

doi = {10.1007/s40571-022-00459-9},

publisher = {Springer Science and Business Media {LLC}},

}

MODE: A modern ordinary differential equation solver for C++ and CUDA

Ordinary differential equations (ODE) are used to describe the evolution of one or more dependent variables using their derivatives with respect to an independent variable. They arise in various branches of natural sciences and engineering. We present a modern, efficient, performance-oriented ODE solving library built in C++20. The library implements a broad range of multi-stage and multi-step methods, which are generated at compile-time from their tableau representations, avoiding runtime overhead. The solvers can be instantiated and iterated on the CPU and the GPU using identical code. This work introduces the prominent features of the library with examples.
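To illustrate what "generated from a tableau representation" means for a multi-stage method, the following minimal sketch drives one explicit Runge-Kutta step from a Butcher tableau. It is written in Python for brevity and is not the library's C++20 API; the names `rk_step`, `integrate`, and `RK4_A`/`RK4_B`/`RK4_C` are hypothetical, and the tableau is interpreted at runtime here, whereas the library is described as resolving it at compile time.

```python
from typing import Callable, Sequence

# Butcher tableau of the classical fourth-order Runge-Kutta method.
# Row i of A holds the coefficients applied to the earlier stages k_0..k_{i-1}.
RK4_A = [
    [],
    [0.5],
    [0.0, 0.5],
    [0.0, 0.0, 1.0],
]
RK4_B = [1 / 6, 1 / 3, 1 / 3, 1 / 6]  # weights of the stages in the final sum
RK4_C = [0.0, 0.5, 0.5, 1.0]          # time offsets of the stages


def rk_step(
    f: Callable[[float, float], float],
    t: float,
    y: float,
    h: float,
    a: Sequence[Sequence[float]],
    b: Sequence[float],
    c: Sequence[float],
) -> float:
    """Advance the scalar ODE y' = f(t, y) by one explicit step of size h."""
    k = []
    for i in range(len(b)):
        # Each stage only uses stages computed before it (explicit method).
        y_stage = y + h * sum(a_ij * k_j for a_ij, k_j in zip(a[i], k))
        k.append(f(t + c[i] * h, y_stage))
    return y + h * sum(b_i * k_i for b_i, k_i in zip(b, k))


def integrate(f, t0, y0, t_end, steps, a=RK4_A, b=RK4_B, c=RK4_C):
    """Take `steps` equally sized steps from t0 to t_end and return y(t_end)."""
    h = (t_end - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y = rk_step(f, t, y, h, a, b, c)
        t += h
    return y
```

Swapping in a different tableau changes the method without touching the stepping code; for example, `a=[[]], b=[1.0], c=[0.0]` yields the forward Euler method.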

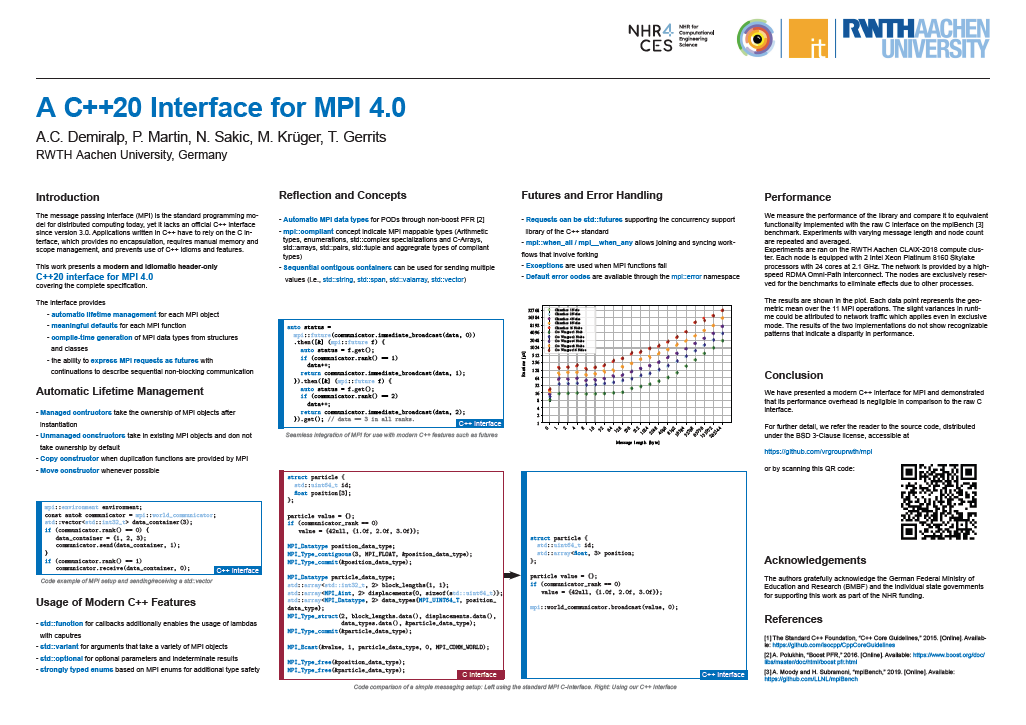

Poster: A C++20 Interface for MPI 4.0

We present a modern C++20 interface for MPI 4.0. The interface utilizes recent language features to ease development of MPI applications. An aggregate reflection system enables generation of MPI data types from user-defined classes automatically. Immediate and persistent operations are mapped to futures, which can be chained to describe sequential asynchronous operations and task graphs in a concise way. This work introduces the prominent features of the interface with examples. We further measure its performance overhead with respect to the raw C interface.

@misc{demiralp2023c20,

title={A C++20 Interface for MPI 4.0},

author={Ali Can Demiralp and Philipp Martin and Niko Sakic and Marcel Krüger and Tim Gerrits},

year={2023},

eprint={2306.11840},

archivePrefix={arXiv},

primaryClass={cs.DC}

}

Poster: Measuring Listening Effort in Adverse Listening Conditions: Testing Two Dual Task Paradigms for Upcoming Audiovisual Virtual Reality Experiments

Listening to and remembering the content of conversations is a highly demanding task from a cognitive-psychological perspective. Particularly in adverse listening conditions, cognitive resources available for higher-level processing of speech are reduced since increased listening effort consumes more of the overall available cognitive resources. Applying audiovisual Virtual Reality (VR) environments to listening research could be highly beneficial for exploring cognitive performance for overheard content. In this study, we therefore evaluated two (secondary) tasks concerning their suitability for measuring cognitive spare capacity as an indicator of listening effort in audiovisual VR environments. In two experiments, participants were administered a dual-task paradigm including a listening (primary) task in which a conversation between two talkers is presented, and an unrelated secondary task each. Both experiments were carried out without additional background noise and under continuous noise. We discuss our results in terms of guidance for future experimental studies, especially in audiovisual VR environments.

@InProceedings{ Mohanathasan2022ESCoP,

author = {Chinthusa Mohanathasan and Jonathan Ehret and Cosima A. Ermert and Janina Fels and Torsten Wolfgang Kuhlen and Sabine J. Schlittmeier},

booktitle = {22. Conference of the European Society for Cognitive Psychology, Lille, France, ESCoP},

title = {Measuring Listening Effort in Adverse Listening Conditions: Testing Two Dual Task Paradigms for Upcoming Audiovisual Virtual Reality Experiments},

year = {2022},

}

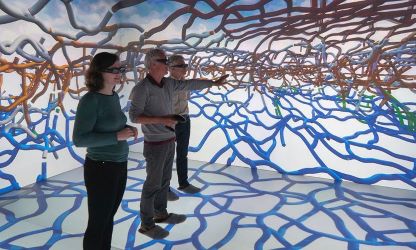

The aixCAVE at RWTH Aachen University

At a large technical university like RWTH Aachen, there is enormous potential to use VR as a tool in research. In contrast to applications from the entertainment sector, many scientific application scenarios - for example, a 3D analysis of result data from simulated flows - depend not only on a high degree of immersion, but also on a high resolution and excellent image quality of the display. In addition, the visual analysis of scientific data is often carried out and discussed in smaller teams. For these reasons, but also for simple ergonomic aspects (comfort, cybersickness), many technical and scientific VR applications cannot simply be implemented on the basis of head-mounted displays. To this day, in VR labs of universities and research institutions, it is therefore desirable to install immersive large-screen rear projection systems (CAVEs) in order to adequately support the scientists. Due to the high investment costs, such systems are used at larger universities such as Aachen, Cologne, Munich, or Stuttgart, often operated by the computing centers as a central infrastructure accessible to all scientists at the university.

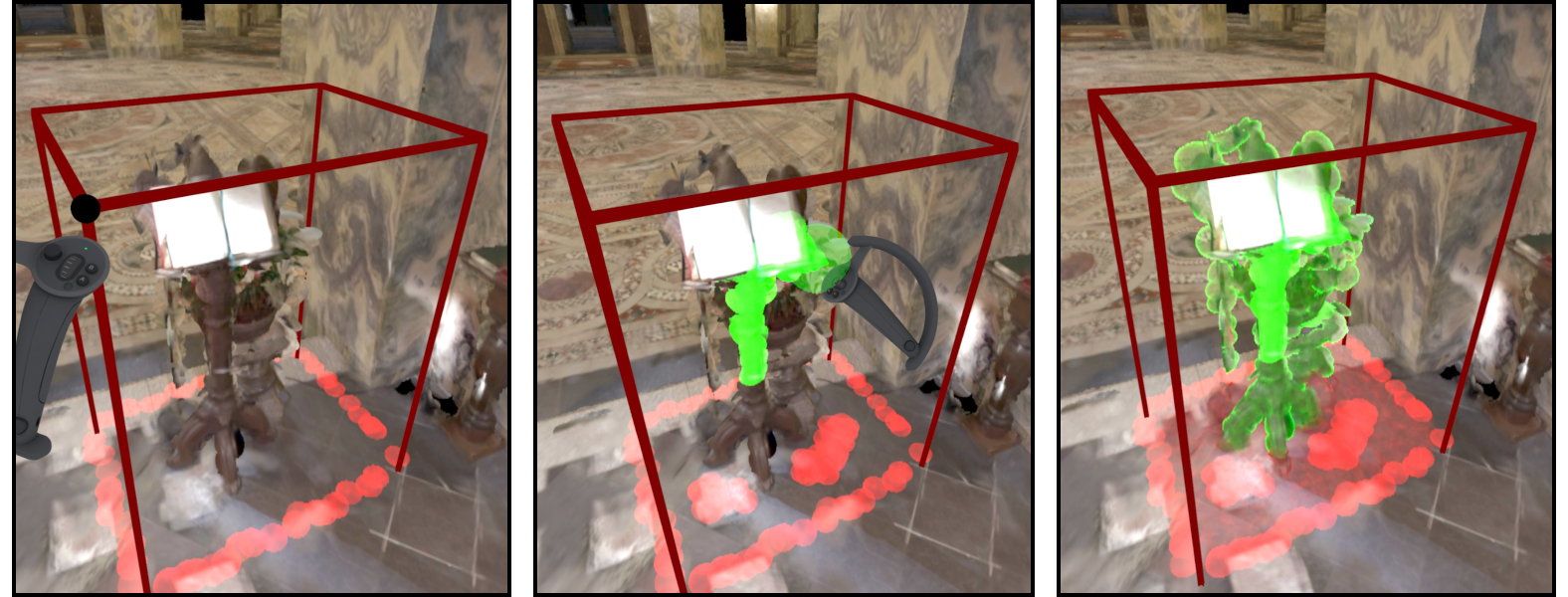

Interactive Segmentation of Textured Point Clouds

We present a method for the interactive segmentation of textured 3D point clouds. The problem is formulated as a minimum graph cut on a k-nearest neighbor graph and leverages the rich information contained in high-resolution photographs as the discriminative feature. We demonstrate that the achievable segmentation accuracy is significantly improved compared to using an average color per point as in prior work. The method is designed to work efficiently on large datasets and yields results at interactive rates. This way, an interactive workflow can be realized in an immersive virtual environment, which supports the segmentation task by improved depth perception and the use of tracked 3D input devices. Our method enables the fast and convenient creation of high-quality segmentations of textured point clouds.

@inproceedings {10.2312:vmv.20221200,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Interactive Segmentation of Textured Point Clouds}},

author = {Schmitz, Patric and Suder, Sebastian and Schuster, Kersten and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221200}

}

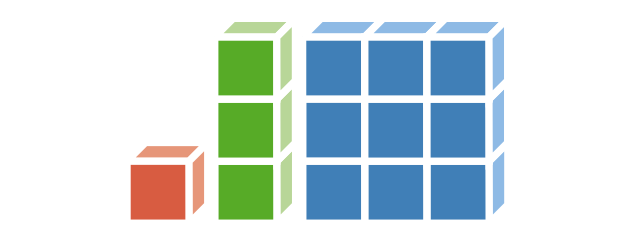

M2F3D: Mask2Former for 3D Instance Segmentation

In this work, we show that the top-performing Mask2Former approach for image-based segmentation tasks works surprisingly well when adapted to the 3D scene understanding domain. Current 3D semantic instance segmentation methods rely largely on predicting centers followed by clustering approaches, and little progress has been made in applying transformer-based approaches to this task. We show that with small modifications to the Mask2Former approach for 2D, we can create a 3D instance segmentation approach without the need for highly 3D-specific components or carefully hand-engineered hyperparameters. Initial experiments with our M2F3D model on the ScanNet benchmark are very promising and set a new state of the art on ScanNet test (+0.4 mAP50).

Please see our extended work Mask3D: Mask Transformer for 3D Instance Segmentation accepted at ICRA 2023.

Automatic region-growing system for the segmentation of large point clouds

This article describes a complete unsupervised system for the segmentation of massive 3D point clouds. Our system supplies the missing components needed to go from 99% automation to 100% automation for the construction industry. It scales up to billions of 3D points and targets a generic low-level grouping of planar regions usable by a wide range of applications. Furthermore, we introduce a hierarchical multi-level segment definition to cope with potential variations in high-level object definitions. The approach first leverages planar predominance in scenes through a normal-based region growing. Then, for usability and simplicity, we designed an automatic heuristic to determine three RANSAC-inspired parameters without user supervision. These are the distance threshold for the region growing, the threshold for the minimum number of points needed to form a valid planar region, and the decision criterion for adding points to a region. Our experiments are conducted on 3D scans of complex buildings to test the robustness of the “one-click” method in varying scenarios. Labelled and instantiated point clouds from different sensors and platforms (depth sensor, terrestrial laser scanner, hand-held laser scanner, mobile mapping system), in different environments (indoor, outdoor, buildings) and with different objects of interest (AEC-related, BIM-related, navigation-related) are provided as a new extensive test-bench. The current implementation processes ten million points per minute on a single-threaded CPU configuration. Moreover, the resulting segments are tested for the high-level task of semantic segmentation over 14 classes, achieving an F1-score of 90+ averaged over all datasets while reducing the training phase to a fraction of that of state-of-the-art point-based deep learning methods. We provide this baseline along with six new open-access datasets with 300+ million hand-labelled and instantiated 3D points at: https://www.graphics.rwth-aachen.de/project/45/.
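As a rough illustration of normal-based region growing with the three kinds of parameters named above, the sketch below grows regions over a small point set. It is not the authors' implementation: the function name `grow_regions`, the brute-force neighbor search, and the use of a normal-angle threshold as the "decision criterion" are assumptions made for this example, and the parameters are passed by hand rather than determined by the article's automatic heuristic.

```python
import math
from collections import deque

REJECTED = -1  # label for points whose region stayed below min_points


def grow_regions(points, normals, dist_thresh, angle_thresh_deg, min_points):
    """Group points into planar regions by growing from seed points.

    A neighbor joins a region if it lies within dist_thresh of a region
    point and its unit normal deviates by at most angle_thresh_deg from
    that point's normal. Regions with fewer than min_points are rejected.
    """
    cos_thresh = math.cos(math.radians(angle_thresh_deg))
    labels = [None] * len(points)  # None means "not visited yet"
    regions = []
    for seed in range(len(points)):
        if labels[seed] is not None:
            continue
        region_id = len(regions)
        labels[seed] = region_id
        region = [seed]
        queue = deque([seed])
        while queue:
            cur = queue.popleft()
            # Brute-force neighbor search; a spatial index would replace
            # this loop for large point clouds.
            for j in range(len(points)):
                if labels[j] is not None:
                    continue
                if math.dist(points[cur], points[j]) > dist_thresh:
                    continue
                dot = abs(sum(a * b for a, b in zip(normals[cur], normals[j])))
                if dot >= cos_thresh:
                    labels[j] = region_id
                    region.append(j)
                    queue.append(j)
        if len(region) >= min_points:
            regions.append(region)
        else:
            for i in region:
                labels[i] = REJECTED
    return regions, labels
```

With a floor patch and an adjoining wall patch as input, the two surfaces end up in separate regions even where their points are within the distance threshold of each other, because their normals disagree.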

@article{POUX2022104250,

title = {Automatic region-growing system for the segmentation of large point clouds},

journal = {Automation in Construction},

volume = {138},

pages = {104250},

year = {2022},

issn = {0926-5805},

doi = {https://doi.org/10.1016/j.autcon.2022.104250},

url = {https://www.sciencedirect.com/science/article/pii/S0926580522001236},

author = {F. Poux and C. Mattes and Z. Selman and L. Kobbelt},

keywords = {3D point cloud, Segmentation, Region-growing, RANSAC, Unsupervised clustering}

}

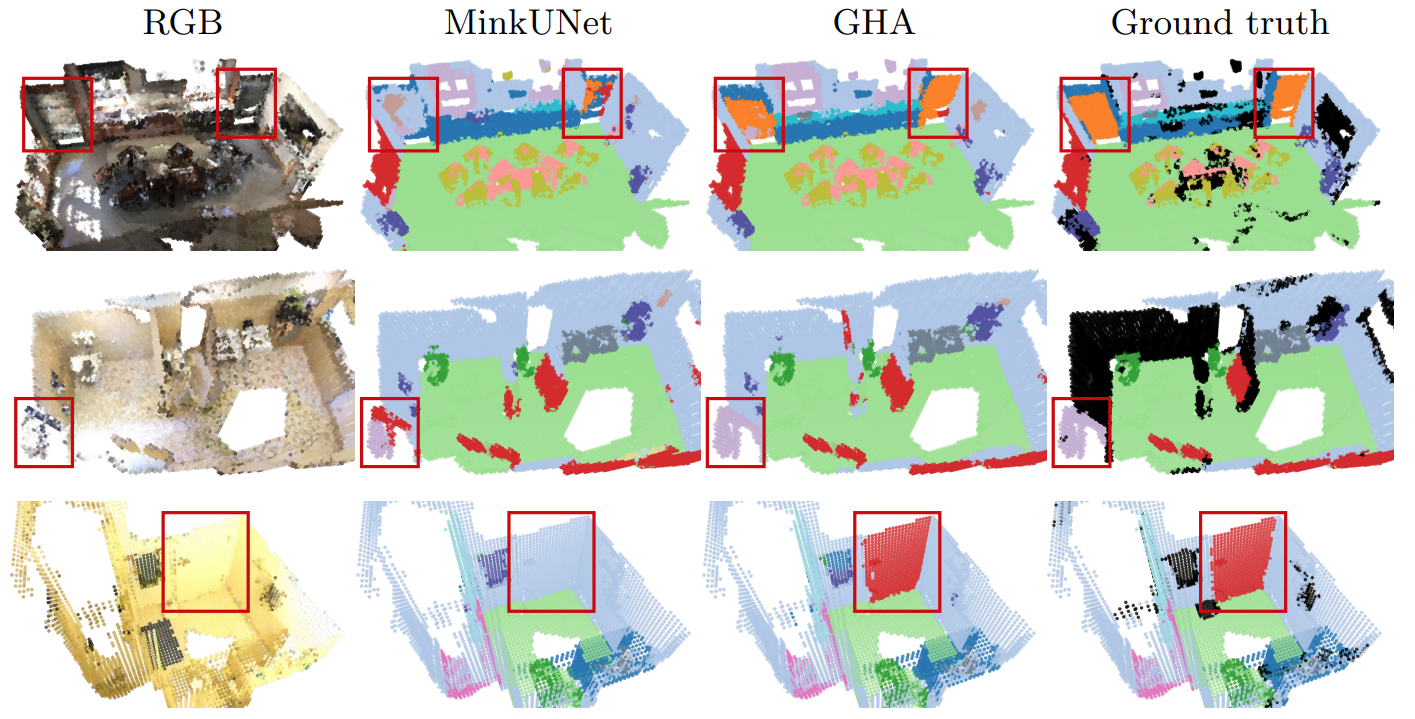

Global Hierarchical Attention for 3D Point Cloud Analysis

We propose a new attention mechanism, called Global Hierarchical Attention (GHA), for 3D point cloud analysis. GHA approximates the regular global dot-product attention via a series of coarsening and interpolation operations over multiple hierarchy levels. The advantage of GHA is two-fold. First, it has linear complexity with respect to the number of points, enabling the processing of large point clouds. Second, GHA inherently possesses the inductive bias to focus on spatially close points, while retaining the global connectivity among all points. Combined with a feedforward network, GHA can be inserted into many existing network architectures. We experiment with multiple baseline networks and show that adding GHA consistently improves performance across different tasks and datasets. For the task of semantic segmentation, GHA gives a +1.7% mIoU increase to the MinkowskiEngine baseline on ScanNet. For the 3D object detection task, GHA improves the CenterPoint baseline by +0.5% mAP on the nuScenes dataset, and the 3DETR baseline by +2.1% mAP25 and +1.5% mAP50 on ScanNet.

Pedestrian-Robot Interactions on Autonomous Crowd Navigation: Reactive Control Methods and Evaluation Metrics

Autonomous navigation in highly populated areas remains a challenging task for robots because of the difficulty in guaranteeing safe interactions with pedestrians in unstructured situations. In this work, we present a crowd navigation control framework that delivers continuous obstacle avoidance and post-contact control evaluated on an autonomous personal mobility vehicle. We propose evaluation metrics that account for efficiency, controller response, and crowd interactions in natural crowds. We report the results of over 110 trials in different crowd types: sparse, flows, and mixed traffic, with low- (< 0.15 ppsm), mid- (< 0.65 ppsm), and high- (< 1 ppsm) pedestrian densities. We present comparative results between two low-level obstacle avoidance methods and a baseline of shared control. Results show a 10% drop in relative time to goal on the highest density tests, and no decrease in any other efficiency metric. Moreover, autonomous navigation proved to be comparable to shared-control navigation, with a lower relative jerk and significantly higher fluency in commands, indicating high compatibility with the crowd. We conclude that the reactive controller fulfills a necessary task of fast and continuous adaptation to crowd navigation, and it should be coupled with high-level planners for environmental and situational awareness.

Differentiable Soft-Masked Attention

Transformers have become prevalent in computer vision due to their performance and flexibility in modelling complex operations. Of particular significance is the ‘cross-attention’ operation, which allows a vector representation (e.g. of an object in an image) to be learned by ‘attending’ to an arbitrarily sized set of input features. Recently, ‘Masked Attention’ was proposed in which a given object representation only attends to those image pixel features for which the segmentation mask of that object is active. This specialization of attention proved beneficial for various image and video segmentation tasks. In this paper, we propose another specialization of attention which enables attending over ‘soft-masks’ (those with continuous mask probabilities instead of binary values), and is also differentiable through these mask probabilities, thus allowing the mask used for attention to be learned within the network without requiring direct loss supervision. This can be useful for several applications. Specifically, we employ our ‘Differentiable Soft-Masked Attention’ for the task of Weakly Supervised Video Object Segmentation (VOS), where we develop a transformer-based network for VOS which only requires a single annotated image frame for training, but can also benefit from cycle consistency training on a video with just one annotated frame. Although there is no loss for masks in unlabeled frames, the network is still able to segment objects in those frames due to our novel attention formulation.
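To make the idea of attending over a soft mask concrete, the sketch below computes single-query cross-attention in which each attention weight is scaled by a continuous mask probability before normalization. This is equivalent to adding the logarithm of the mask probability to the attention logit, which is one plausible formulation and is assumed here for illustration; the paper's exact formulation may differ. The function name `soft_masked_attention` and its argument layout are hypothetical.

```python
import math


def soft_masked_attention(query, keys, values, mask_probs):
    """Single-query attention whose weights are modulated by a soft mask.

    weights_i is proportional to mask_probs[i] * exp(q . k_i / sqrt(d)),
    i.e. softmax(scores + log(mask_probs)). The weights are a smooth
    function of mask_probs, so gradients can flow back into the mask.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    unnormalized = [m * math.exp(s - top) for m, s in zip(mask_probs, scores)]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("mask_probs must not suppress every input feature")
    weights = [u / total for u in unnormalized]
    output = [
        sum(w * v[j] for w, v in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return output, weights
```

A mask of all ones reduces this to ordinary softmax attention, and a binary mask reproduces hard masked attention, so the soft version sits between the two.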

Late-Breaking Report: Natural Turn-Taking with Embodied Conversational Agents

Adding embodied conversational agents (ECAs) to immersive virtual environments (IVEs) becomes relevant in various application scenarios, for example, conversational systems. For successful interactions with these ECAs, they have to behave naturally, i.e. in the way a user would expect a real human to behave. Teaming up with acousticians and psychologists, we strive to explore turn-taking in VR-based interactions between either two ECAs or an ECA and a human user.

Late-Breaking Report: An Embodied Conversational Agent Supporting Scene Exploration by Switching between Guiding and Accompanying

In this late-breaking report, we first motivate the need for an embodied conversational agent (ECA) that combines characteristics of a virtual tour guide and a knowledgeable companion in order to allow users an interactive and adaptable, yet structured, exploration of an unknown immersive architectural environment. Second, we roughly outline our proposed ECA’s behavioral design, followed by a teaser on the planned user study.

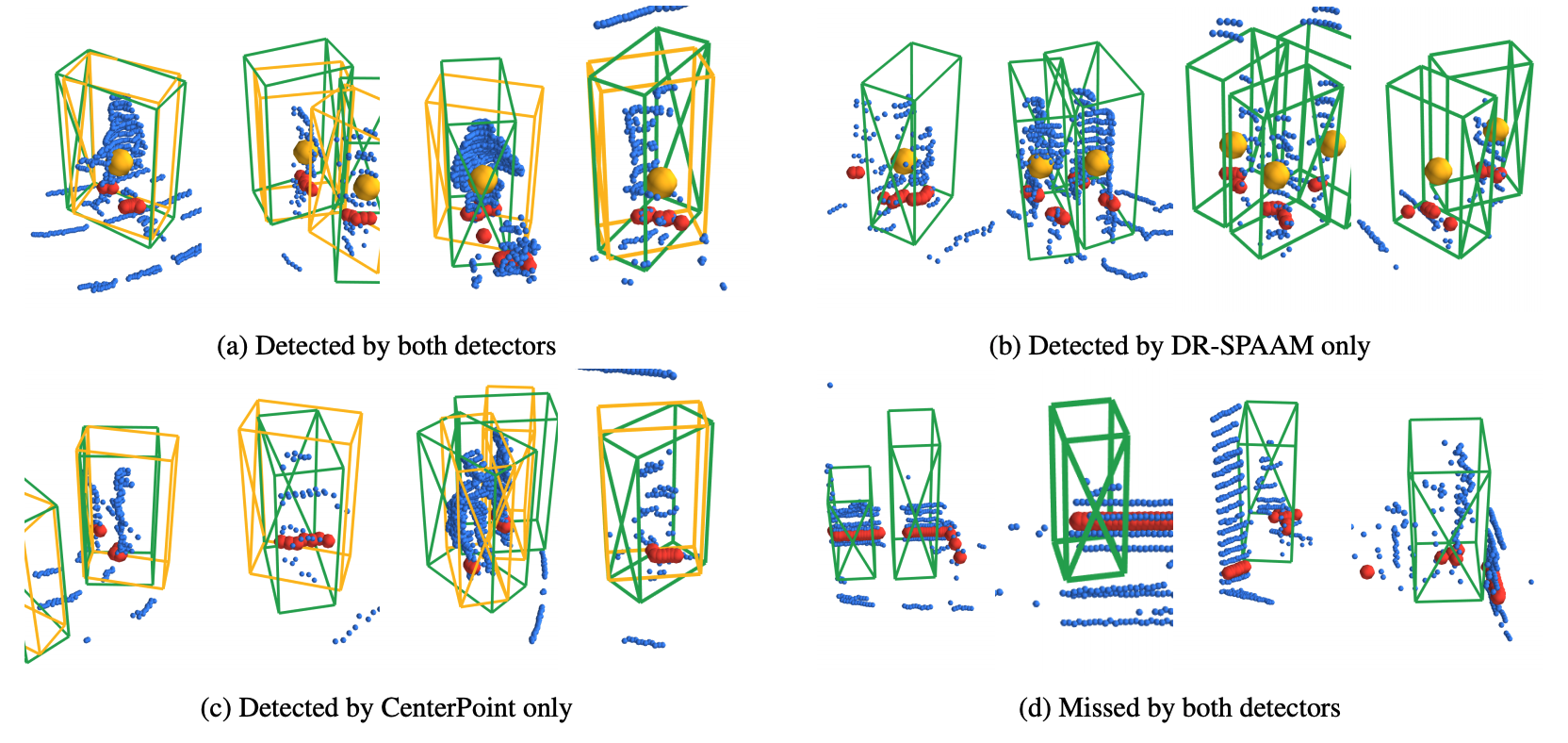

2D vs. 3D LiDAR-based Person Detection on Mobile Robots

Person detection is a crucial task for mobile robots navigating in human-populated environments. LiDAR sensors are promising for this task, thanks to their accurate depth measurements and large field of view. Two types of LiDAR sensors exist: the 2D LiDAR sensors, which scan a single plane, and the 3D LiDAR sensors, which scan multiple planes, thus forming a volume. How do they compare for the task of person detection? To answer this, we conduct a series of experiments, using the public, large-scale JackRabbot dataset and the state-of-the-art 2D and 3D LiDAR-based person detectors (DR-SPAAM and CenterPoint respectively). Our experiments cover multiple aspects, ranging from the basic performance and speed comparison to more detailed analysis of localization accuracy and robustness against distance and scene clutter. The insights from these experiments highlight the strengths and weaknesses of 2D and 3D LiDAR sensors as sources for person detection, and are especially valuable for designing mobile robots that will operate in close proximity to surrounding humans (e.g. service or social robots).

Previous Year (2021)