Publications

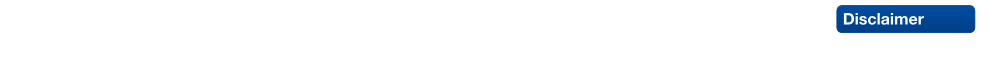

Tracking People and Their Objects

Current pedestrian tracking approaches ignore important aspects of human behavior. Humans are not moving independently, but they closely interact with their environment, which includes not only other persons, but also different scene objects. Typical everyday scenarios include people moving in groups, pushing child strollers, or pulling luggage. In this paper, we propose a probabilistic approach for classifying such person-object interactions, associating objects to persons, and predicting how the interaction will most likely continue. Our approach relies on stereo depth information in order to track all scene objects in 3D, while simultaneously building up their 3D shape models. These models and their relative spatial arrangement are then fed into a probabilistic graphical model which jointly infers pairwise interactions and object classes. The inferred interactions can then be used to support tracking by recovering lost object tracks. We evaluate our approach on a novel dataset containing more than 15,000 frames of person-object interactions in 325 video sequences and demonstrate good performance in challenging real-world scenarios.

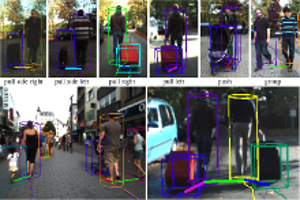

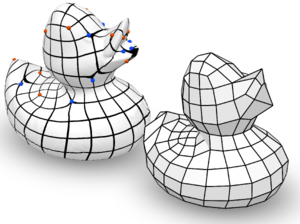

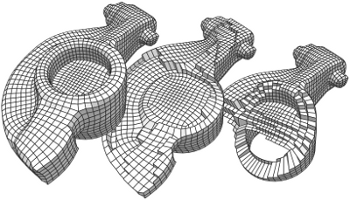

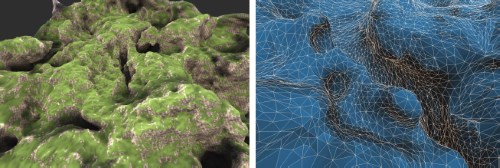

Integer-Grid Maps for Reliable Quad Meshing

Quadrilateral remeshing approaches based on global parametrization enable many desirable mesh properties. Two of the most important ones are (1) high regularity due to explicit control over irregular vertices and (2) smooth distribution of distortion achieved by convex variational formulations. Apart from these strengths, state-of-the-art techniques suffer from limited reliability on real-world input data, i.e. the determined map might have degeneracies like (local) non-injectivities and consequently often cannot be used directly to generate a quadrilateral mesh. In this paper we propose a novel convex Mixed-Integer Quadratic Programming (MIQP) formulation which ensures by construction that the resulting map is within the class of so called Integer-Grid Maps that are guaranteed to imply a quad mesh. In order to overcome the NP-hardness of MIQP and to be able to remesh typical input geometries in acceptable time we propose two additional problem specific optimizations: a complexity reduction algorithm and singularity separating conditions. While the former decouples the dimension of the MIQP search space from the input complexity of the triangle mesh and thus is able to dramatically speed up the computation without inducing inaccuracies, the latter improves the continuous relaxation, which is crucial for the success of modern MIQP optimizers. Our experiments show that the reliability of the resulting algorithm does not only annihilate the main drawback of parametrization based quad-remeshing but moreover enables the global search for high-quality coarse quad layouts – a difficult task solely tackled by greedy methodologies before.

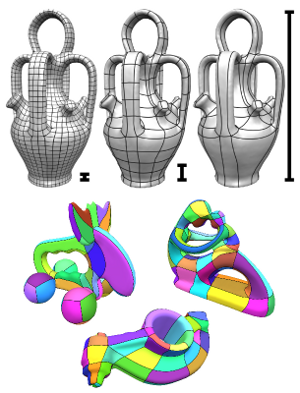

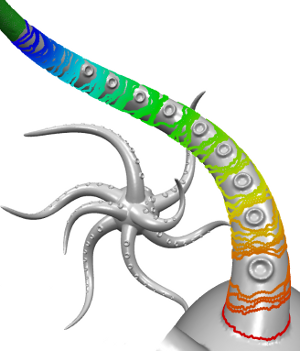

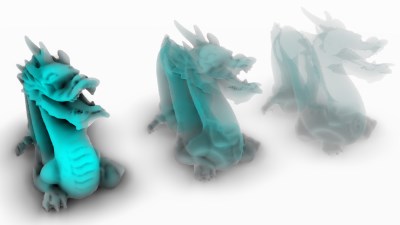

Multi-View Normal Field Integration for 3D Reconstruction of Mirroring Objects

In this paper, we present a novel, robust multi-view normal field integration technique for reconstructing the full 3D shape of mirroring objects. We employ a turntable-based setup with several cameras and displays. These are used to display illumination patterns which are reflected by the object surface. The pattern information observed in the cameras enables the calculation of individual volumetric normal fields for each combination of camera, display and turntable angle. As the pattern information might be blurred depending on the surface curvature or due to non-perfect mirroring surface characteristics, we locally adapt the decoding to the finest still resolvable pattern resolution. In complex real-world scenarios, the normal fields contain regions without observations due to occlusions and outliers due to interreflections and noise. Therefore, a robust reconstruction using only normal information is challenging. Via a non-parametric clustering of normal hypotheses derived for each point in the scene, we obtain both the most likely local surface normal and a local surface consistency estimate. This information is utilized in an iterative min-cut based variational approach to reconstruct the surface geometry.

Random Forests of Local Experts for Pedestrian Detection

Pedestrian detection is one of the most challenging tasks in computer vision, and has received a lot of attention in recent years. Recently, some authors have shown the advantages of using combinations of part/patch-based detectors in order to cope with the large variability of poses and the existence of partial occlusions. In this paper, we propose a pedestrian detection method that efficiently combines multiple local experts by means of a Random Forest ensemble. The proposed method works with rich block-based representations such as HOG and LBP, in such a way that the same features are reused by the multiple local experts, so that no extra computational cost is needed with respect to a holistic method. Furthermore, we demonstrate how to integrate the proposed approach with a cascaded architecture in order to achieve not only high accuracy but also an acceptable efficiency. In particular, the resulting detector operates at five frames per second using a laptop machine. We tested the proposed method with well-known challenging datasets such as Caltech, ETH, Daimler, and INRIA. The method proposed in this work consistently ranks among the top performers in all the datasets, being either the best method or having a small difference with the best one.

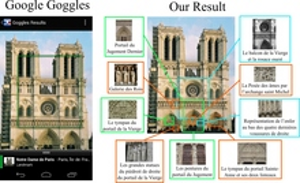

Discovering Details and Scene Structure with Hierarchical Iconoid Shift

Current landmark recognition engines are typically aimed at recognizing building-scale landmarks, but miss interesting details like portals, statues or windows. This is because they use a flat clustering that summarizes all photos of a building facade in one cluster. We propose Hierarchical Iconoid Shift, a novel landmark clustering algorithm capable of discovering such details. Instead of just a collection of clusters, the output of HIS is a set of dendrograms describing the detail hierarchy of a landmark. HIS is based on the novel Hierarchical Medoid Shift clustering algorithm that performs a continuous mode search over the complete scale space. HMS is completely parameter-free, has the same complexity as Medoid Shift and is easy to parallelize. We evaluate HIS on 800k images of 34 landmarks and show that it can extract an often surprising amount of detail and structure that can be applied, e.g., to provide a mobile user with more detailed information on a landmark or even to extend the landmark’s Wikipedia article.

QEx: Robust Quad Mesh Extraction

The most popular and actively researched class of quad remeshing techniques is the family of parametrization based quad meshing methods. They all strive to generate an integer-grid map, i.e. a parametrization of the input surface into $\mathbb{R}^2$ such that the canonical grid of integer iso-lines forms a quad mesh when mapped back onto the surface in $\mathbb{R}^3$. An essential, albeit broadly neglected aspect of these methods is the quad extraction step, i.e. the materialization of an actual quad mesh from the mere “quad texture”. Quad (mesh) extraction is often believed to be a trivial matter but quite the opposite is true: Numerous special cases, ambiguities induced by numerical inaccuracies and limited solver precision, as well as imperfections in the maps produced by most methods (unless costly countermeasures are taken) pose significant challenges to the quad extractor. We present a method to sanitize a provided parametrization such that it becomes numerically consistent even in a limited precision floating point representation. Based on this we are able to provide a comprehensive and sound description of how to perform quad extraction robustly and without the need for any complex tolerance thresholds or disambiguation rules. On top of that we develop a novel strategy to cope with common local fold-overs in the parametrization. This allows our method, dubbed QEx, to generate all-quadrilateral meshes where otherwise holes, non-quad polygons or no output at all would have been produced. We thus enable the practical use of an entire class of maps that was previously considered defective. Since state of the art quad meshing methods spend a significant share of their run time solely to prevent local fold-overs, using our method it is now possible to obtain quad meshes significantly quicker than before. We also provide libQEx, an open source C++ reference implementation of our method and thus significantly lower the bar to enter the field of quad meshing.

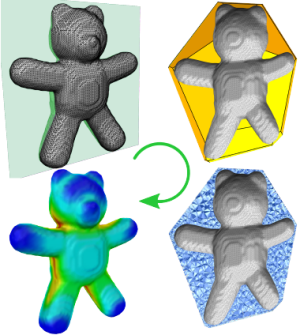

Efficient Computation of Shortest Path-Concavity for 3D Meshes

In the context of shape segmentation and retrieval, object-wide distributions of measures are needed to accurately evaluate and compare local regions of shapes. Lien et al. proposed two point-wise concavity measures in the context of Approximate Convex Decompositions of polygons measuring the distance from a point to the polygon’s convex hull: an accurate Shortest Path-Concavity (SPC) measure and a Straight Line-Concavity (SLC) approximation of the same. While both are practicable on 2D shapes, the exponential cost of SPC in 3D makes it prohibitively expensive for a generalization to meshes. In this paper we propose an efficient and straightforward approximation of the Shortest Path-Concavity measure for 3D meshes. Our approximation is based on discretizing the space between mesh and convex hull, thereby reducing the continuous Shortest Path search to an efficiently solvable graph problem. Our approach works out-of-the-box on complex mesh topologies and requires no complicated handling of genus. Besides presenting a rigorous evaluation of our method on a variety of input meshes, we also define an SPC-based Shape Descriptor and show its superior retrieval and runtime performance compared with the recently presented results on the Convexity Distribution by Lian et al.

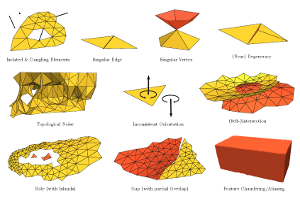

Polygon Mesh Repairing: An Application Perspective

Nowadays, digital 3D models are in widespread and ubiquitous use, and each specific application dealing with 3D geometry has its own quality requirements that restrict the class of acceptable and supported models. This article analyzes typical defects that make a 3D model unsuitable for key application contexts, and surveys existing algorithms that process, repair, and improve its structure, geometry, and topology to make it appropriate to case-by-case requirements. The analysis is focused on polygon meshes, which constitute by far the most common 3D object representation. In particular, this article provides a structured overview of mesh repairing techniques from the point of view of the application context. Different types of mesh defects are classified according to the upstream application that produced the mesh, whereas mesh quality requirements are grouped by representative sets of downstream applications where the mesh is to be used. The numerous mesh repair methods that have been proposed during the last two decades are analyzed and classified in terms of their capabilities, properties, and guarantees. Based on these classifications, guidelines can be derived to support the identification of repairing algorithms best-suited to bridge the compatibility gap between the quality provided by the upstream process and the quality required by the downstream applications in a given geometry processing scenario.

Efficient GPU data structures and methods to solve sparse linear systems in dynamics applications

We present graphics processing unit (GPU) data structures and algorithms to efficiently solve sparse linear systems that are typically required in simulations of multi-body systems and deformable bodies. Thereby, we introduce an efficient sparse matrix data structure that can handle arbitrary sparsity patterns and outperforms current state-of-the-art implementations for sparse matrix vector multiplication. Moreover, an efficient method to construct global matrices on the GPU is presented where hundreds of thousands of individual element contributions are assembled in a few milliseconds. A finite-element-based method for the simulation of deformable solids as well as an impulse-based method for rigid bodies are introduced in order to demonstrate the advantages of the novel data structures and algorithms. These applications share the characteristic that a major computational effort consists of building and solving systems of linear equations in every time step. Our solving method results in a speed-up factor of up to 13 in comparison to other GPU methods.

@article{WebBenSchStoFel13,
author = {Weber, Daniel and Bender, Jan and Schnoes, Markus and Stork, Andr{\'e} and Fellner, Dieter},
title = {Efficient {GPU} Data Structures and Methods to Solve Sparse Linear Systems in Dynamics Applications},
year = {2013},
journal = {Computer Graphics Forum},
volume = {32},
number = {1},
publisher = {Blackwell Publishing Ltd},
issn = {1467-8659},
url = {http://dx.doi.org/10.1111/j.1467-8659.2012.03227.x},
doi = {10.1111/j.1467-8659.2012.03227.x},
pages = {16--26},
}
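For readers unfamiliar with the kernel named in the abstract above, the following is a minimal CPU-side sketch of sparse matrix-vector multiplication in the widely used compressed sparse row (CSR) layout. It is not the GPU data structure proposed in the paper; the function name `csr_spmv` and the example matrix are illustrative assumptions.

```python
def csr_spmv(values, col_indices, row_ptr, x):
    """Compute y = A @ x for a matrix A stored in CSR form.

    values      -- nonzero entries, stored row by row
    col_indices -- column index of each entry in `values`
    row_ptr     -- row i occupies values[row_ptr[i]:row_ptr[i + 1]]
    """
    y = [0.0] * (len(row_ptr) - 1)
    for row in range(len(y)):
        acc = 0.0
        # Only the stored nonzeros of this row contribute to y[row].
        for k in range(row_ptr[row], row_ptr[row + 1]):
            acc += values[k] * x[col_indices[k]]
        y[row] = acc
    return y


# Illustrative 3x3 matrix (an assumption, not data from the paper):
# [[4, 0, 1],
#  [0, 3, 0],
#  [2, 0, 5]]
values = [4.0, 1.0, 3.0, 2.0, 5.0]
col_indices = [0, 2, 1, 0, 2]
row_ptr = [0, 2, 3, 5]

y = csr_spmv(values, col_indices, row_ptr, [1.0, 2.0, 3.0])
print(y)
```

The rows are independent of each other, so the outer loop is the part a parallel implementation would typically distribute across threads.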

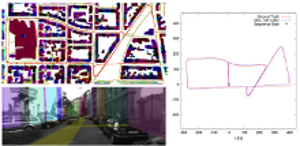

OpenStreetSLAM: Global Vehicle Localization Using OpenStreetMaps

In this paper we propose an approach for global vehicle localization that combines visual odometry with map information from OpenStreetMaps to provide robust and accurate estimates for the vehicle’s position. The main contribution of this work comes from the incorporation of the map data as an additional cue into the observation model of a Monte Carlo Localization framework. The resulting approach is able to compensate for the drift that visual odometry accumulates over time, significantly improving localization quality. As our results indicate, the proposed approach outperforms current state-of-the-art visual odometry approaches, indicating in parallel the potential that map data can bring to the global localization task.
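As a rough illustration of how a map cue can enter the observation model of a particle filter, the sketch below reweights particles by their distance to the nearest road segment. The Gaussian likelihood, the parameter `sigma`, and all function names are assumptions made for this example; the paper's actual observation model may differ.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b (2D tuples)."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def map_weight_update(particles, weights, road_segments, sigma=2.0):
    """Multiply each weight by a distance-to-road likelihood, then normalize."""
    new_weights = []
    for p, w in zip(particles, weights):
        d = min(point_segment_distance(p, a, b) for a, b in road_segments)
        new_weights.append(w * math.exp(-0.5 * (d / sigma) ** 2))
    total = sum(new_weights)
    if total == 0.0:
        # All likelihoods underflowed; fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in new_weights]


# Hypothetical scene: one straight road along the x-axis, two particles.
roads = [((0.0, 0.0), (10.0, 0.0))]
particles = [(5.0, 0.0), (5.0, 4.0)]
weights = map_weight_update(particles, [0.5, 0.5], roads)
print(weights)
```

Particles that drift away from the road network lose weight and tend to be discarded at the next resampling step, which is one way a map can counteract accumulated odometry drift.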

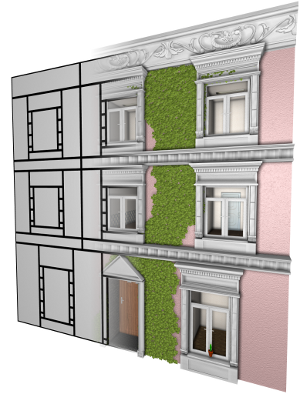

View-Dependent Realtime Rendering of Procedural Facades with High Geometric Detail

We present an algorithm for realtime rendering of large-scale city models with procedurally generated facades. By using highly detailed assets like windows, doors, and decoration, such city models can provide an extremely high geometric level of detail, but on the downside they also consist of billions of polygons, which makes it infeasible to even store them as explicit polygonal meshes. Moreover, when rendering urban scenes usually only a very small fraction of the city is actually visible, which calls for effective culling mechanisms. For procedural textures there are efficient screen space techniques that evaluate, e.g., a split grammar on a per-pixel basis in the fragment shader and thus render a textured facade in a view dependent manner. We take this idea further by introducing 3D geometric detail in addition to flat textures. Our approach is a two-pass procedure that first renders a flat procedural facade. During rasterization the fragment shader triggers the instantiation of a detailed asset whenever a geometric facade element is potentially visible. The set of instantiated detail models is then rendered in a second pass. The major challenges arise from the fact that geometric details belonging to a facade can be visible even if the base polygon of the facade itself is not visible. Hence we propose measures to conservatively estimate visibility without introducing excessive redundancy. We further extend our technique by a simple level of detail mechanism that switches to baked textures (of the assets) depending on the distance to the camera. We demonstrate that our technique achieves realtime frame rates for large-scale city models with massive detail on current commodity graphics hardware.

Position-based Methods for the Simulation of Solid Objects in Computer Graphics

The dynamic simulation of solids has a long history in computer graphics. The classical methods in this field are based on the use of forces or impulses to simulate joints between rigid bodies as well as the stretching, shearing and bending stiffness of deformable objects. In recent years the class of position-based methods has become popular in the graphics community. These kinds of methods are fast, unconditionally stable and controllable, which makes them well-suited for use in interactive environments. Position-based methods are in general not as accurate as force-based methods, but they provide visual plausibility. Therefore, the main application areas of these approaches are virtual reality, computer games and special effects in movies.

This state of the art report covers the large variety of position-based methods that were developed in the field of deformable solids. We will introduce the concept of position-based dynamics, present dynamic simulation based on shape matching and discuss data-driven approaches. Furthermore, we will present several applications for these methods.

@inproceedings{BMOT2013,
title = "Position-based Methods for the Simulation of Solid Objects in Computer Graphics",
author = "Jan Bender and Matthias M{\"u}ller and Miguel A. Otaduy and Matthias Teschner",
year = "2013",
booktitle = "EUROGRAPHICS 2013 State of the Art Reports",
publisher = "Eurographics Association",
location = "Girona, Spain"
}

Practical Anisotropic Geodesy

The computation of intrinsic, geodesic distances and geodesic paths on surfaces is a fundamental low-level building block in countless Computer Graphics and Geometry Processing applications. This demand led to the development of numerous algorithms – some for the exact, others for the approximate computation, some focusing on speed, others providing strict guarantees. Most of these methods are designed for computing distances according to the standard Riemannian metric induced by the surface’s embedding in Euclidean space. Generalization to other, especially anisotropic, metrics – which more recently gained interest in several application areas – is often hampered by fundamental problems. We explore and discuss possibilities for the generalization and extension of well-known methods to the anisotropic case, evaluate their relative performance in terms of accuracy and speed, and propose a novel algorithm, the Short-Term Vector Dijkstra. This algorithm is strikingly simple to implement and proves to provide practical accuracy at a higher speed than generalized previous methods.
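To make the notion of an anisotropic distance concrete, here is a minimal sketch of plain Dijkstra on a graph whose edge lengths are measured under a metric tensor, i.e. an edge vector e has length sqrt(eᵀ M e). This is a simple generalization of the classic algorithm, not the Short-Term Vector Dijkstra proposed in the paper; the constant 2D metric and all names are assumptions made for the example.

```python
import heapq
import math


def anisotropic_length(p, q, metric):
    """Length of the edge p->q under a constant symmetric 2x2 metric.

    metric = (a, b, c) encodes M = [[a, b], [b, c]]; length = sqrt(e^T M e).
    """
    ex, ey = q[0] - p[0], q[1] - p[1]
    a, b, c = metric
    return math.sqrt(a * ex * ex + 2.0 * b * ex * ey + c * ey * ey)


def dijkstra(positions, edges, metric, source):
    """Shortest edge-path distances from `source` under the given metric."""
    adjacency = {v: [] for v in positions}
    for u, v in edges:
        w = anisotropic_length(positions[u], positions[v], metric)
        adjacency[u].append((v, w))
        adjacency[v].append((u, w))
    dist = {v: math.inf for v in positions}
    dist[source] = 0.0
    queue = [(0.0, source)]
    while queue:
        d, u = heapq.heappop(queue)
        if d > dist[u]:
            continue  # stale queue entry, a shorter path was already found
        for v, w in adjacency[u]:
            if d + w < dist[v]:
                dist[v] = d + w
                heapq.heappush(queue, (dist[v], v))
    return dist


# Unit square; under this metric a step along x is four times as long
# as a step along y.
positions = {0: (0.0, 0.0), 1: (1.0, 0.0), 2: (1.0, 1.0), 3: (0.0, 1.0)}
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
dist = dijkstra(positions, edges, metric=(16.0, 0.0, 1.0), source=0)
print(dist)
```

Because paths are restricted to graph edges, such a scheme generally overestimates the true geodesic distance, which is one of the accuracy issues that motivates more refined methods.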

SIFT-Realistic Rendering

3D localization approaches establish correspondences between points in a query image and a 3D point cloud reconstruction of the environment. Traditionally, the database models are created from photographs using Structure-from-Motion (SfM) techniques, which requires large collections of densely sampled images. In this paper, we address the question of how point cloud data from terrestrial laser scanners can be used instead to significantly reduce the data collection effort and enable more scalable localization.

The key challenge here is that, in contrast to SfM points, laser-scanned 3D points are not automatically associated with local image features that could be matched to query image features. In order to make this data usable for image-based localization, we explore how point cloud rendering techniques can be leveraged to create virtual views from which database features can be extracted that match real image-based features as closely as possible. We propose different rendering techniques for this task, experimentally quantify how they affect feature repeatability, and demonstrate their benefit for image-based localization.

Fast and stable cloth simulation based on multi-resolution shape matching

We present an efficient and unconditionally stable method which allows the deformation of very complex stiff cloth models in real-time. This method is based on a shape matching approach which uses edges and triangles as 1D and 2D regions to simulate stretching and shearing resistance. Previous shape matching approaches require large overlapping regions to simulate stiff materials. This unfortunately also affects the bending behavior of the model. Instead of using large regions, we introduce a novel multi-resolution shape matching approach to increase only the stretching and shearing stiffness. Shape matching is performed for each level of the multi-resolution model and the results are propagated from one level to the next one. To preserve the fine wrinkles of the cloth on coarse levels of the hierarchy we present a modified version of the original shape matching method. The introduced method for cloth simulation can perform simulations in linear time and has no numerical damping. Furthermore, we show that multi-resolution shape matching can be performed efficiently on the GPU.

@ARTICLE{Bender2013_2,
author = {Jan Bender and Daniel Weber and Raphael Diziol},
title = {Fast and stable cloth simulation based on multi-resolution shape matching},
journal = {Computers \& Graphics},
year = {2013},
volume = {37},
pages = {945--954},
number = {8},
doi = {http://dx.doi.org/10.1016/j.cag.2013.08.003},
issn = {0097-8493},
url = {http://www.sciencedirect.com/science/article/pii/S0097849313001283}
}

Adaptive cloth simulation using corotational finite elements

In this article we introduce an efficient adaptive cloth simulation method which is based on a reversible $\sqrt{3}$-refinement of corotational finite elements. Our novel approach can handle arbitrary triangle meshes and is not restricted to regular grid meshes which are required by other adaptive methods. Most previous works in the area of adaptive cloth simulation use discrete cloth models like mass-spring systems in combination with a specific subdivision scheme. However, if discrete models are used, the simulation does not converge to the correct solution as the mesh is refined. Therefore, we introduce a cloth model which is based on continuum mechanics since continuous models do not have this problem. We use a linear elasticity model in combination with a corotational formulation to achieve a high performance. Furthermore, we present an efficient method to update the sparse matrix structure after a refinement or coarsening step. The advantage of the $\sqrt{3}$-subdivision scheme is that it generates high quality meshes while the number of triangles increases only by a factor of 3 in each refinement step. However, the original scheme was not intended for the use in an interactive simulation and only defines a mesh refinement. In this article we introduce a combination of the original refinement scheme with a novel coarsening method to realize an adaptive cloth simulation with high quality meshes. The proposed approach allows an efficient mesh adaption and therefore does not cause much overhead. We demonstrate the significant performance gain which can be achieved with our adaptive simulation method in several experiments including a complex garment simulation.

@ARTICLE{Bender2013,
author = {Jan Bender and Crispin Deul},
title = {Adaptive cloth simulation using corotational finite elements},
journal = {Computers \& Graphics},
year = {2013},
volume = {37},
pages = {820--829},
number = {7},
doi = {http://dx.doi.org/10.1016/j.cag.2013.04.008},
url = {http://www.sciencedirect.com/science/article/pii/S0097849313000605},
issn = {0097-8493}
}

Failure Mode and Effects Analysis in Designing a Virtual Reality-Based Training Simulator for Bilateral Sagittal Split Osteotomy

Virtual reality-based simulators offer a cost-effective and efficient alternative to traditional medical training and planning. Developing a simulator that enables the training of medical skills and also supports recognition of errors made by the trainee is a challenge. The first step in developing such a system consists of error identification in the real procedure, in order to ensure that the training environment covers the most significant errors that can occur. This paper focuses on identifying the main system requirements for an interactive simulator for training bilateral sagittal split osteotomy (BSSO). An approach is proposed based on failure mode and effects analysis (FMEA), a risk analysis method that is well structured and already an approved technique in other domains. Based on the FMEA results, a BSSO training simulator is currently being developed, which centers upon the main critical steps of the procedure (sawing and splitting) and their main errors. FMEA seems to be a suitable tool in the design phase of developing medical simulators. Herein, it serves as a communication medium for knowledge transfer between the medical experts and the system developers. The method encourages a reflective process and allows identification of the most important elements and scenarios that need to be trained.

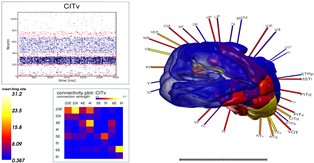

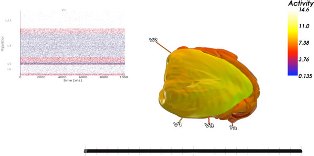

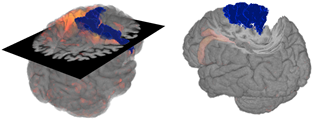

VisNEST – Interactive Analysis of Neural Activity Data

The aim of computational neuroscience is to gain insight into the dynamics and functionality of the nervous system by means of modeling and simulation. Current research leverages the power of High Performance Computing facilities to enable multi-scale simulations capturing both low-level neural activity and large-scale interactions between brain regions. In this paper, we describe an interactive analysis tool that enables neuroscientists to explore data from such simulations. One of the driving challenges behind this work is the integration of macroscopic data at the level of brain regions with microscopic simulation results, such as the activity of individual neurons. While researchers validate their findings mainly by visualizing these data in a non-interactive fashion, state-of-the-art visualizations, tailored to the scientific question yet sufficiently general to accommodate different types of models, enable such analyses to be performed more efficiently. This work describes several visualization designs, conceived in close collaboration with domain experts, for the analysis of network models. We primarily focus on the exploration of neural activity data, inspecting connectivity of brain regions and populations, and visualizing activity flux across regions. We demonstrate the effectiveness of our approach in a case study conducted with domain experts.

Distributed Parallel Particle Advection using Work Requesting

Particle advection is an important vector field visualization technique that is difficult to apply to very large data sets in a distributed setting due to scalability limitations in existing algorithms. In this paper, we report on several experiments using work requesting dynamic scheduling which achieves balanced work distribution on arbitrary problems with minimal communication overhead. We present a corresponding prototype implementation, provide and analyze benchmark results, and compare our results to an existing algorithm.

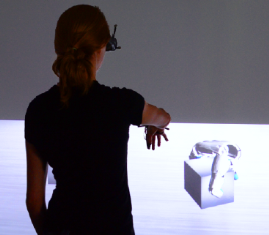

An Evaluation of Two Simple Methods for Representing Heaviness in Immersive Virtual Environments

Weight perception in virtual environments generally can be achieved with haptic devices. However, most of these are hard to integrate in an immersive virtual environment (IVE) due to their technical complexity and the restriction of a user's movement within the IVE. We describe two simple methods using only a wireless light-weight finger-tracking device in combination with a physics simulated hand model to create a feeling of heaviness of virtual objects when interacting with them in an IVE. The first method maps the varying distance between tracked fingers and the thumb to the grasping force required for lifting a virtual object with a given weight. The second method maps the detected intensity of finger pinch during grasping gestures to the lifting force. In an experiment described in this paper we investigated the potential of the proposed methods for the discrimination of heaviness of virtual objects by finding the just noticeable difference (JND) to calculate the Weber fraction. Furthermore, the workload that users experienced using these methods was measured to gain more insight into their usefulness as an interaction technique. At a hit ratio of 0.75, the determined Weber fraction using the finger distance based method was 16.25% and using the pinch based method was 15.48%, which corresponds to values found in related work. There was no significant effect of method on the difference threshold measured and the workload experienced; however, the user preference was higher for the pinch based method. The results demonstrate the capability of the proposed methods for the perception of heaviness in IVEs and therefore represent a simple alternative to haptics based methods.
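For reference, the Weber fraction mentioned above is the ratio of the just noticeable difference to the reference stimulus intensity. The sketch below computes it for made-up numbers; the function name and the example values are assumptions for illustration and are not measurements from the study.

```python
def weber_fraction(jnd, reference):
    """Weber fraction k = JND / reference stimulus intensity."""
    if reference <= 0:
        raise ValueError("reference intensity must be positive")
    return jnd / reference


# Hypothetical values for illustration only (not data from the study):
# a 200 g reference object and a just noticeable difference of 30 g.
k = weber_fraction(jnd=30.0, reference=200.0)
print(f"Weber fraction: {k:.2%}")
```

A smaller fraction means that finer weight differences can be told apart relative to the reference weight.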

Research Challenges for Visualization Software

Over the last twenty-five years, visualization software has evolved into robust frameworks that can be used for research projects, rapid prototype development, or as the basis of richly featured, end-user tools. In this article, we take stock of current capabilities and describe upcoming challenges facing visualization software in six categories: massive parallelization, emerging processor architectures, application architecture and data management, data models, rendering, and interaction. Further, for each of these categories, we describe evolutionary advances sufficient to meet the visualization software challenge, and posit areas in which revolutionary advances are required.

Virtual Air Traffic System Simulation - Aiding the Communication of Air Traffic Effects

A key aspect of air traffic infrastructure projects is the communication between stakeholders during the approval process regarding their environmental impact. Yet, established means of communication have been found to be rather incomprehensible. In this paper we present an application that addresses these communication issues by enabling the exploration of airplane noise emissions in the vicinity of airports in a virtual environment (VE). The VE is composed of a model of the airport area and flight movement data. We combine a real-time 3D auralization approach with visualization techniques to allow for an intuitive access to noise emissions. Specifically designed interaction techniques help users to easily explore and compare air traffic scenarios.

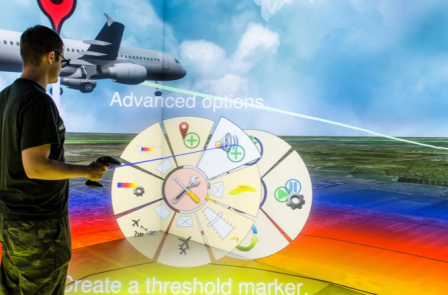

Extended Pie Menus for Immersive Virtual Environments

Pie menus are a well-known technique for interacting with 2D environments and so far a large body of research documents their usage and optimizations. Yet, comparatively little research has been done on the usability of pie menus in immersive virtual environments (IVEs). In this paper we reduce this gap by presenting an implementation and evaluation of an extended hierarchical pie menu system for IVEs that can be operated with a six-degrees-of-freedom input device. Following an iterative development process, we first developed and evaluated a basic hierarchical pie menu system. To better understand how pie menus should be operated in IVEs, we tested this system in a pilot user study with 24 participants, focusing on item selection. Based on the results of the study, the system was refined and elements like check boxes, sliders, and color map editors were added to provide extended functionality. An expert review with five experts was performed with the extended pie menus being integrated into an existing VR application to identify potential design issues. Overall results indicated high performance and efficient design.

A Scalable Collaborative Online System for City Reconstruction

Recent advances in Structure-from-Motion and Bundle Adjustment allow us to efficiently reconstruct large 3D scenes from millions of images. However, acquiring the imagery necessary to reconstruct a whole city and not only its landmark buildings still poses a tremendous problem. In this paper, we therefore present an online system for collaborative city reconstruction that is based on crowdsourcing the image acquisition. Employing publicly available building footprints to reconstruct individual blocks rather than the whole city at once enables our system to easily scale to large urban environments. In order to map all partial reconstructions into a single coordinate frame, we develop a robust alignment scheme that registers the individual point clouds to their corresponding footprints based on GPS coordinates. Our approach can handle noise and outliers in the GPS positions and allows us to detect wrong alignments caused by typical issues in crowdsourcing applications, such as malicious or improper image uploads. Furthermore, we present an efficient rendering method to obtain dense and textured views of the resulting point clouds without requiring costly multi-view stereo methods.

@inproceedings{untzelmann2013iccv,

author = "Untzelmann, Ole and Sattler, Torsten and Middelberg, Sven and Kobbelt, Leif",

title = "{A Scalable Collaborative Online System for City Reconstruction}",

booktitle = "{The IEEE International Conference on Computer Vision (ICCV) Workshops}",

year = {2013}

}

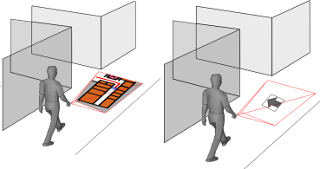

Evaluation of a Mobile Projector based Indoor Navigation Interface

In recent years, the interest and potential applications of pedestrian indoor navigation solutions have significantly increased. Whereas the majority of mobile indoor navigation aid solutions visualize navigational information on mobile screens, the present study investigates the effectiveness of a mobile projector as navigation aid which directly projects navigational information into the environment. A benchmark evaluation of the mobile projector-based indoor navigation interface was carried out investigating a combination of different navigation devices (mobile projector vs. mobile screen) and navigation information (map vs. arrow) as well as the impact of users' spatial abilities. Results showed a superiority of the mobile screen as navigation aid and the map as navigation information type. Especially users with low spatial abilities benefited from this combination in their navigation performance and acceptance. Potential application scenarios and design implications for novel indoor navigation interfaces are derived from our findings.

Advanced Automatic Hexahedral Mesh Generation from Surface Quad Meshes

A purely topological approach for the generation of hexahedral meshes from quadrilateral surface meshes of genus zero has been proposed by M. Müller-Hannemann: in a first stage, the input surface mesh is reduced to a single hexahedron by successively eliminating loops from the dual graph of the quad mesh; in the second stage, the hexahedral mesh is constructed by extruding a layer of hexahedra for each dual loop from the first stage in reverse elimination order. In this paper, we introduce several techniques to extend the scope of target shapes of the approach and significantly improve the quality of the generated hexahedral meshes. While the original method can only handle "almost convex" objects and requires mesh surgery and remeshing in case of concave geometry, we propose a method to overcome this issue by introducing the notion of concave dual loops. Furthermore, we analyze and improve the heuristic to determine the elimination order for the dual loops such that the inordinate introduction of interior singular edges, i.e. edges of degree other than four in the hexahedral mesh, can be avoided in many cases.

Geometry Seam Carving

We present a novel approach to feature-aware mesh deformation. Previous mesh editing methods are based on an elastic deformation model and thus tend to uniformly distribute the distortion in a least squares sense over the entire deformation region. Recent results from image resizing, however, show that discrete local modifications like deleting or adding connected seams of image pixels in regions with low saliency lead to far superior preservation of local features compared to uniform scaling -- the image retargeting analogon to least squares mesh deformation. Hence, we propose a discrete mesh editing scheme that combines elastic as well as plastic deformation (in regions with little geometric detail) by transferring the concept of seam carving from image retargeting to the mesh deformation scenario. A geometry seam consists of a connected strip of triangles within the mesh's deformation region. By collapsing or splitting the interior edges of this strip we perform a deletion or insertion operation that is equivalent to image seam carving and can be interpreted as a local plastic deformation. We use a feature measure to rate the geometric saliency of each triangle in the mesh and a well-adjusted distortion measure to determine where the current mesh distortion asks for plastic deformations, i.e., for deletion or insertion of geometry seams. Precomputing a fixed set of low-saliency seams in the deformation region allows us to perform fast seam deletion and insertion operations in a predetermined order such that the local mesh modifications are properly restored when a mesh editing operation is (partially) undone. Geometry seam carving hence enables the deformation of a given mesh in a way that causes stronger distortion in homogeneous mesh regions while salient features are preserved much better.
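The image seam carving idea that this abstract transfers to meshes can be illustrated with a short sketch. The code below is not the paper's mesh algorithm: it shows only the classic image-domain step, finding a connected minimum-energy vertical seam by dynamic programming and deleting it. The function names `min_vertical_seam` and `remove_seam` and the list-of-lists image representation are assumptions made for illustration.

```python
from typing import List


def min_vertical_seam(energy: List[List[float]]) -> List[int]:
    """Return one column index per row for a minimum-energy vertical seam.

    Consecutive seam pixels differ by at most one column, so the seam is
    connected. `energy` is a hypothetical per-pixel saliency map.
    """
    rows, cols = len(energy), len(energy[0])
    # cost[r][c] = cheapest total energy of any seam ending at pixel (r, c)
    cost = [row[:] for row in energy]
    for r in range(1, rows):
        for c in range(cols):
            lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
            cost[r][c] += min(cost[r - 1][lo:hi + 1])

    # Backtrack from the cheapest pixel in the last row.
    seam = [min(range(cols), key=lambda c: cost[rows - 1][c])]
    for r in range(rows - 1, 0, -1):
        c = seam[-1]
        lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
        seam.append(min(range(lo, hi + 1), key=lambda k: cost[r - 1][k]))
    seam.reverse()
    return seam


def remove_seam(image: List[List[float]], seam: List[int]) -> List[List[float]]:
    """Delete one pixel per row, shrinking the image width by one."""
    return [row[:c] + row[c + 1:] for row, c in zip(image, seam)]
```

In the mesh setting described above, the role of the pixel seam is played by a connected strip of triangles, and deletion corresponds to collapsing the strip's interior edges.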

Physically-Based Character Skinning

In this paper we present a novel multi-layer model for physically-based character skinning. In contrast to geometric approaches which are commonly used in the field of character skinning, physically-based methods can simulate secondary motion effects. Furthermore, these methods can handle collisions and preserve the volume of the model without the need of an additional post-process. Physically-based approaches are computationally more expensive than geometric methods but they provide more realistic results. Recent works in this area use finite element simulations to model the elastic behavior of skin. These methods require the generation of a volumetric mesh for the skin shape in a pre-processing step. It is not easy for an artist to model the different elastic behaviors of muscles, fat and skin using a volumetric mesh since there is no clear assignment between volume elements and tissue types. For our novel multi-layer model the mesh generation is very simple and can be performed automatically. Furthermore, the model contains a layer for each kind of tissue. Therefore, the artist can easily control the elastic behavior by adjusting the stiffness parameters for muscles, fat and skin. We use shape matching with oriented particles and a fast summation technique to simulate the elastic behavior of our skin model and a position-based constraint enforcement to handle collisions, volume conservation and the coupling of the skeleton with the deformable model. Position-based methods have the advantage that they are fast, unconditionally stable, controllable and provide visually plausible results.
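To give a feel for what position-based constraint enforcement means, here is a minimal sketch of the standard distance-constraint projection used in position-based dynamics. It is an illustration under assumptions, not the paper's method: the collision, volume-conservation and skeleton-coupling constraints mentioned in the abstract are not reproduced, and the function name and parameters are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def project_distance_constraint(
    p1: Vec3,
    p2: Vec3,
    w1: float,
    w2: float,
    rest_length: float,
    stiffness: float = 1.0,
) -> Tuple[Vec3, Vec3]:
    """Move two particles so that their distance approaches `rest_length`.

    w1 and w2 are inverse masses (0 means the particle is fixed).
    Positions are corrected directly; no forces or velocities are involved.
    """
    d = tuple(a - b for a, b in zip(p1, p2))
    dist = math.sqrt(sum(x * x for x in d))
    w_sum = w1 + w2
    if dist == 0.0 or w_sum == 0.0:
        return p1, p2  # degenerate case: nothing sensible to correct

    # Constraint C = |p1 - p2| - rest_length; the correction is split
    # between the particles in proportion to their inverse masses.
    scale = stiffness * (dist - rest_length) / (dist * w_sum)
    new_p1 = tuple(a - w1 * scale * x for a, x in zip(p1, d))
    new_p2 = tuple(b + w2 * scale * x for b, x in zip(p2, d))
    return new_p1, new_p2
```

Because the correction is applied to positions rather than integrated from forces, a single projection with `stiffness=1.0` satisfies the constraint exactly, which is one reason such schemes are considered robust.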

@inproceedings{Deul2013,

author = {Crispin Deul and Jan Bender},

title = {Physically-Based Character Skinning},

booktitle = {Virtual Reality Interactions and Physical Simulations (VRIPhys)},

year = {2013},

month = nov,

address = {Lille, France},

publisher = {Eurographics Association}

}

Multilevel Cloth Simulation using GPU Surface Sampling

Today most cloth simulation systems use triangular mesh models. However, regular grids allow many optimizations as connectivity is implicit, warp and weft directions of the cloth are aligned to grid edges and distances between particles are equal. In this paper we introduce a cloth simulation that combines both model types. All operations that are performed on the CPU use a low-resolution triangle mesh while GPU-based methods are performed efficiently on a high-resolution grid representation. Both models are coupled by a sampling operation which renders triangle vertex data into a texture and by a corresponding projection of texel data onto a mesh. The presented scheme is very flexible and allows individual components to be performed on different architectures, data representations and detail levels. The results are combined using shader programs which causes a negligible overhead. We have implemented CPU-based collision handling and a GPU-based hierarchical constraint solver to simulate systems with more than 230k particles in real-time.

@inproceedings{Schmitt2013,

author = {Nikolas Schmitt and Martin Knuth and Jan Bender and Arjan Kuijper},

title = {Multilevel Cloth Simulation using GPU Surface Sampling},

booktitle = {Virtual Reality Interactions and Physical Simulations (VRIPhys)},

year = {2013},

month = nov,

address = {Lille, France},

publisher = {Eurographics Association}

}

Level of Detail for Real-Time Volumetric Terrain Rendering

Terrain rendering is an important component of many GIS applications and simulators. Most methods rely on heightmap-based terrain which is simple to acquire and handle, but has limited capabilities for modeling features like caves, steep cliffs, or overhangs. In contrast, volumetric terrain models, e.g. based on isosurfaces, can represent arbitrary topology. In this paper, we present a fast, practical and GPU-friendly level of detail algorithm for large scale volumetric terrain that is specifically designed for real-time rendering applications. Our algorithm is based on a longest edge bisection (LEB) scheme. The resulting tetrahedral cells are subdivided into four hexahedra, which form the domain for a subsequent isosurface extraction step. The algorithm can be used with arbitrary volumetric models such as signed distance fields, which can be generated from triangle meshes or discrete volume data sets. In contrast to previous methods, our algorithm does not require any stitching between detail levels. It generates crack-free surfaces with good triangle quality. Furthermore, we efficiently extract the geometry at runtime and require no preprocessing, which allows us to render infinite procedural content with low memory consumption.
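The basic refinement step behind a longest edge bisection scheme can be sketched as follows. This is a simplified illustration, assuming a purely geometric choice of the longest edge with first-found tie-breaking; the paper's actual refinement rules, its hexahedral subdivision and its isosurface extraction are not shown. The names `bisect_longest_edge` and `volume` are hypothetical.

```python
from itertools import combinations
from typing import Tuple

Vec3 = Tuple[float, float, float]
Tet = Tuple[Vec3, Vec3, Vec3, Vec3]


def bisect_longest_edge(tet: Tet) -> Tuple[Tet, Tet]:
    """Split a tetrahedron into two children at the midpoint of its longest edge."""
    def dist2(a: Vec3, b: Vec3) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Pick the longest of the six edges (ties resolved by enumeration order).
    i, j = max(combinations(range(4), 2),
               key=lambda e: dist2(tet[e[0]], tet[e[1]]))
    mid = tuple((a + b) / 2 for a, b in zip(tet[i], tet[j]))

    # Each child keeps one endpoint of the split edge and gets the midpoint
    # in place of the other endpoint.
    child_a = list(tet)
    child_a[i] = mid
    child_b = list(tet)
    child_b[j] = mid
    return tuple(child_a), tuple(child_b)


def volume(tet: Tet) -> float:
    """Unsigned volume of a tetrahedron via the scalar triple product."""
    a, b, c, d = tet
    u = [b[k] - a[k] for k in range(3)]
    v = [c[k] - a[k] for k in range(3)]
    w = [d[k] - a[k] for k in range(3)]
    det = (u[0] * (v[1] * w[2] - v[2] * w[1])
           - u[1] * (v[0] * w[2] - v[2] * w[0])
           + u[2] * (v[0] * w[1] - v[1] * w[0]))
    return abs(det) / 6.0
```

Applying the split recursively, only where more detail is needed, yields a hierarchy of cells whose children each have half the parent's volume.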

@inproceedings{Scholz2013,

author = {Manuel Scholz and Jan Bender and Carsten Dachsbacher},

title = {{Level of Detail for Real-Time Volumetric Terrain Rendering}},

pages = {211-218},

URL = {http://diglib.eg.org/EG/DL/PE/VMV/VMV13/211-218.pdf},

DOI = {10.2312/PE.VMV.VMV13.211-218},

editor = {Michael Bronstein and Jean Favre and Kai Hormann},

booktitle = {VMV 2013: Vision, Modeling \& Visualization},

year = {2013},

address = {Lugano, Switzerland},

publisher = {Eurographics Association}

}

Screen-Space Ambient Occlusion Using A-buffer Techniques

Computing ambient occlusion in screen-space (SSAO) is a common technique in real-time rendering applications which use rasterization to process 3D triangle data. However, one of the most critical problems emerging in screen-space is the lack of information regarding occluded geometry which does not pass the depth test and is therefore not resident in the G-buffer. These occluded fragments may have an impact on the proximity-based shadowing outcome of the ambient occlusion pass. This not only decreases image quality but also prevents the application of SSAO on multiple layers of transparent surfaces where the shadow contribution depends on opacity. We propose a novel approach to the SSAO concept by taking advantage of per-pixel fragment lists to store multiple geometric layers of the scene in the G-buffer, thus allowing order independent transparency (OIT) in combination with high quality, opacity-based ambient occlusion (OITAO). This A-buffer concept is also used to enhance overall ambient occlusion quality by providing stable results for low-frequency details in dynamic scenes. Furthermore, a flexible compression-based optimization strategy is introduced to improve performance while maintaining high quality results.

@inproceedings{Bauer2013,

author = {Fabian Bauer and Martin Knuth and Jan Bender},

title = {Screen-Space Ambient Occlusion Using A-buffer Techniques},

booktitle = {International Conference on Computer-Aided Design and Computer Graphics},

year = {2013},

month = nov,

address = {Hong Kong, China},

publisher = {IEEE}

}

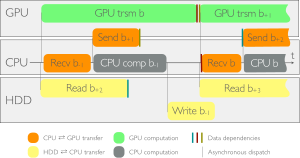

Streaming Data from HDD to GPUs for Sustained Peak Performance

In the context of the genome-wide association studies (GWAS), one has to solve long sequences of generalized least-squares problems; such a task has two limiting factors: execution time --often in the range of days or weeks-- and data management --data sets in the order of Terabytes. We present an algorithm that obviates both issues. By pipelining the computation, and thanks to a sophisticated transfer strategy, we stream data from hard disk to main memory to GPUs and achieve sustained peak performance; with respect to a highly-optimized CPU implementation, our algorithm shows a speedup of 2.6x. Moreover, the approach lends itself to multiple GPUs and attains almost perfect scalability. When using 4 GPUs, we observe speedups of 9x over the aforementioned implementation, and 488x over a widespread biology library.
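The pipelining idea, overlapping data loading with computation so that neither stage waits for the other, can be sketched with a bounded buffer between a loader thread and a compute loop. This is a CPU-only illustration under assumptions: the abstract does not describe the actual transfer strategy between disk, main memory and GPUs, and `stream_and_process`, `load_blocks` and `compute` are hypothetical names.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_DONE = object()  # sentinel marking the end of the stream


def stream_and_process(
    load_blocks: Iterable[Any],
    compute: Callable[[Any], Any],
    depth: int = 2,
) -> List[Any]:
    """Process blocks while the next ones are being loaded in the background.

    `depth` bounds how many loaded blocks may wait in memory at once
    (depth=2 corresponds to classic double buffering).
    """
    buffer: "queue.Queue[Any]" = queue.Queue(maxsize=depth)

    def loader() -> None:
        try:
            for block in load_blocks:
                buffer.put(block)  # waits while the buffer is full
        finally:
            buffer.put(_DONE)  # always signal completion to the consumer

    thread = threading.Thread(target=loader, daemon=True)
    thread.start()

    results = []
    while True:
        block = buffer.get()  # waits while the buffer is empty
        if block is _DONE:
            break
        results.append(compute(block))
    thread.join()
    return results
```

For example, `stream_and_process(iter([[1, 2], [3, 4], [5]]), sum)` processes three blocks in order while later blocks are fetched concurrently. In a real setting the iterable would read from disk and `compute` would dispatch work to an accelerator.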

@inproceedings{Beyer2013GWAS,

author = {Lucas Beyer and Paolo Bientinesi},

title = {Streaming Data from HDD to GPUs for Sustained Peak Performance},

booktitle = {Euro-Par},

publisher = {Springer},

series = {Lecture Notes in Computer Science},

volume = {8097},

pages = {788-799},

year = {2013},

isbn = {3642400477},

ee = {http://arxiv.org/abs/1302.4332},

}

ProFi: Design and Evaluation of a Product Finder in a Supermarket Scenario

This paper presents the design and evaluation of ProFi, a PROduct FInding assistant in a supermarket scenario. We explore the idea of micro-navigation in supermarkets and aim at enhancing visual search processes in front of a shelf. In order to assess the concept, a prototype is built combining visual recognition techniques with an Augmented Reality interface. Two AR patterns (circle and spotlight) are designed to highlight target products. The prototype is formally evaluated in a controlled environment. Quantitative and qualitative data is collected to evaluate the usability and user preference. The results show that ProFi significantly improves the users’ product finding performance, especially when using the circle, and that ProFi is well accepted by users.

Poster: Interactive Visualization of Brain-Scale Spiking Activity

In recent years, the simulation of spiking neural networks has advanced in terms of both simulation technology and knowledge about neuroanatomy. Due to these advances, it is now possible to run simulations at the brain scale, which produce an unprecedented amount of data to be analyzed and understood by researchers. As an aid, VisNEST, a tool for the combined visualization of simulated spike data and anatomy, was developed.

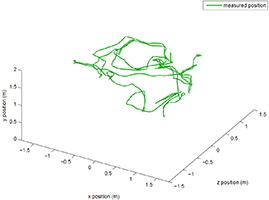

Adaptive Human Motion Prediction using Multiple Model Approaches

A common problem in Virtual Reality is latency. Especially for head tracking, latency can reduce immersion. Prediction can be used to reduce the effect of latency. However, for good results the prediction process has to be reliably fast and accurate. Human motion is not homogeneous and humans often tend to change the way they move. Prediction models can be designed for these special motion types. To combine the special models, a multiple model approach is presented. It constantly evaluates the quality of the different specialized motion predictions and adjusts the set of motion models. We propose two variants and compare them to a reference prediction algorithm.
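One simple way to combine specialized predictors is to score each model's last prediction against the newest measurement and trust the model with the lowest recent error. The sketch below illustrates that idea for a one-dimensional signal. It is an assumption-laden toy, not either of the two variants proposed in the paper; the class `MultipleModelPredictor`, the two example models and the exponential forgetting factor are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Predictor = Callable[[Sequence[float]], float]


def constant_position(history: Sequence[float]) -> float:
    """Predict that the value stays where it is."""
    return history[-1]


def constant_velocity(history: Sequence[float]) -> float:
    """Predict that the last observed change repeats."""
    if len(history) < 2:
        return history[-1]
    return history[-1] + (history[-1] - history[-2])


class MultipleModelPredictor:
    """Runs several predictors and follows the one with the lowest recent error."""

    def __init__(self, models: Dict[str, Predictor], forgetting: float = 0.8):
        self.models = models
        self.forgetting = forgetting  # weight given to older errors
        self.errors = {name: 0.0 for name in models}
        self.history: List[float] = []
        self._pending: Dict[str, float] = {}

    def update(self, measurement: float) -> None:
        # Score each model's previous one-step prediction against the new sample.
        for name, predicted in self._pending.items():
            err = abs(predicted - measurement)
            self.errors[name] = (self.forgetting * self.errors[name]
                                 + (1.0 - self.forgetting) * err)
        self.history.append(measurement)
        self._pending = {name: m(self.history) for name, m in self.models.items()}

    def best_model(self) -> str:
        return min(self.errors, key=self.errors.get)

    def predict(self) -> float:
        if not self._pending:
            raise ValueError("call update() with at least one measurement first")
        return self._pending[self.best_model()]
```

Feeding a steadily increasing signal makes the constant-velocity model win, while a signal that holds still favors the constant-position model, so the selection adapts as the motion type changes.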

Poster: Interactive Visualization of Brain Volume Changes

The visual analysis of brain volume data by neuroscientists is commonly done in 2D coronal, sagittal and transversal views, limiting the visualization domain from potentially three to two dimensions. This is done to avoid occlusion and thus gain necessary context information. In contrast, this work intends to benefit from all spatial information that can help to understand the original data. Example data of a patient with brain degeneration are used to demonstrate how to enrich 2D with 3D data. To this end, two approaches are presented. First, a conventional 2D section in combination with transparent brain anatomy is used. Second, the principle of importance-driven volume rendering is adapted to allow a direct line-of-sight to relevant structures by means of a frustum-like cutout.

Poster: Hyperslice Visualization of Metamodels for Manufacturing Processes

In modeling and simulation of manufacturing processes, complex models are used to examine and understand the behavior and properties of the product or process. To save computation time, global approximation models, often referred to as metamodels, serve as surrogates for the original complex models. Such metamodels are difficult to interpret, because they usually have multi-dimensional input and output domains. We propose a visualization approach that combines hyperslices with direct volume rendering, training point visualization, and gradient trajectory navigation to help in understanding such metamodels. Great care was taken to provide a high level of interactivity for the exploration of the data space.

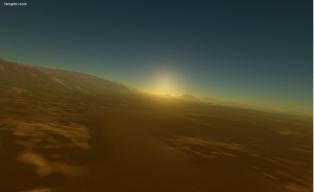

Physically Based Rendering of the Martian Atmosphere

With the introduction of complex precomputed scattering tables by Bruneton in 2008, the quality of visualizing atmospheric scattering vastly improved. The presented algorithms allowed for the rendering of complex atmospheric features such as multiple scattering or light shafts in real time and at interactive framerates. While the implementation published alongside that work was merely a proof of concept, we present a more practical approach by applying its scattering theory to an already existing planetary rendering engine. Because the commonly used set of parameters only describes the atmosphere of the Earth, we further extend the scattering formulation to visualize the atmosphere of the planet Mars. The modified scattering and resulting parameters are then validated by comparison with available imagery of the Martian atmosphere.

Multi-View 3D Reconstruction of Highly-Specular Objects

In this thesis, we address the problem of image-based 3D reconstruction of objects exhibiting complex reflectance behaviour using surface gradient information techniques. In this context, we address two open questions. The first is whether it is possible to design a robust multi-view normal field integration algorithm that can integrate noisy, imprecise and only partially captured real-world data. The second is whether it is possible to recover the precise geometry of challenging highly-specular objects by multi-view normal estimation and integration using such an algorithm.

The main result of this work is the first multi-view normal field integration algorithm that reliably reconstructs the surface of an object from normal fields captured in a real-world setup. The surface of the unknown object is reconstructed by fitting a surface to the vector field reconstructed from observed normal samples. The vector field and the surface consistency information are computed based on a feature space analysis of back-projections of the normals using robust, nonparametric probability density estimation methods. This normal field integration technique is not only suitable for reconstructing Lambertian objects, but, in the scope of this work, it is also used for the reconstruction of highly-specular objects via multi-view shape-from-specularity techniques.

We performed an evaluation on synthetic normal fields and on photometric stereo based normal estimates of a real Lambertian object and, most importantly, demonstrated state-of-the-art results in the domain of 3D reconstruction of highly-specular objects based on measured data integrated by the proposed algorithm. Our method presents a significant advancement in the area of gradient information based 3D reconstruction techniques with the potential to address 3D reconstruction of a large class of objects exhibiting complex reflectance behaviour. Furthermore, using this method, a wide range of proposed normal estimation techniques can now be used for the recovery of full 3D shapes.

Computer Vision Systems

This book constitutes the refereed proceedings of the 9th International Conference on Computer Vision Systems, ICVS 2013, held in St. Petersburg, Russia, July 16-18, 2013. The 16 revised papers presented with 20 poster papers were carefully reviewed and selected from 94 submissions. The papers are organized in topical sections on image and video capture; visual attention and object detection; self-localization and pose estimation; motion and tracking; 3D reconstruction; features, learning and validation.

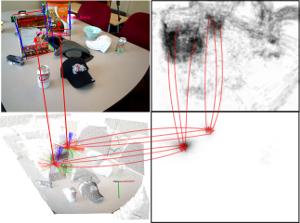

Multi-Scale, Categorical Object Detection and Pose Estimation using Hough Forest in RGB-D Images

Classification and localization of objects enable a robot to plan and execute tasks in unstructured environments. Much work on the detection and pose estimation of objects in the robotics context has focused on object instances. We propose here a novel approach that detects object classes and finds the canonical pose of the detected objects in RGB-D images using Hough forests. In Hough forests, each random decision tree maps a local image patch to one of its leaves through a cascade of binary decisions over the patch appearance, where each leaf casts a probabilistic Hough vote in a Hough space encoding object location, scale and orientation. We propose depth and surfel pair-features as additional appearance channels to introduce scale, shape and geometric information about the object. Moreover, we exploit depth at various stages of the processing pipeline to handle variable scale efficiently. Since obtaining large amounts of annotated training data is a cumbersome process, we use training data captured on a turn-table setup. However, the training examples from this domain do not include clutter, occlusions or varying background situations. Hence, we propose a simple but effective approach to render training images from the turn-table dataset which show the same statistical distribution in image properties as natural scenes. We evaluate our approach on publicly available RGB-D object recognition benchmark datasets and demonstrate good performance in varying background and view poses, clutter, and occlusions.
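The voting step at the heart of Hough-based detection can be sketched in a few lines: each local patch casts a weighted vote for where the object centre should be, votes are accumulated in a discretized space, and the strongest cell is reported as the detection. This toy version votes only for 2D location and takes the per-patch offsets and weights as given; in a Hough forest those would come from the tree leaves, and the vote space would also cover scale and orientation. The function name `hough_vote` and the input format are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float]
Vote = Tuple[Point, Point, float]  # (patch position, offset to centre, weight)


def hough_vote(votes: Iterable[Vote], cell_size: float = 1.0) -> Tuple[Tuple[int, int], float]:
    """Accumulate weighted centre votes and return the strongest cell.

    Raises ValueError if `votes` is empty.
    """
    accumulator: Dict[Tuple[int, int], float] = defaultdict(float)
    for (px, py), (dx, dy), weight in votes:
        # Each patch votes for the position it believes the centre is at.
        cx, cy = px + dx, py + dy
        cell = (int(cx // cell_size), int(cy // cell_size))
        accumulator[cell] += weight

    best = max(accumulator, key=accumulator.get)
    return best, accumulator[best]
```

Patches that belong to the same object agree on a centre and reinforce one cell, whereas votes from background patches scatter and rarely build a strong peak.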

Depth-Enhanced Hough Forests for Object-Class Detection and Continuous Pose Estimation

Much work on the detection and pose estimation of objects in the robotics context has focused on object instances. We propose a novel approach that detects object classes and finds the pose of the detected objects in RGB-D images. Our method is based on Hough forests, a variant of random decision and regression trees that categorize pixels and vote for 3D object position and orientation. It makes efficient use of dense depth for scale-invariant detection and pose estimation. We propose an effective way to train our method for arbitrary scenes that are rendered from training data in a turn-table setup. We evaluate our approach on publicly available RGB-D object recognition benchmark datasets and demonstrate state-of-the-art performance in varying background and view poses, clutter, and occlusions.

OpenFlipper - A Highly Modular Framework for Processing and Visualization of Complex Geometric Models

OpenFlipper is an open-source framework for processing and visualization of complex geometric models suitable for software development in both research and commercial applications. In this paper we describe in detail the software architecture, which is designed to provide a high degree of modularity and adaptability for various purposes. Although OpenFlipper originates in the field of geometry processing, many emerging applications in this domain increasingly rely on immersion technologies. Consequently, the presented software is, unlike most existing VR software frameworks, mainly intended to be used for the content creation and processing of virtual environments while directly providing a variety of immersion techniques. By keeping OpenFlipper’s core as simple as possible and implementing functional components as plugins, the framework’s structure allows for easy extensions, replacements, and bundling. We particularly focus on the description of the integrated rendering pipeline that addresses the requirements of flexible, modern high-end graphics applications. Furthermore, we describe how cross-platform unit and smoke testing as well as continuous integration are implemented in order to guarantee that new code revisions remain portable and regression is minimized. OpenFlipper is licensed under the Lesser GNU Public License and available, up to this state, for Linux, Windows, and Mac OS X.
