Publications

DINO in the Room: Leveraging 2D Foundation Models for 3D Segmentation

Vision foundation models (VFMs) trained on large-scale image datasets provide high-quality features that have significantly advanced 2D visual recognition. However, their potential in 3D scene segmentation remains largely untapped, despite the common availability of 2D images alongside 3D point cloud datasets. While significant research has been dedicated to 2D-3D fusion, recent state-of-the-art 3D methods predominantly focus on 3D data, leaving the integration of VFMs into 3D models underexplored. In this work, we challenge this trend by introducing DITR, a generally applicable approach that extracts 2D foundation model features, projects them to 3D, and finally injects them into a 3D point cloud segmentation model. DITR achieves state-of-the-art results on both indoor and outdoor 3D semantic segmentation benchmarks. To enable the use of VFMs even when images are unavailable during inference, we additionally propose to pretrain 3D models by distilling 2D foundation models. By initializing the 3D backbone with knowledge distilled from 2D VFMs, we create a strong basis for downstream 3D segmentation tasks, ultimately boosting performance across various datasets.

@InProceedings{abouzeid2025ditr,
  title = {{DINO} in the Room: Leveraging {2D} Foundation Models for {3D} Segmentation},
  author = {Knaebel, Karim and Yilmaz, Kadir and de Geus, Daan and Hermans, Alexander and Adrian, David and Linder, Timm and Leibe, Bastian},
  booktitle = {2026 International Conference on 3D Vision (3DV)},
  year = {2026}
}

Beyond Words: The Impact of Static and Animated Faces as Visual Cues on Memory Performance and Listening Effort during Two-Talker Conversations

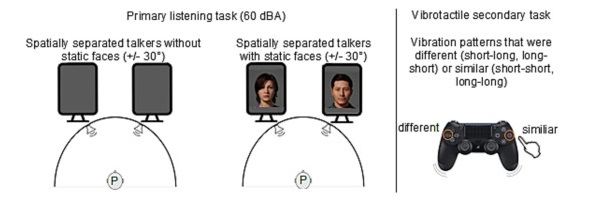

Listening to a conversation between two talkers and recalling the information is a common goal in verbal communication. However, cognitive-psychological experiments on short-term memory performance often rely on rather simple stimulus material, such as unrelated word lists or isolated sentences. The present study instead used running speech, in the form of a two-talker conversation, to investigate whether talker-related visual cues enhance short-term memory performance and reduce listening effort in non-noisy listening settings. In two equivalent dual-task experiments, participants listened to interrelated sentences spoken by two alternating talkers from two spatial positions, with talker-related visual cues presented as either static faces (Experiment 1, n = 30) or animated faces with lip sync (Experiment 2, n = 28). After each conversation, participants answered content-related questions as a measure of short-term memory (via the Heard Text Recall task). While listening, they performed a vibrotactile pattern recognition task to assess listening effort. Visual cue conditions (static or animated faces) were compared within-subject to a baseline condition without faces. To account for inter-individual variability, we measured individual working memory capacity, processing speed, and attentional functions and included them as cognitive covariates. After controlling for these covariates, results indicated that neither static nor animated faces improved short-term memory performance for conversational content. However, static faces reduced listening effort, whereas animated faces increased it, as indicated by secondary-task RTs. Participants' subjective ratings mirrored these behavioral results. Furthermore, working memory capacity was associated with short-term memory performance, and processing speed was associated with listening effort, the latter reflected in performance on the vibrotactile secondary task.
In conclusion, this study demonstrates that visual cues influence listening effort and that individual differences in working memory and processing speed help explain variability in task performance, even in optimal listening conditions.

@article{MOHANATHASAN2026106295,
  title = {Beyond words: The impact of static and animated faces as visual cues on memory performance and listening effort during two-talker conversations},
  journal = {Acta Psychologica},
  volume = {263},
  pages = {106295},
  year = {2026},
  issn = {0001-6918},
  doi = {https://doi.org/10.1016/j.actpsy.2026.106295},
  url = {https://www.sciencedirect.com/science/article/pii/S0001691826000946},
  author = {Chinthusa Mohanathasan and Plamenna B. Koleva and Jonathan Ehret and Andrea Bönsch and Janina Fels and Torsten W. Kuhlen and Sabine J. Schlittmeier}
}
