Publications

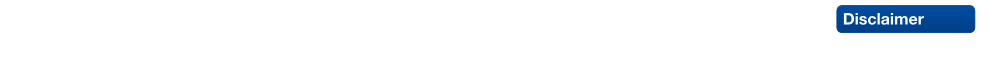

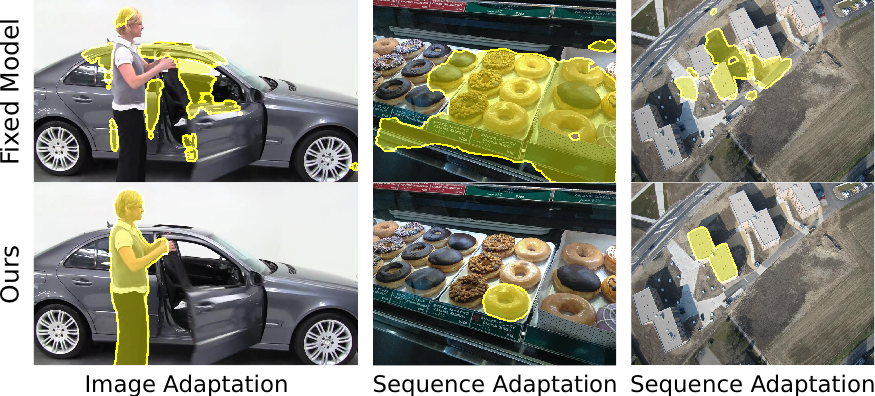

Fine-Tuning Image-Conditional Diffusion Models is Easier than You Think

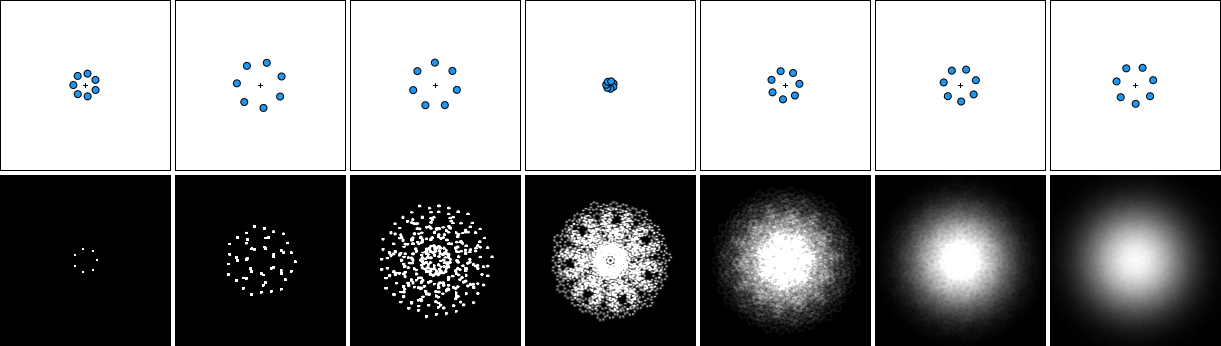

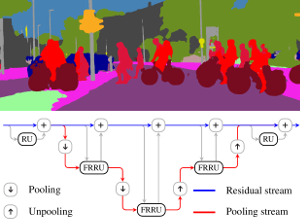

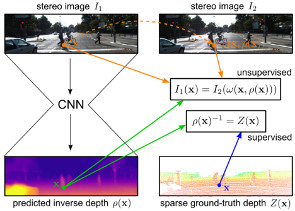

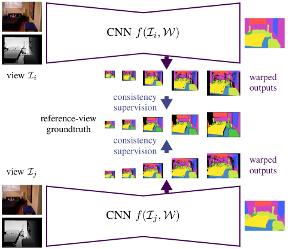

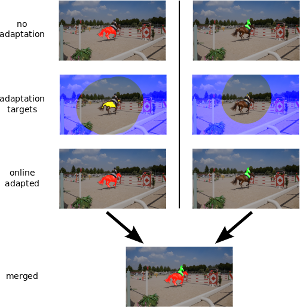

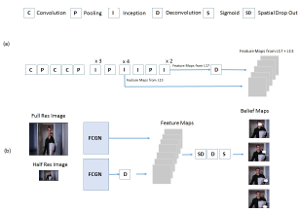

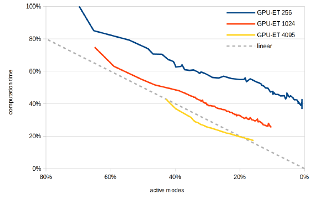

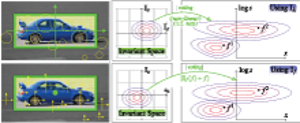

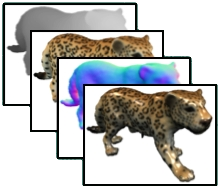

Recent work showed that large diffusion models can be reused as highly precise monocular depth estimators by casting depth estimation as an image-conditional image generation task. While the proposed model achieved state-of-the-art results, high computational demands due to multi-step inference limited its use in many scenarios. In this paper, we show that the perceived inefficiency was caused by a flaw in the inference pipeline that has so far gone unnoticed. The fixed model performs comparably to the best previously reported configuration while being more than 200x faster. To optimize for downstream task performance, we perform end-to-end fine-tuning on top of the single-step model with task-specific losses and get a deterministic model that outperforms all other diffusion-based depth and normal estimation models on common zero-shot benchmarks. We surprisingly find that this fine-tuning protocol also works directly on Stable Diffusion and achieves comparable performance to current state-of-the-art diffusion-based depth and normal estimation models, calling into question some of the conclusions drawn from prior works.

@article{martingarcia2024diffusione2eft,

title = {Fine-Tuning Image-Conditional Diffusion Models is Easier than You Think},

author = {Martin Garcia, Gonzalo and Abou Zeid, Karim and Schmidt, Christian and de Geus, Daan and Hermans, Alexander and Leibe, Bastian},

journal = {arXiv preprint arXiv:2409.11355},

year = {2024}

}

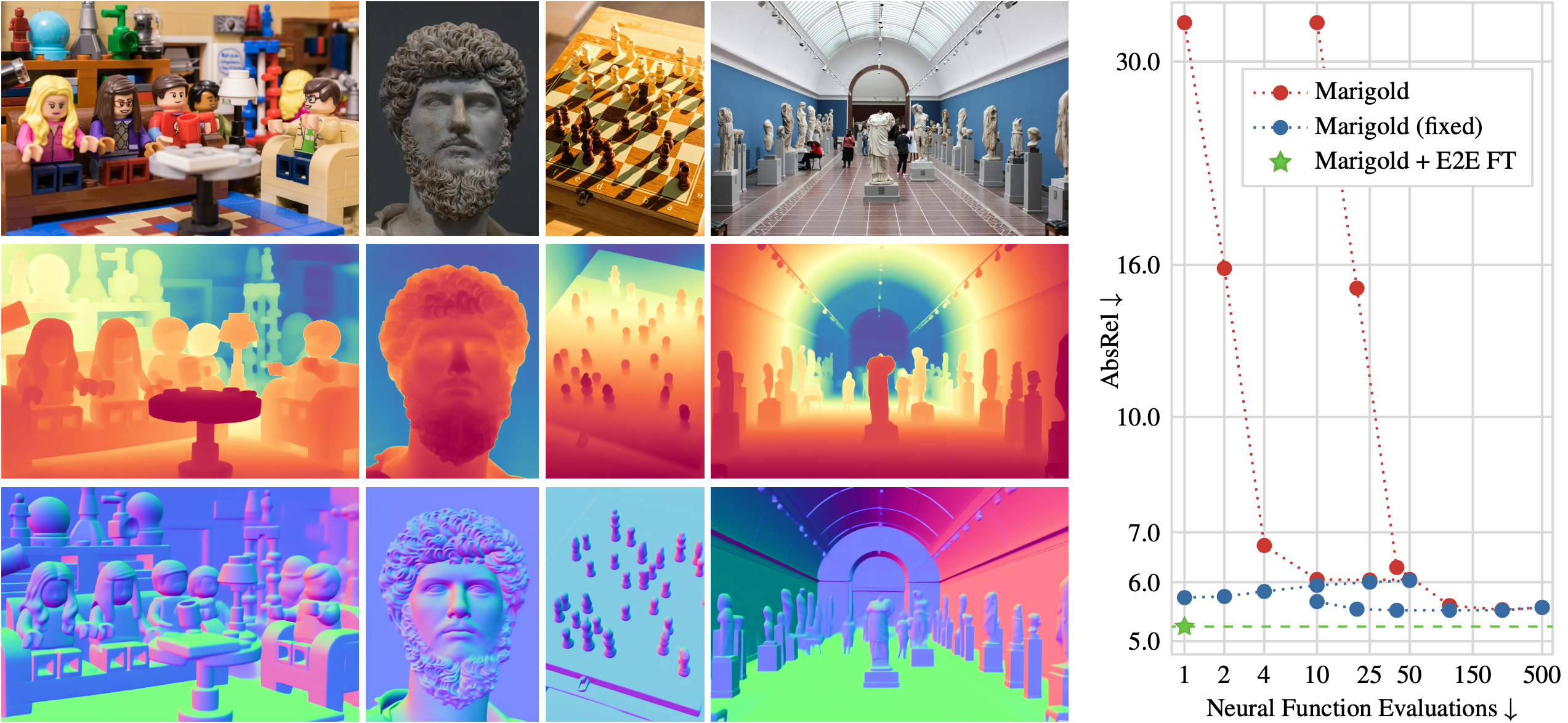

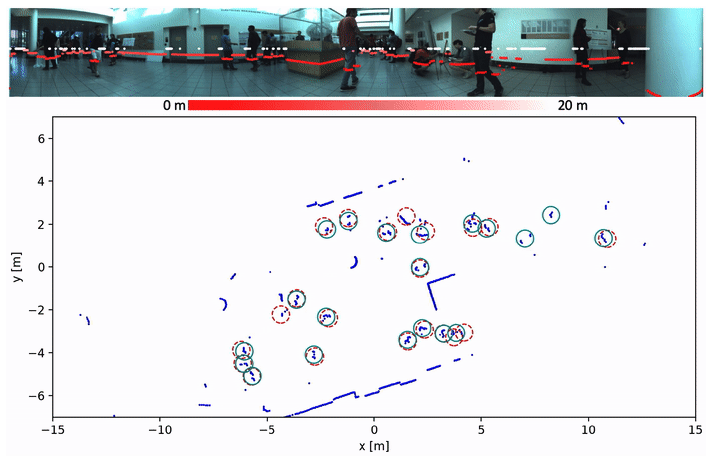

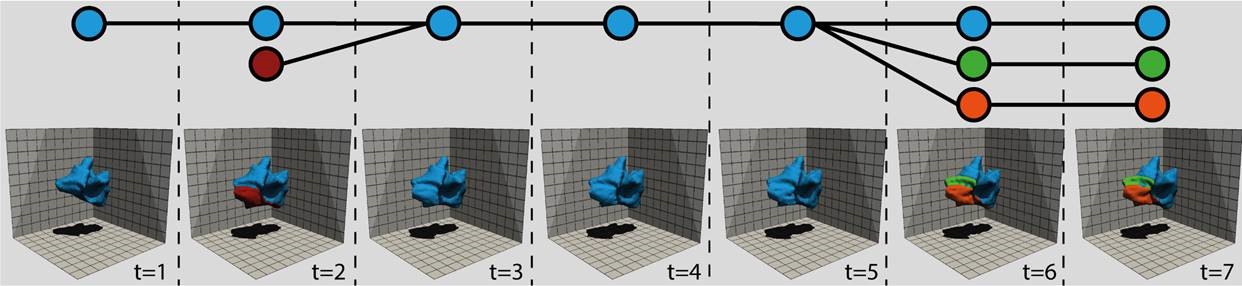

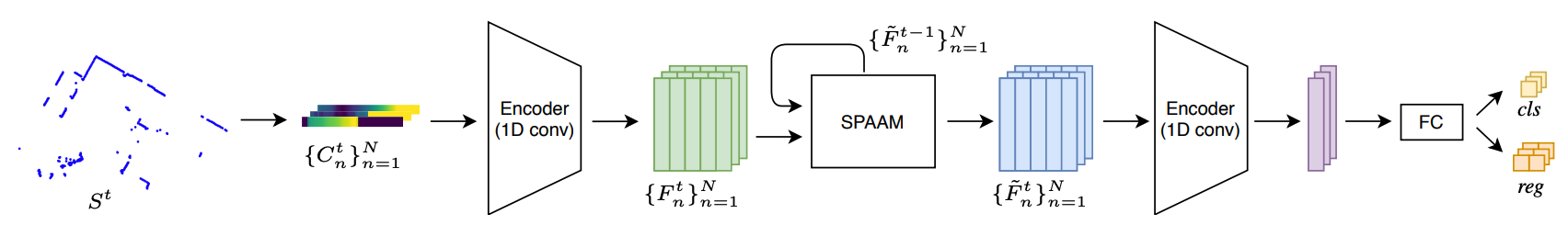

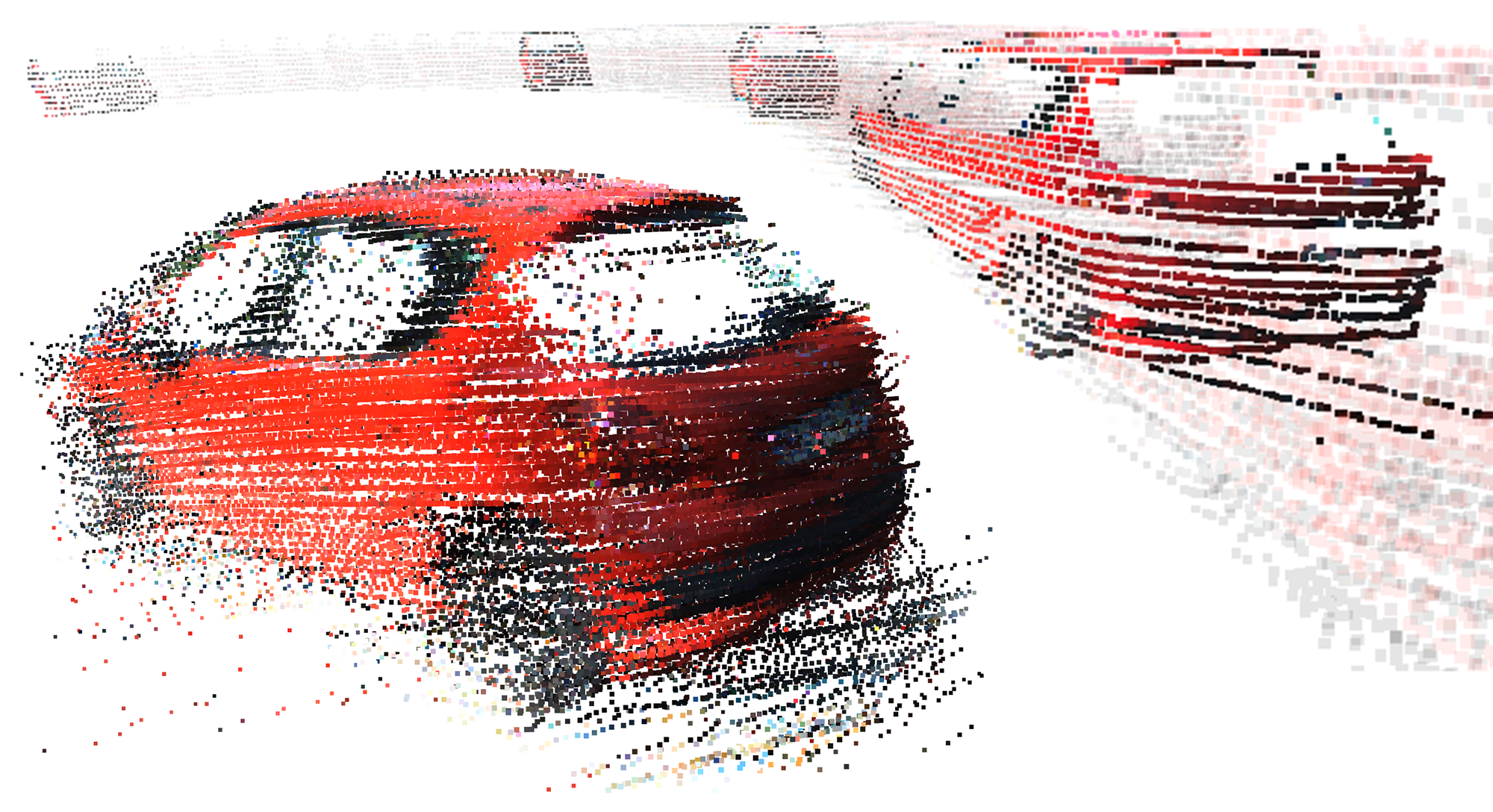

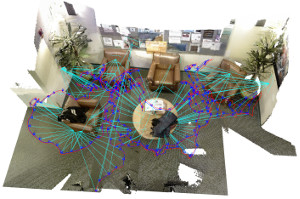

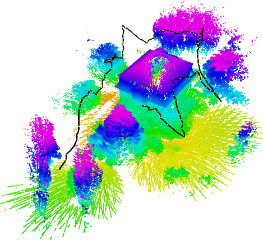

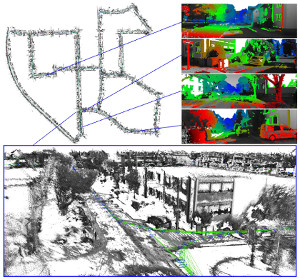

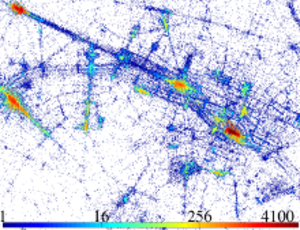

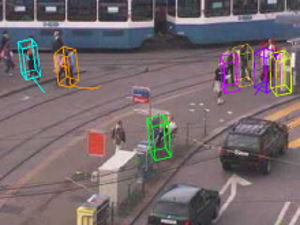

Interactive4D: Interactive 4D LiDAR Segmentation

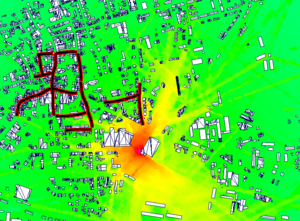

Interactive segmentation has an important role in facilitating the annotation process of future LiDAR datasets. Existing approaches sequentially segment individual objects at each LiDAR scan, repeating the process throughout the entire sequence, which is redundant and ineffective. In this work, we propose interactive 4D segmentation, a new paradigm that allows segmenting multiple objects on multiple LiDAR scans simultaneously, and Interactive4D, the first interactive 4D segmentation model that segments multiple objects on superimposed consecutive LiDAR scans in a single iteration by utilizing the sequential nature of LiDAR data. While performing interactive segmentation, our model leverages the entire space-time volume, leading to more efficient segmentation. Operating on the 4D volume, it directly provides consistent instance IDs over time and also simplifies tracking annotations. Moreover, we show that click simulations are crucial for successful model training on LiDAR point clouds. To this end, we design a click simulation strategy that is better suited for the characteristics of LiDAR data. To demonstrate its accuracy and effectiveness, we evaluate Interactive4D on multiple LiDAR datasets, where Interactive4D achieves a new state-of-the-art by a large margin.

@article{fradlin2024interactive4d,

title = {{Interactive4D: Interactive 4D LiDAR Segmentation}},

author = {Fradlin, Ilya and Zulfikar, Idil Esen and Yilmaz, Kadir and Kontogianni, Theodora and Leibe, Bastian},

journal = {arXiv preprint arXiv:2410.08206},

year = {2024}

}

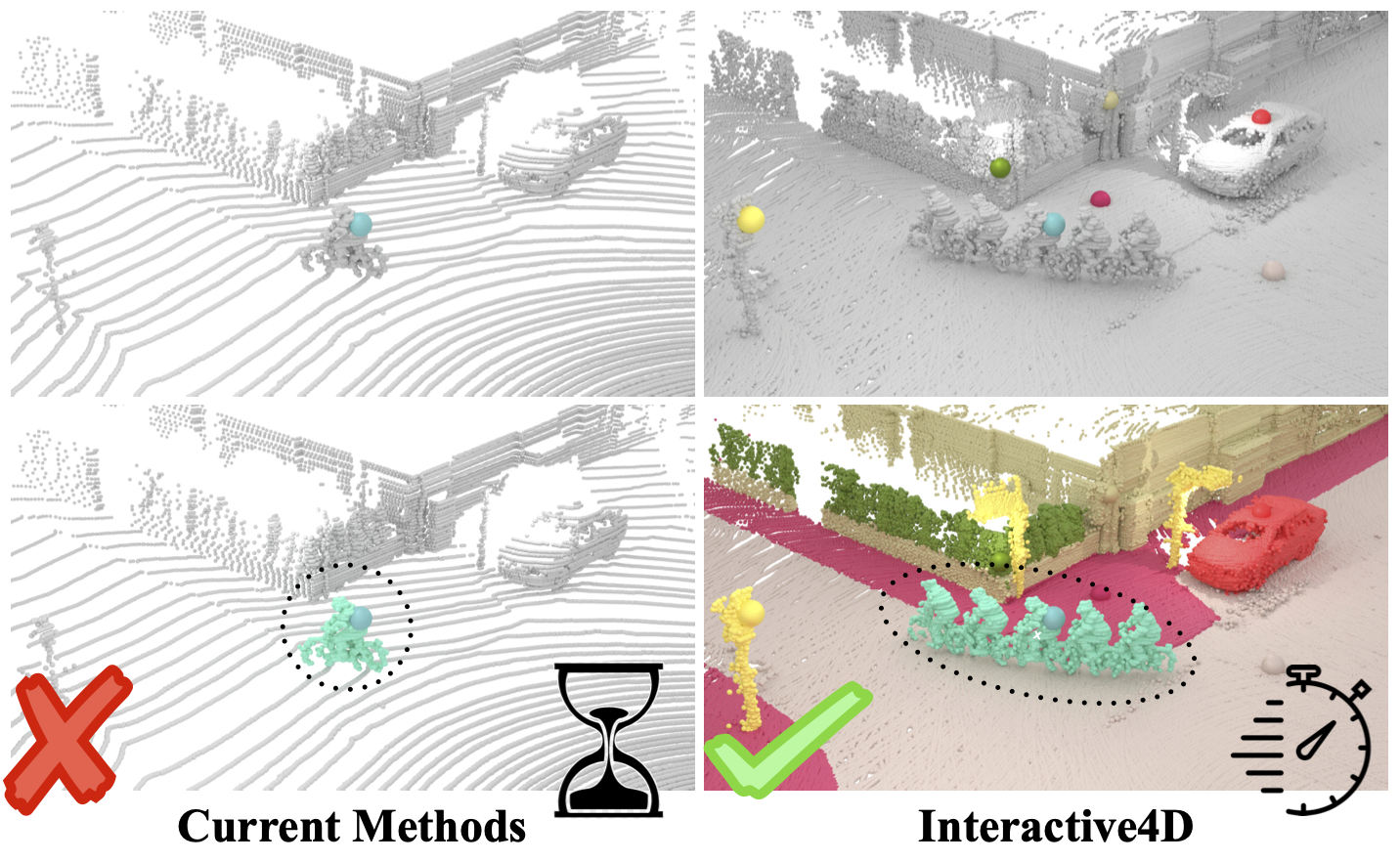

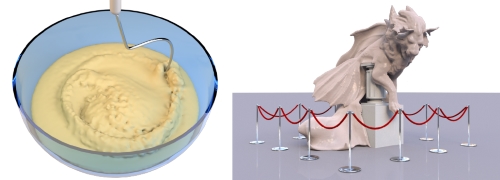

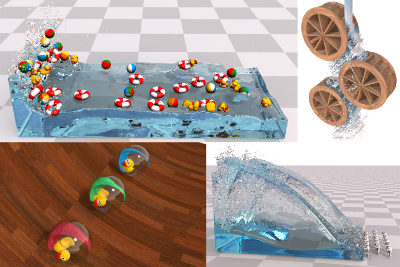

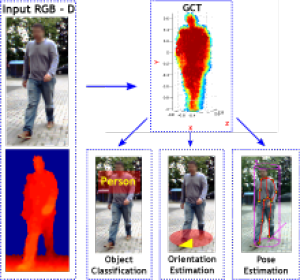

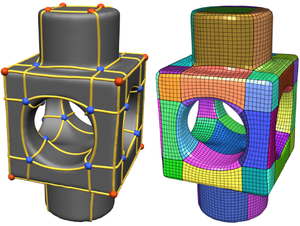

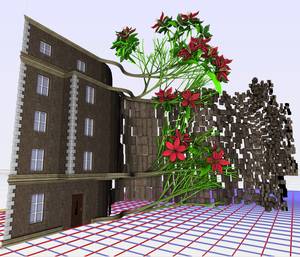

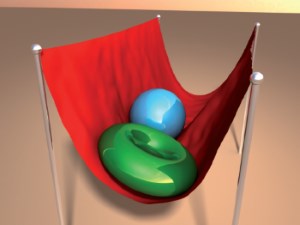

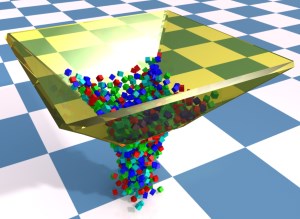

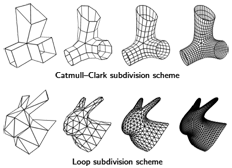

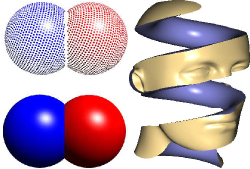

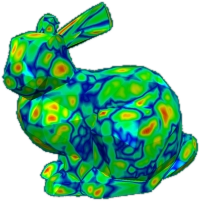

Multiphysics Simulation Methods in Computer Graphics

Physics simulation is a cornerstone of many computer graphics applications, ranging from video games and virtual reality to visual effects and computational design. The number of techniques for physically-based modeling and animation has thus skyrocketed over the past few decades, facilitating the simulation of a wide variety of materials and physical phenomena. This report captures the state-of-the-art of multiphysics simulation for computer graphics applications. Although a lot of work has focused on simulating individual phenomena, here we put an emphasis on methods developed by the computer graphics community for simulating various physical phenomena and materials, as well as the interactions between them. These include combinations of discretization schemes, mathematical modeling frameworks, and coupling techniques. For the most commonly used methods we provide an overview of the state-of-the-art and deliver valuable insights into the various approaches. A selection of software frameworks that offer out-of-the-box multiphysics modeling capabilities is also presented. Finally, we touch on emerging trends in physics-based animation that affect multiphysics simulation, including machine learning-based methods which have become increasingly popular in recent years.

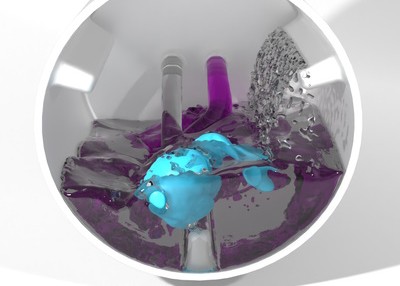

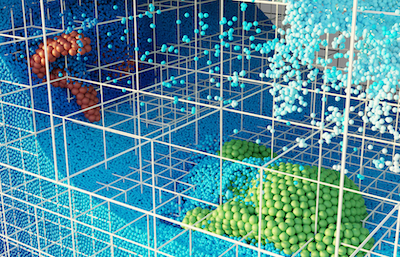

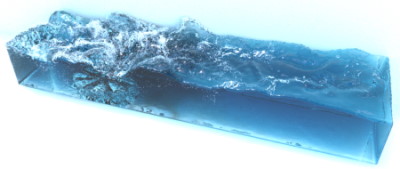

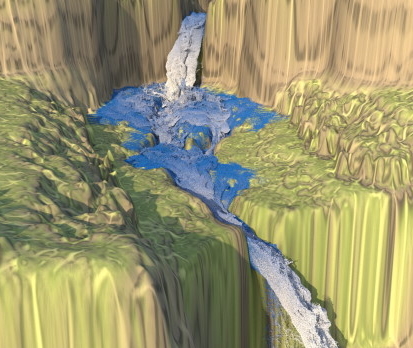

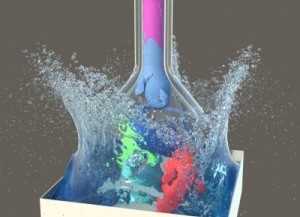

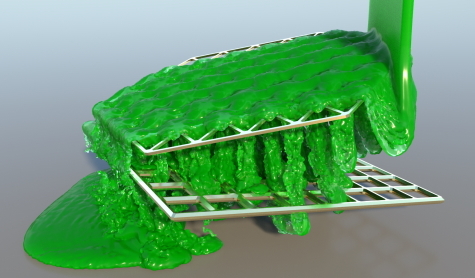

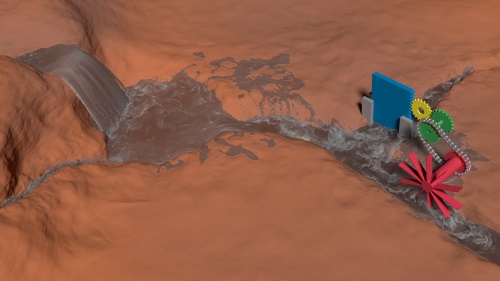

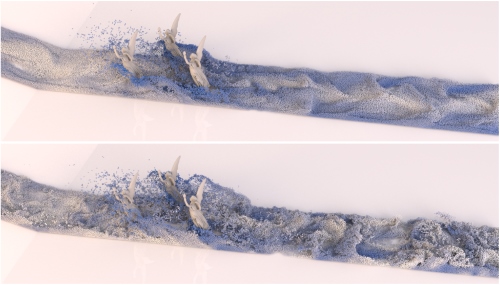

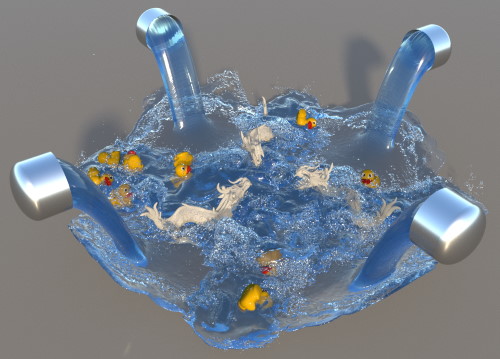

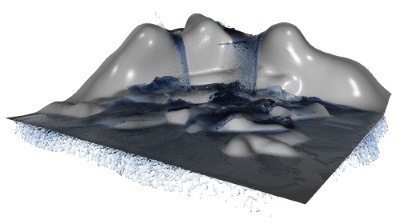

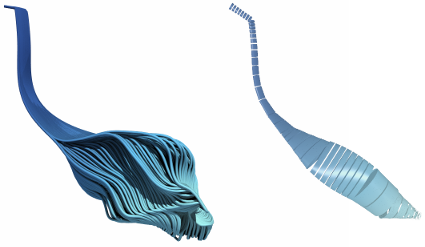

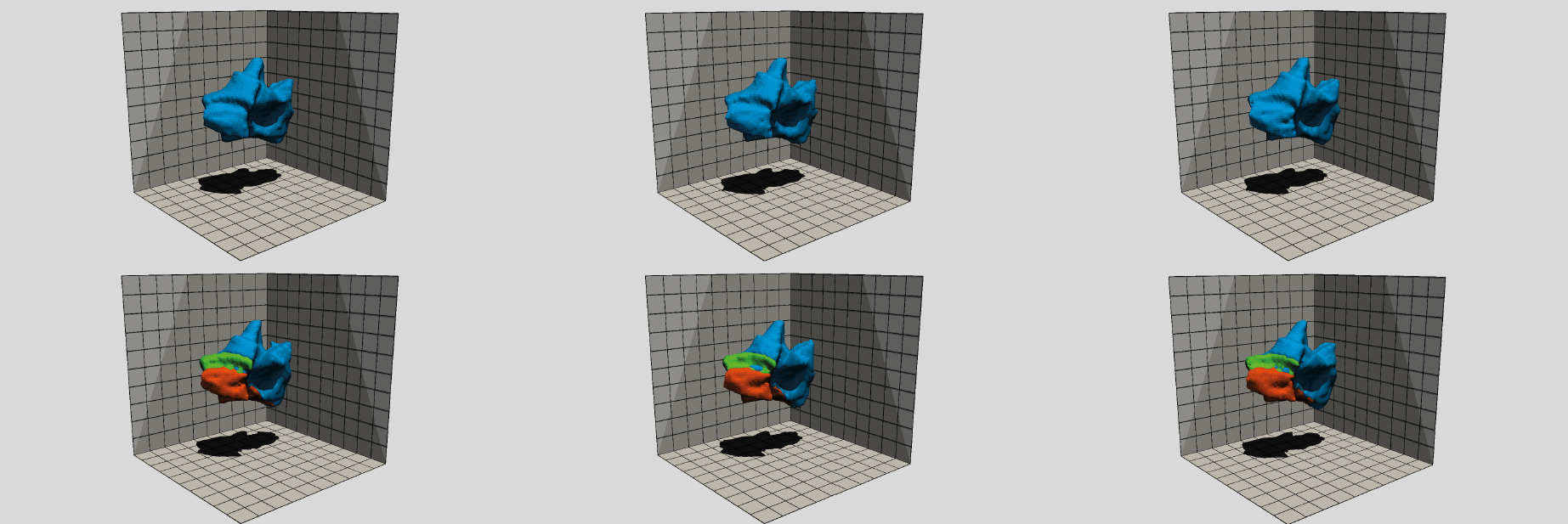

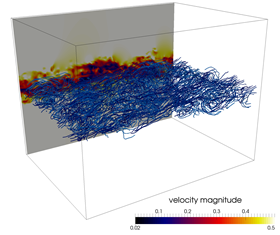

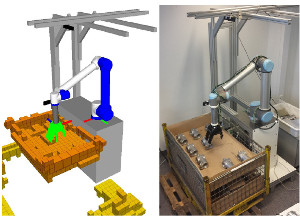

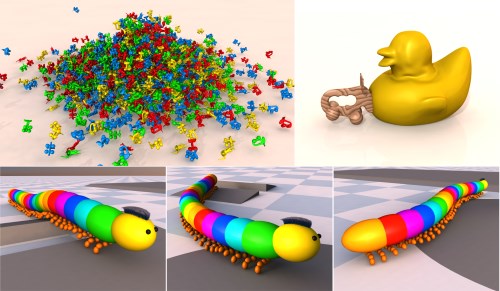

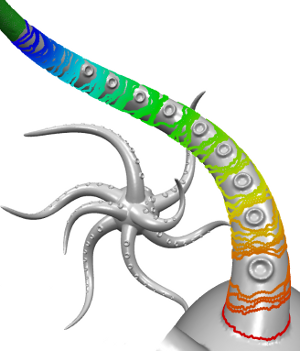

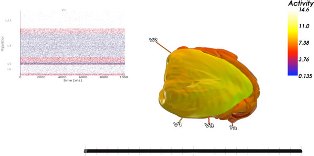

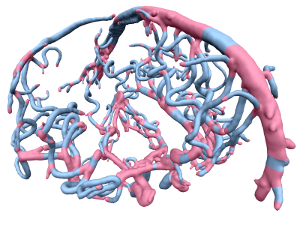

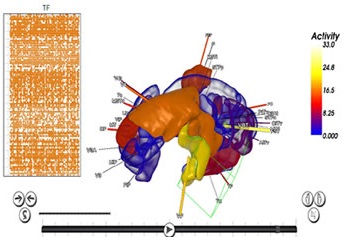

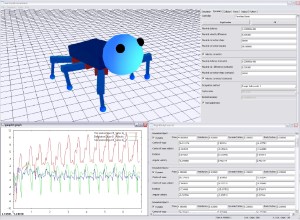

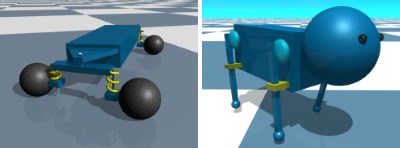

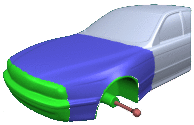

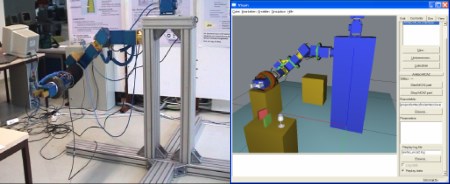

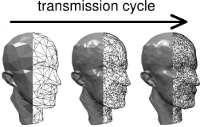

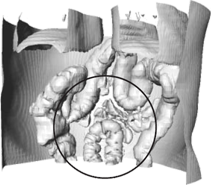

A Smoothed Particle Hydrodynamics framework for fluid simulation in robotics

Simulation is a core component of robotics workflows that can shed light, in silico, on the complex interplay between a physical body, the environment, and sensory feedback mechanisms. To this end, several simulation methods originating in rigid body dynamics and in continuum mechanics have been employed, enabling the simulation of a plethora of phenomena such as rigid/soft body dynamics, fluid dynamics, muscle simulation, and sensor and actuator dynamics. The physics engines commonly employed in robotics simulation focus on rigid body dynamics, whereas continuum mechanics methods excel at simulating phenomena where deformation plays a crucial role, keeping the two fields relatively separate. Here, we propose a paradigm shift that allows for the accurate simulation of fluids in interaction with rigid bodies within the same robotics simulation framework, based on the continuum mechanics-based Smoothed Particle Hydrodynamics method. The proposed framework is useful for simulations such as swimming robots with complex geometries, robots manipulating fluids, and even robots emitting highly viscous materials such as the ones used for 3D printing. Scenarios like swimming on the surface, air-water transitions, and locomotion on granular media can be natively simulated within the proposed framework. First, we present the overall architecture of our framework and give examples of a concrete software implementation. We then verify our approach by presenting one of the first simulations of its kind of self-propelled swimming robots with a smoothed particle hydrodynamics method and compare our simulations with real experiments. Finally, we propose a new category of simulations that would benefit from this approach and discuss ways that the sim-to-real gap could be further reduced.

@article{AAB+24,

title = {A smoothed particle hydrodynamics framework for fluid simulation in robotics},

journal = {Robotics and Autonomous Systems},

volume = {185},

year = {2025},

issn = {0921-8890},

doi = {https://doi.org/10.1016/j.robot.2024.104885},

url = {https://www.sciencedirect.com/science/article/pii/S0921889024002690},

author = {Emmanouil Angelidis and Jonathan Arreguit and Jan Bender and Patrick Berggold and Ziyuan Liu and Alois Knoll and Alessandro Crespi and Auke J. Ijspeert}

}

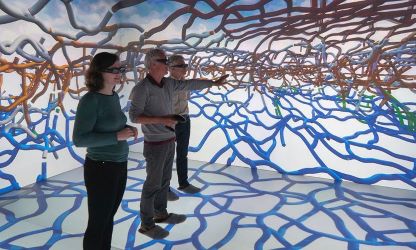

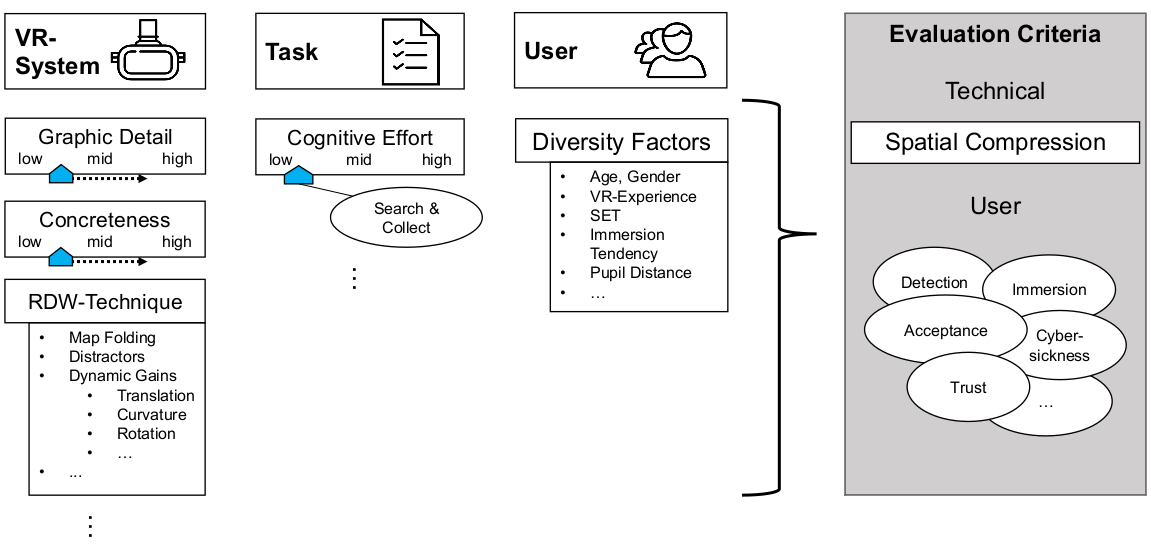

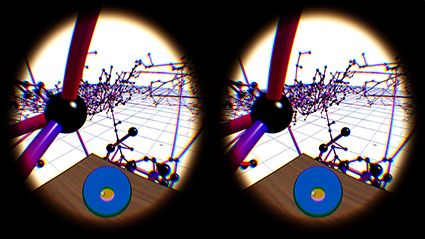

Front Matter: The Third Workshop on Locomotion and Wayfinding in XR (LocXR)

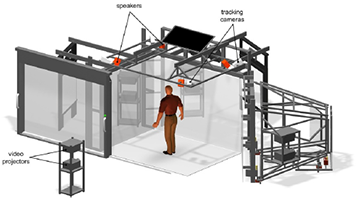

The Third Workshop on Locomotion and Wayfinding in XR, held in conjunction with IEEE VR 2025 in Saint-Malo, France, is dedicated to advancing research and fostering discussions around the critical topics of navigation in extended reality (XR). Navigation is a fundamental form of user interaction in XR, yet it poses numerous challenges in conceptual design, technical implementation, and systematic evaluation. By bringing together researchers and practitioners, this workshop aims to address these challenges and push the boundaries of what is achievable in XR navigation.

@inproceedings{Weissker2025,

author={T. {Weissker} and D. {Zielasko}},

booktitle={2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={The Third Workshop on Locomotion and Wayfinding in XR (LocXR)},

year={2025},

volume={},

number={},

pages={239-240},

doi={10.1109/VRW66409.2025.00058}

}

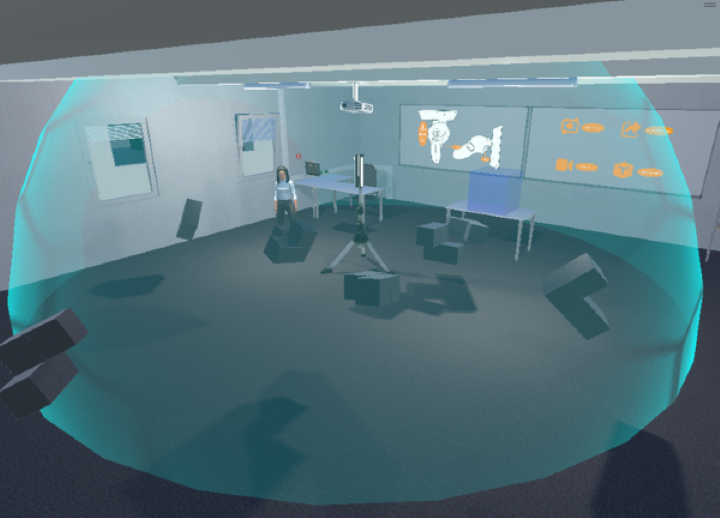

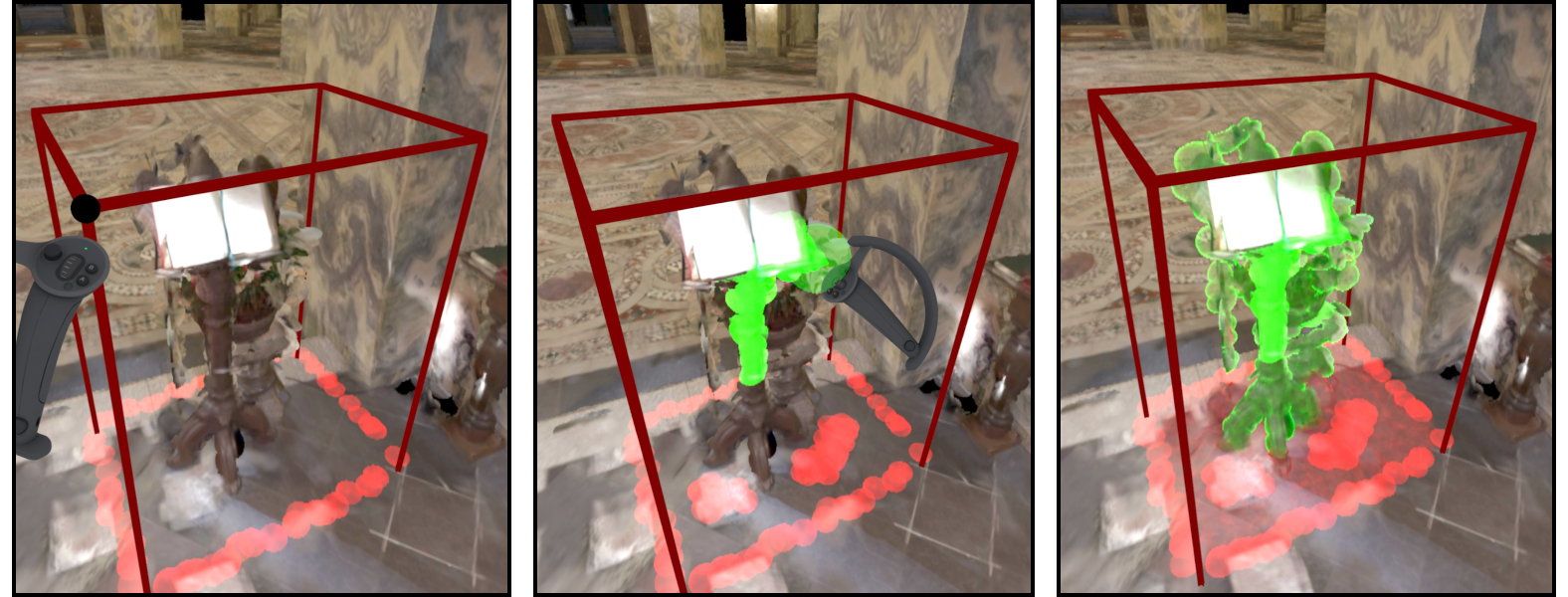

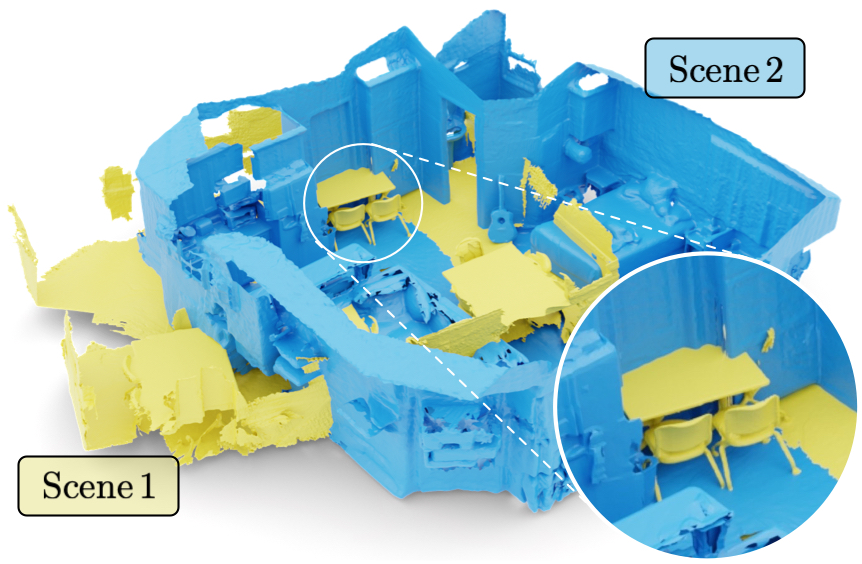

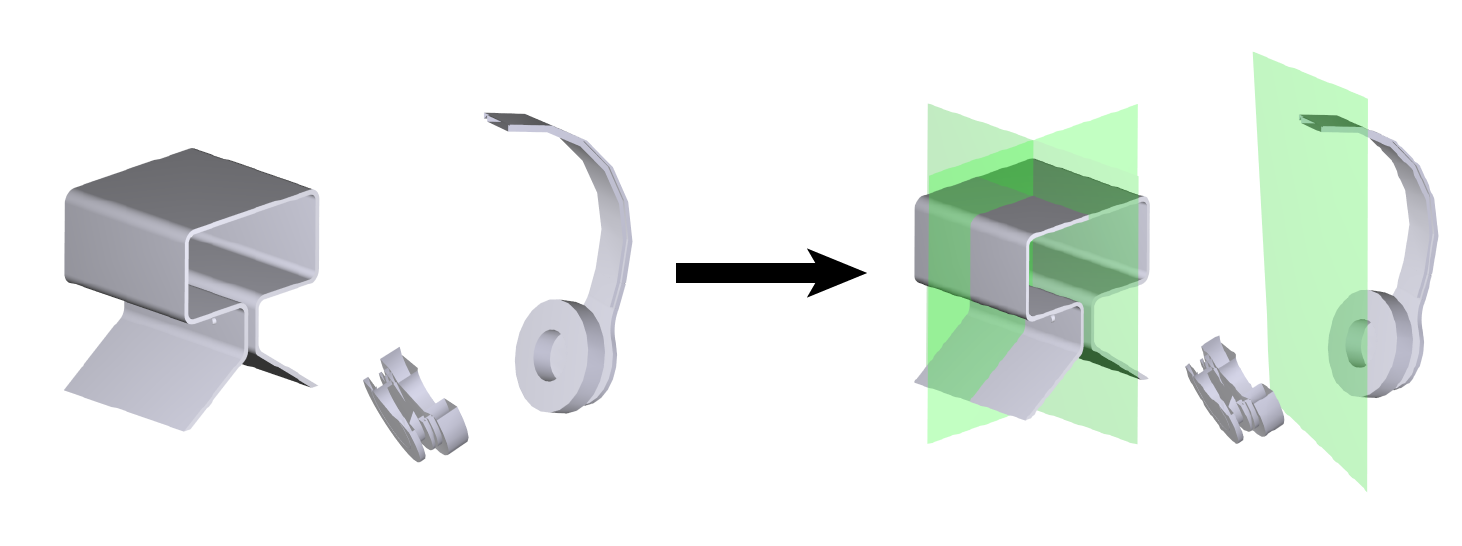

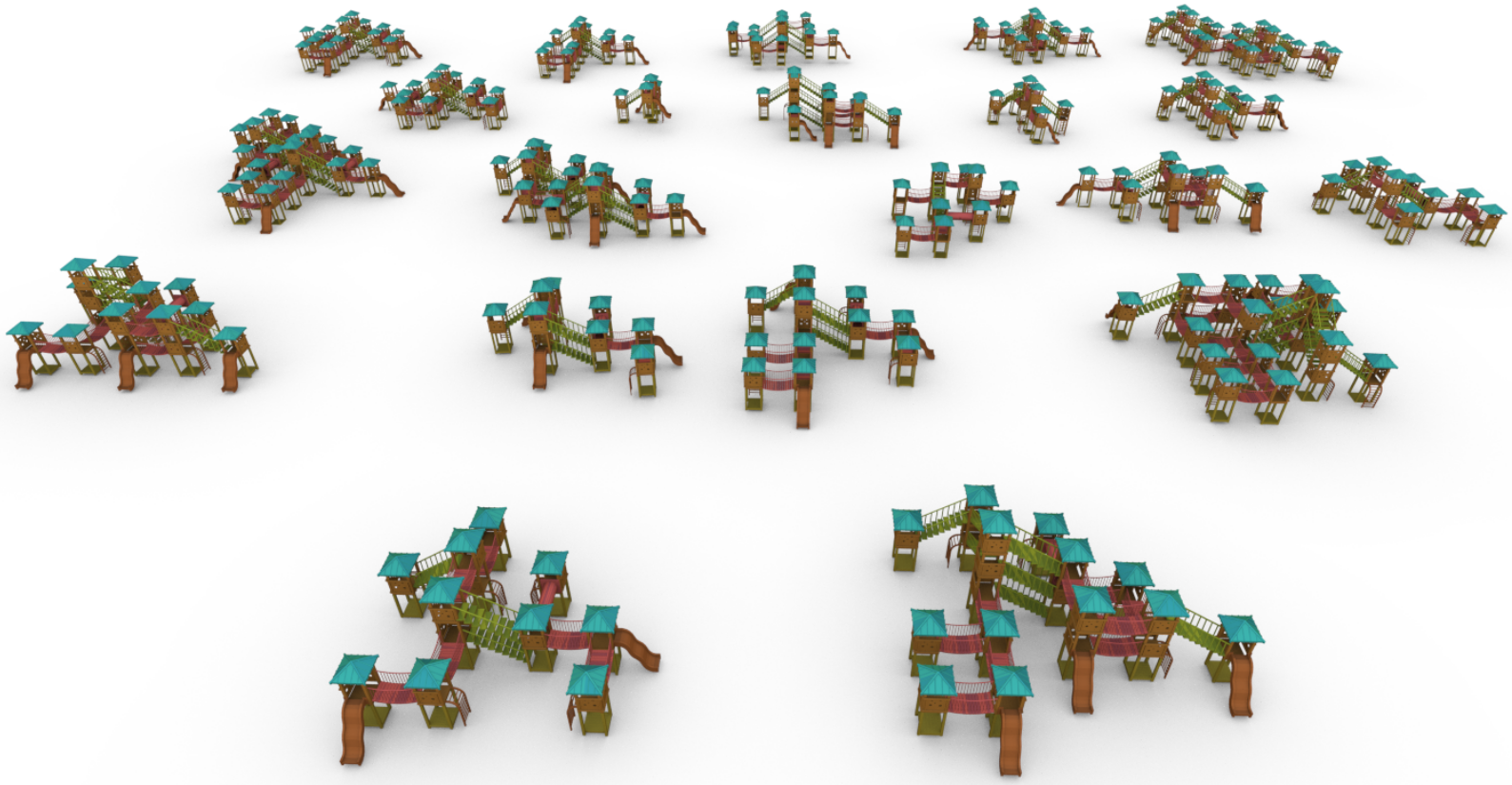

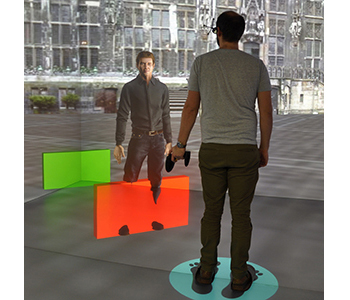

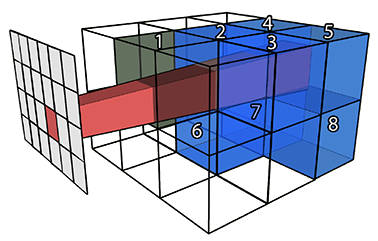

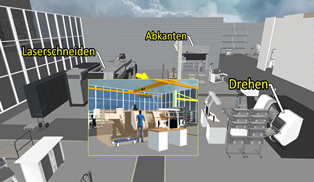

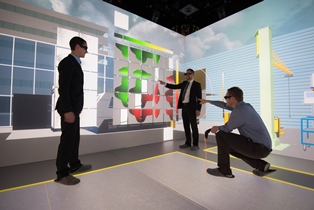

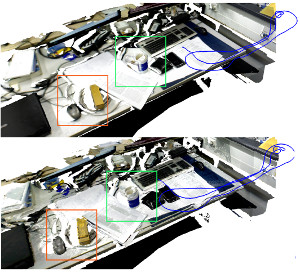

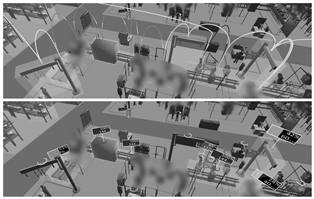

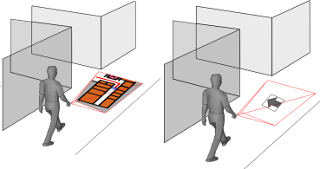

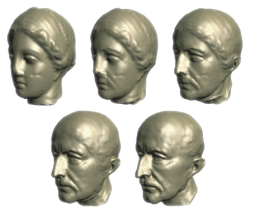

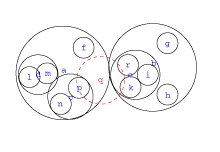

PASCAL - A Collaboration Technique Between Non-Collocated Avatars in Large Collaborative Virtual Environments

Collaborative work in large virtual environments often requires transitions from loosely-coupled collaboration at different locations to tightly-coupled collaboration at a common meeting point. Inspired by prior work on the continuum between these extremes, we present two novel interaction techniques designed to share spatial context while collaborating over large virtual distances. The first method replicates the familiar setup of a video conference by providing users with a virtual tablet to share video feeds with their peers. The second method called PASCAL (Parallel Avatars in a Shared Collaborative Aura Link) enables users to share their immediate spatial surroundings with others by creating synchronized copies of it at the remote locations of their collaborators. We evaluated both techniques in a within-subject user study, in which 24 participants were tasked with solving a puzzle in groups of two. Our results indicate that the additional contextual information provided by PASCAL had significantly positive effects on task completion time, ease of communication, mutual understanding, and co-presence. As a result, our insights contribute to the repertoire of successful interaction techniques to mediate between loosely- and tightly-coupled work in collaborative virtual environments.

@article{Gilbert2025,

author={D. {Gilbert} and A. {Bose} and T. {Kuhlen} and T. {Weissker}},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={PASCAL - A Collaboration Technique Between Non-Collocated Avatars in Large Collaborative Virtual Environments},

year={2025},

volume={31},

number={5},

pages={1268-1278},

doi={10.1109/TVCG.2025.3549175}

}

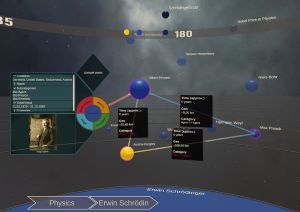

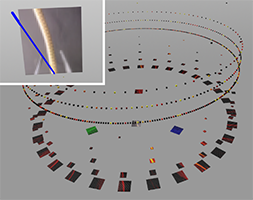

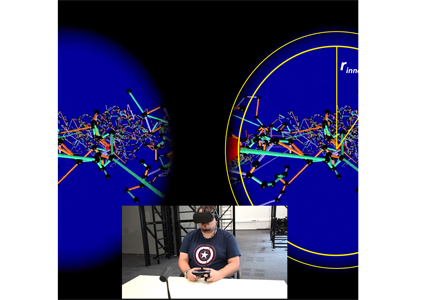

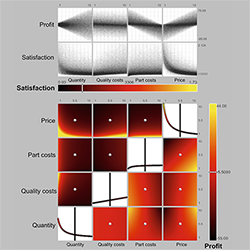

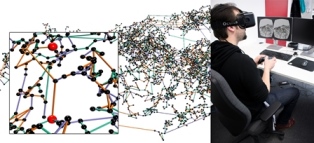

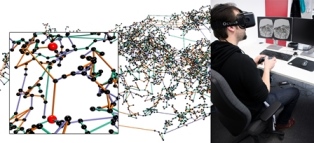

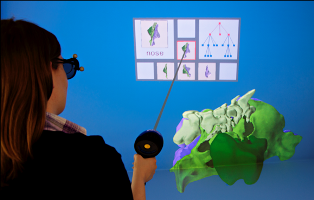

Minimalism or Creative Chaos? On the Arrangement and Analysis of Numerous Scatterplots in Immersive 3D Knowledge Spaces

Working with scatterplots is a classic everyday task for data analysts, which gets increasingly complex the more plots are required to form an understanding of the underlying data. To help analysts retrieve relevant plots more quickly when they are needed, immersive virtual environments (iVEs) provide them with the option to freely arrange scatterplots in the 3D space around them. In this paper, we investigate the impact of different virtual environments on the users' ability to quickly find and retrieve individual scatterplots from a larger collection. We tested three different scenarios, all having in common that users were able to position the plots freely in space according to their own needs, but each providing them with varying numbers of landmarks serving as visual cues: an Empty scene as a baseline condition, a single landmark condition with one prominent visual cue being a Desk, and a multiple landmarks condition being a virtual Office. Results from a between-subject investigation with 45 participants indicate that the time and effort users invest in arranging their plots within an iVE had a greater impact on memory performance than the design of the iVE itself. We report on the individual arrangement strategies that participants used to solve the task effectively and underline the importance of an active arrangement phase for supporting the spatial memorization of scatterplots in iVEs.

@article{Derksen2025,

author={M. {Derksen} and T. {Kuhlen} and M. {Botsch} and T. {Weissker}},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Minimalism or Creative Chaos? On the Arrangement and Analysis of Numerous Scatterplots in Immersive 3D Knowledge Spaces},

year={2025},

volume={31},

number={5},

pages={746-756},

doi={10.1109/TVCG.2025.3549546}

}

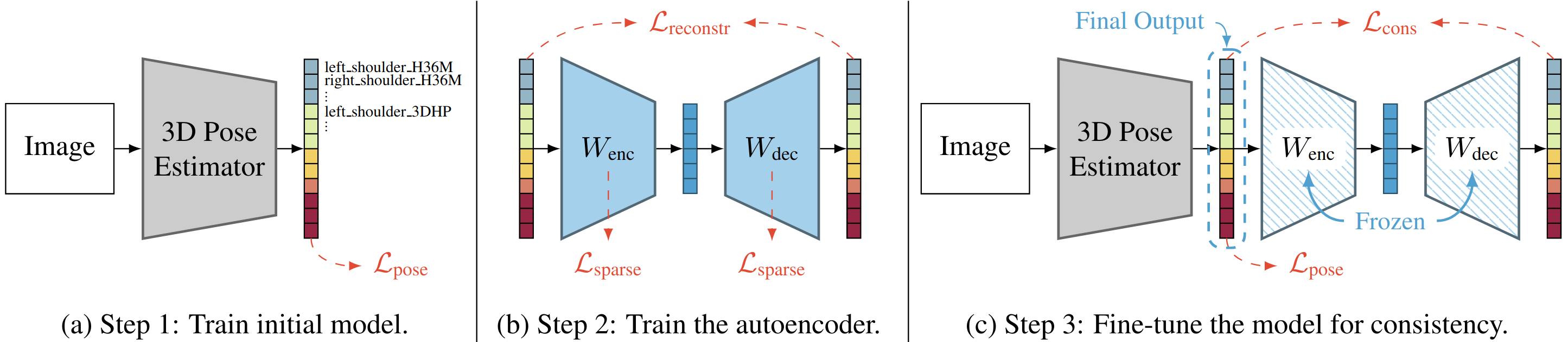

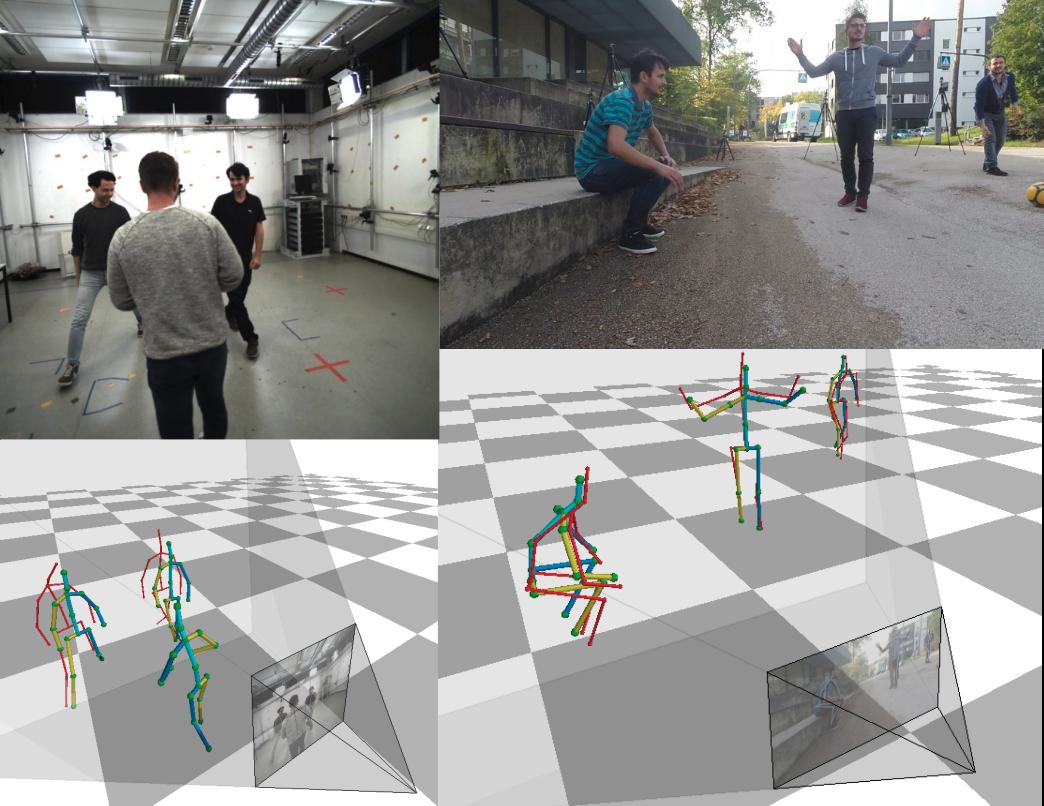

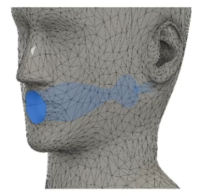

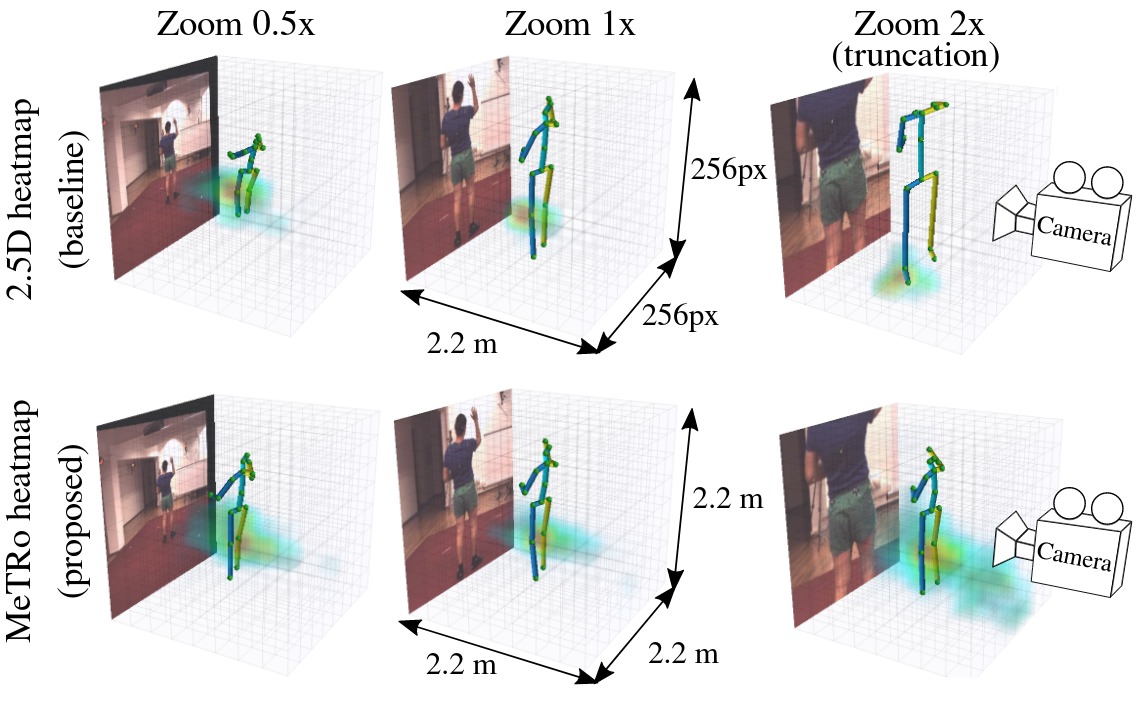

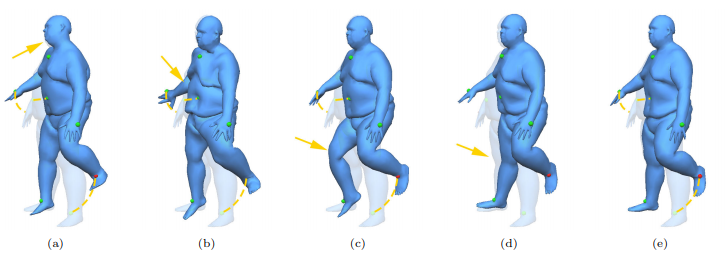

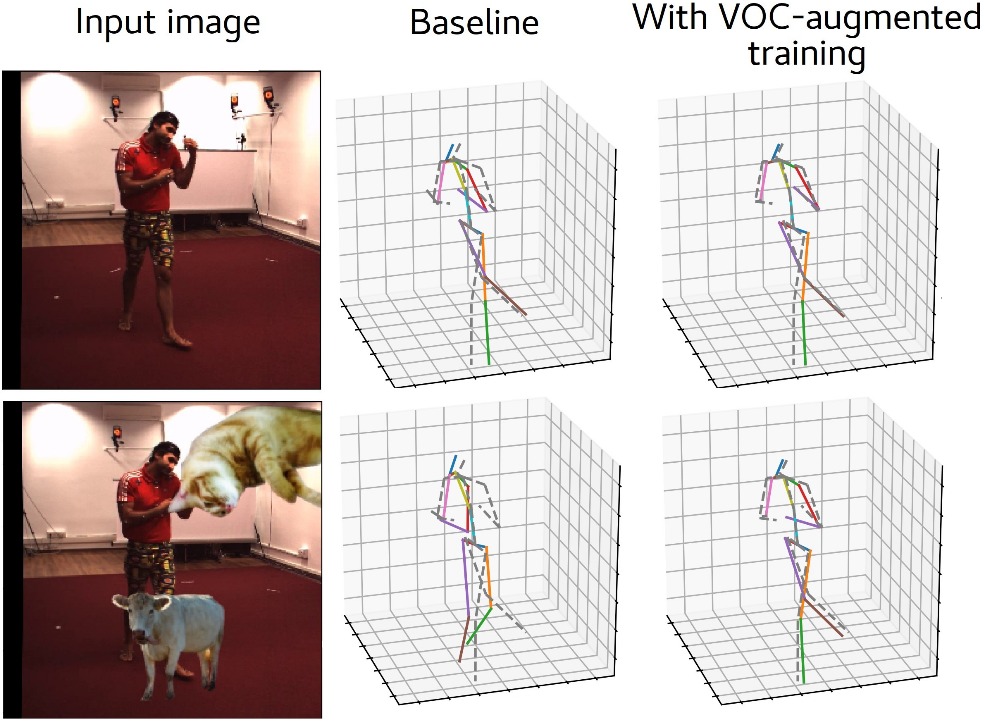

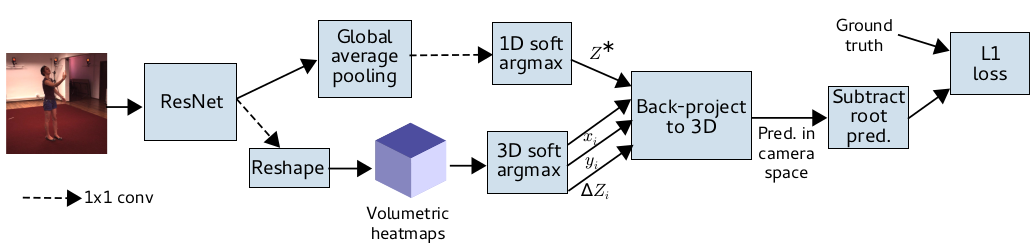

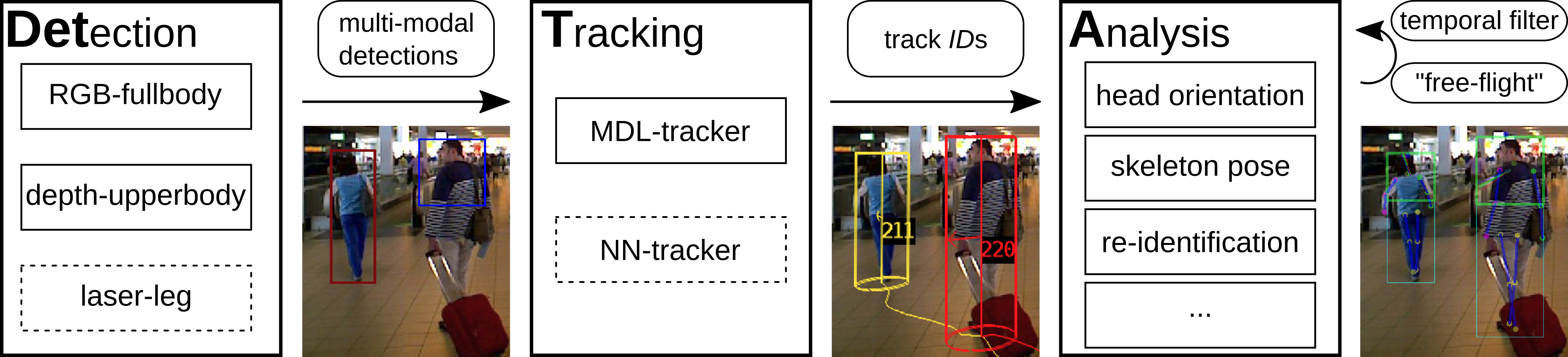

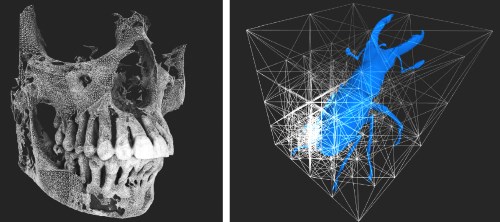

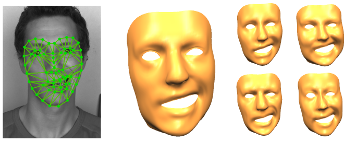

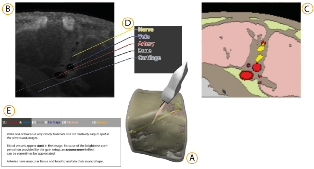

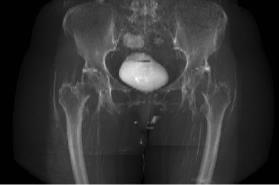

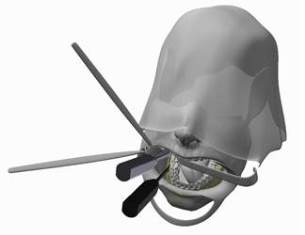

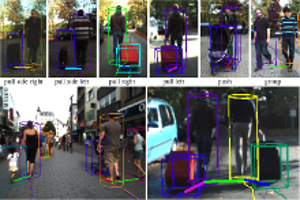

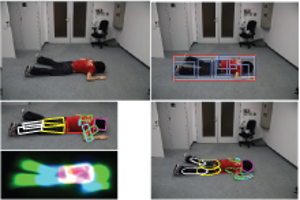

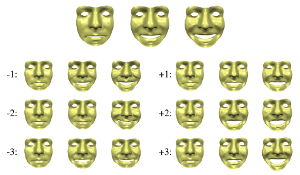

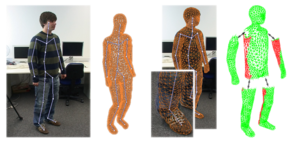

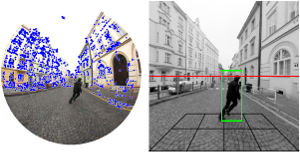

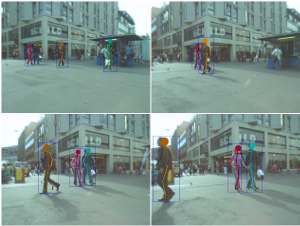

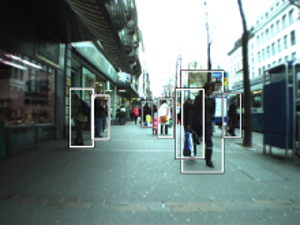

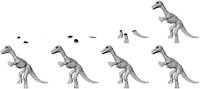

Systematic Evaluation of Different Projection Methods for Monocular 3D Human Pose Estimation on Heavily Distorted Fisheye Images

Authors: Stephanie Käs, Sven Peter, Henrik Thillmann, Anton Burenko, Timm Linder, David Adrian, Dennis Mack, and Bastian Leibe

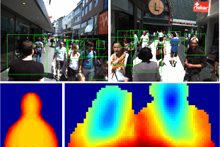

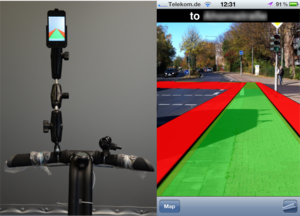

In this work, we tackle the challenge of 3D human pose estimation in fisheye images, which is crucial for applications in robotics, human-robot interaction, and automotive perception. Fisheye cameras offer a wider field of view, but their distortions make pose estimation difficult. We systematically analyze how different camera models impact prediction accuracy and introduce a strategy to improve pose estimation across diverse viewing conditions.

A key contribution of our work is FISHnCHIPS, a novel dataset featuring 3D human skeleton annotations in fisheye images, including extreme close-ups, ground-mounted cameras, and wide-FOV human poses. To support future research, we will be publicly releasing this dataset.

More details coming soon — stay tuned for the final publication! Looking forward to sharing our findings at ICRA 2025!

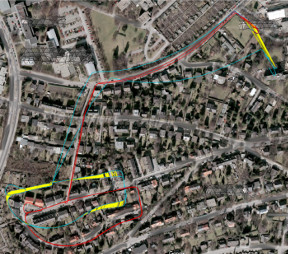

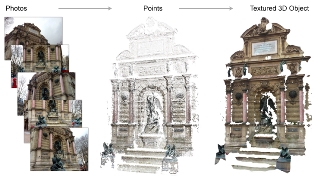

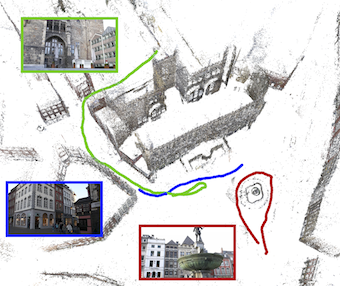

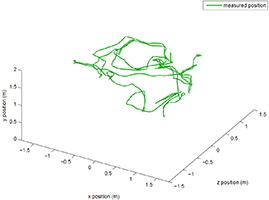

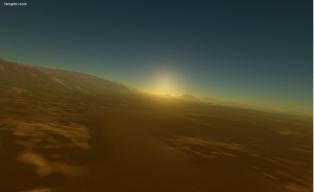

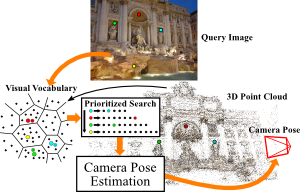

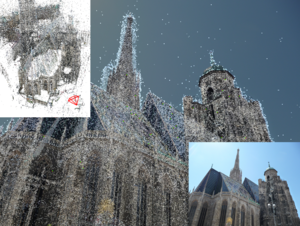

Look Gauss, No Pose: Novel View Synthesis using Gaussian Splatting without Accurate Pose Initialization

3D Gaussian Splatting has recently emerged as a powerful tool for fast and accurate novel-view synthesis from a set of posed input images. However, like most novel-view synthesis approaches, it relies on accurate camera pose information, limiting its applicability in real-world scenarios where acquiring accurate camera poses can be challenging or even impossible. We propose an extension to the 3D Gaussian Splatting framework by optimizing the extrinsic camera parameters with respect to photometric residuals. We derive the analytical gradients and integrate their computation with the existing high-performance CUDA implementation. This enables downstream tasks such as 6-DoF camera pose estimation as well as joint reconstruction and camera refinement. In particular, we achieve rapid convergence and high accuracy for pose estimation on real-world scenes. Our method enables fast reconstruction of 3D scenes without requiring accurate pose information by jointly optimizing geometry and camera poses, while achieving state-of-the-art results in novel-view synthesis. Our approach is considerably faster to optimize than most competing methods, and several times faster in rendering. We show results on real-world scenes and complex trajectories through simulated environments, achieving state-of-the-art results on LLFF while reducing runtime by two to four times compared to the most efficient competing method. Source code will be available at https://github.com/Schmiddo/noposegs.

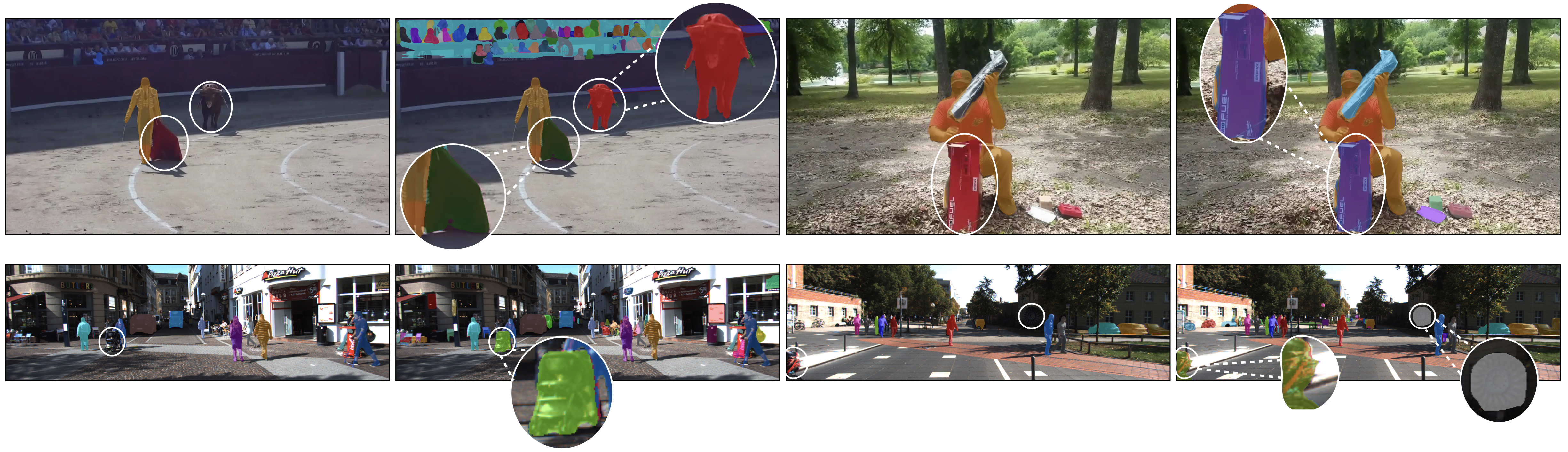

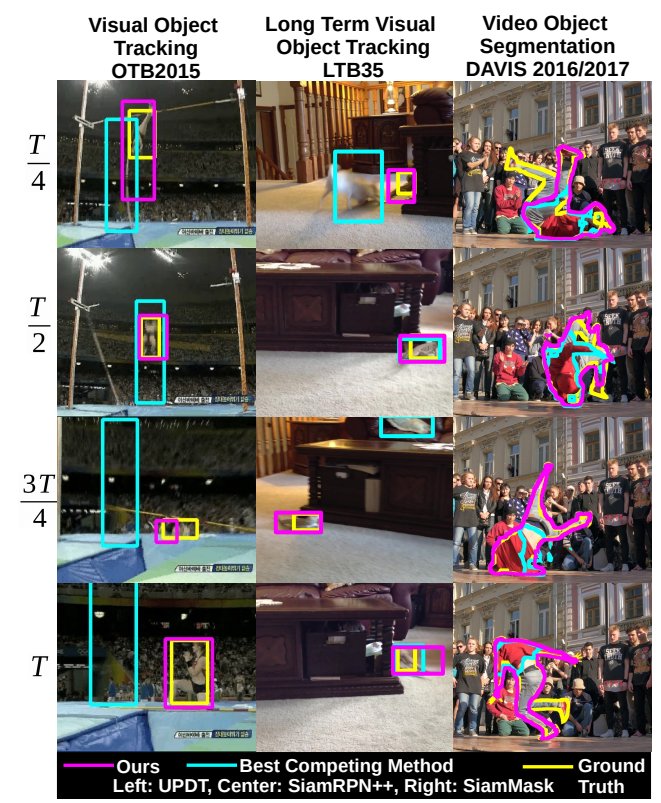

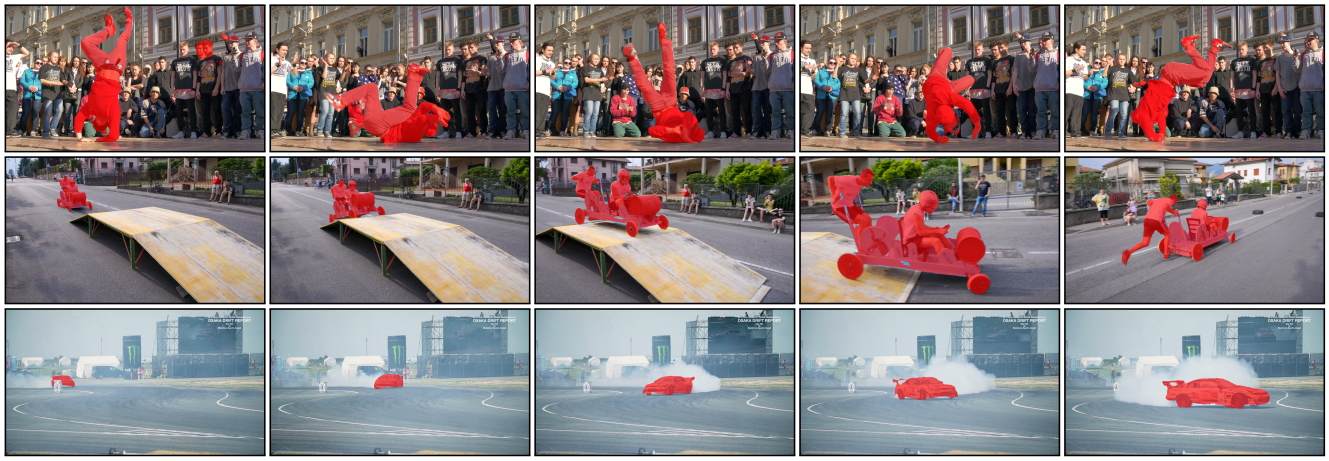

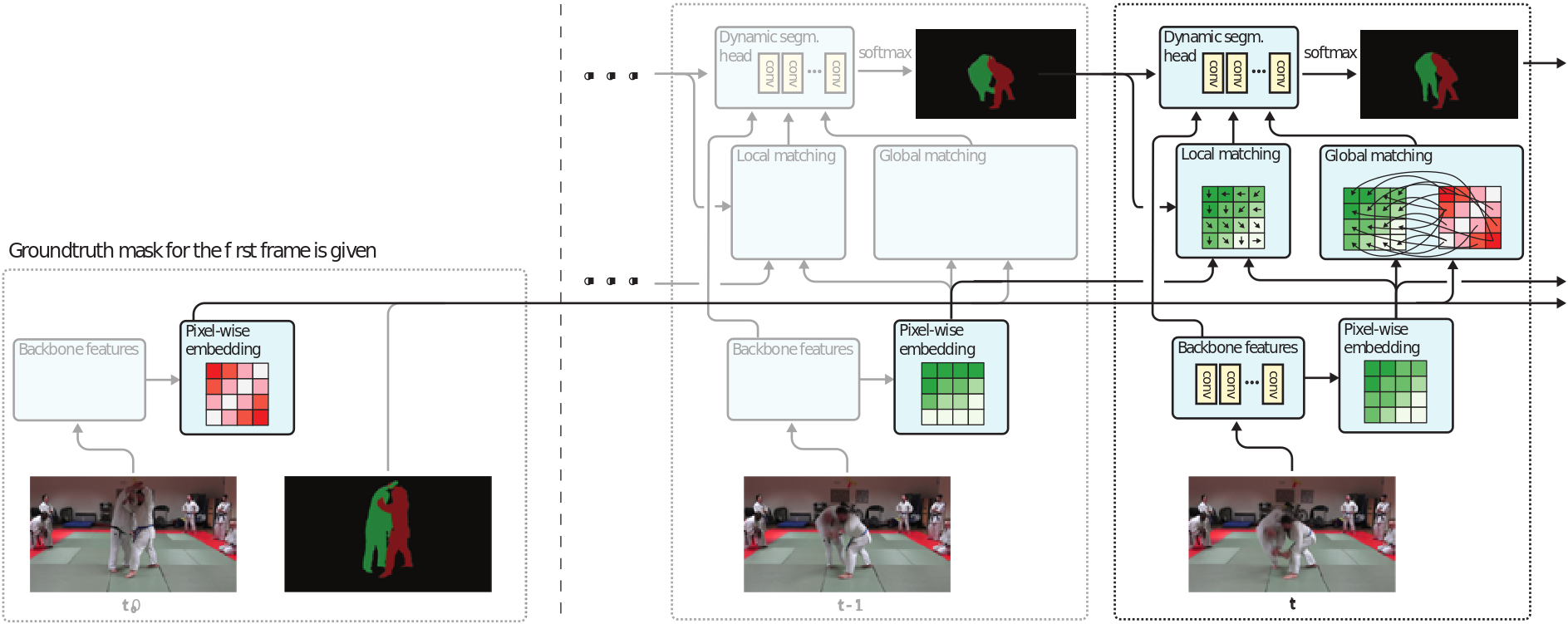

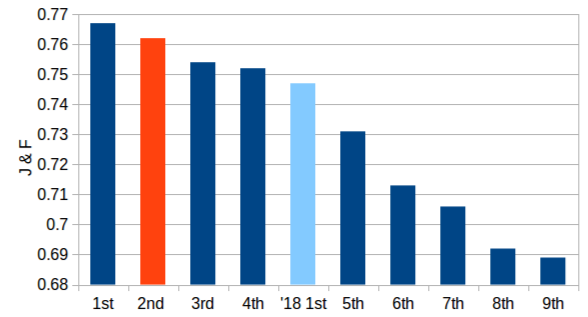

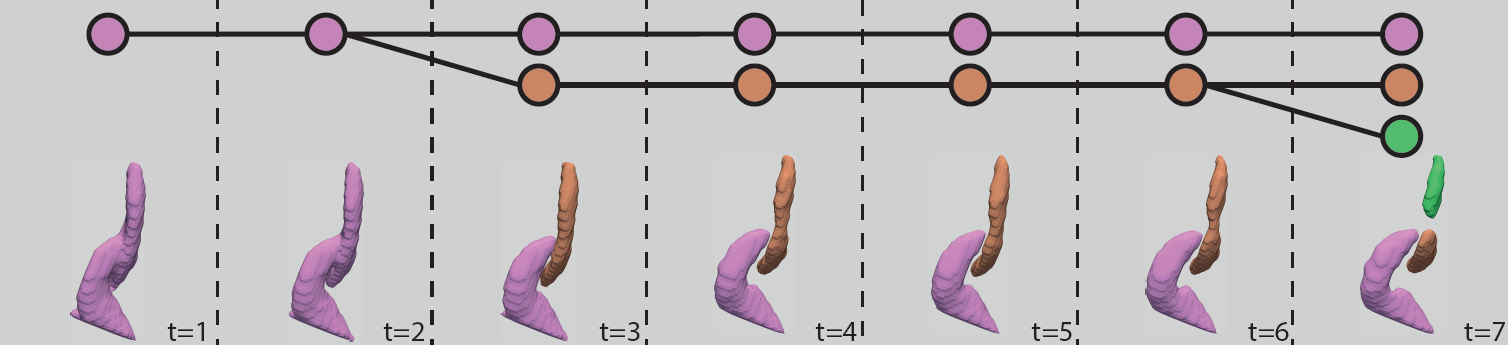

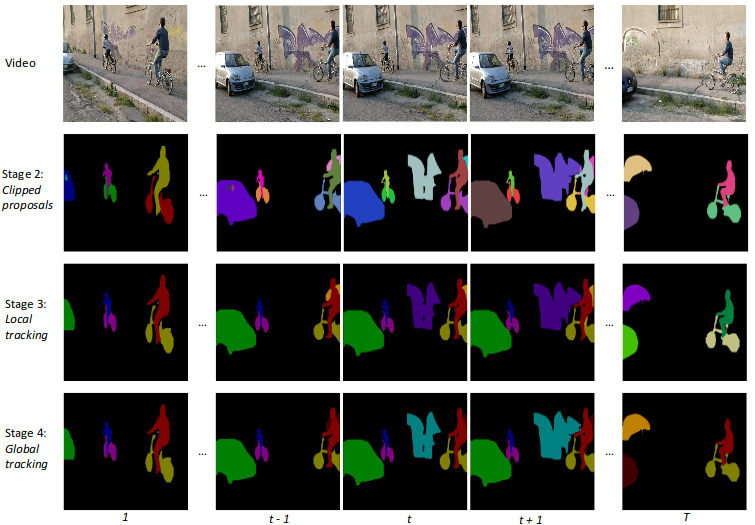

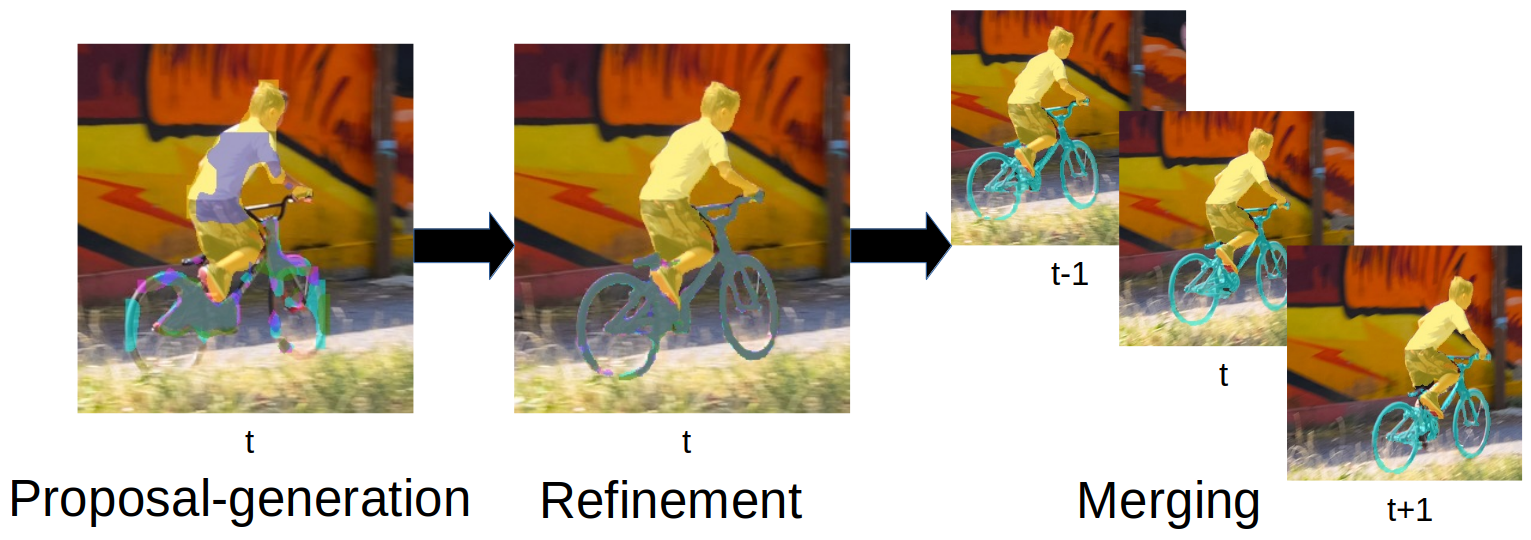

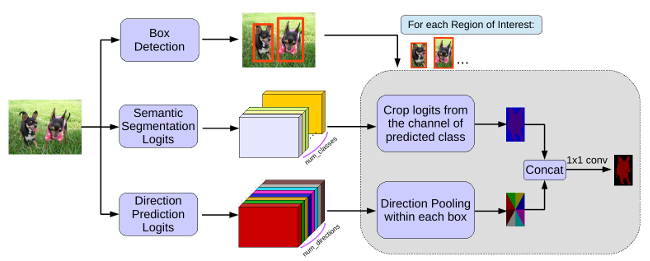

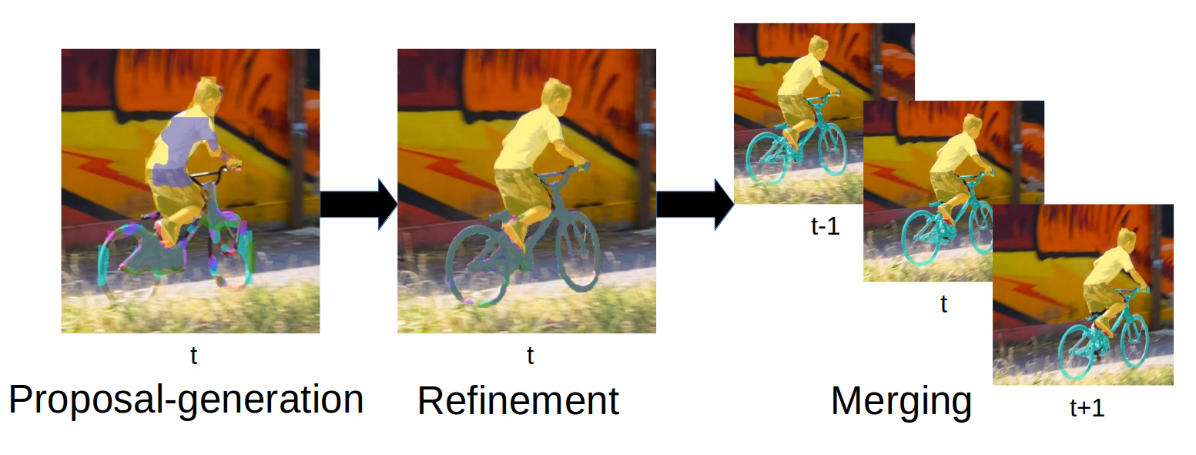

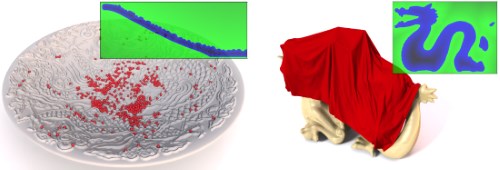

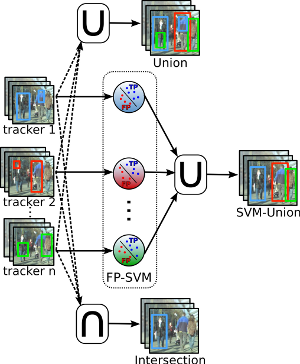

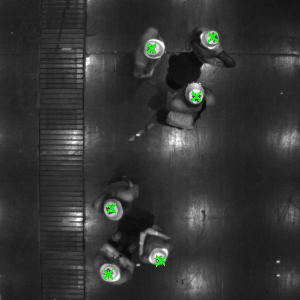

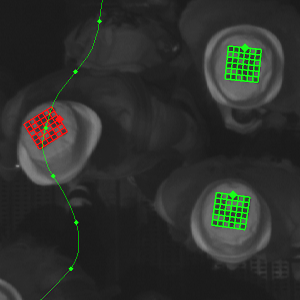

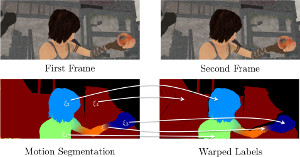

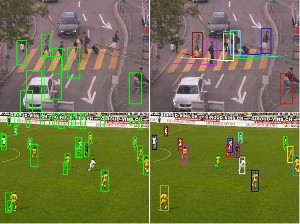

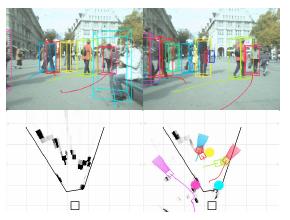

Point-VOS: Pointing Up Video Object Segmentation

Current state-of-the-art Video Object Segmentation (VOS) methods rely on dense per-object mask annotations both during training and testing. This requires time-consuming and costly video annotation mechanisms. We propose a novel Point-VOS task with a spatio-temporally sparse point-wise annotation scheme that substantially reduces the annotation effort. We apply our annotation scheme to two large-scale video datasets with text descriptions and annotate over 19M points across 133K objects in 32K videos. Based on our annotations, we propose a new Point-VOS benchmark, and a corresponding point-based training mechanism, which we use to establish strong baseline results. We show that existing VOS methods can easily be adapted to leverage our point annotations during training, and can achieve results close to the fully-supervised performance when trained on pseudo-masks generated from these points. In addition, we show that our data can be used to improve models that connect vision and language, by evaluating it on the Video Narrative Grounding (VNG) task. We will make our code and annotations available at https://pointvos.github.io.

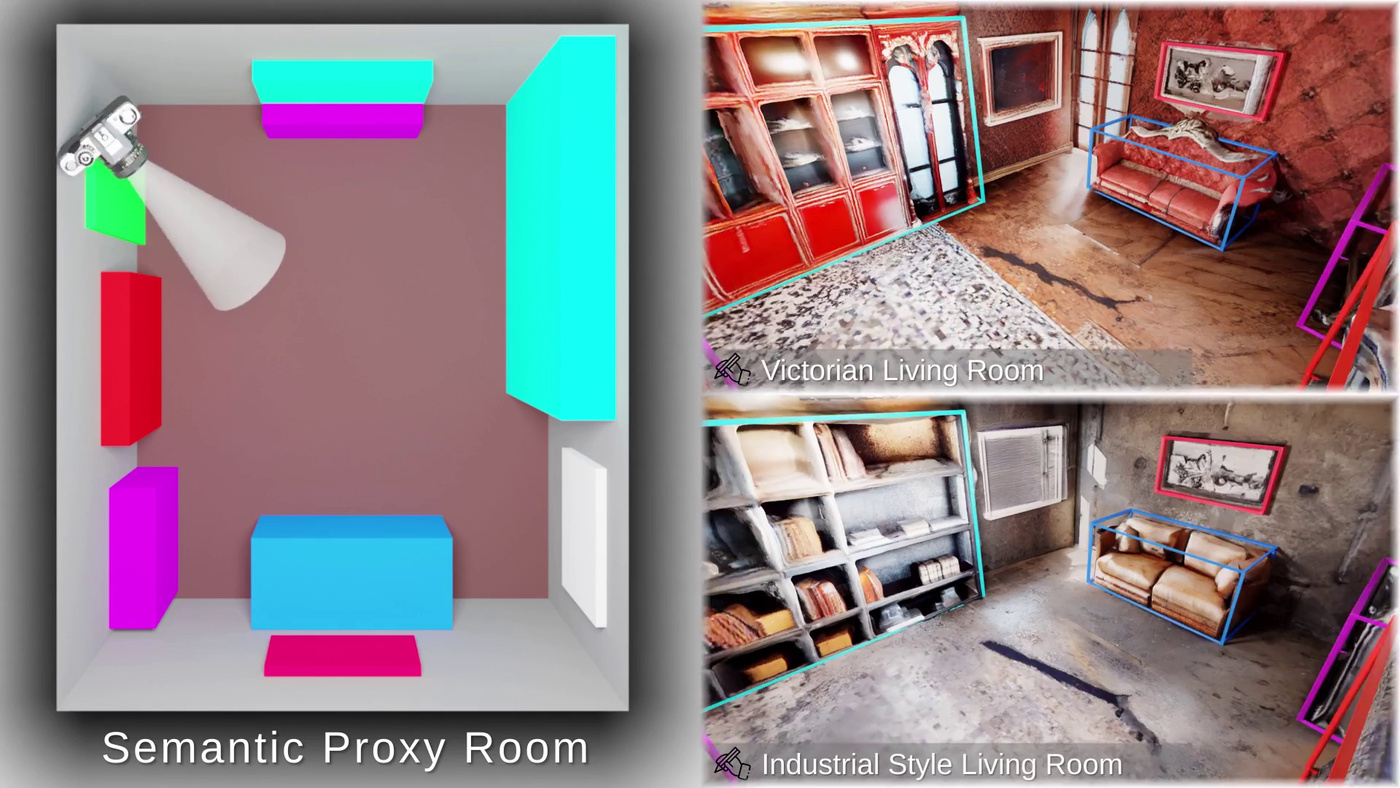

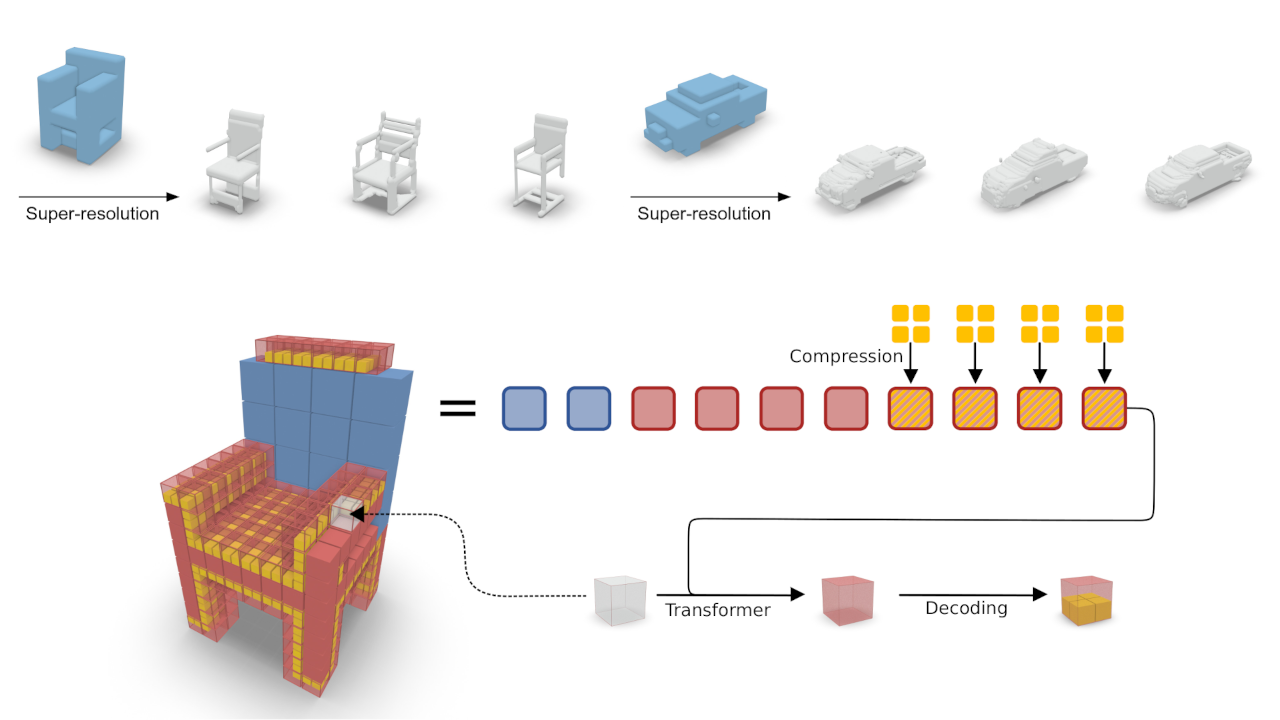

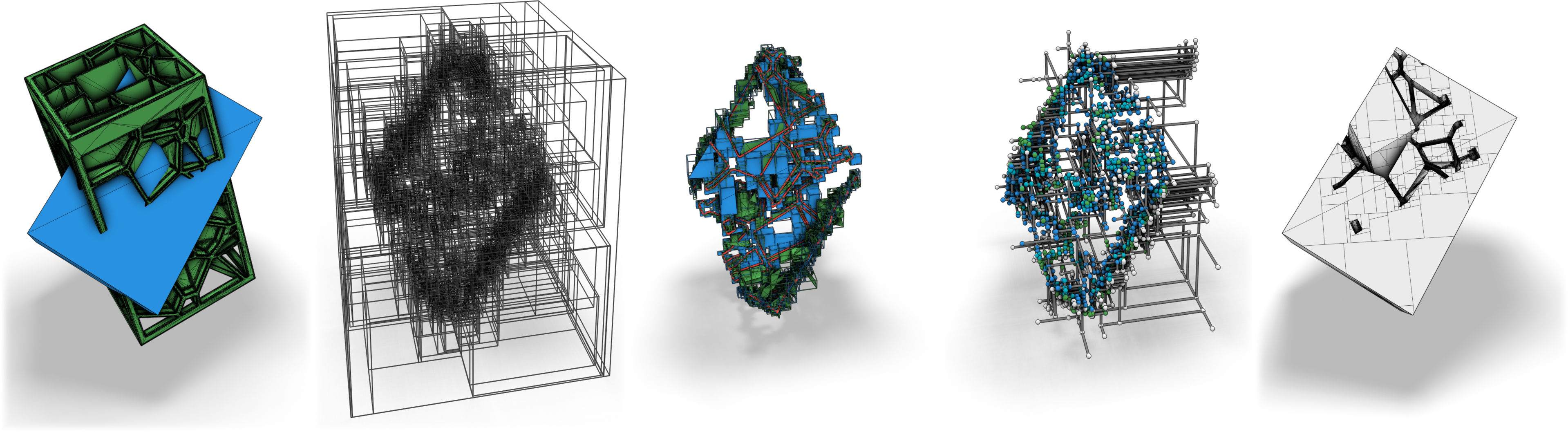

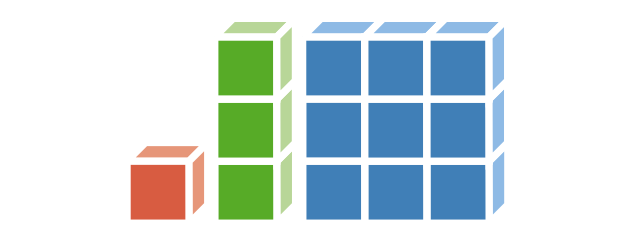

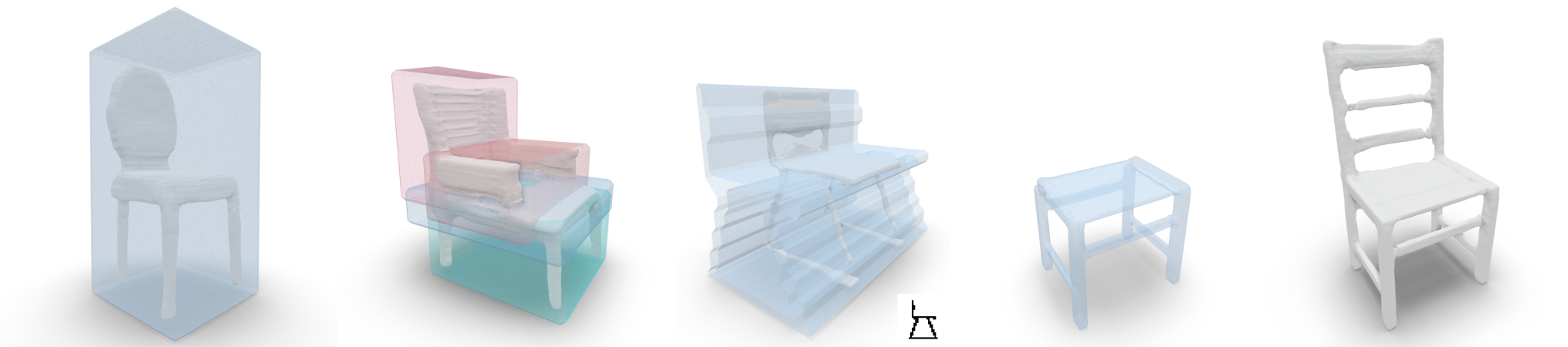

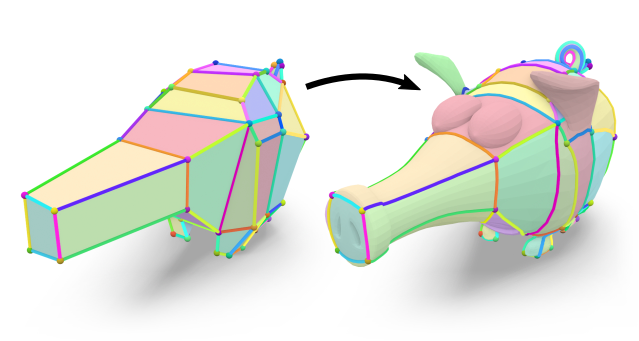

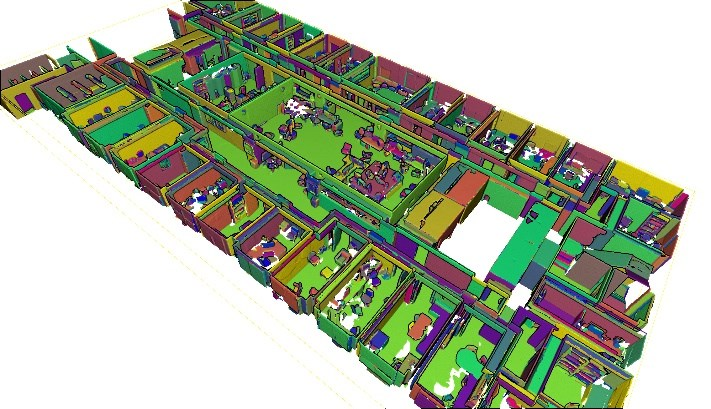

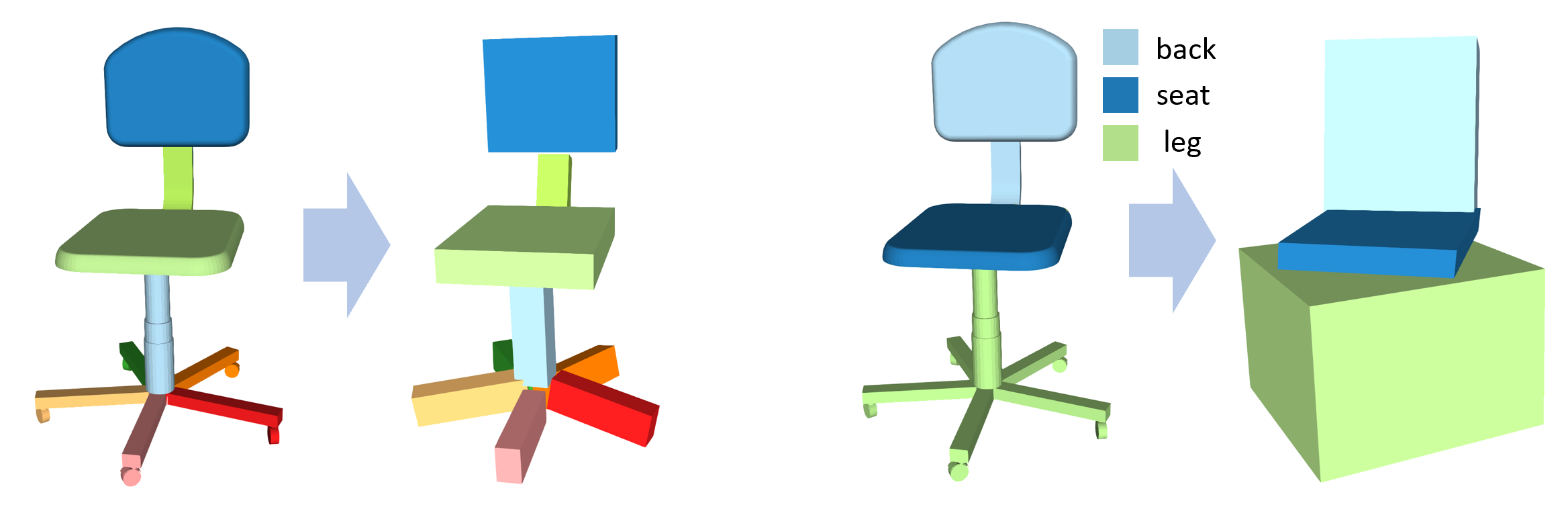

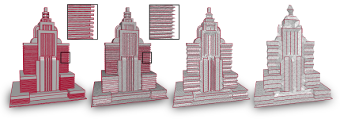

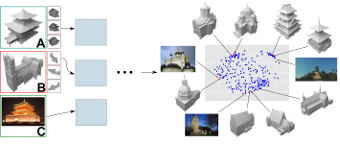

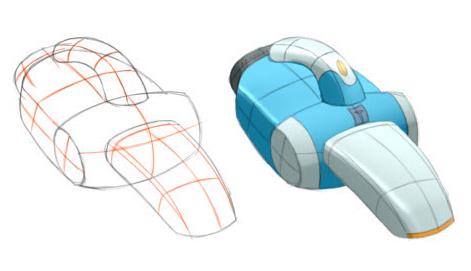

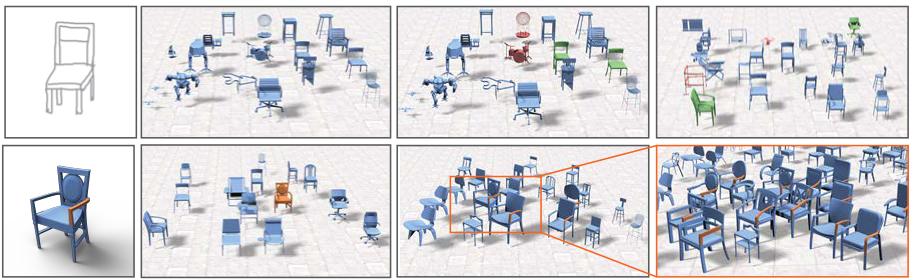

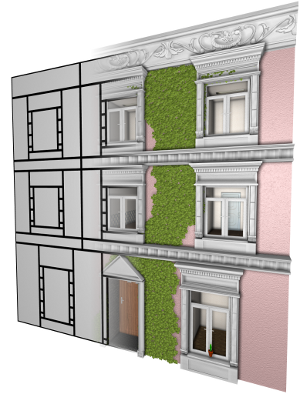

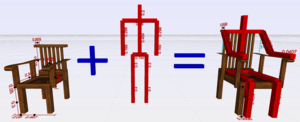

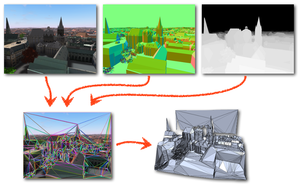

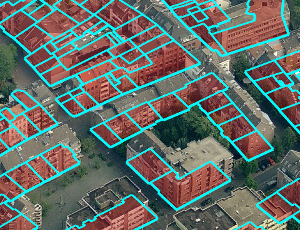

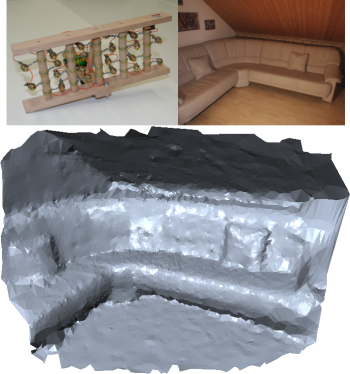

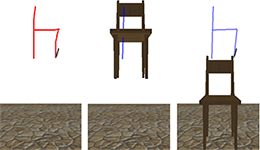

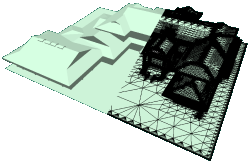

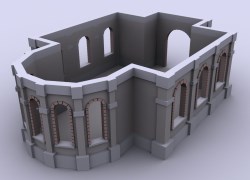

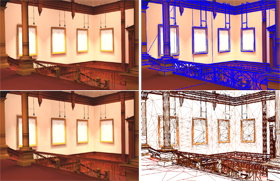

ControlRoom3D: Room Generation using Semantic Proxy Rooms

Manually creating 3D environments for AR/VR applications is a complex process requiring expert knowledge in 3D modeling software. Pioneering works facilitate this process by generating room meshes conditioned on textual style descriptions. Yet, many of these automatically generated 3D meshes do not adhere to typical room layouts, compromising their plausibility, e.g., by placing several beds in one bedroom. To address these challenges, we present ControlRoom3D, a novel method to generate high-quality room meshes. Central to our approach is a user-defined 3D semantic proxy room that outlines a rough room layout based on semantic bounding boxes and a textual description of the overall room style. Our key insight is that when rendered to 2D, this 3D representation provides valuable geometric and semantic information to control powerful 2D models to generate 3D consistent textures and geometry that aligns well with the proxy room. Backed up by an extensive study including quantitative metrics and qualitative user evaluations, our method generates diverse and globally plausible 3D room meshes, thus empowering users to design 3D rooms effortlessly without specialized knowledge.

@inproceedings{schult23controlroom3d,

author = {Schult, Jonas and Tsai, Sam and H\"ollein, Lukas and Wu, Bichen and Wang, Jialiang and Ma, Chih-Yao and Li, Kunpeng and Wang, Xiaofang and Wimbauer, Felix and He, Zijian and Zhang, Peizhao and Leibe, Bastian and Vajda, Peter and Hou, Ji},

title = {ControlRoom3D: Room Generation using Semantic Proxy Rooms},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2024},

}

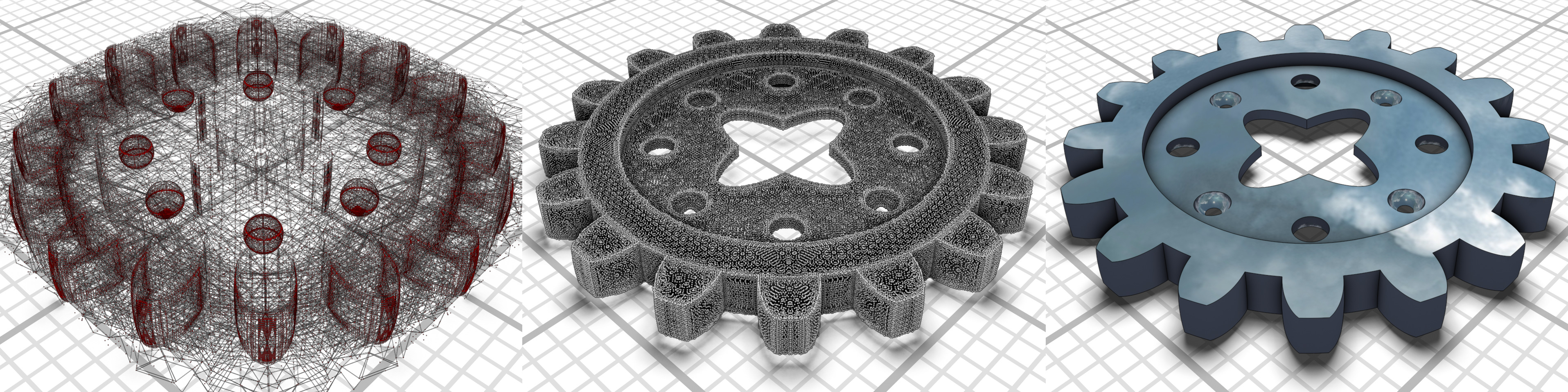

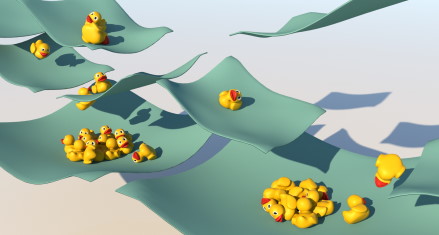

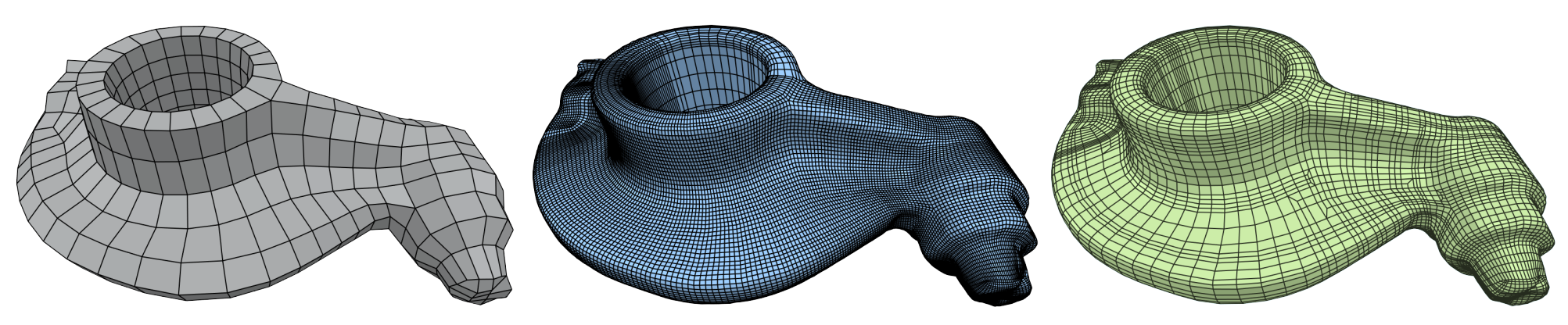

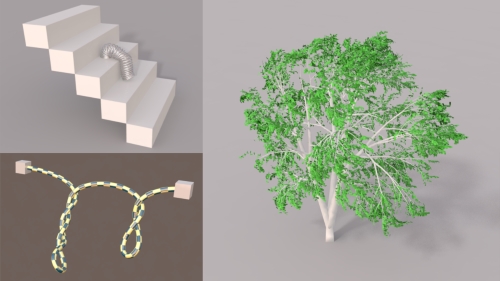

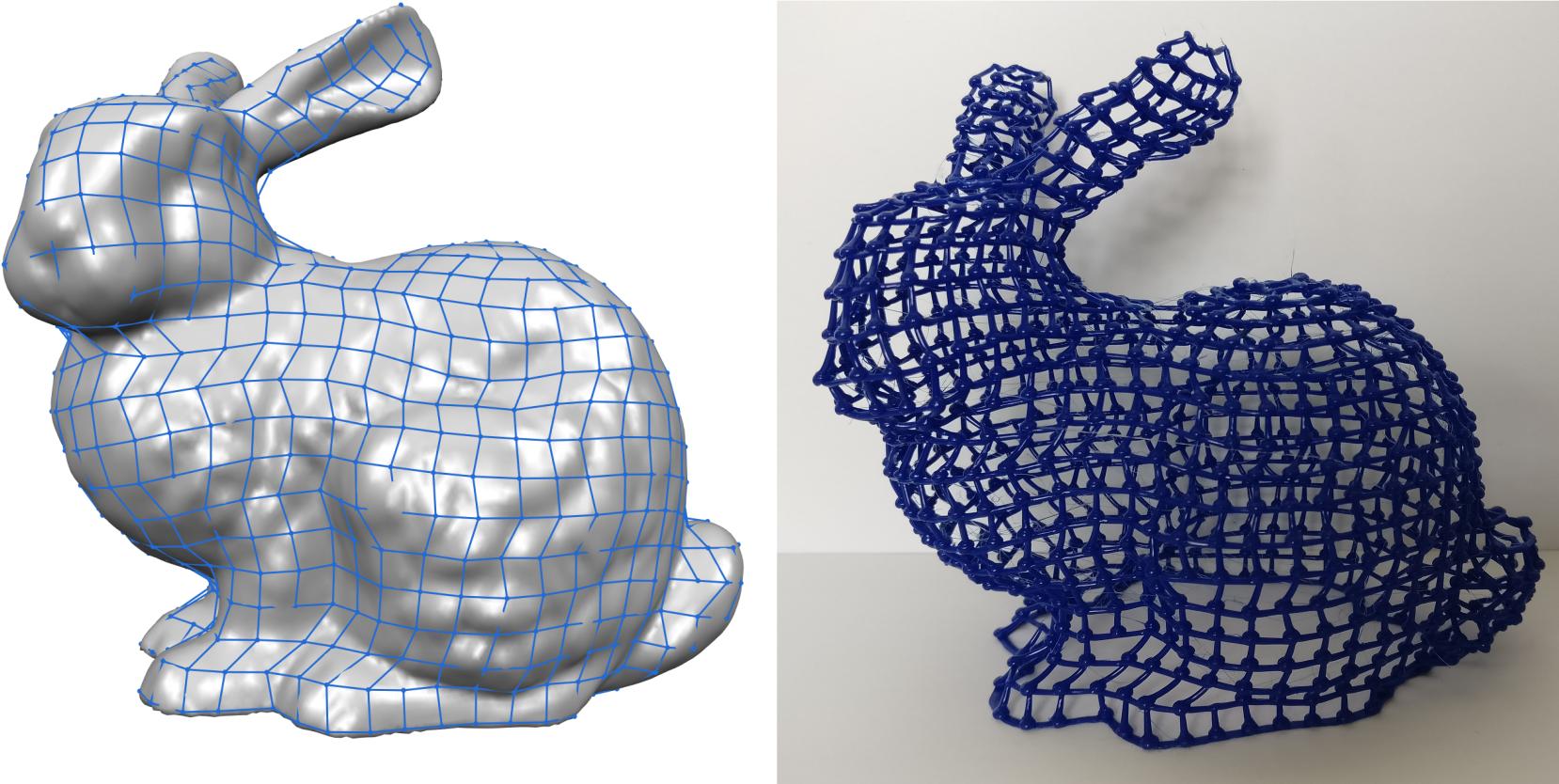

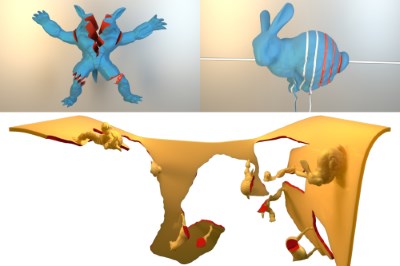

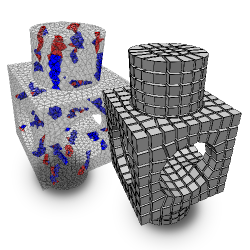

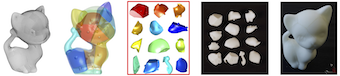

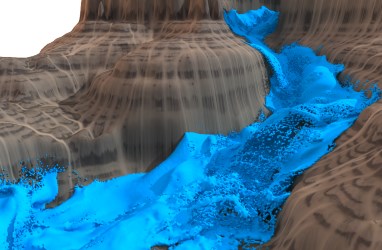

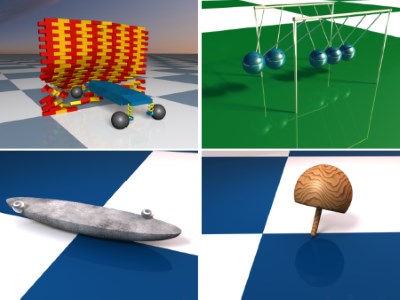

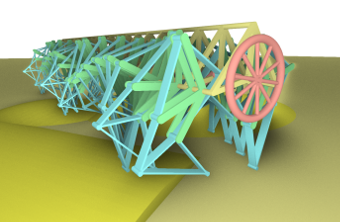

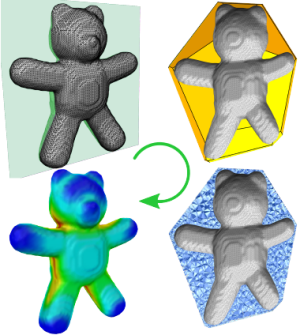

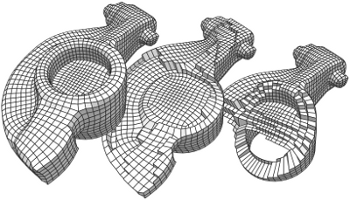

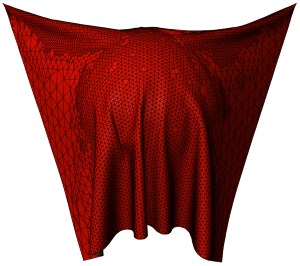

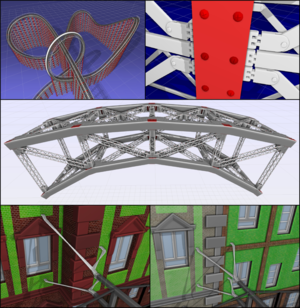

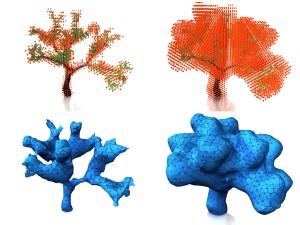

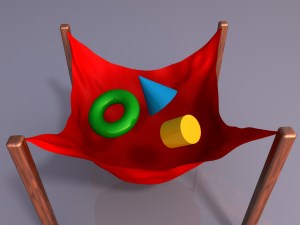

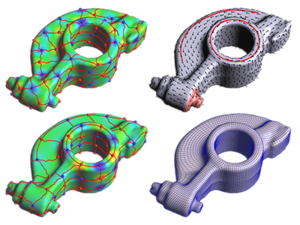

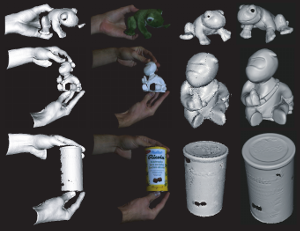

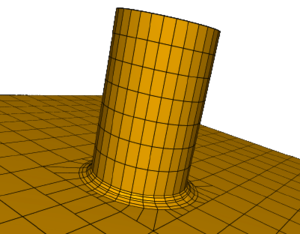

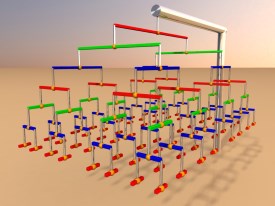

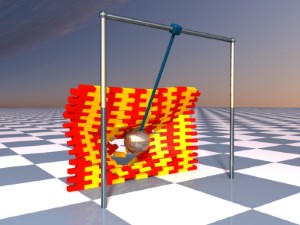

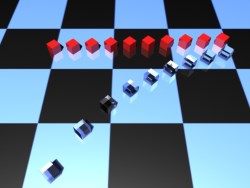

STARK: A Unified Framework for Strongly Coupled Simulation of Rigid and Deformable Bodies with Frictional Contact

The use of simulation in robotics is increasingly widespread for the purpose of testing, synthetic data generation and skill learning. A relevant aspect of simulation for a variety of robot applications is physics-based simulation of robot-object interactions. This involves the challenge of accurately modeling and implementing different mechanical systems such as rigid and deformable bodies as well as their interactions via constraints, contact or friction. Most state-of-the-art physics engines commonly used in robotics either cannot couple deformable and rigid bodies in the same framework, lack important systems such as cloth or shells, have stability issues in complex friction-dominated setups or cannot robustly prevent penetrations. In this paper, we propose a framework for strongly coupled simulation of rigid and deformable bodies with a focus on usability, stability, robustness and easy access to state-of-the-art deformation and frictional contact models. Our system uses the Finite Element Method (FEM) to model deformable solids, the Incremental Potential Contact (IPC) approach for frictional contact and a robust second order optimizer to ensure stable and penetration-free solutions to tight tolerances. It is a general-purpose framework that is not tied to a particular use case such as grasping or learning; it is written in C++ and comes with a Python interface. We demonstrate our system’s ability to reproduce complex real-world experiments where a mobile vacuum robot interacts with a towel on different floor types and towel geometries. Our system is able to reproduce 100% of the qualitative outcomes observed in the laboratory environment. The simulation pipeline, named Stark (the German word for strong, as in strong coupling), is made open-source.

@INPROCEEDINGS{FLL+24,

author={Fernández-Fernández, José Antonio and Lange, Ralph and Laible, Stefan and Arras, Kai O. and Bender, Jan},

booktitle={2024 IEEE International Conference on Robotics and Automation (ICRA)},

title={STARK: A Unified Framework for Strongly Coupled Simulation of Rigid and Deformable Bodies with Frictional Contact},

year={2024}

}

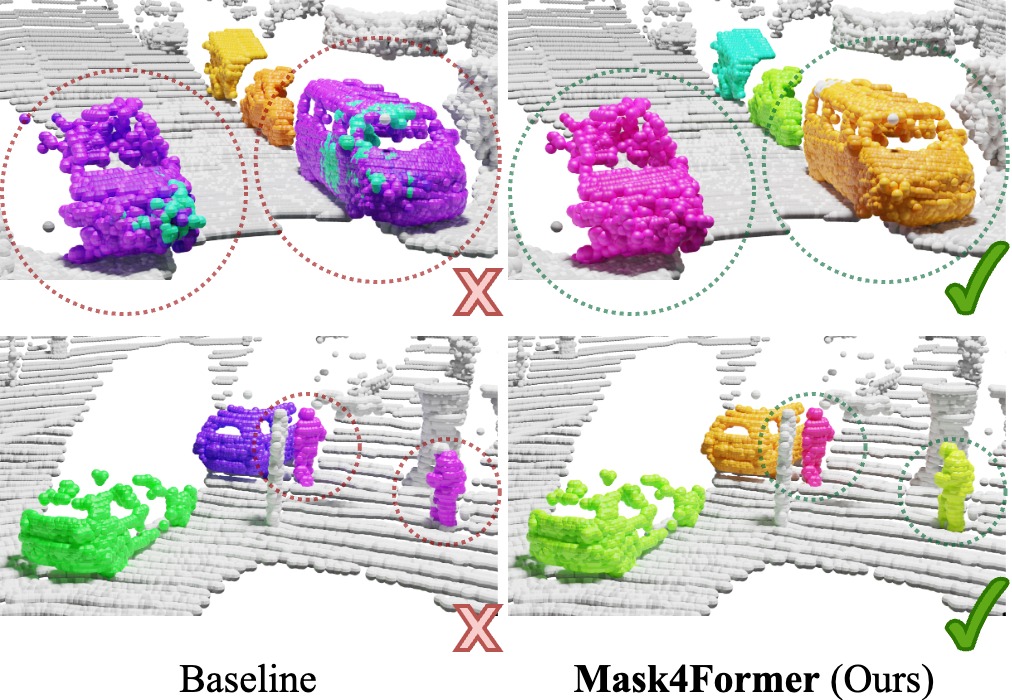

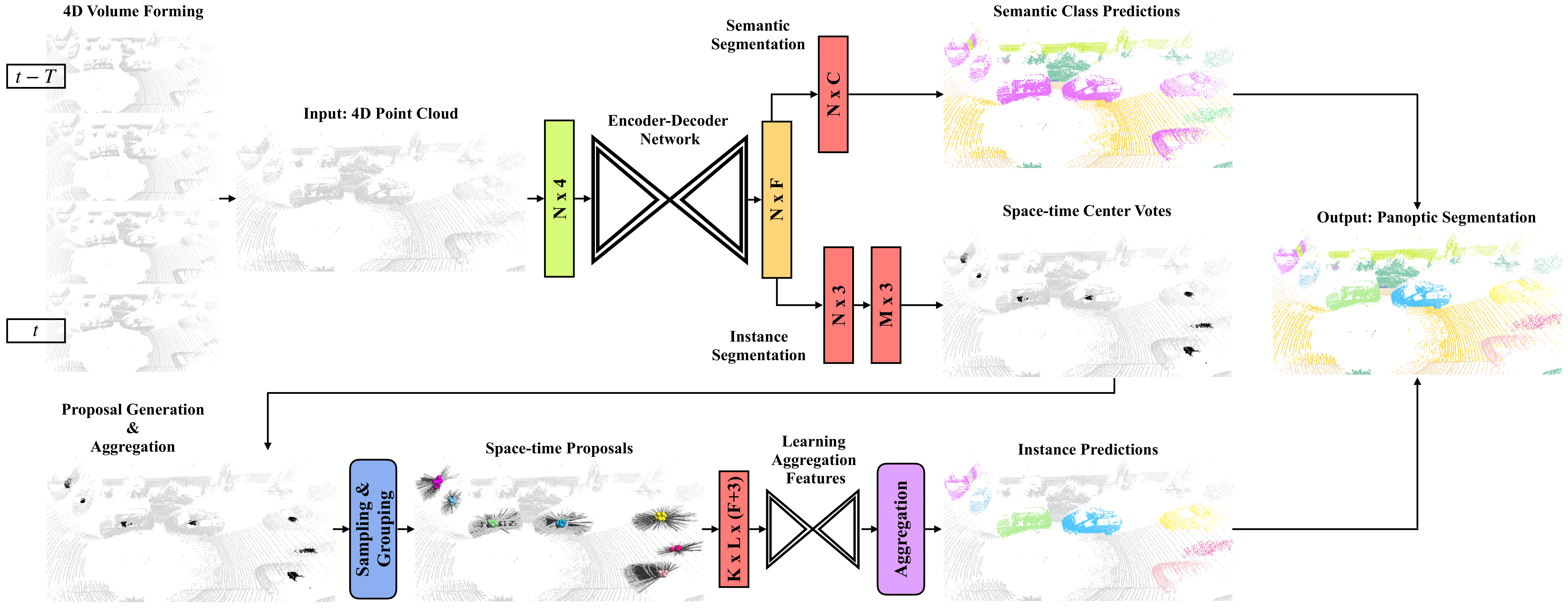

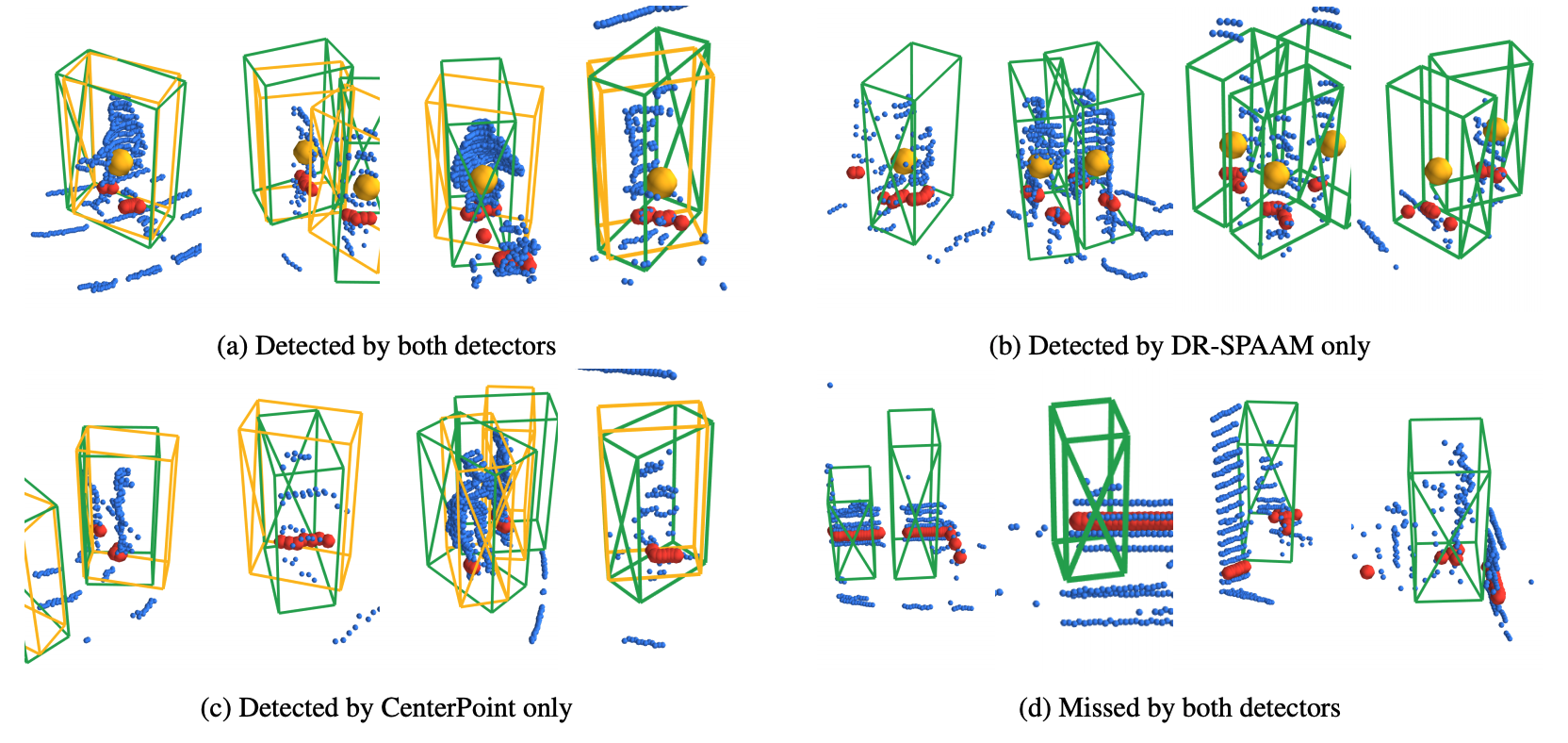

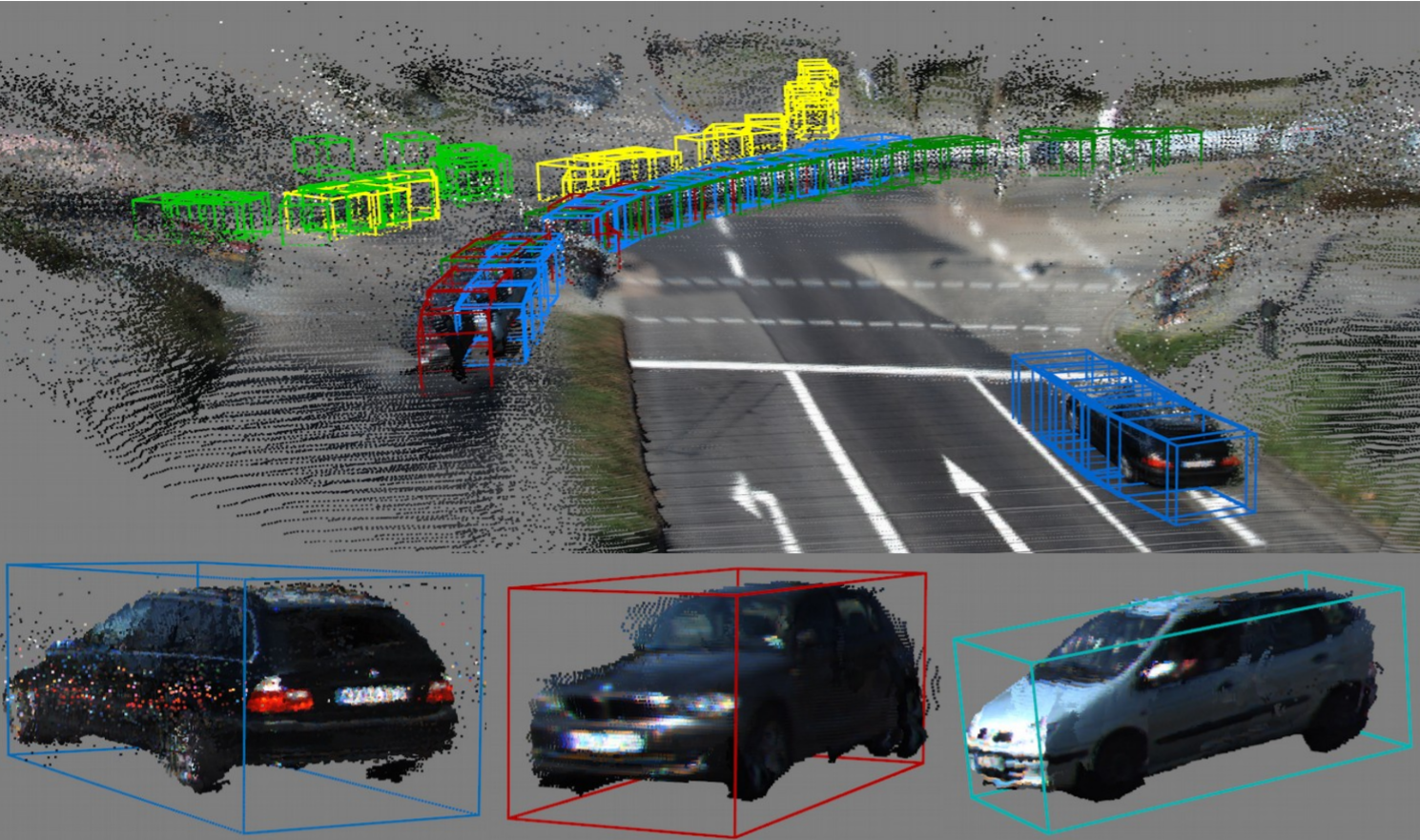

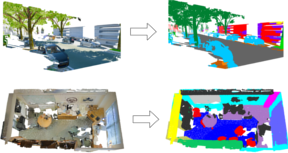

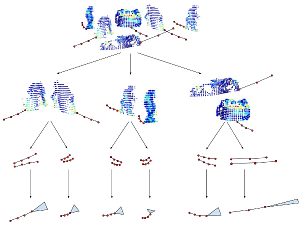

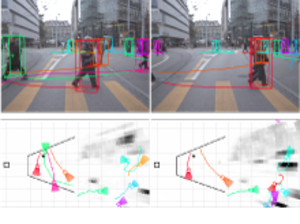

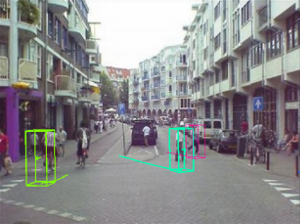

Mask4Former: Mask Transformer for 4D Panoptic Segmentation

Accurately perceiving and tracking instances over time is essential for the decision-making processes of autonomous agents interacting safely in dynamic environments. With this intention, we propose Mask4Former for the challenging task of 4D panoptic segmentation of LiDAR point clouds.

Mask4Former is the first transformer-based approach unifying semantic instance segmentation and tracking of sparse and irregular sequences of 3D point clouds into a single joint model. Our model directly predicts semantic instances and their temporal associations without relying on hand-crafted non-learned association strategies such as probabilistic clustering or voting-based center prediction. Instead, Mask4Former introduces spatio-temporal instance queries that encode the semantic and geometric properties of each semantic tracklet in the sequence.

In an in-depth study, we find that promoting spatially compact instance predictions is critical as spatio-temporal instance queries tend to merge multiple semantically similar instances, even if they are spatially distant. To this end, we regress 6-DOF bounding box parameters from spatio-temporal instance queries, which are used as an auxiliary task to foster spatially compact predictions.

Mask4Former achieves a new state-of-the-art on the SemanticKITTI test set with a score of 68.4 LSTQ.

@inproceedings{yilmaz24mask4former,

title = {{Mask4Former: Mask Transformer for 4D Panoptic Segmentation}},

author = {Yilmaz, Kadir and Schult, Jonas and Nekrasov, Alexey and Leibe, Bastian},

booktitle = {International Conference on Robotics and Automation (ICRA)},

year = {2024}

}

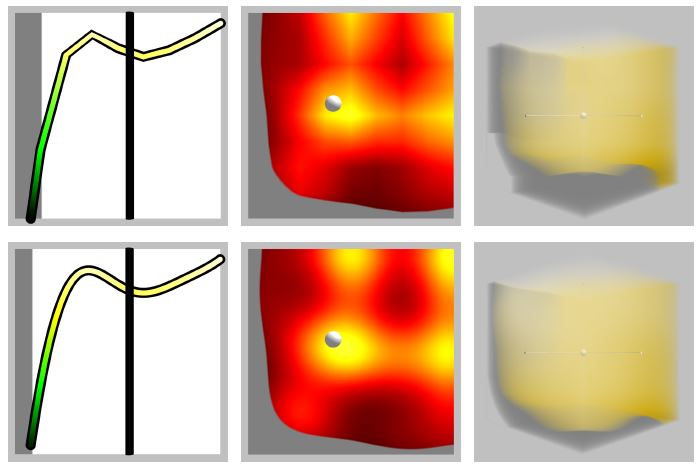

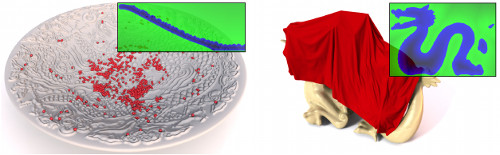

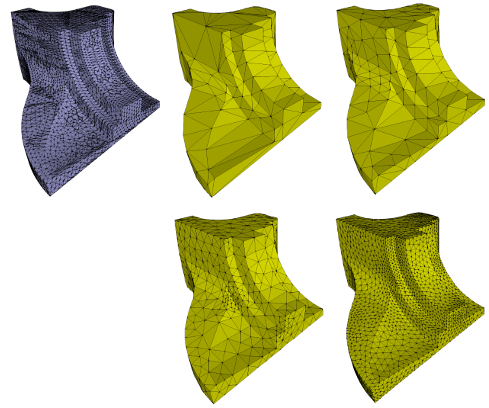

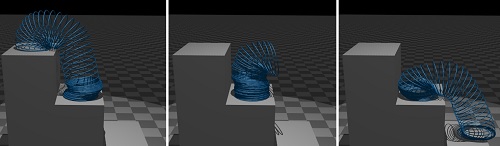

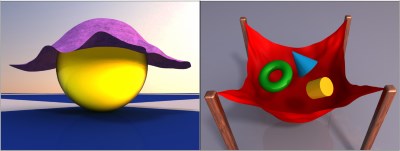

Implicit frictional dynamics with soft constraints

Dynamics simulation with frictional contacts is important for a wide range of applications, from cloth simulation to object manipulation. Recent methods using smoothed lagged friction forces have enabled robust and differentiable simulation of elastodynamics with friction. However, the resulting frictional behavior can be inaccurate and may not converge to analytic solutions. Here we evaluate the accuracy of lagged friction models in comparison with implicit frictional contact systems. We show that major inaccuracies near the stick-slip threshold in such systems are caused by lagging of friction forces rather than by smoothing the Coulomb friction curve. Furthermore, we demonstrate how systems involving implicit or lagged friction can be correctly used with higher-order time integration and highlight limitations in earlier attempts. We demonstrate how to exploit forward-mode automatic differentiation to simplify and, in some cases, improve the performance of the inexact Newton method. Finally, we show that other complex phenomena can also be simulated effectively while maintaining smoothness of the entire system. We extend our method to exhibit stick-slip frictional behavior and preserve volume on compressible and nearly-incompressible media using soft constraints.
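To make the notion of a smoothed Coulomb friction curve concrete, here is a minimal sketch. It is not taken from the paper: the quadratic ramp is an assumption, chosen as the kind of smoothing used in IPC-style friction models. The names `smoothed_friction_scale`, `friction_force`, and `eps` are hypothetical. In a lagged scheme, `normal_force` would be a value from a previous step or iterate. An implicit scheme would treat it as part of the unknowns being solved for.

```python
import math


def smoothed_friction_scale(speed: float, eps: float) -> float:
    """Smooth ramp from 0 to 1 that replaces the jump of exact Coulomb friction.

    Assumed form: 2y - y^2 with y = speed / eps below the threshold eps,
    and 1 at or above it. The paper may use a different smoothing function.
    """
    if eps <= 0.0:
        raise ValueError("eps must be positive")
    if speed >= eps:
        return 1.0
    y = speed / eps
    return 2.0 * y - y * y


def friction_force(tangential_velocity, normal_force, mu, eps):
    """Smoothed friction force (2D tuple) opposing the tangential velocity."""
    vx, vy = tangential_velocity
    speed = math.hypot(vx, vy)
    if speed == 0.0:
        # No sliding direction is defined at rest, so the smoothed force vanishes.
        return (0.0, 0.0)
    magnitude = mu * normal_force * smoothed_friction_scale(speed, eps)
    return (-magnitude * vx / speed, -magnitude * vy / speed)
```

For sliding speeds well above `eps`, the force magnitude reduces to the familiar `mu * normal_force`. Below `eps` it falls smoothly to zero, which keeps the system differentiable.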

@ARTICLE{LLA*24,

author={Larionov, Egor and Longva, Andreas and Ascher, Uri M. and Bender, Jan and Pai, Dinesh K.},

title={Implicit frictional dynamics with soft constraints},

journal={IEEE Transactions on Visualization and Computer Graphics},

year={2024},

volume={},

number={},

pages={1-12},

doi={10.1109/TVCG.2024.3437417}

}

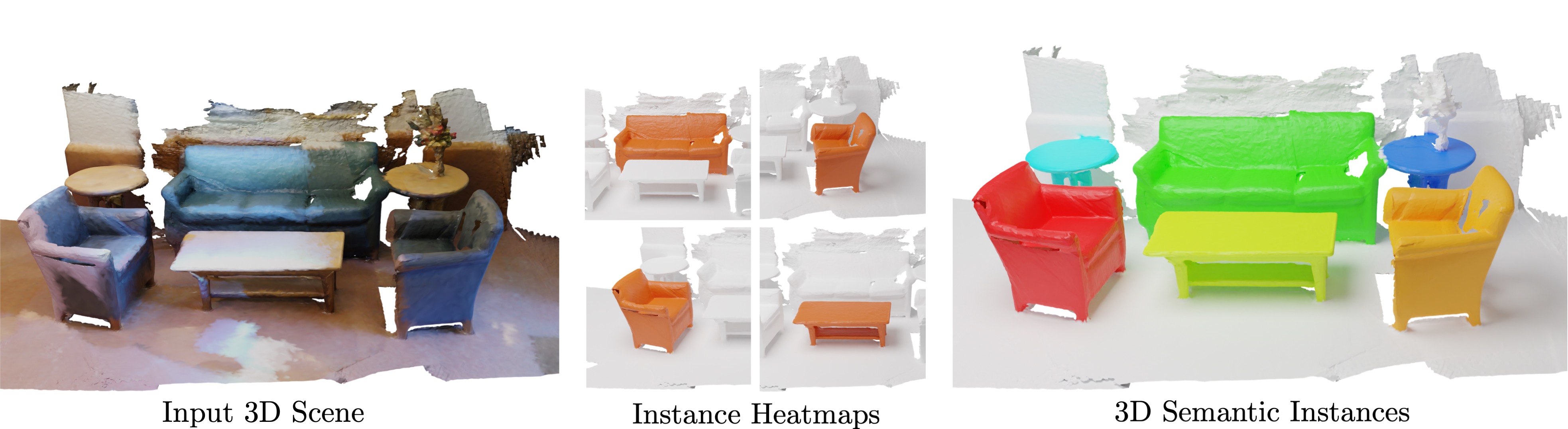

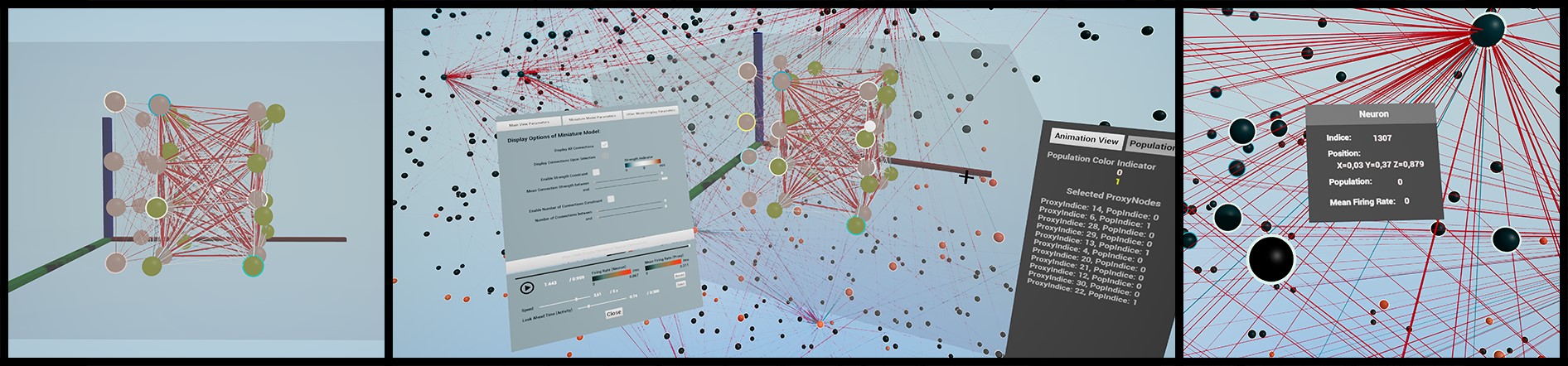

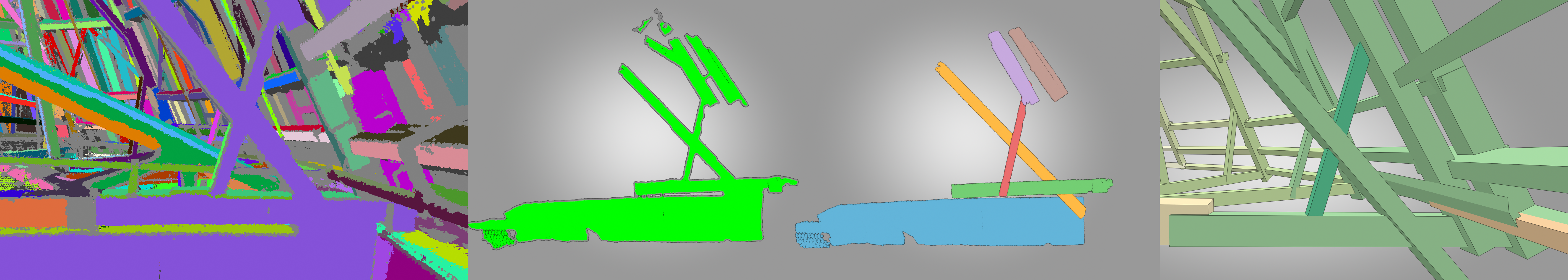

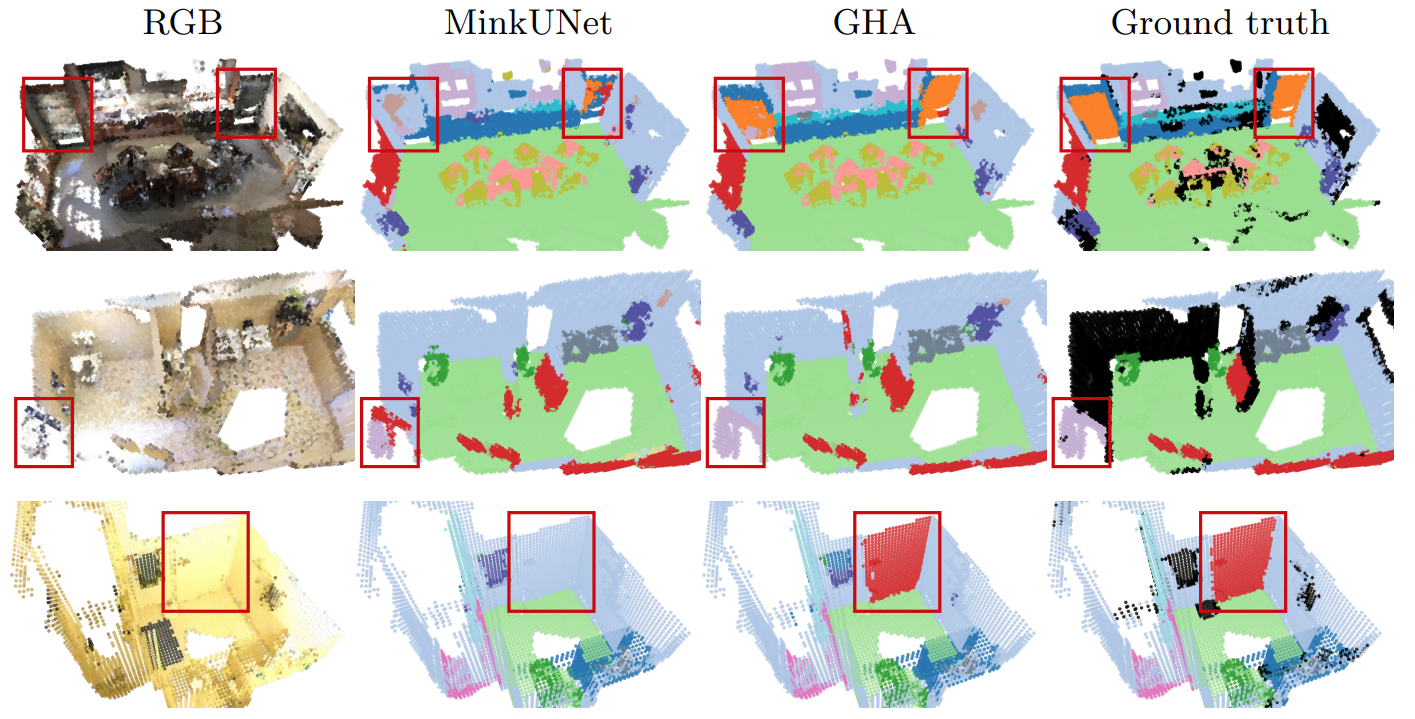

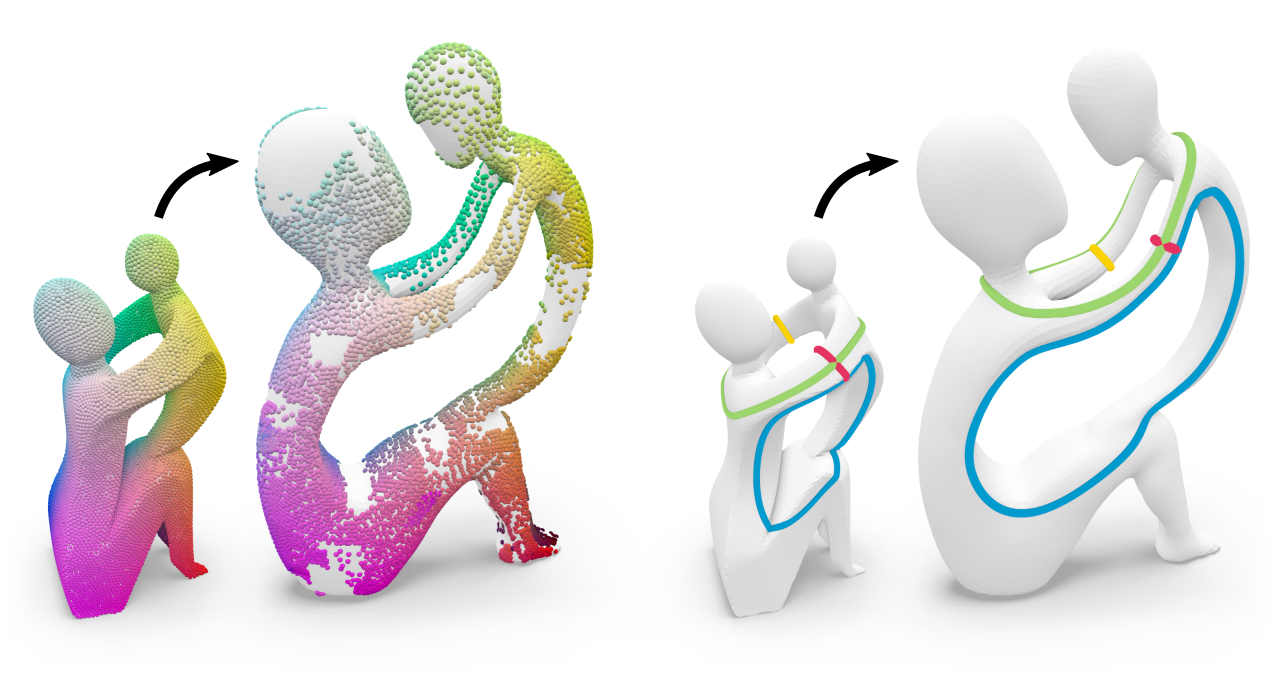

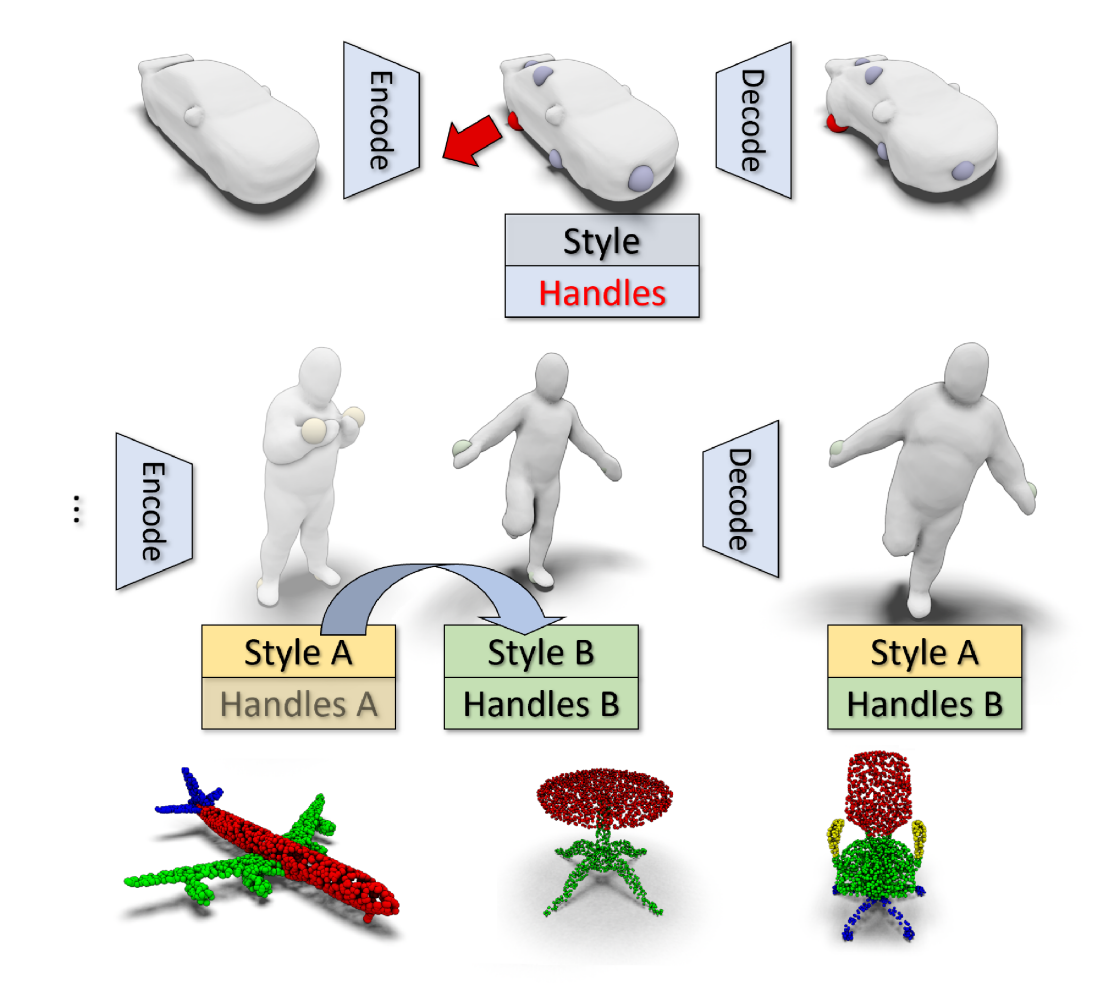

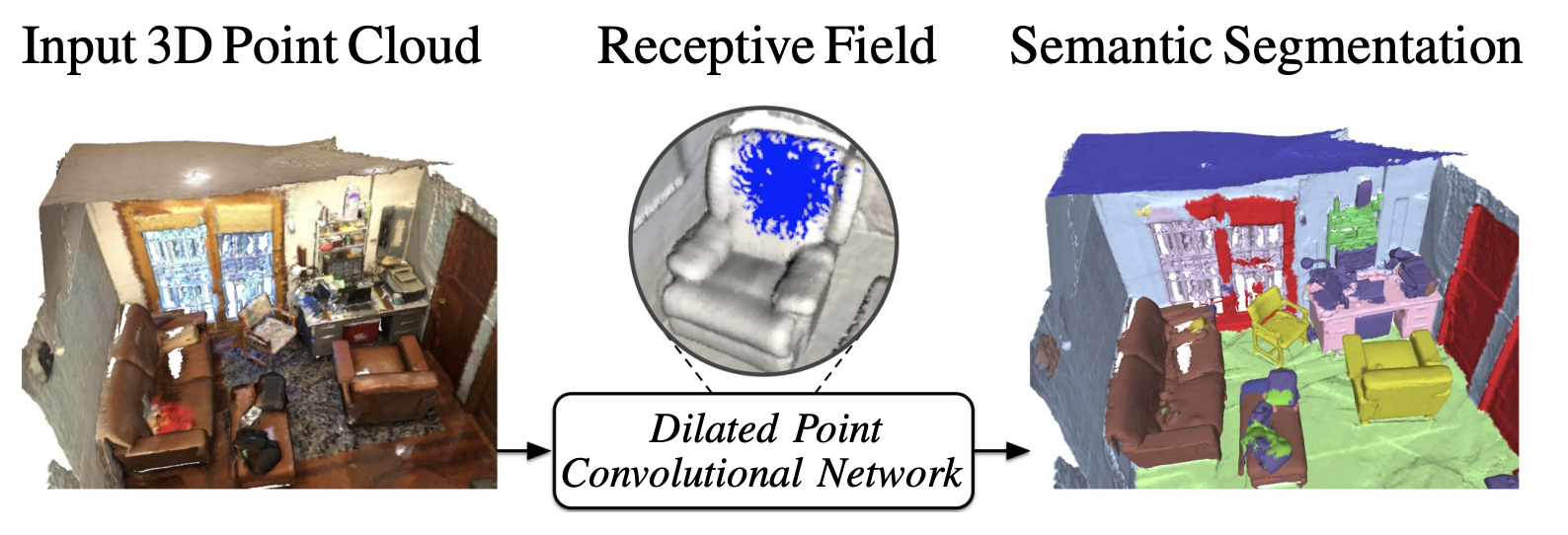

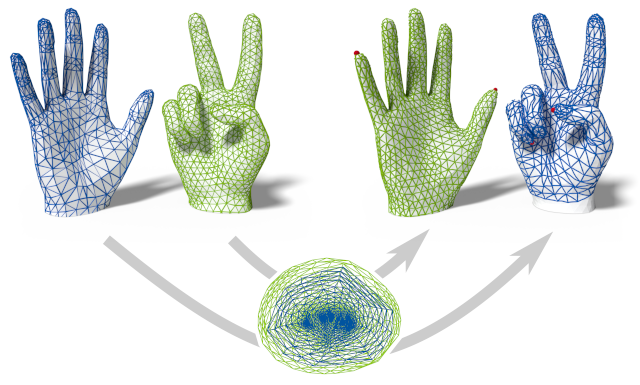

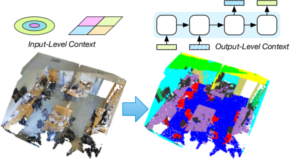

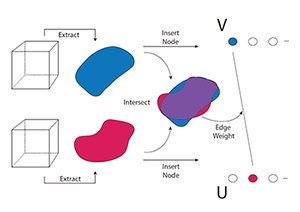

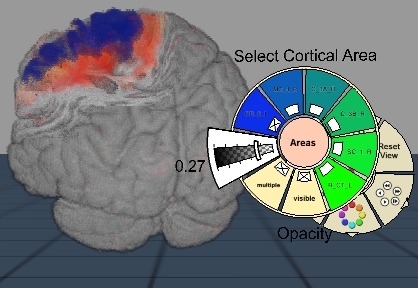

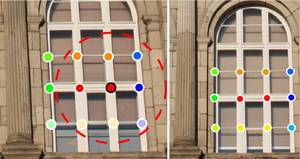

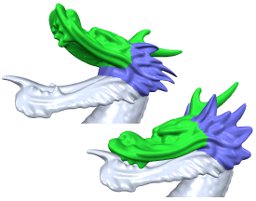

AGILE3D: Attention Guided Interactive Multi-object 3D Segmentation

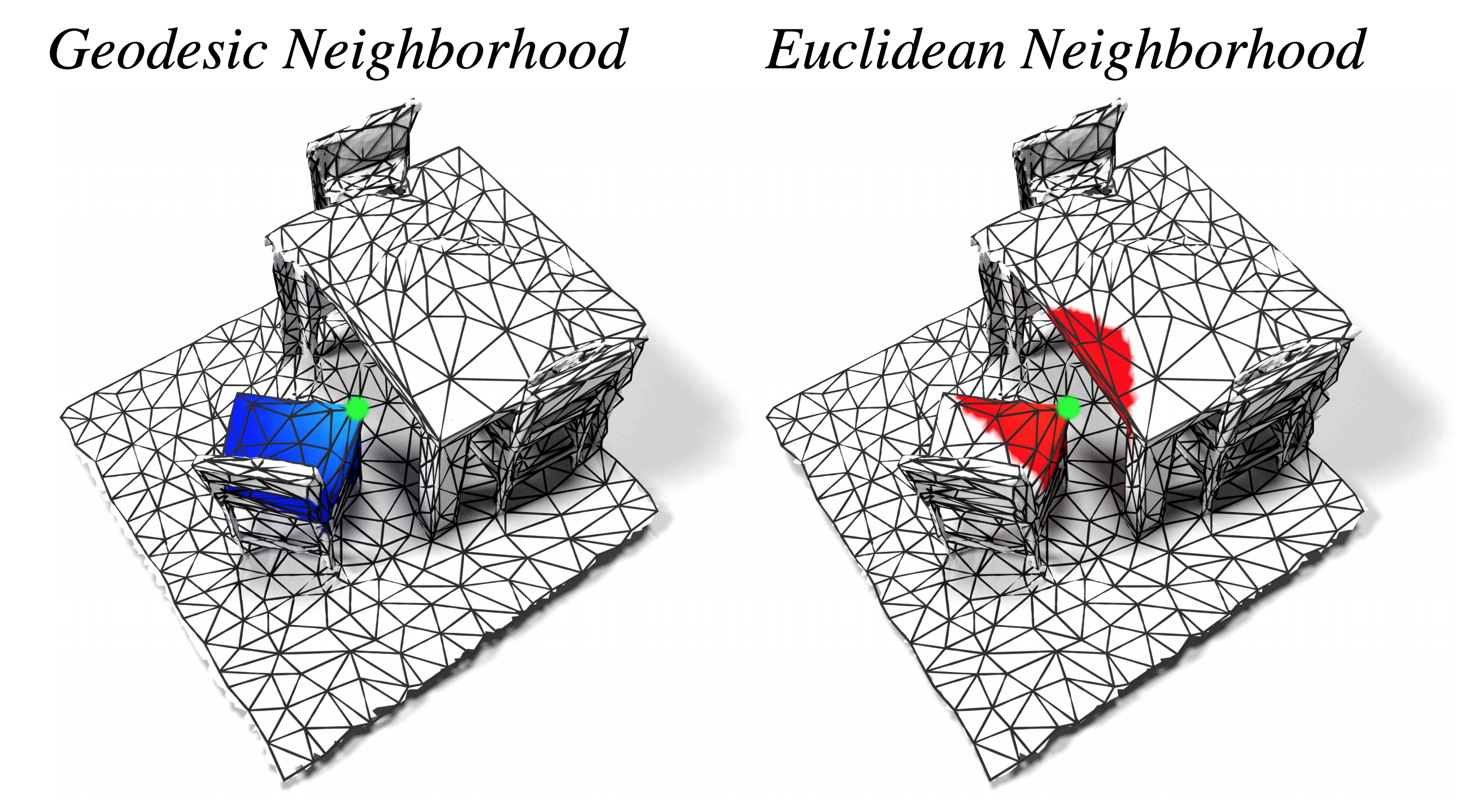

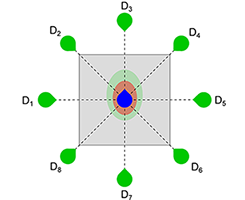

During interactive segmentation, a model and a user work together to delineate objects of interest in a 3D point cloud. In an iterative process, the model assigns each data point to an object (or the background), while the user corrects errors in the resulting segmentation and feeds them back into the model. The current best practice formulates the problem as binary classification and segments objects one at a time. The model expects the user to provide positive clicks to indicate regions wrongly assigned to the background and negative clicks on regions wrongly assigned to the object. Sequentially visiting objects is wasteful since it disregards synergies between objects: a positive click for a given object can, by definition, serve as a negative click for nearby objects. Moreover, a direct competition between adjacent objects can speed up the identification of their common boundary. We introduce AGILE3D, an efficient, attention-based model that (1) supports simultaneous segmentation of multiple 3D objects, (2) yields more accurate segmentation masks with fewer user clicks, and (3) offers faster inference. Our core idea is to encode user clicks as spatial-temporal queries and enable explicit interactions between click queries as well as between them and the 3D scene through a click attention module. Every time new clicks are added, we only need to run a lightweight decoder that produces updated segmentation masks. In experiments with four different 3D point cloud datasets, AGILE3D sets a new state-of-the-art. Moreover, we also verify its practicality in real-world setups with real user studies.
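The observation that a positive click for one object can double as a negative click for others can be sketched as follows. This is an illustration only, not the AGILE3D implementation. The function name, the click representation as `(point_index, object_id)` pairs, and the simplification that a click counts as negative for all other objects (not only nearby ones) are assumptions.

```python
BACKGROUND = 0  # hypothetical id for clicks placed on the background


def per_object_clicks(clicks, object_ids):
    """Derive a binary (positive/negative) click view for every object.

    clicks: list of (point_index, object_id) pairs from a multi-object session.
    object_ids: ids of the objects being segmented (BACKGROUND excluded).
    """
    views = {obj: {"positive": [], "negative": []} for obj in object_ids}
    for point_index, clicked_id in clicks:
        for obj in object_ids:
            # A click on one object is positive for that object and
            # implicitly negative for every other object.
            key = "positive" if obj == clicked_id else "negative"
            views[obj][key].append(point_index)
    return views


views = per_object_clicks([(17, 1), (42, 2), (99, BACKGROUND)], [1, 2])
```

Here three clicks give each of the two objects one positive and two negative clicks. A one-object-at-a-time workflow would need separate clicks to convey the same information.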

@inproceedings{yue2023agile3d,

title = {{AGILE3D: Attention Guided Interactive Multi-object 3D Segmentation}},

author = {Yue, Yuanwen and Mahadevan, Sabarinath and Schult, Jonas and Engelmann, Francis and Leibe, Bastian and Schindler, Konrad and Kontogianni, Theodora},

booktitle = {International Conference on Learning Representations (ICLR)},

year = {2024}

}

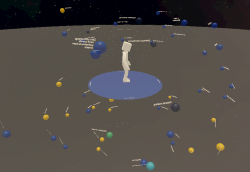

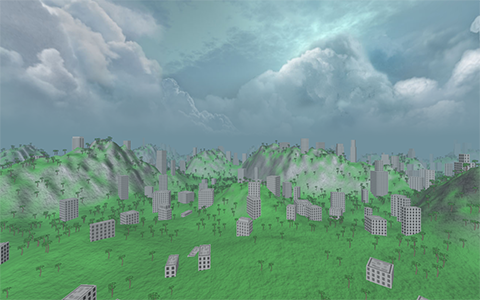

Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents

Effective navigation and interaction within immersive virtual environments rely on thorough scene exploration. Therefore, wayfinding is essential, assisting users in comprehending their surroundings, planning routes, and making informed decisions. Based on real-life observations, wayfinding is, thereby, not only a cognitive process but also a social activity profoundly influenced by the presence and behaviors of others. In virtual environments, these 'others' are virtual agents (VAs), defined as anthropomorphic computer-controlled characters, who enliven the environment and can serve as background characters or direct interaction partners. However, little research has been done to explore how to efficiently use VAs as social wayfinding support. In this paper, we aim to assess and contrast user experience, user comfort, and the acquisition of scene knowledge through a between-subjects study involving n = 60 participants across three distinct wayfinding conditions in one slightly populated urban environment: (i) unsupported wayfinding, (ii) strong social wayfinding using a virtual supporter who incorporates guiding and accompanying elements while directly impacting the participants' wayfinding decisions, and (iii) weak social wayfinding using flows of VAs that subtly influence the participants' wayfinding decisions by their locomotion behavior. Our work is the first to compare the impact of VAs' behavior in virtual reality on users' scene exploration, including spatial awareness, scene comprehension, and comfort. The results show the general utility of social wayfinding support, while underscoring the superiority of the strong type. Nevertheless, further exploration of weak social wayfinding as a promising technique is needed. Thus, our work contributes to the enhancement of VAs as advanced user interfaces, increasing user acceptance and usability.

@article{Boensch2024,

title={Wayfinding in Immersive Virtual Environments as Social Activity Supported by Virtual Agents},

author={B{\"o}nsch, Andrea and Ehret, Jonathan and Rupp, Daniel and Kuhlen, Torsten W.},

journal={Frontiers in Virtual Reality},

volume={4},

year={2024},

pages={1334795},

publisher={Frontiers},

doi={10.3389/frvir.2023.1334795}

}

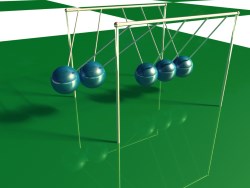

Strongly Coupled Simulation of Magnetic Rigid Bodies

We present a strongly coupled method for the robust simulation of linear magnetic rigid bodies. Our approach describes the magnetic effects as part of an incremental potential function. This potential is inserted into the reformulation of the equations of motion for rigid bodies as an optimization problem. For handling collision and friction, we lean on the Incremental Potential Contact (IPC) method. Furthermore, we provide a novel, hybrid explicit / implicit time integration scheme for the magnetic potential based on a distance criterion. This reduces the fill-in of the energy Hessian in cases where the change in magnetic potential energy is small, leading to a simulation speedup without compromising the stability of the system. The resulting system yields a strongly coupled method for the robust simulation of magnetic effects. We showcase the robustness in theory by analyzing the behavior of the magnetic attraction against the contact resolution. Furthermore, we display stability in practice by simulating exceedingly strong and arbitrarily shaped magnets. The results are free of artifacts like bouncing for time step sizes larger than with the equivalent weakly coupled approach. Finally, we showcase the utility of our method in different scenarios with complex joints and numerous magnets.

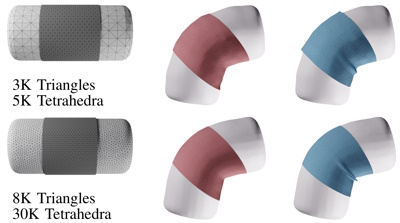

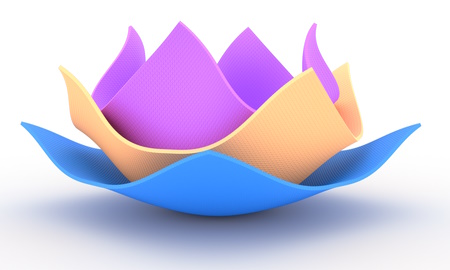

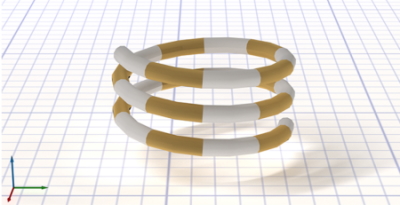

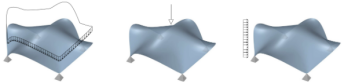

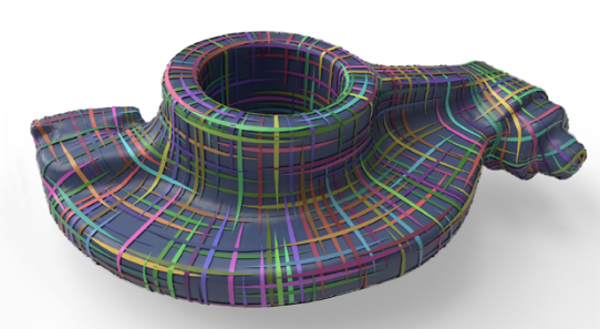

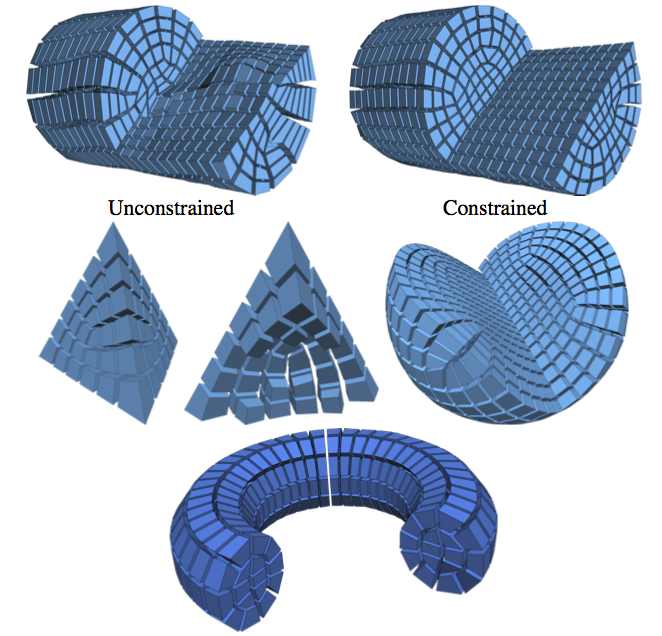

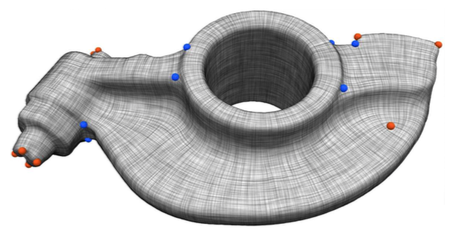

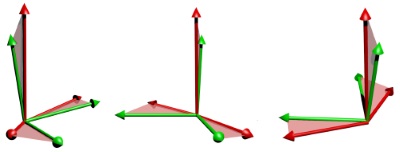

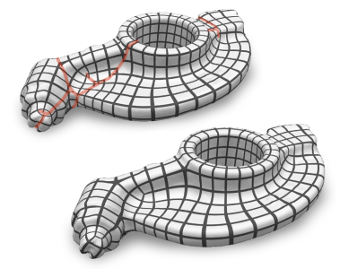

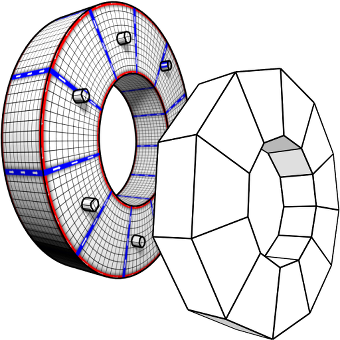

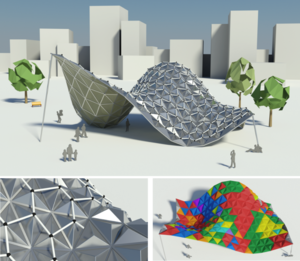

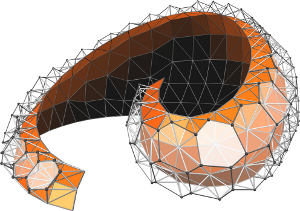

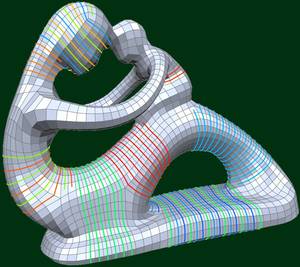

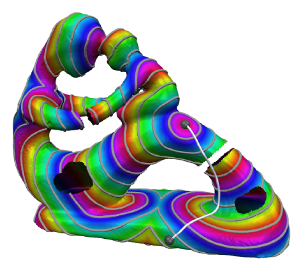

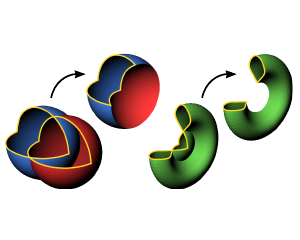

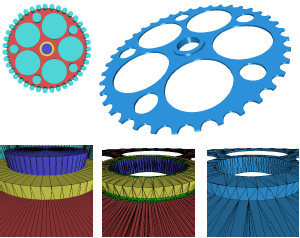

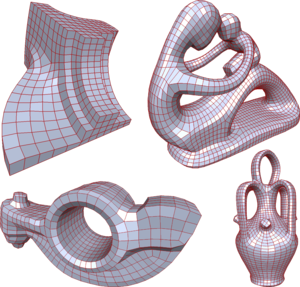

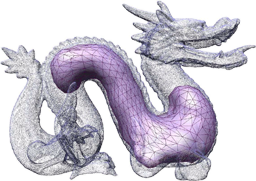

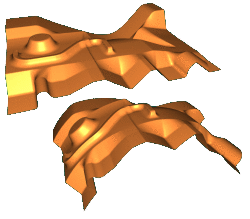

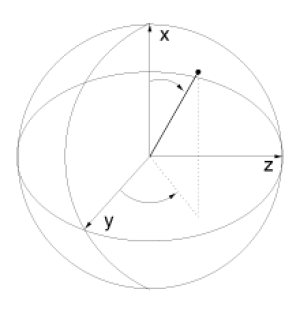

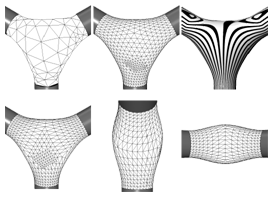

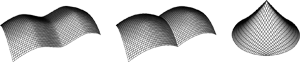

Curved Three-Director Cosserat Shells with Strong Coupling

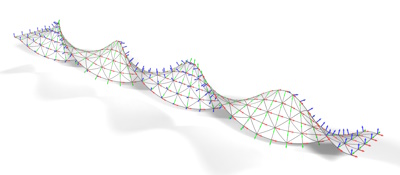

Continuum-based shell models are an established approach for the simulation of thin deformables in computer graphics. However, existing research in physically-based animation is mostly focused on shear-rigid Kirchhoff-Love shells. In this work we explore three-director Cosserat (micropolar) shells which introduce additional rotational degrees of freedom. This microrotation field models transverse shearing and in-plane drilling rotations. We propose an incremental potential formulation of the Cosserat shell dynamics which allows for strong coupling with frictional contact and other physical systems. We evaluate a corresponding finite element discretization for non-planar shells using second-order elements which alleviates shear-locking and permits simulation of curved geometries. Our formulation and the discretization, in particular of the rotational degrees of freedom, is designed to integrate well with typical simulation approaches in physically-based animation. While the discretization of the rotations requires some care, we demonstrate that they do not pose significant numerical challenges in Newton’s method. In our experiments we also show that the codimensional shell model is consistent with the respective three-dimensional model. We qualitatively compare our formulation with Kirchhoff-Love shells and demonstrate intriguing use cases for the additional modes of control over dynamic deformations offered by the Cosserat model such as directly prescribing rotations or angular velocities and influencing the shell’s curvature.

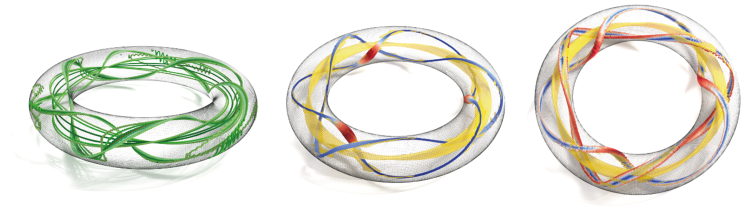

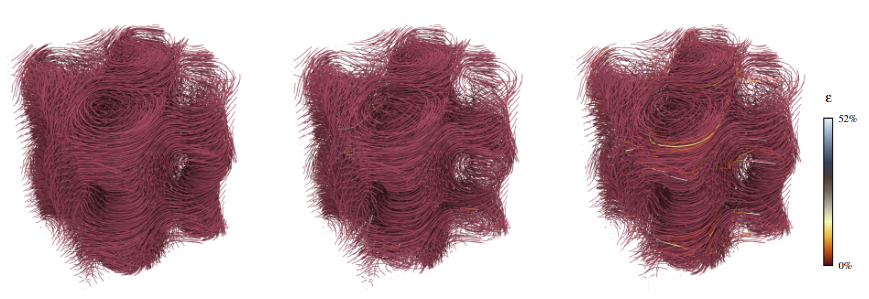

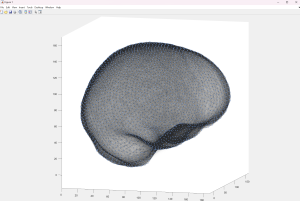

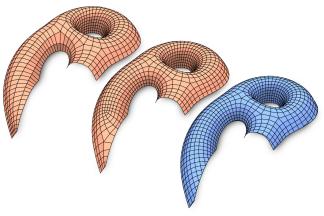

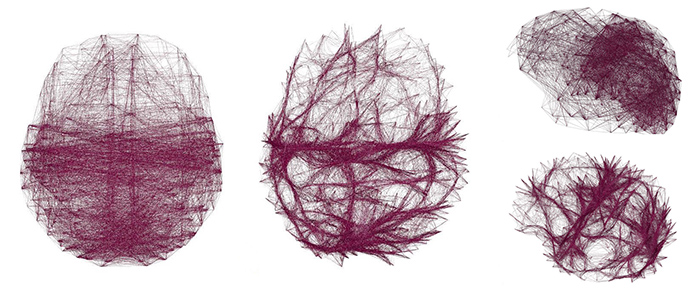

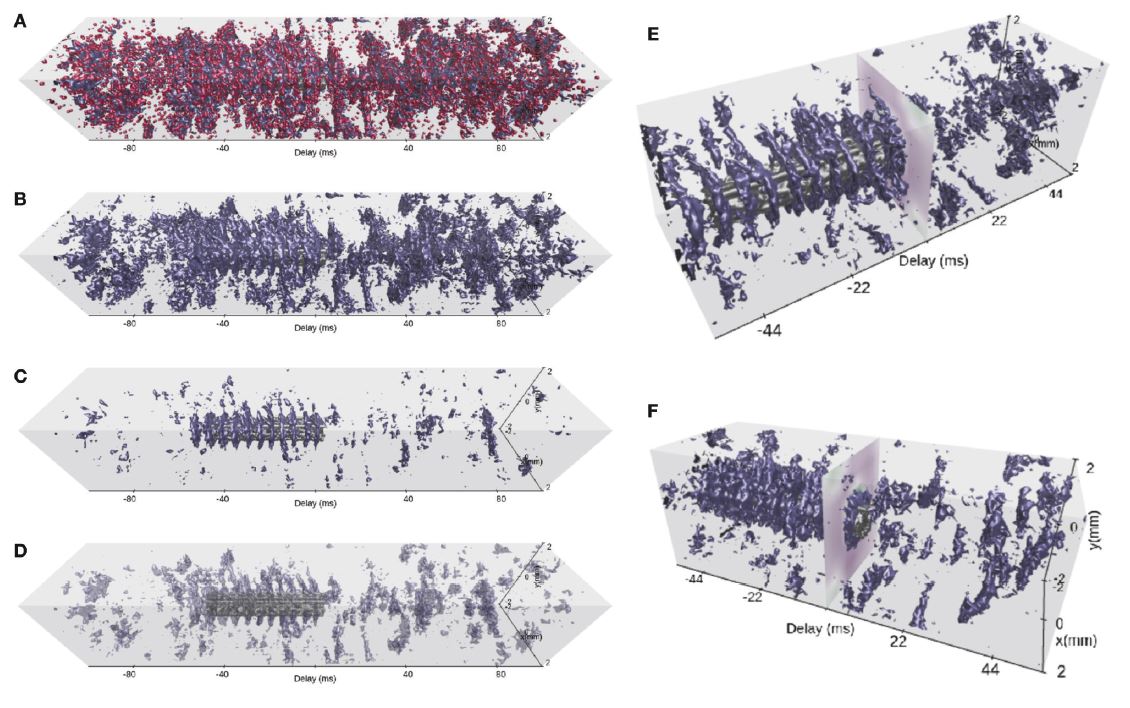

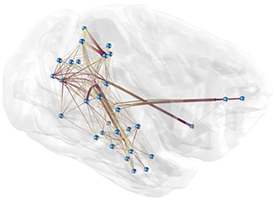

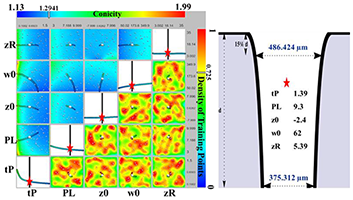

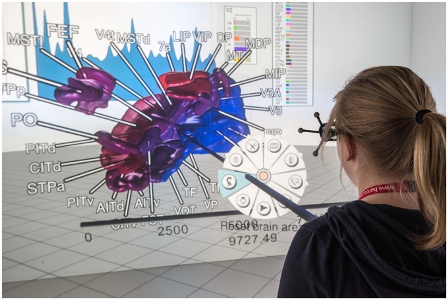

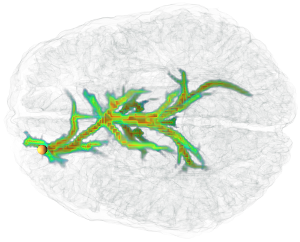

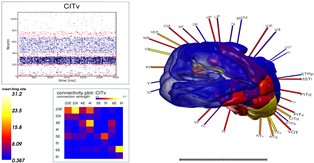

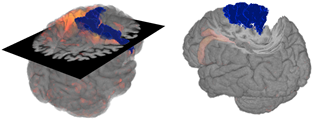

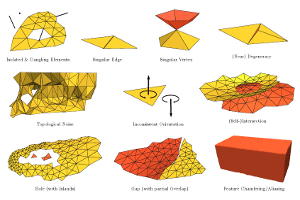

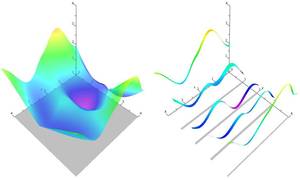

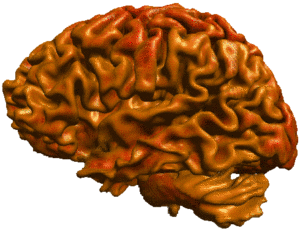

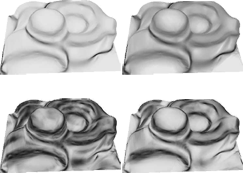

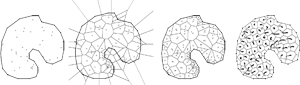

Exploring Uncertainty Visualization for Degenerate Tensors in 3D Symmetric Second-Order Tensor Field Ensembles

Second-order tensors are fundamental in various scientific and engineering domains, as they can represent properties such as material stresses or diffusion processes in brain tissue. In recent years, several approaches have been introduced and improved to analyze these fields using topological features, such as degenerate tensor locations, i.e., the tensor has repeated eigenvalues, or normal surfaces. Traditionally, the identification of such features has been limited to single tensor fields. However, it has become common to create ensembles to account for uncertainties and variability in simulations and measurements. In this work, we explore novel methods for describing and visualizing degenerate tensor locations in 3D symmetric second-order tensor field ensembles. We base our considerations on the tensor mode and analyze its practicality in characterizing the uncertainty of degenerate tensor locations before proposing a variety of visualization strategies to effectively communicate degenerate tensor information. We demonstrate our techniques for synthetic and simulation data sets. The results indicate that the interplay of different descriptions for uncertainty can effectively convey information on degenerate tensor locations.
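As a rough illustration of the tensor mode mentioned in the abstract, the sketch below uses the commonly cited definition mode(T) = 3√6 · det(D / ‖D‖), where D is the deviatoric part of T and ‖·‖ is the Frobenius norm. This is not code from the paper; the function name `tensor_mode` and the nested-list input format are assumptions made for this example. Under this definition, a non-isotropic tensor with a repeated eigenvalue has a mode of +1 or -1, which is why the mode can serve as an indicator of degeneracy.

```python
import math


def tensor_mode(t):
    """Mode of a symmetric 3x3 tensor given as nested lists; lies in [-1, 1].

    Hypothetical helper for illustration only, not the paper's implementation.
    """
    mean = (t[0][0] + t[1][1] + t[2][2]) / 3.0
    # Deviatoric part: subtract the mean of the diagonal from each diagonal entry.
    d = [[t[i][j] - (mean if i == j else 0.0) for j in range(3)] for i in range(3)]
    # Frobenius norm of the deviatoric part.
    norm = math.sqrt(sum(d[i][j] ** 2 for i in range(3) for j in range(3)))
    if norm == 0.0:
        raise ValueError("mode is undefined for isotropic tensors")
    # Determinant of the 3x3 deviatoric part by cofactor expansion.
    det = (
        d[0][0] * (d[1][1] * d[2][2] - d[1][2] * d[2][1])
        - d[0][1] * (d[1][0] * d[2][2] - d[1][2] * d[2][0])
        + d[0][2] * (d[1][0] * d[2][1] - d[1][1] * d[2][0])
    )
    return 3.0 * math.sqrt(6.0) * det / norm ** 3


# Two smallest eigenvalues equal: mode close to +1.
linear = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Two largest eigenvalues equal: mode close to -1.
planar = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
# Three distinct, evenly spaced eigenvalues: mode close to 0.
generic = [[3.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
print(tensor_mode(linear), tensor_mode(planar), tensor_mode(generic))
```

In an ensemble setting, one could evaluate such a function per member at each location and inspect how the values spread around ±1; how the paper actually aggregates the mode is not stated in the abstract.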

Best Paper Award!

@INPROCEEDINGS{10771098,

author={Koenen, Jens and Petersen, Marvin and Garth, Christoph and Gerrits, Tim},

booktitle={2024 IEEE Visualization and Visual Analytics (VIS)},

title={DaVE - A Curated Database of Visualization Examples},

year={2024},

volume={},

number={},

pages={11-15},

keywords={Codes;Visual analytics;High performance computing;Data visualization;Containers;Visual databases;Visualization;Curated Database;High-Performance Computing},

doi={10.1109/VIS55277.2024.00010}

}

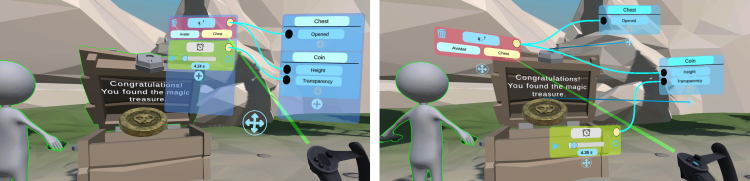

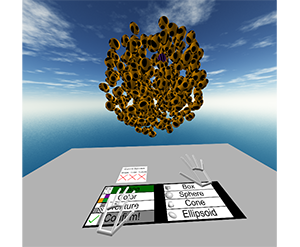

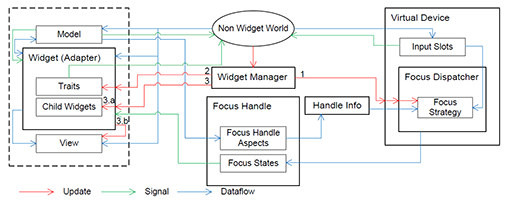

Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior

Immersive authoring enables content creation for virtual environments without a break of immersion. To enable immersive authoring of reactive behavior for a broad audience, we present modulation mapping, a simplified visual programming technique. To evaluate the applicability of our technique, we investigate the role of reference frames in which the programming elements are positioned, as this can affect the user experience. Thus, we developed two interface layouts: "surround-referenced" and "object-referenced". The former positions the programming elements relative to the physical tracking space, and the latter relative to the virtual scene objects. We compared the layouts in an empirical user study (n = 34) and found the surround-referenced layout faster, lower in task load, less cluttered, easier to learn and use, and preferred by users. Qualitative feedback, however, revealed the object-referenced layout as more intuitive, engaging, and valuable for visual debugging. Based on the results, we propose initial design implications for immersive authoring of reactive behavior by visual programming. Overall, modulation mapping was found to be an effective means for creating reactive behavior by the participants.

Honorable Mention for Best Paper!

@inproceedings{eroglu2024choose,

title={Choose Your Reference Frame Right: An Immersive Authoring Technique for Creating Reactive Behavior},

author={Eroglu, Sevinc and Schmitz, Patric and Sinke, Kilian and Anders, David and Kuhlen, Torsten Wolfgang and Weyers, Benjamin},

booktitle={30th ACM Symposium on Virtual Reality Software and Technology},

pages={1--11},

year={2024}

}

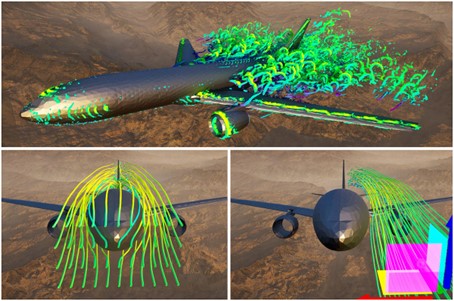

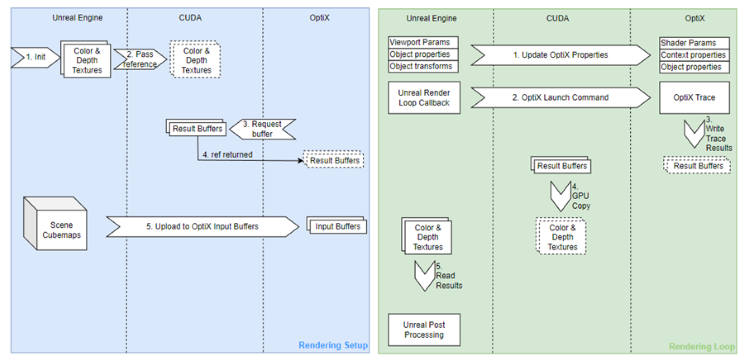

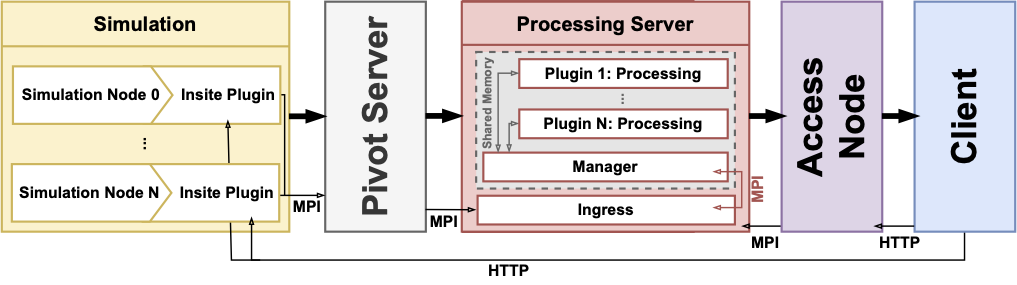

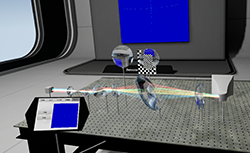

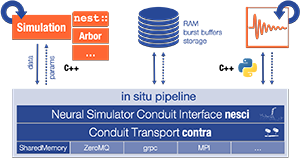

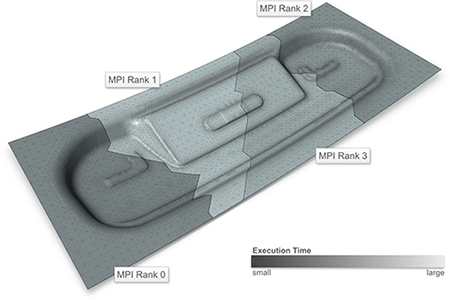

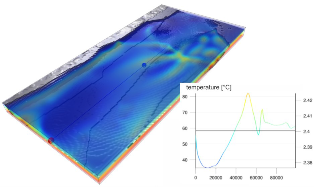

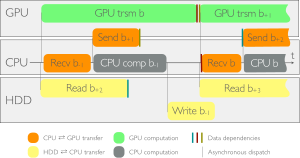

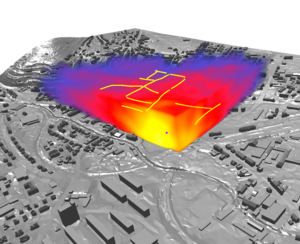

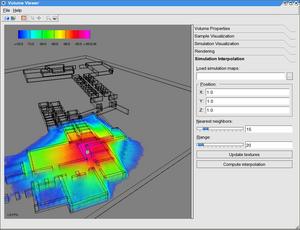

InsitUE - Enabling Hybrid In-situ Visualizations through Unreal Engine and Catalyst

In-situ, in-transit, and hybrid approaches have become well-established visualization methods over the last decades. Especially for large simulations, these paradigms enable visualization and additionally allow for early insights. While there has been a lot of research on combining these approaches with classical visualization software, only a few worked on combining in-situ/in-transit approaches with modern game engines. In this paper, we present and demonstrate InsitUE, a Catalyst2 compatible hybrid workflow that enables interactive real-time visualization of simulation results using Unreal Engine.

@InProceedings{10.1007/978-3-031-73716-9_33,

author="Kr{\"u}ger, Marcel

and Milke, Jan Frieder

and Kuhlen, Torsten W.

and Gerrits, Tim",

editor="Weiland, Mich{\`e}le

and Neuwirth, Sarah

and Kruse, Carola

and Weinzierl, Tobias",

title="InsitUE - Enabling Hybrid In-situ Visualizations Through Unreal Engine and Catalyst",

booktitle="High Performance Computing. ISC High Performance 2024 International Workshops",

year="2025",

publisher="Springer Nature Switzerland",

address="Cham",

pages="469--481",

abstract="In-situ, in-transit, and hybrid approaches have become well-established visualization methods over the last decades. Especially for large simulations, these paradigms enable visualization and additionally allow for early insights. While there has been a lot of research on combining these approaches with classical visualization software, only a few worked on combining in-situ/in-transit approaches with modern game engines. In this paper, we present and demonstrate InsitUE, a Catalyst2 compatible hybrid workflow that enables interactive real-time visualization of simulation results using Unreal Engine.",

isbn="978-3-031-73716-9"

}

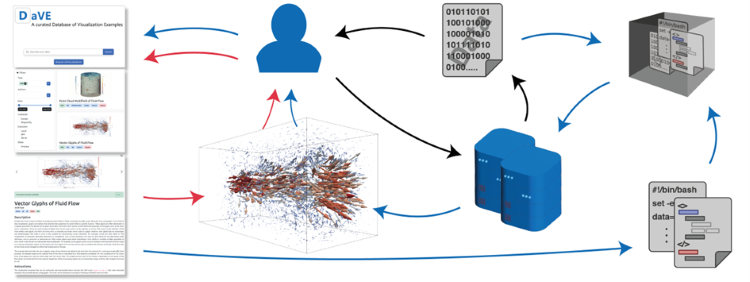

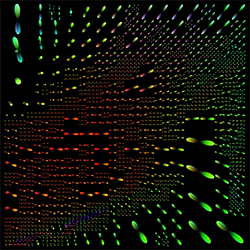

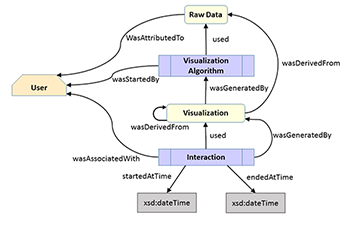

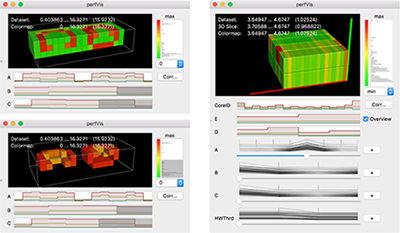

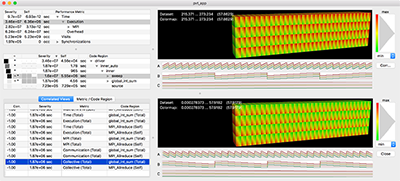

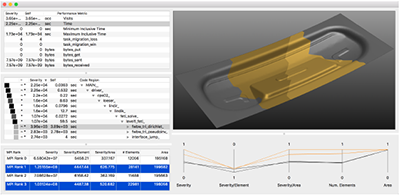

DaVE - A Curated Database of Visualization Examples

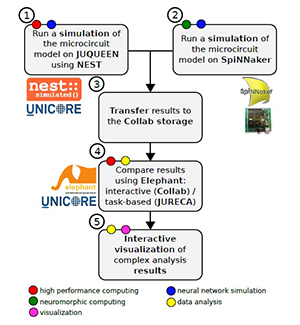

Visualization, from simple line plots to complex high-dimensional visual analysis systems, has established itself throughout numerous domains to explore, analyze, and evaluate data. Applying such visualizations in the context of simulation science where High-Performance Computing (HPC) produces ever-growing amounts of data that is more complex, potentially multidimensional, and multimodal, takes up resources and a high level of technological experience often not available to domain experts. In this work, we present DaVE -- a curated database of visualization examples, which aims to provide state-of-the-art and advanced visualization methods that arise in the context of HPC applications. Based on domain- or data-specific descriptors entered by the user, DaVE provides a list of appropriate visualization techniques, each accompanied by descriptions, examples, references, and resources. Sample code, adaptable container templates, and recipes for easy integration in HPC applications can be downloaded for easy access to high-fidelity visualizations. While the database is currently filled with a limited number of entries based on a broad evaluation of needs and challenges of current HPC users, DaVE is designed to be easily extended by experts from both the visualization and HPC communities.

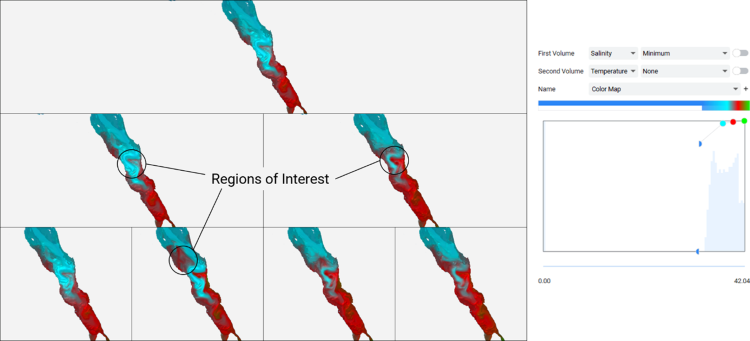

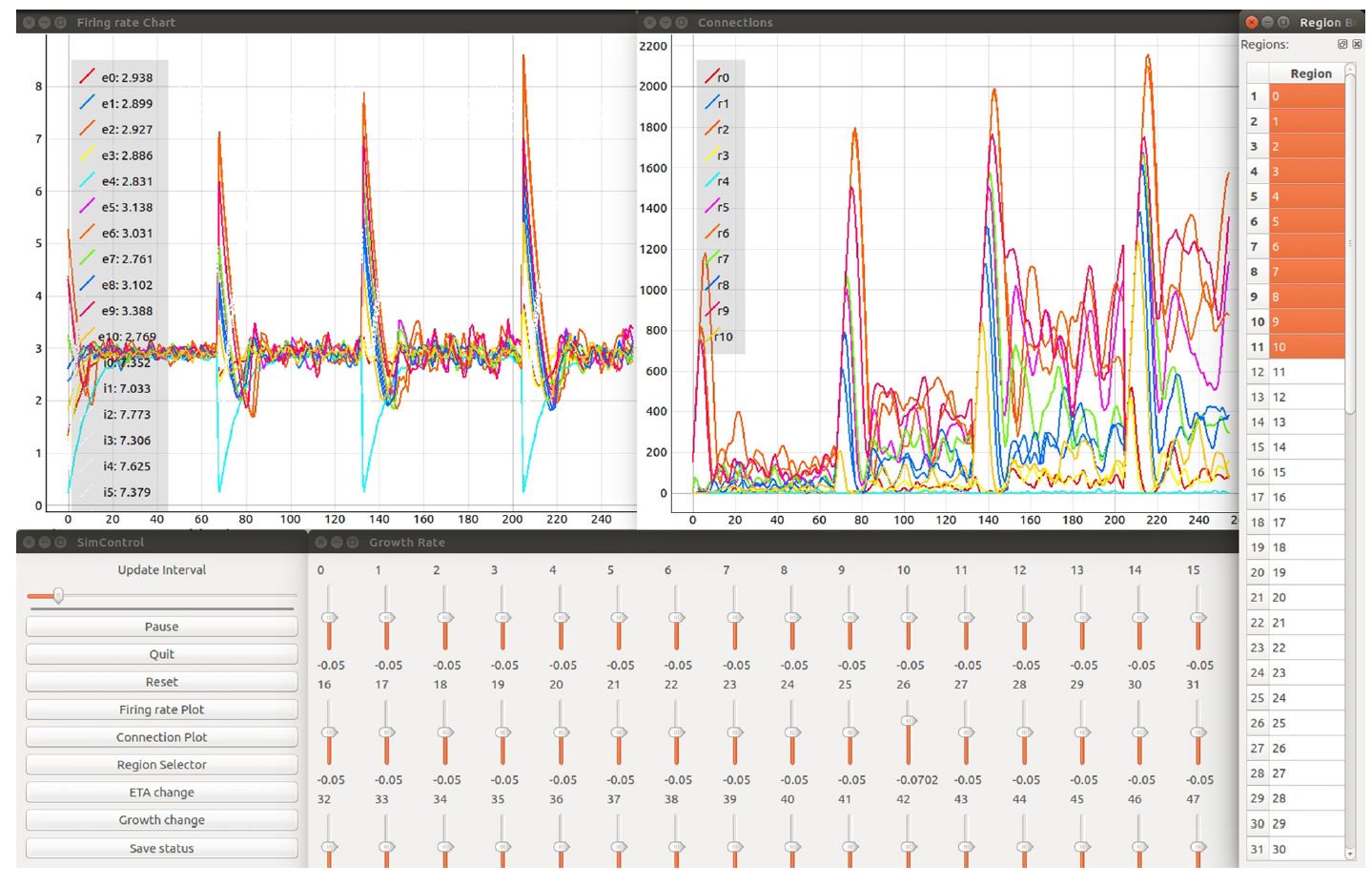

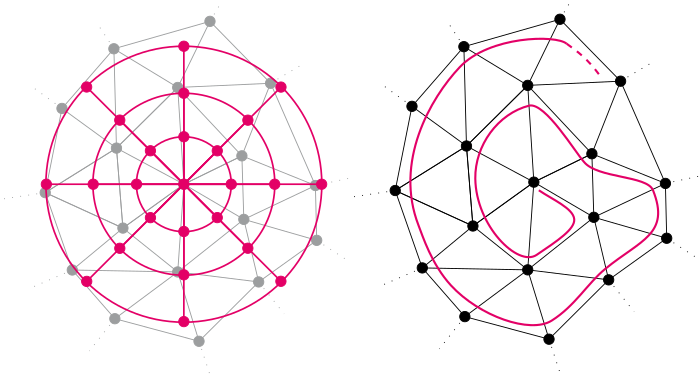

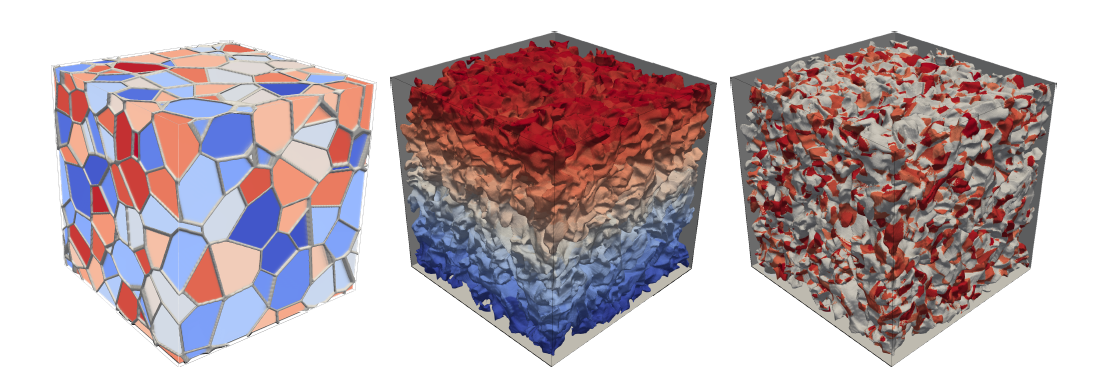

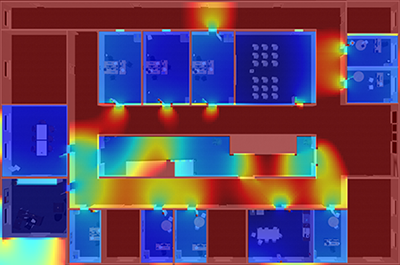

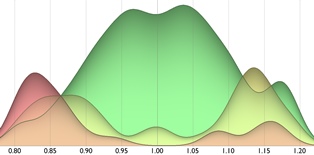

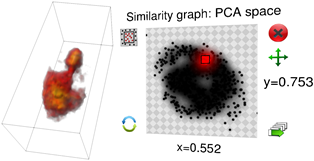

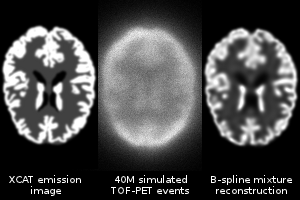

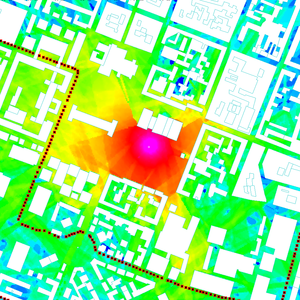

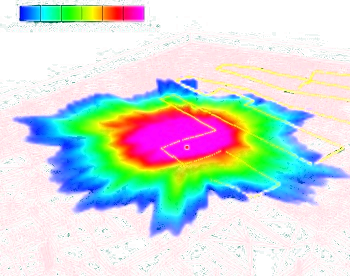

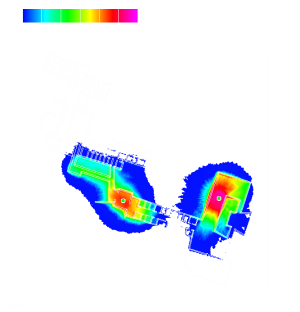

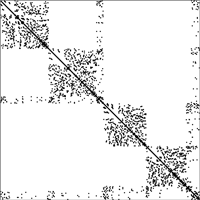

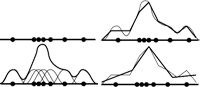

Region-based Visualization in Hierarchically Clustered Ensemble Volumes

Ensembles of simulations are generated to capture uncertainties in the simulation model and its initialization. When simulating 3D spatial phenomena, the value distributions may vary from region to region. Therefore, visualization methods need to adapt to different types and shapes of statistical distributions across regions. In the case of normal distribution, a region is well represented and visualized by the means and standard deviations. In the case of multi-modal distributions, the ensemble can be subdivided to investigate whether sub-ensembles exhibit uni-modal distributions in that region. We, therefore, propose an interactive visual analysis approach for region-based visualization within a hierarchy of sub-ensembles. The hierarchy of sub-ensembles is created using hierarchical clustering, while regions can be defined using parallel coordinates of statistical properties. The identified regions are rendered in a hierarchy of interactive volume renderers. We apply our approach to two real-world simulation ensembles to show its usability.

@inproceedings{10.2312:vmv.20241206,

booktitle = {Vision, Modeling, and Visualization},

editor = {Linsen, Lars and Thies, Justus},

title = {{Region-based Visualization in Hierarchically Clustered Ensemble Volumes}},

author = {Rave, Hennes and Evers, Marina and Gerrits, Tim and Linsen, Lars},

year = {2024},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-247-9},

DOI = {10.2312/vmv.20241206}

}

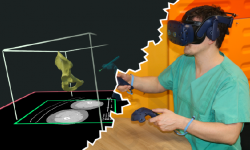

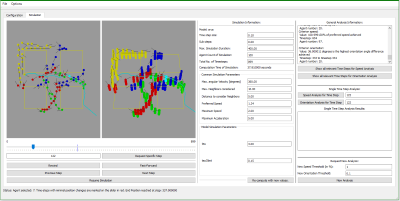

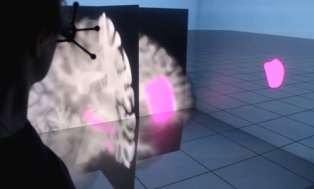

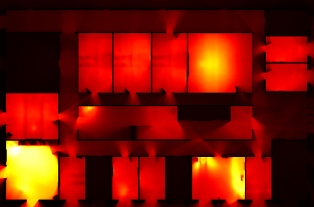

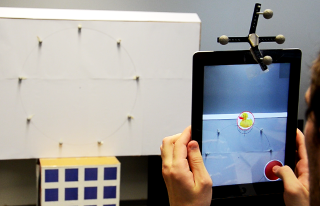

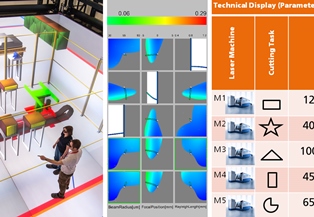

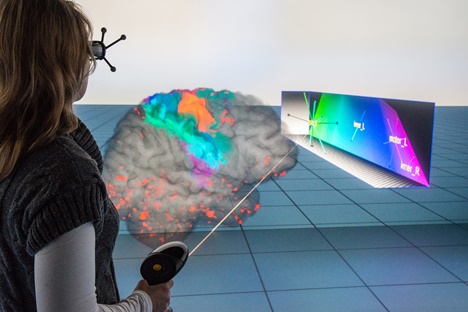

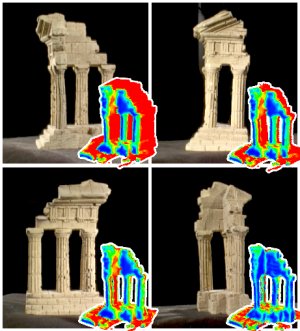

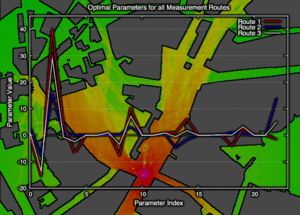

Virtual Reality as a Tool for Monitoring Additive Manufacturing Processes via Digital Shadows

We present a data acquisition and visualization pipeline that allows experts to monitor additive manufacturing processes, in particular laser metal deposition with wire (LMD-w) processes, in immersive virtual reality. Our virtual environment consists of a digital shadow of the LMD-w production site enriched with additional measurement data shown on both static as well as handheld virtual displays. Users can explore the production site by enhanced teleportation capabilities that enable them to change their scale as well as their elevation above the ground plane. In an exploratory user study with 22 participants, we demonstrate that our system is generally suitable for the supervision of LMD-w processes while generating low task load and cybersickness. Therefore, it serves as a first promising step towards the successful application of virtual reality technology in the comparatively young field of additive manufacturing.

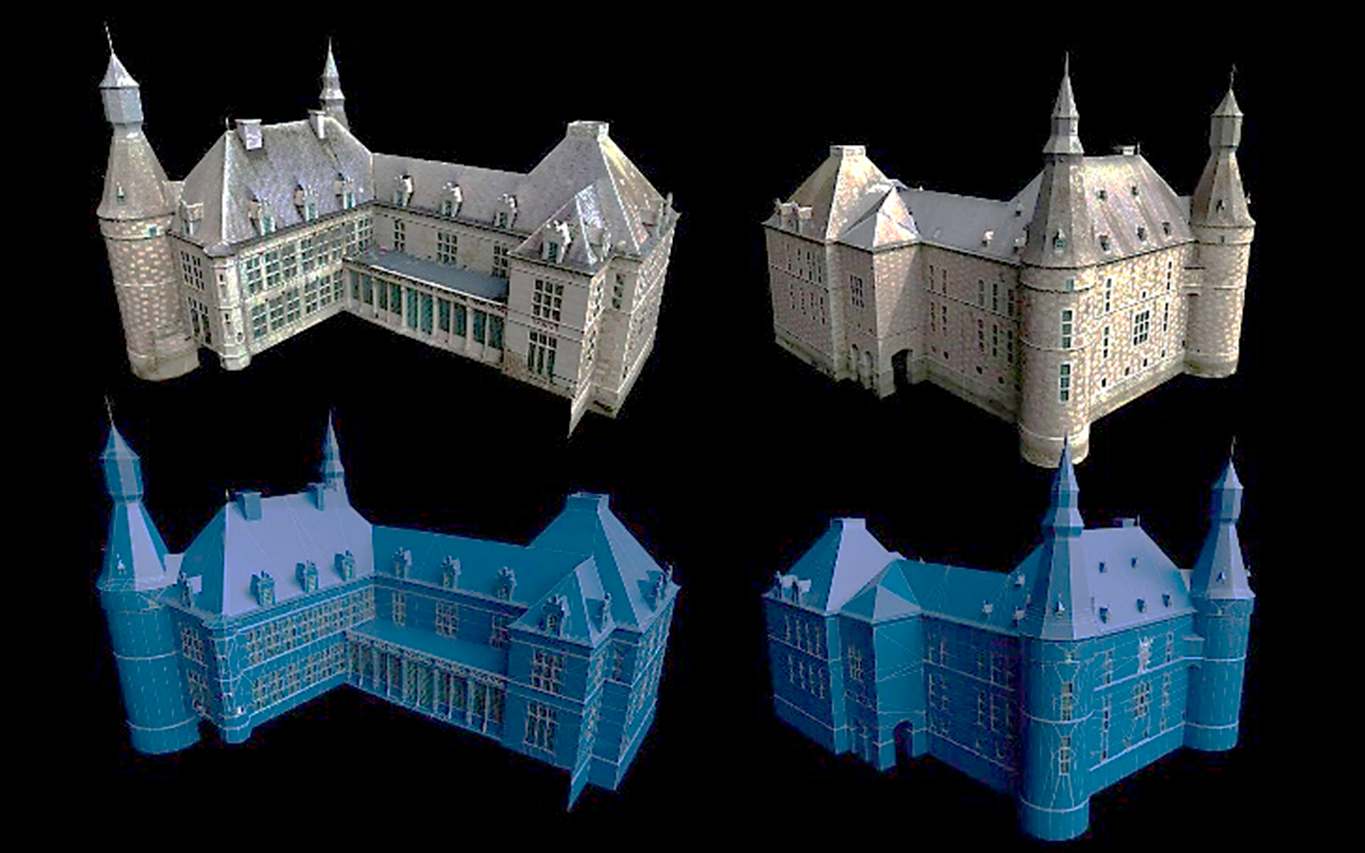

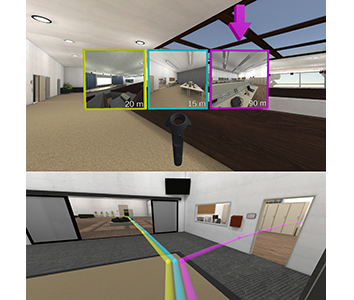

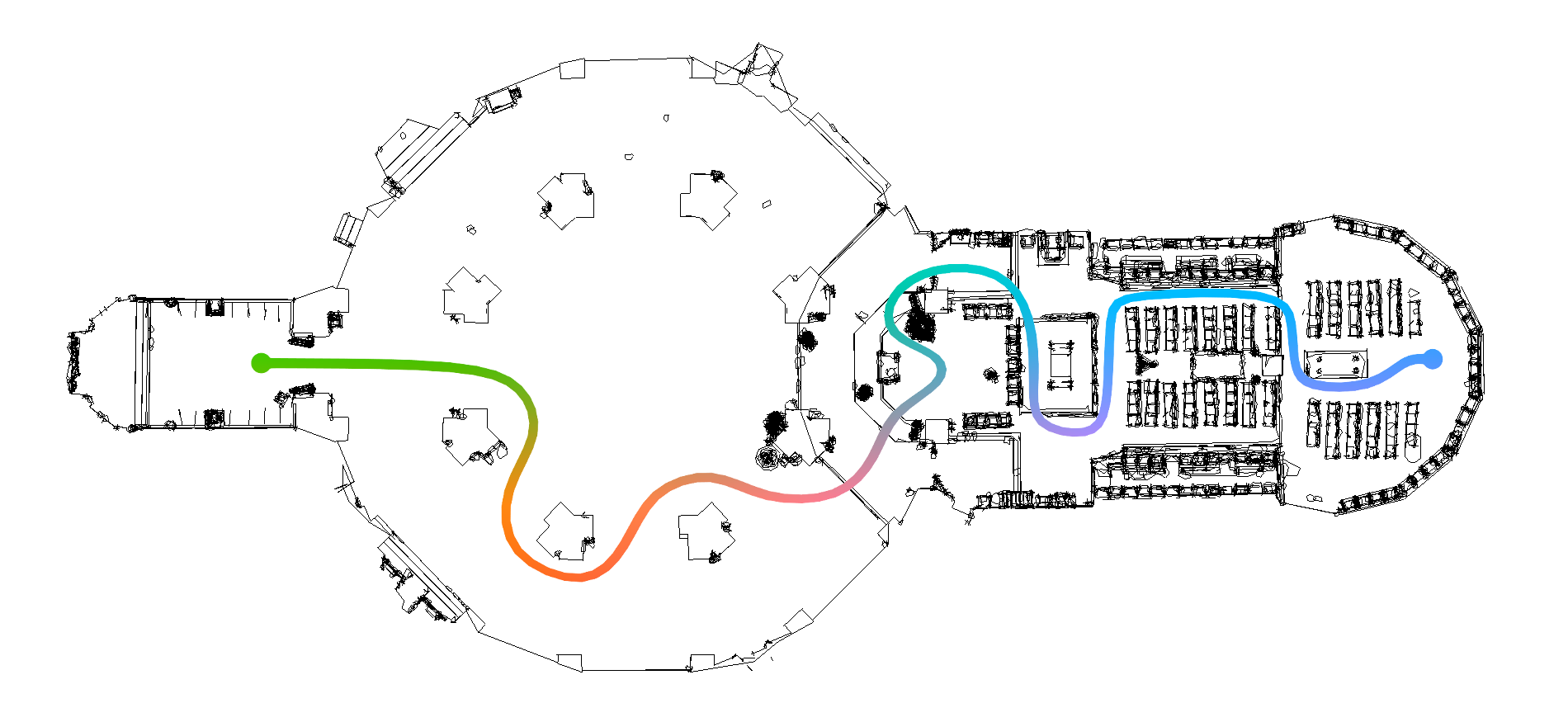

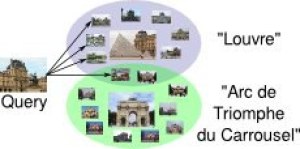

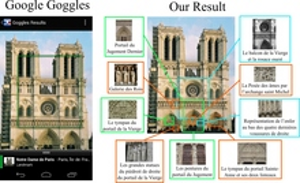

Semi-Automated Guided Teleportation through Immersive Virtual Environments

Immersive knowledge spaces like museums or cultural sites are often explored by traversing pre-defined paths that are curated to unfold a specific educational narrative. To support this type of guided exploration in VR, we present a semi-automated, handsfree path traversal technique based on teleportation that features a slow-paced interaction workflow targeted at fostering knowledge acquisition and maintaining spatial awareness. In an empirical user study with 34 participants, we evaluated two variations of our technique, differing in the presence or absence of intermediate teleportation points between the main points of interest along the route. While visiting additional intermediate points was objectively less efficient, our results indicate significant benefits of this approach regarding the user’s spatial awareness and perception of interface dependability. However, the user’s perception of flow, presence, attractiveness, perspicuity, and stimulation did not differ significantly. The overall positive reception of our approach encourages further research into semi-automated locomotion based on teleportation and provides initial insights into the design space of successful techniques in this domain.

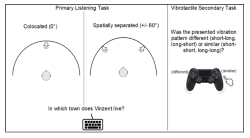

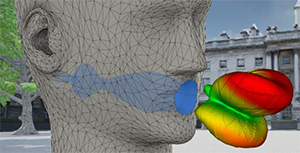

A Lecturer’s Voice Quality and its Effect on Memory, Listening Effort, and Perception in a VR Environment

Many lecturers develop voice problems, such as hoarseness. Nevertheless, research on how voice quality influences listeners’ perception, comprehension, and retention of spoken language is limited to a small number of audio-only experiments. We aimed to address this gap by using audio-visual virtual reality (VR) to investigate the impact of a lecturer’s hoarseness on university students’ heard text recall, listening effort, and listening impression. Fifty participants were immersed in a virtual seminar room, where they engaged in a Dual-Task Paradigm. They listened to narratives presented by a virtual female professor, who spoke in either a typical or hoarse voice. Simultaneously, participants performed a secondary task. Results revealed significantly prolonged secondary-task response times with the hoarse voice compared to the typical voice, indicating increased listening effort. Subjectively, participants rated the hoarse voice as more annoying, effortful to listen to, and impeding for their cognitive performance. No effect of voice quality was found on heard text recall, suggesting that, while hoarseness may compromise certain aspects of spoken language processing, this might not necessarily result in reduced information retention. In summary, our findings underscore the importance of promoting vocal health among lecturers, which may contribute to enhanced listening conditions in learning spaces.

@article{Schiller2024,

author = {Isabel S. Schiller and Carolin Breuer and Lukas Aspöck and Jonathan Ehret and Andrea Bönsch and Torsten W. Kuhlen and Janina Fels and Sabine J. Schlittmeier},

doi = {10.1038/s41598-024-63097-6},

issn = {2045-2322},

issue = {1},

journal = {Scientific Reports},

keywords = {Audio-visual language processing,Virtual reality,Voice quality},

month = {5},

pages = {12407},

pmid = {38811832},

title = {A lecturer’s voice quality and its effect on memory, listening effort, and perception in a VR environment},

volume = {14},

url = {https://www.nature.com/articles/s41598-024-63097-6},

year = {2024},

}

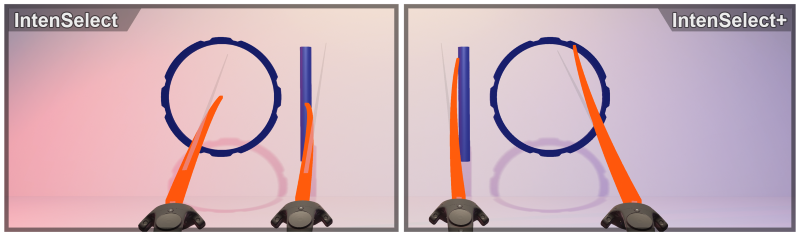

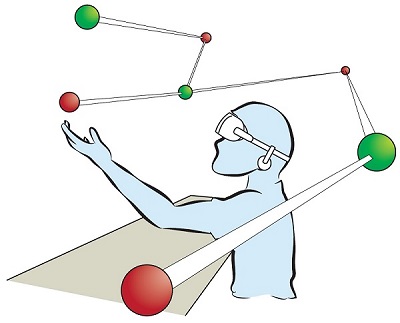

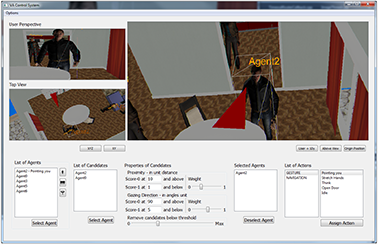

IntenSelect+: Enhancing Score-Based Selection in Virtual Reality

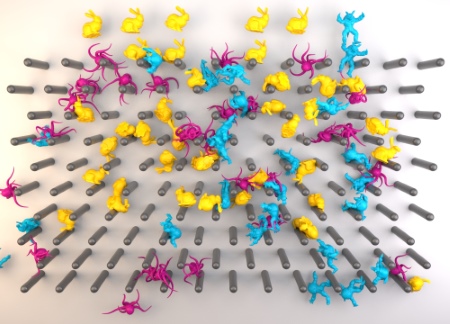

Object selection in virtual environments is one of the most common and recurring interaction tasks. Therefore, the used technique can critically influence a system’s overall efficiency and usability. IntenSelect is a scoring-based selection-by-volume technique that was shown to offer improved selection performance over conventional raycasting in virtual reality. This initial method, however, is most pronounced for small spherical objects that converge to a point-like appearance only, is challenging to parameterize, and has inherent limitations in terms of flexibility. We present an enhanced version of IntenSelect called IntenSelect+ designed to overcome multiple shortcomings of the original IntenSelect approach. In an empirical within-subjects user study with 42 participants, we compared IntenSelect+ to IntenSelect and conventional raycasting on various complex object configurations motivated by prior work. In addition to replicating the previously shown benefits of IntenSelect over raycasting, our results demonstrate significant advantages of IntenSelect+ over IntenSelect regarding selection performance, task load, and user experience. We, therefore, conclude that IntenSelect+ is a promising enhancement of the original approach that enables faster, more precise, and more comfortable object selection in immersive virtual environments.

@ARTICLE{10459000,

author={Krüger, Marcel and Gerrits, Tim and Römer, Timon and Kuhlen, Torsten and Weissker, Tim},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={IntenSelect+: Enhancing Score-Based Selection in Virtual Reality},

year={2024},

volume={},

number={},

pages={1-10},

keywords={Visualization;Three-dimensional displays;Task analysis;Usability;Virtual environments;Shape;Engines;Virtual Reality;3D User Interfaces;3D Interaction;Selection;Score-Based Selection;Temporal Selection;IntenSelect}

}

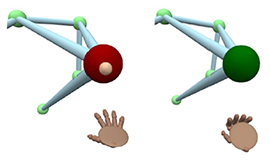

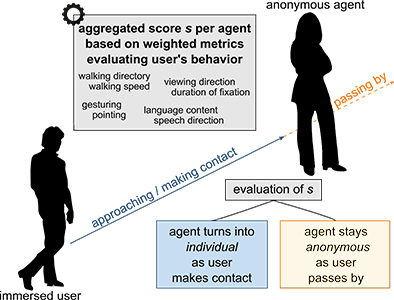

Authentication in Immersive Virtual Environments through Gesture-Based Interaction with a Virtual Agent

Authentication poses a significant challenge in VR applications, as conventional methods, such as text input for usernames and passwords, prove cumbersome and unnatural in immersive virtual environments. Alternatives such as password managers or two-factor authentication may necessitate users to disengage from the virtual experience by removing their headsets. Consequently, we present an innovative system that utilizes virtual agents (VAs) as interaction partners, enabling users to authenticate naturally through a set of ten gestures, such as high fives, fist bumps, or waving. By combining these gestures, users can create personalized authentications akin to PINs, potentially enhancing security without compromising the immersive experience. To gain first insights into the suitability of this authentication process, we conducted a formal expert review with five participants and compared our system to a virtual keypad authentication approach. While our results show that the effectiveness of a VA-mediated gesture-based authentication system is still limited, they motivate further research in this area.

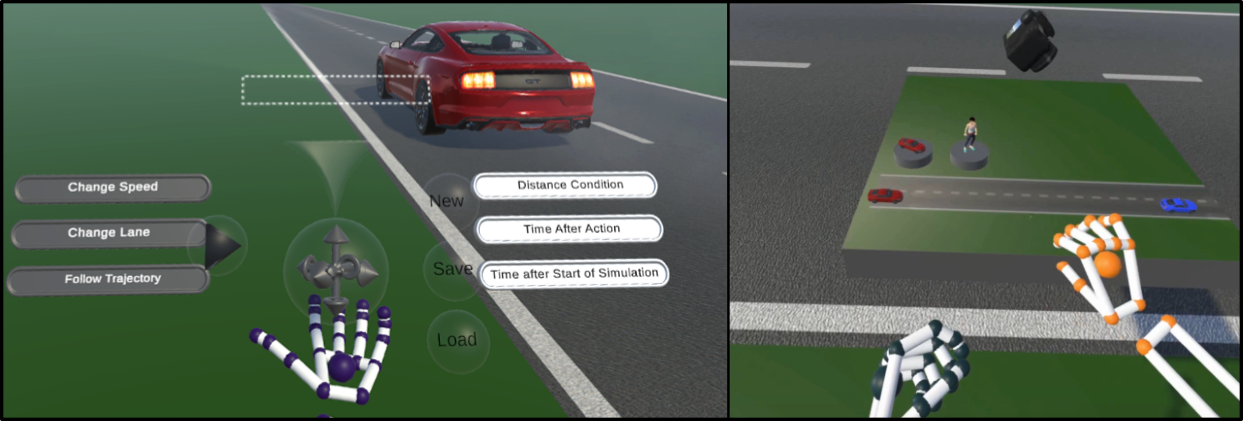

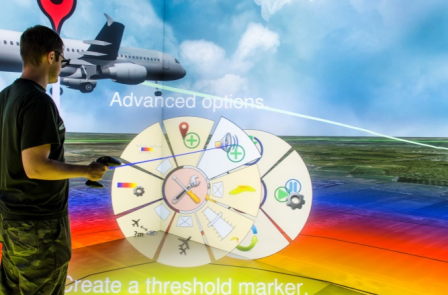

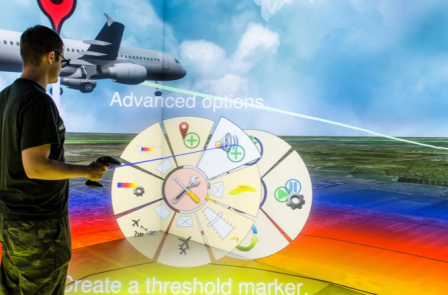

VRScenarioBuilder: Free-Hand Immersive Authoring Tool for Scenario-based Testing of Automated Vehicles

Virtual Reality has become an important medium in the automotive industry, providing engineers with a simulated platform to actively engage with and evaluate realistic driving scenarios for testing and validating automated vehicles. However, engineers are often restricted to using 2D desktop-based tools for designing driving scenarios, which can result in inefficiencies in the development and testing cycles. To this end, we present VRScenarioBuilder, an immersive authoring tool that enables engineers to create and modify dynamic driving scenarios directly in VR using free-hand interactions. Our tool features a natural user interface that enables users to create scenarios by using drag-and-drop building blocks. To evaluate the interface components and interactions, we conducted a user study with VR experts. Our findings highlight the effectiveness and potential improvements of our tool. We have further identified future research directions, such as exploring the spatial arrangement of the interface components and managing lengthy blocks.

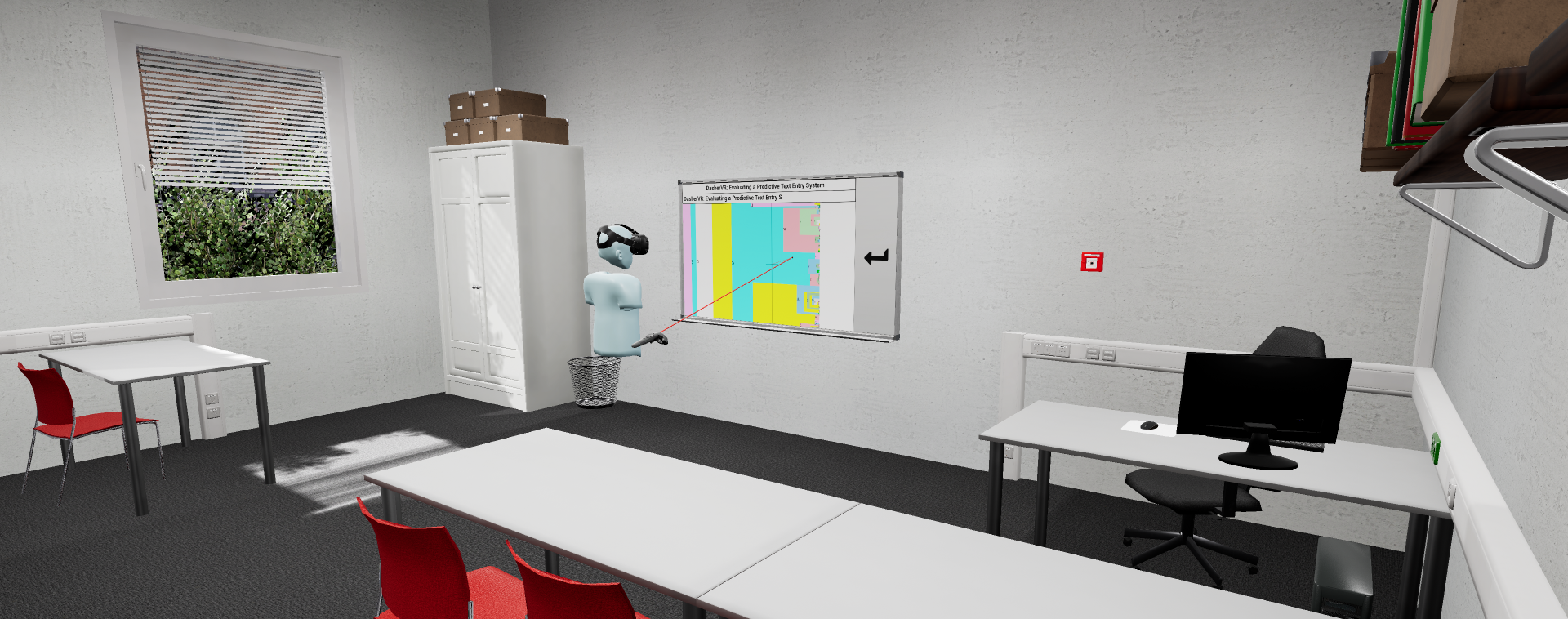

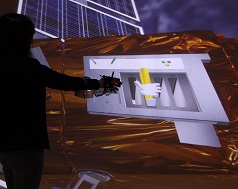

Game Engines for Immersive Visualization: Using Unreal Engine Beyond Entertainment

One core aspect of immersive visualization labs is to develop and provide powerful tools and applications that allow for efficient analysis and exploration of scientific data. As the requirements for such applications are often diverse and complex, the same applies to the development process. This has led to a myriad of different tools, frameworks, and approaches that grew and developed over time. The steady advance of commercial off-the-shelf game engines such as Unreal Engine has made them a valuable option for development in immersive visualization labs. In this work, we share our experience of migrating to Unreal Engine as a primary developing environment for immersive visualization applications. We share our considerations on requirements, present use cases developed in our lab to communicate advantages and challenges experienced, discuss implications on our research and development environments, and aim to provide guidance for others within our community facing similar challenges.

@article{10.1162/pres_a_00416,

author = {Krüger, Marcel and Gilbert, David and Kuhlen, Torsten W. and Gerrits, Tim},

title = "{Game Engines for Immersive Visualization: Using Unreal Engine Beyond Entertainment}",

journal = {PRESENCE: Virtual and Augmented Reality},

volume = {33},

pages = {31-55},

year = {2024},

month = {07},

abstract = "{One core aspect of immersive visualization labs is to develop and provide powerful tools and applications that allow for efficient analysis and exploration of scientific data. As the requirements for such applications are often diverse and complex, the same applies to the development process. This has led to a myriad of different tools, frameworks, and approaches that grew and developed over time. The steady advance of commercial off-the-shelf game engines such as Unreal Engine has made them a valuable option for development in immersive visualization labs. In this work, we share our experience of migrating to Unreal Engine as a primary developing environment for immersive visualization applications. We share our considerations on requirements, present use cases developed in our lab to communicate advantages and challenges experienced, discuss implications on our research and development environments, and aim to provide guidance for others within our community facing similar challenges.}",

issn = {1054-7460},

doi = {10.1162/pres_a_00416},

url = {https://doi.org/10.1162/pres\_a\_00416},

eprint = {https://direct.mit.edu/pvar/article-pdf/doi/10.1162/pres\_a\_00416/2465397/pres\_a\_00416.pdf},

}

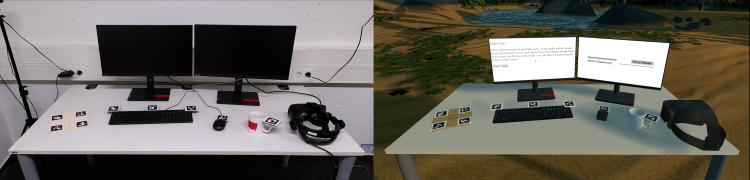

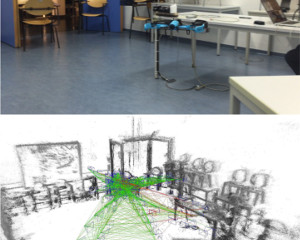

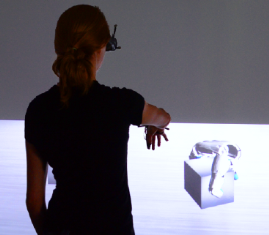

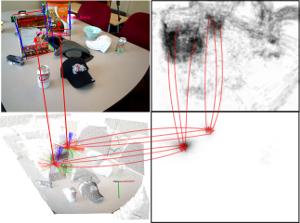

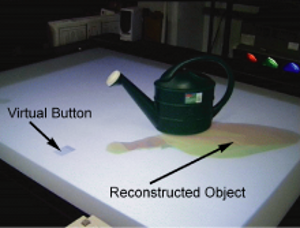

Demo: Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality

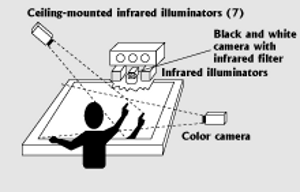

As virtual reality overlays the user’s view, challenges arise when interaction with their physical surroundings is still needed. In a seated workspace environment interaction with the physical surroundings can be essential to enable productive working. Interaction with e.g. physical mouse and keyboard can be difficult when no visual reference is given to where they are placed. This demo shows a combination of computer vision-based marker detection with machine-learning-based hand detection to bring users’ hands and arbitrary objects into VR.

@inproceedings{10.1145/3677386.3688879,

author = {Pape, Sebastian and Beierle, Jonathan Heinrich and Kuhlen, Torsten Wolfgang and Weissker, Tim},

title = {Webcam-based Hand- and Object-Tracking for a Desktop Workspace in Virtual Reality},

year = {2024},

isbn = {9798400710889},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3677386.3688879},

doi = {10.1145/3677386.3688879},

abstract = {As virtual reality overlays the user’s view, challenges arise when interaction with their physical surroundings is still needed. In a seated workspace environment interaction with the physical surroundings can be essential to enable productive working. Interaction with e.g. physical mouse and keyboard can be difficult when no visual reference is given to where they are placed. This demo shows a combination of computer vision-based marker detection with machine-learning-based hand detection to bring users’ hands and arbitrary objects into VR.},

booktitle = {Proceedings of the 2024 ACM Symposium on Spatial User Interaction},

articleno = {64},

numpages = {2},

keywords = {Hand-Tracking, Object-Tracking, Physical Props, Virtual Reality, Webcam},

location = {Trier, Germany},

series = {SUI '24}

}

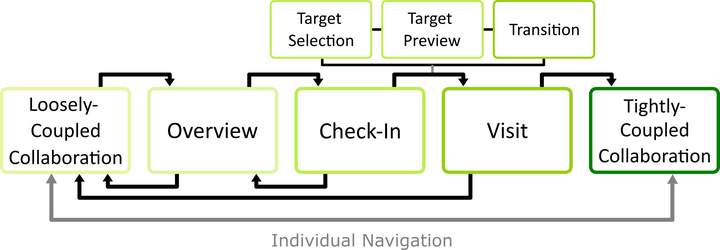

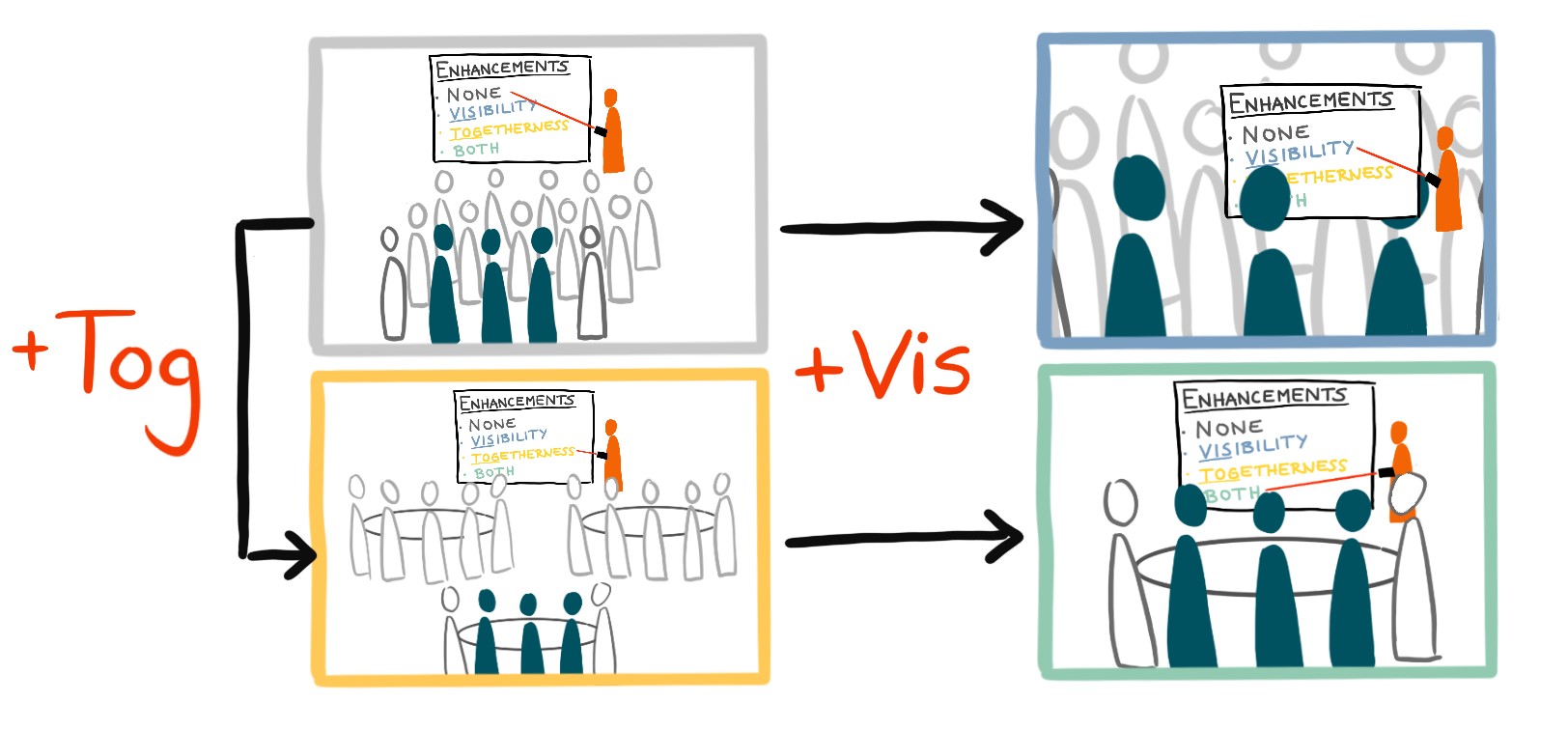

Come Look at This: Supporting Fluent Transitions between Tightly and Loosely Coupled Collaboration in Social Virtual Reality

Collaborative work in social virtual reality often requires an interplay of loosely coupled collaboration from different virtual locations and tightly coupled face-to-face collaboration. Without appropriate system mediation, however, transitioning between these phases requires high navigation and coordination efforts. In this paper, we present an interaction system that allows collaborators in virtual reality to seamlessly switch between different collaboration models known from related work. To this end, we present collaborators with functionalities that let them work on individual sub-tasks in different virtual locations, consult each other using asymmetric interaction patterns while keeping their current location, and temporarily or permanently join each other for face-to-face interaction. We evaluated our methods in a user study with 32 participants working in teams of two. Our quantitative results indicate that delegating the target selection process for a long-distance teleport significantly improves placement accuracy and decreases task load within the team. Our qualitative user feedback shows that our system can be applied to support flexible collaboration. In addition, the proposed interaction sequence received positive evaluations from teams with varying VR experiences.

@ARTICLE{10568966,

author={Bimberg, Pauline and Zielasko, Daniel and Weyers, Benjamin and Froehlich, Bernd and Weissker, Tim},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Come Look at This: Supporting Fluent Transitions between Tightly and Loosely Coupled Collaboration in Social Virtual Reality},

year={2024},

volume={},

number={},

pages={1-17},

keywords={Collaboration;Virtual environments;Navigation;Task analysis;Virtual reality;Three-dimensional displays;Teleportation;Virtual Reality;3D User Interfaces;Multi-User Environments;Social VR;Groupwork;Collaborative Interfaces},

doi={10.1109/TVCG.2024.3418009}}

Poster: Travel Speed, Spatial Awareness, And Implications for Egocentric Target-Selection-Based Teleportation - A Replication Design

Virtual travel in Virtual Reality experiences is common, offering users the ability to explore expansive virtual spaces. Various interfaces exist for virtual travel, with speed playing a crucial role in user experience and spatial awareness. Teleportation-based interfaces provide instantaneous transitions, whereas continuous and semi-continuous methods vary in speed and control. Prior research by Bowman et al. highlighted the impact of travel speed on spatial awareness, demonstrating that instantaneous travel can lead to user disorientation. However, additional cues, such as visual target selection, can aid in reorientation. This study replicates and extends Bowman’s experiment, investigating the influence of travel speed and visual target cues on spatial orientation.

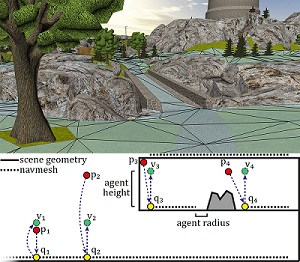

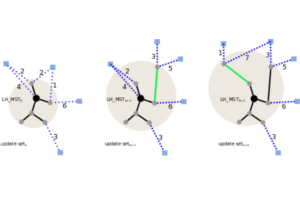

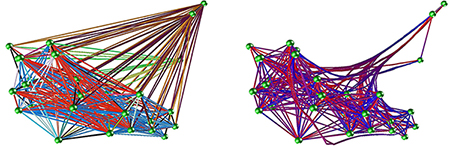

On the Computation of User Placements for Virtual Formation Adjustments during Group Navigation

Several group navigation techniques enable a single navigator to control travel for all group members simultaneously in social virtual reality. A key aspect of this process is the ability to rearrange the group into a new formation to facilitate the joint observation of the scene or to avoid obstacles on the way. However, the question of how users should be distributed within the new formation to create an intuitive transition that minimizes disruptions of ongoing social activities is currently not explored. In this paper, we begin to close this gap by introducing four user placement strategies based on mathematical considerations, discussing their benefits and drawbacks, and sketching further novel ideas to approach this topic from different angles in future work. Our work, therefore, contributes to the overarching goal of making group interactions in social virtual reality more intuitive and comfortable for the involved users.

@INPROCEEDINGS{10536250,

author={Weissker, Tim and Franzgrote, Matthis and Kuhlen, Torsten and Gerrits, Tim},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)},

title={On the Computation of User Placements for Virtual Formation Adjustments During Group Navigation},

year={2024},

volume={},

number={},

pages={396-402},

keywords={Three-dimensional displays;Navigation;Conferences;Virtual reality;Human factors;User interfaces;Task analysis;Human-centered computing—Human computer interaction (HCI)—Interaction paradigms—Virtual reality;Human-centered computing—Interaction design—Interaction design theory, concepts and paradigms},

doi={10.1109/VRW62533.2024.00077}}

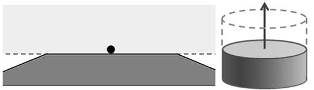

Try This for Size: Multi-Scale Teleportation in Immersive Virtual Reality

The ability of a user to adjust their own scale while traveling through virtual environments enables them to inspect tiny features being ant-sized and to gain an overview of the surroundings as a giant. While prior work has almost exclusively focused on steering-based interfaces for multi-scale travel, we present three novel teleportation-based techniques that avoid continuous motion flow to reduce the risk of cybersickness. Our approaches build on the extension of known teleportation workflows and suggest specifying scale adjustments either simultaneously with, as a connected second step after, or separately from the user’s new horizontal position. The results of a two-part user study with 30 participants indicate that the simultaneous and connected specification paradigms are both suitable candidates for effective and comfortable multi-scale teleportation with nuanced individual benefits. Scale specification as a separate mode, on the other hand, was considered less beneficial. We compare our findings to prior research and publish the executable of our user study to facilitate replication and further analyses.

@ARTICLE{10458384,

author={Weissker, Tim and Franzgrote, Matthis and Kuhlen, Torsten},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Try This for Size: Multi-Scale Teleportation in Immersive Virtual Reality},

year={2024},

volume={30},

number={5},

pages={2298-2308},

keywords={Teleportation;Navigation;Virtual environments;Three-dimensional displays;Visualization;Cybersickness;Collaboration;Virtual Reality;3D User Interfaces;3D Navigation;Head-Mounted Display;Teleportation;Multi-Scale},

doi={10.1109/TVCG.2024.3372043}}

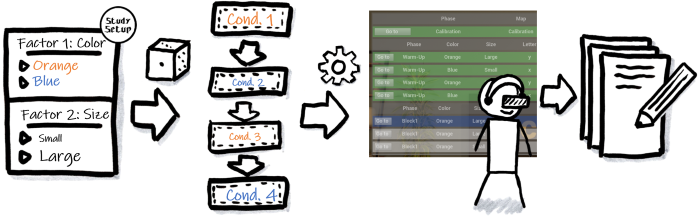

StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine

Setting up and conducting user studies is fundamental to virtual reality research. Yet, often these studies are developed from scratch, which is time-consuming and especially hard and error-prone for novice developers. In this paper, we introduce the StudyFramework, a framework specifically designed to streamline the setup and execution of factorial-design VR-based user studies within the Unreal Engine, significantly enhancing the overall process. We elucidate core concepts such as setup, randomization, the experimenter view, and logging. After utilizing our framework to set up and conduct their respective studies, 11 study developers provided valuable feedback through a structured questionnaire. This feedback, which was generally positive and highlighted the framework's simplicity and usability, is discussed in detail.

@InProceedings{Ehret2024a,

author={Ehret, Jonathan and Bönsch, Andrea and Fels, Janina and Schlittmeier, Sabine J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW): Workshop "Open Access Tools and Libraries for Virtual Reality"},

title={StudyFramework: Comfortably Setting up and Conducting Factorial-Design Studies Using the Unreal Engine},

year={2024}

}

Audiovisual Coherence: Is Embodiment of Background Noise Sources a Necessity?

Exploring the synergy between visual and acoustic cues in virtual reality (VR) is crucial for elevating user engagement and perceived (social) presence. We present a study exploring the necessity and design impact of background sound source visualizations to guide the design of future soundscapes. To this end, we immersed n = 27 participants using a head-mounted display (HMD) within a virtual seminar room with six virtual peers and a virtual female professor. Participants engaged in a dual-task paradigm involving simultaneously listening to the professor and performing a secondary vibrotactile task, followed by recalling the heard speech content. We compared three types of background sound source visualizations in a within-subject design: no visualization, static visualization, and animated visualization. Participants’ subjective ratings indicate the importance of animated background sound source visualization for an optimal coherent audiovisual representation, particularly when embedding peer-emitted sounds. However, despite this subjective preference, audiovisual coherence did not affect participants’ performance in the dual-task paradigm measuring their listening effort.

@InProceedings{Ehret2024b,

author={Ehret, Jonathan and Bönsch, Andrea and Schiller, Isabel S. and Breuer, Carolin and Aspöck, Lukas and Fels, Janina and Schlittmeier, Sabine J. and Kuhlen, Torsten W.},

booktitle={2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW): "Workshop on Virtual Humans and Crowds in Immersive Environments (VHCIE)"},

title={Audiovisual Coherence: Is Embodiment of Background Noise Sources a Necessity?},

year={2024}

}

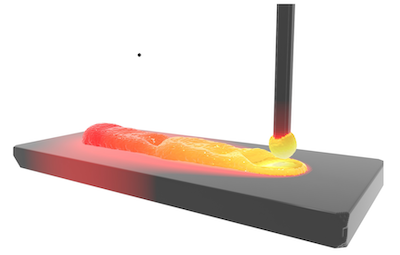

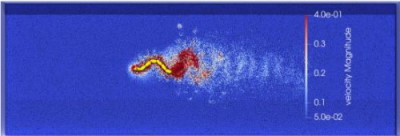

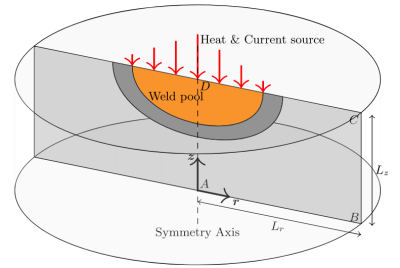

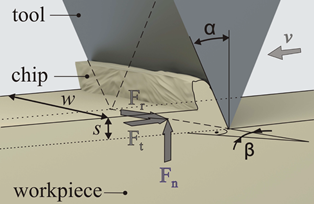

Simulation of wire metal transfer in the cold metal transfer (CMT) variant of gas metal arc welding using the smoothed particle hydrodynamics (SPH) approach

Cold metal transfer (CMT) is a variant of gas metal arc welding (GMAW) in which the molten metal of the wire is transferred to the weld pool mainly in the short-circuit phase. A special feature here is that the wire is retracted during this strongly controlled welding process. This allows precise and spatter-free formation of the weld seams with lower energy input. To simulate this process, a model based on the particle-based smoothed particle hydrodynamics (SPH) method is developed. This method provides a native solution for the mass and heat transfer. A simplified surrogate model was implemented as an arc heat source for welding simulation. This welding simulation model based on smoothed particle hydrodynamics method was augmented with surface effects, the Joule heating of the wire, and the effect of the electromagnetic forces. The model of metal transfer in the cold metal transfer process shows good qualitative agreement with real experiments.

@article{MWW+24,

author = {Mokrov, O. and Warkentin, S. and Westhofen, L. and Jeske, S. and Bender, J. and Sharma, R. and Reisgen, U.},

title = {Simulation of wire metal transfer in the cold metal transfer (CMT) variant of gas metal arc welding using the smoothed particle hydrodynamics (SPH) approach},

journal = {Materialwissenschaft und Werkstofftechnik},

volume = {55},

number = {1},

pages = {62-71},

keywords = {cold metal transfer (CMT), free surface deformation, gas metal arc welding (GMAW), simulation, smoothed particle hydrodynamics (SPH), geglätteter Partikel-basierter hydrodynamischer Ansatz (SPH), Kaltmetalltransfer (CMT), Metallschutzgasschweißens, Oberflächenverformung, Simulation},

doi = {10.1002/mawe.202300166},

year = {2024}

}

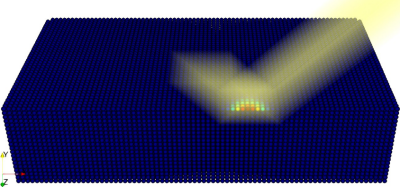

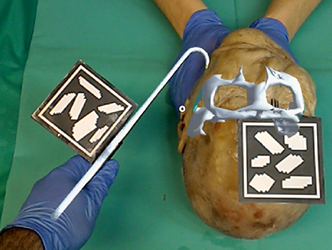

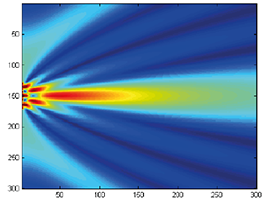

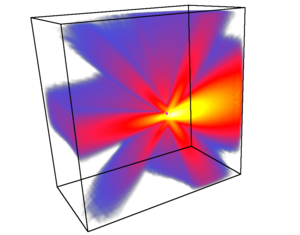

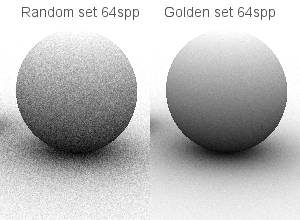

Ray tracing method with implicit surface detection for smoothed particle hydrodynamics-based laser beam welding simulations

An important prerequisite for process simulations of laser beam welding is the accurate depiction of the surface energy distribution. This requires capturing the optical effects of the laser beam occurring at the free surface. In this work, a novel optics ray tracing scheme is proposed which can handle the reflection and absorption dynamics associated with laser beam welding. Showcasing the applicability of the approach, it is coupled with a novel surface detection algorithm based on smoothed particle hydrodynamics (SPH), which offers significant performance benefits over reconstruction-based methods. The results are compared to state-of-the-art experimental results in laser beam welding, for which an excellent correspondence in the case of the energy distributions inside capillaries is shown.

@article{WKB+24,

author = {Westhofen, L. and Kruska, J. and Bender, J. and Warkentin, S. and Mokrov, O. and Sharma, R. and Reisgen, U.},

title = {Ray tracing method with implicit surface detection for smoothed particle hydrodynamics-based laser beam welding simulations},

journal = {Materialwissenschaft und Werkstofftechnik},

volume = {55},

number = {1},

pages = {40-52},

keywords = {heat transfer, hydrodynamics, laser beam welding, ray optics, ray tracing, smoothed particle, geglättete Partikel, hydrodynamische, Laserstrahlschweißen, Strahloptik, Strahlverfolgung, Wärmetransfer},

doi = {10.1002/mawe.202300161},

year = {2024}

}

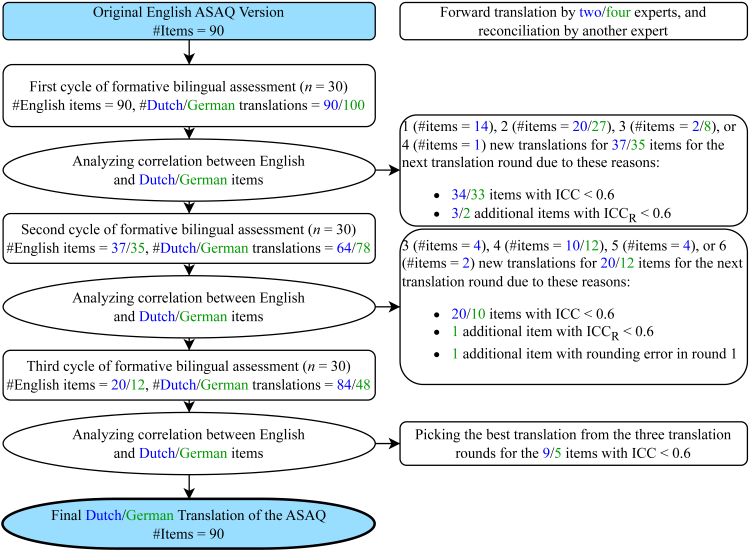

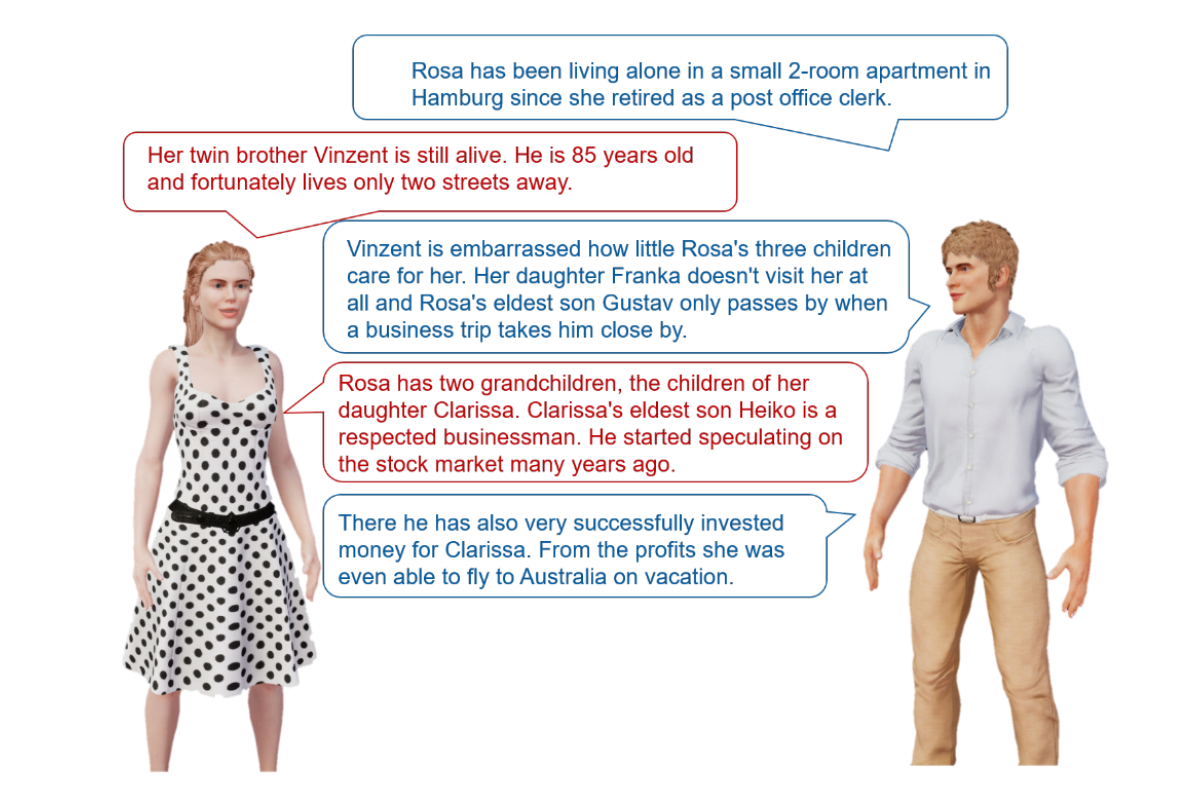

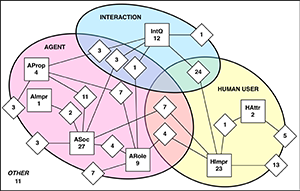

German and Dutch Translations of the Artificial-Social-Agent Questionnaire Instrument for Evaluating Human-Agent Interactions

Enabling the widespread utilization of the Artificial-Social-Agent (ASA) Questionnaire, a research instrument to comprehensively assess diverse ASA qualities while ensuring comparability, necessitates translations beyond the original English source language questionnaire. We thus present Dutch and German translations of the long and short versions of the ASA Questionnaire and describe the translation challenges we encountered. Summative assessments with 240 English-Dutch and 240 English-German bilingual participants show, on average, excellent correlations (Dutch ICC M = 0.82, SD = 0.07, range [0.58, 0.93]; German ICC M = 0.81, SD = 0.09, range [0.58, 0.94]) with the original long version on the construct and dimension level. Results for the short version show, on average, good correlations (Dutch ICC M = 0.65, SD = 0.12, range [0.39, 0.82]; German ICC M = 0.67, SD = 0.14, range [0.30, 0.91]). We hope these validated translations allow the Dutch and German-speaking populations to evaluate ASAs in their own language.

@InProceedings{Boensch2024,

author = {Nele Albers and Andrea Bönsch and Jonathan Ehret and Boleslav A. Khodakov and Willem-Paul Brinkman},

booktitle = {ACM International Conference on Intelligent Virtual Agents (IVA ’24)},

title = {German and Dutch Translations of the Artificial-Social-Agent Questionnaire Instrument for Evaluating Human-Agent Interactions},

year = {2024},

organization = {ACM},

pages = {4},

doi = {10.1145/3652988.3673928},

}

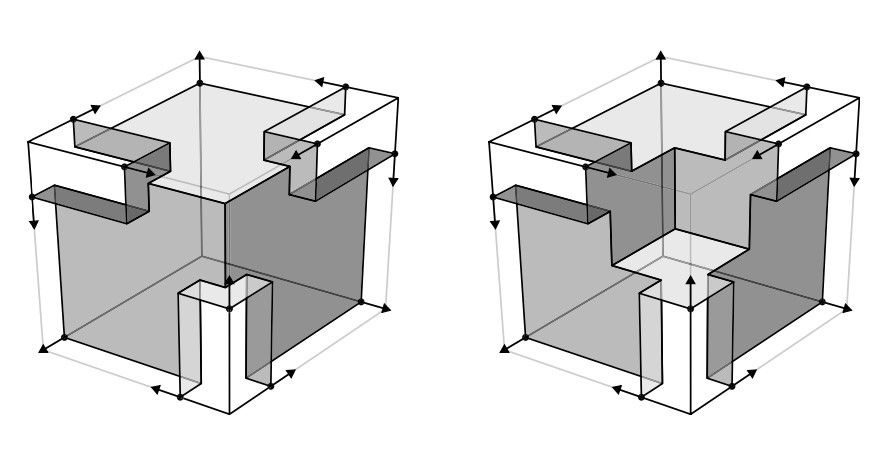

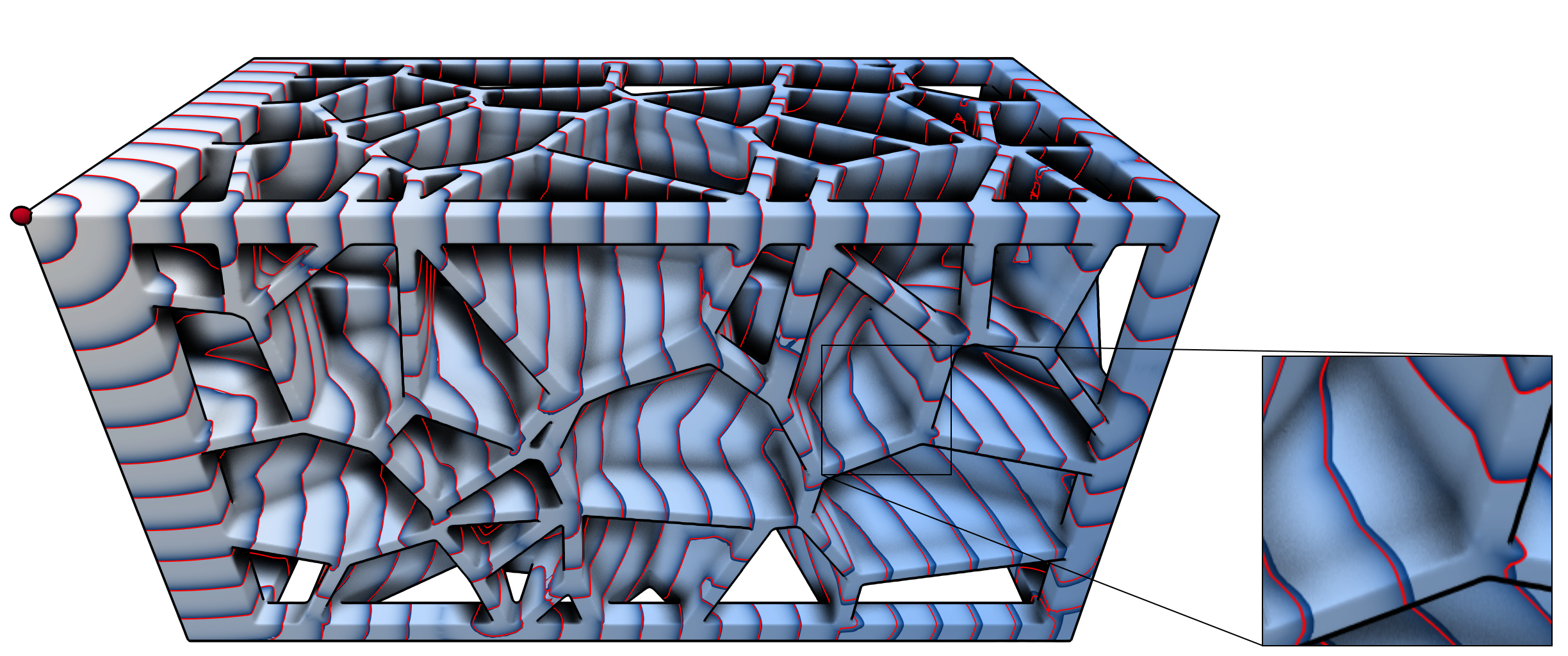

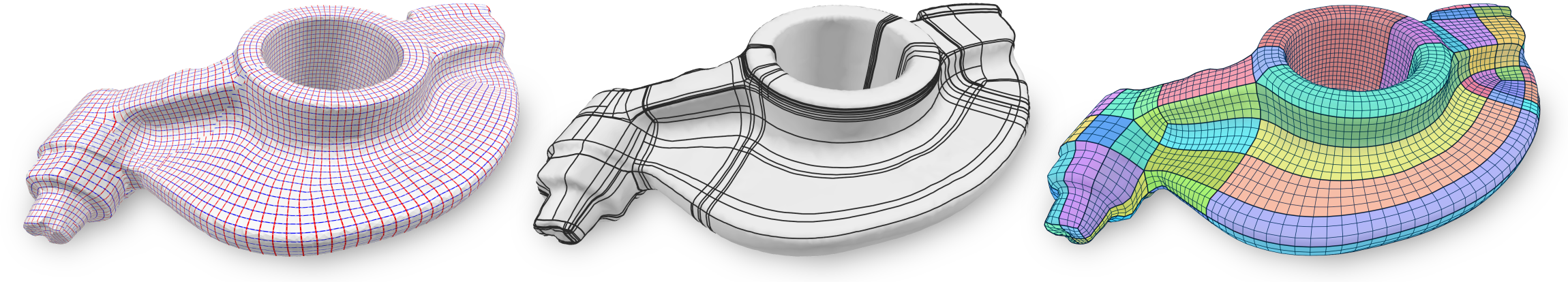

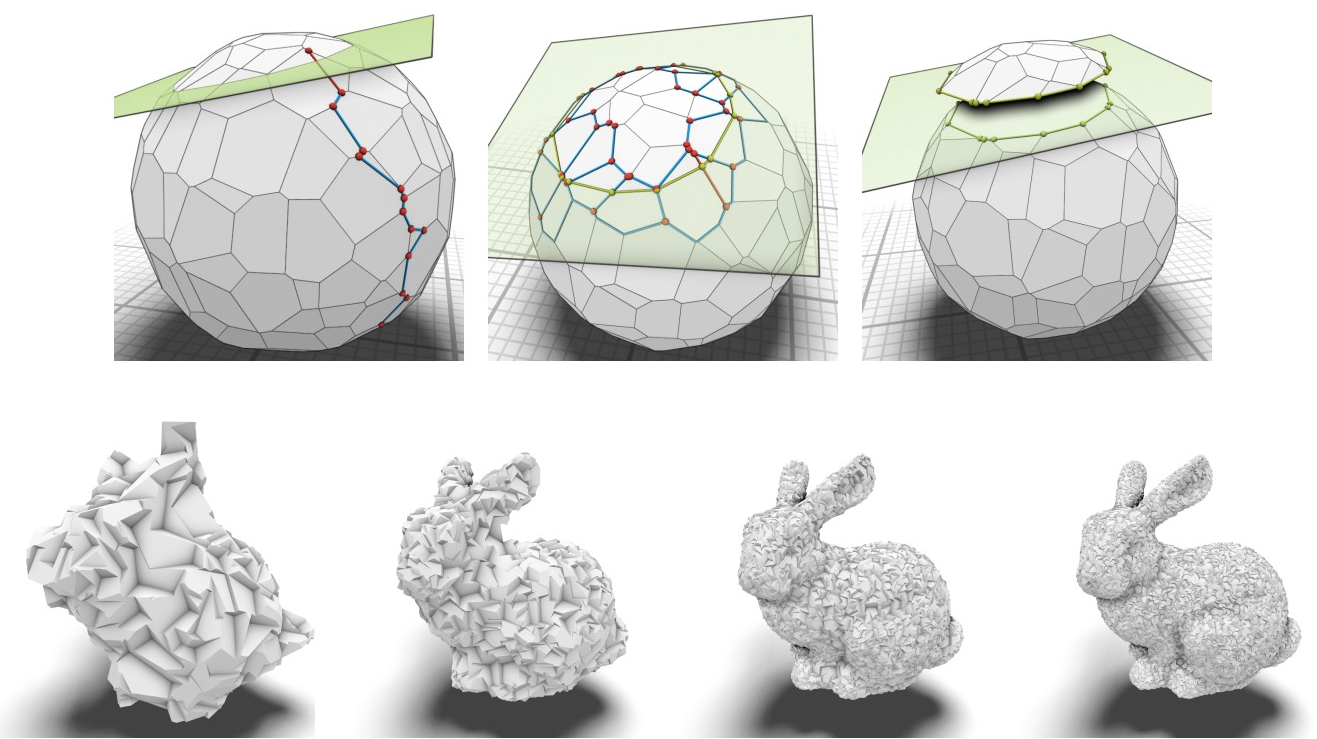

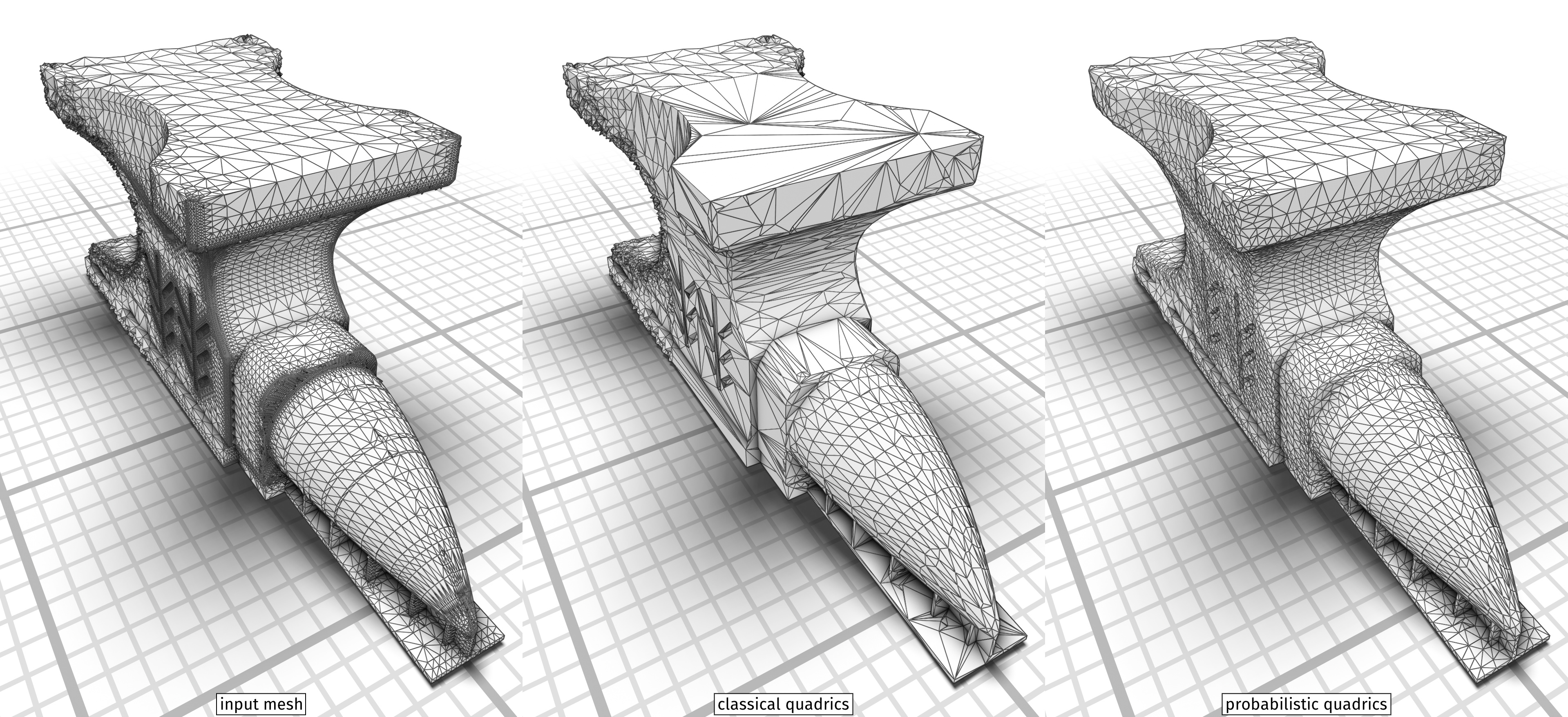

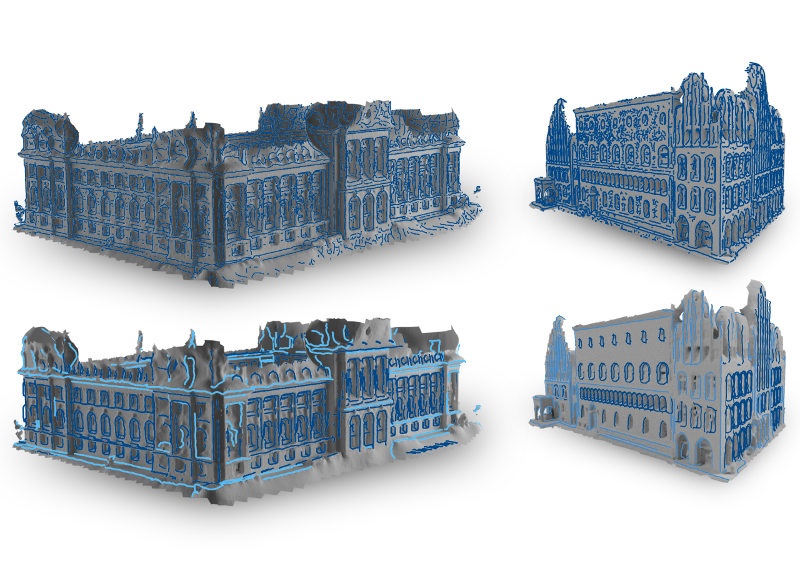

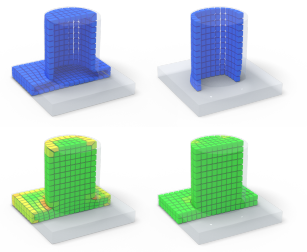

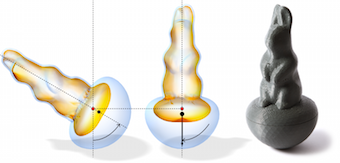

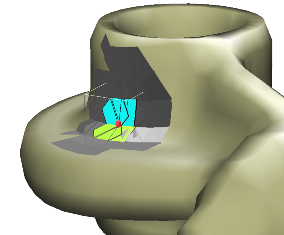

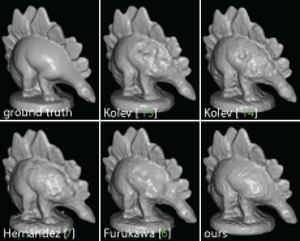

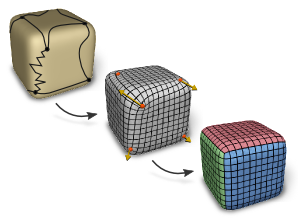

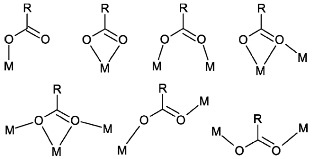

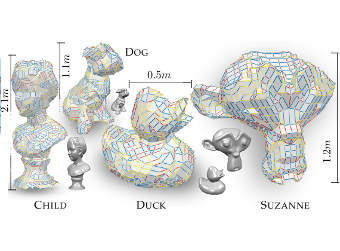

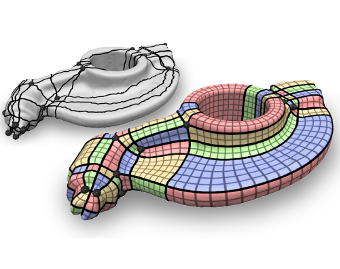

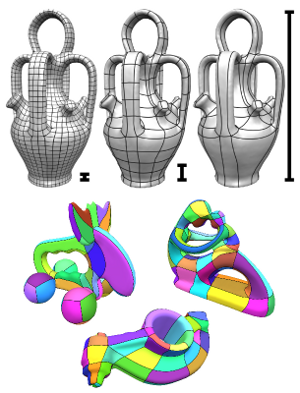

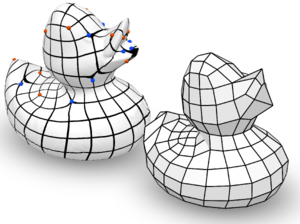

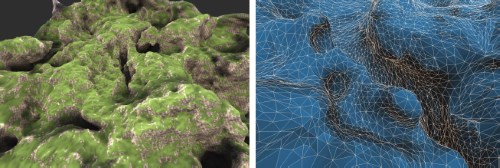

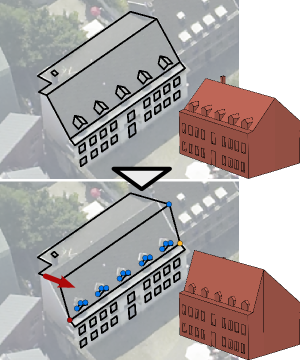

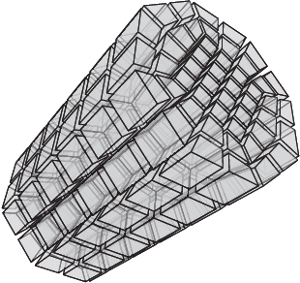

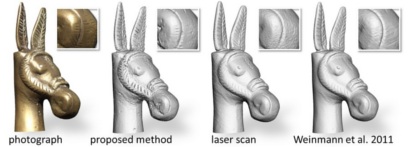

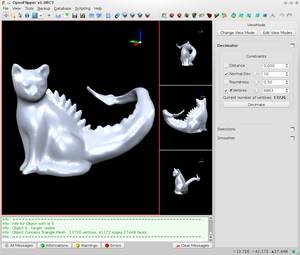

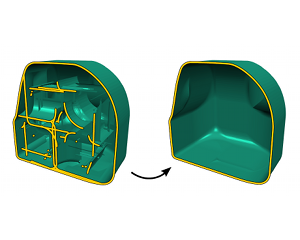

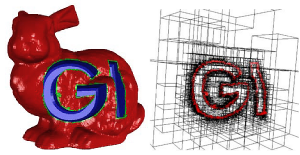

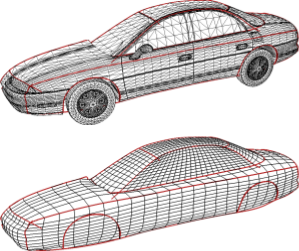

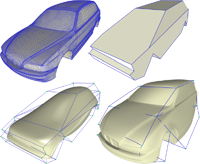

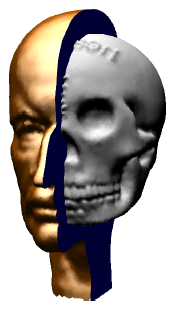

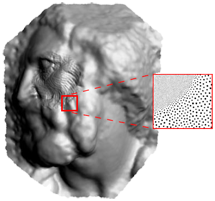

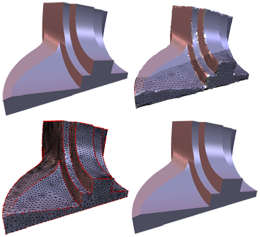

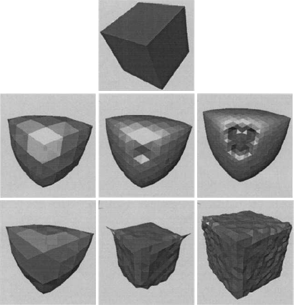

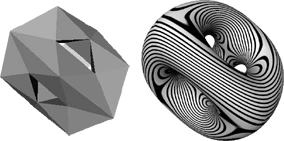

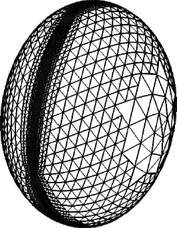

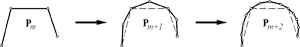

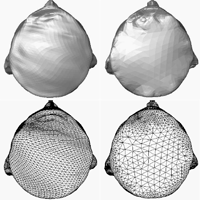

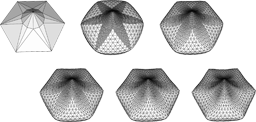

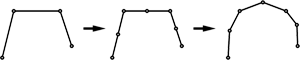

Generalizing feature preservation in iso-surface extraction from triple dexel models

We present a method to resolve visual artifacts of a state-of-the-art iso-surface extraction algorithm by generating feature-preserving surface patches for isolated arbitrarily complex, single voxels without the need for further adaptive subdivision. In the literature, iso-surface extraction from a 3D voxel grid is limited to a single sharp feature per minimal unit, even for algorithms such as Cubical Marching Squares that produce feature-preserving surface reconstructions. In practice though, multiple sharp features can meet in a single voxel. This is reflected in the triple dexel model, which is used in simulation of CNC manufacturing processes. Our approach generalizes the use of normal information to perfectly preserve multiple sharp features for a single voxel, thus avoiding visual artifacts caused by state-of-the-art procedures.

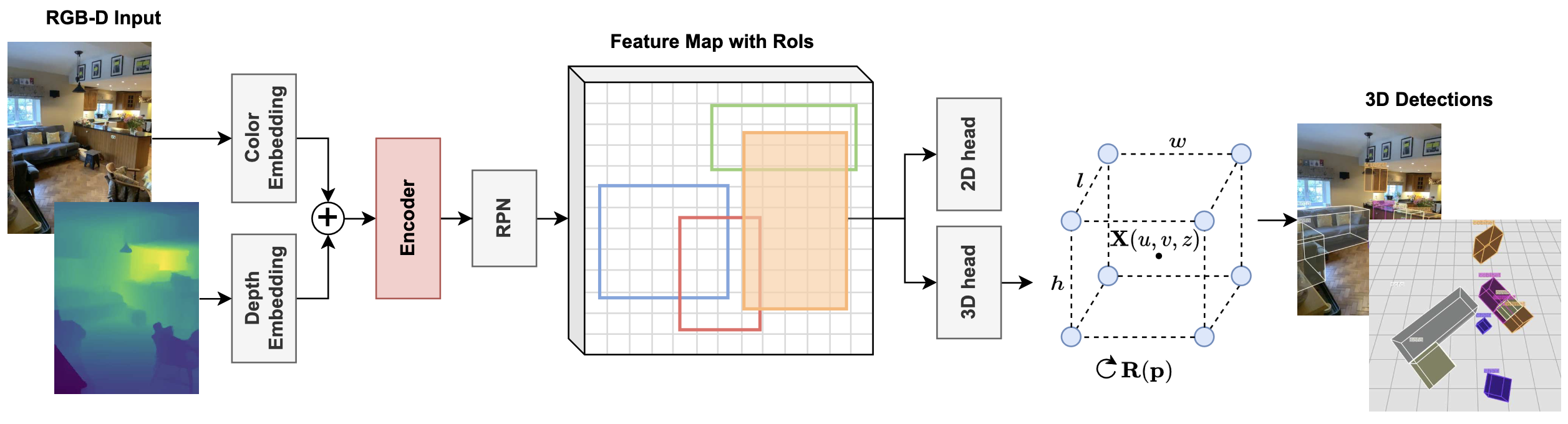

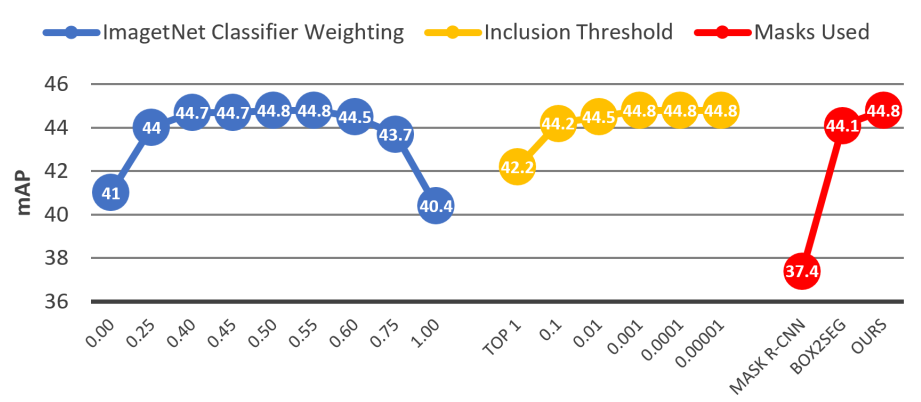

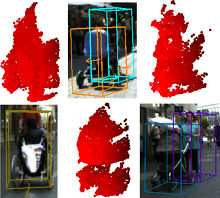

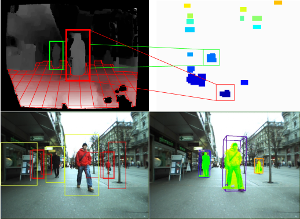

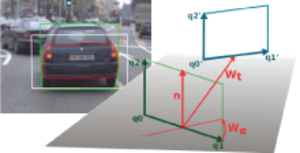

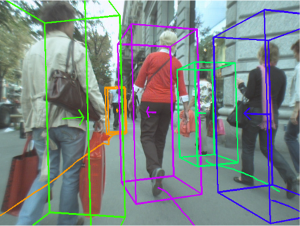

RGB-D Cube R-CNN: 3D Object Detection with Selective Modality Dropout

In this paper we create an RGB-D 3D object detector targeted at indoor robotics use cases where one modality may be unavailable due to a specific sensor setup or a sensor failure. We incorporate RGB and depth fusion into the recent Cube R-CNN framework with support for selective modality dropout. To train this model we augment the Omni3DIN dataset with depth information leading to a diverse dataset for 3D object detection in indoor scenes. In order to leverage strong pretrained networks we investigate the viability of Transformer-based backbones (Swin ViT) as an alternative to the currently popular CNN-based DLA backbone. We show that these Transformer-based image models work well based on our early-fusion approach and propose a modality dropout scheme to avoid the disregard of any modality during training facilitating selective modality dropout during inference. In extensive experiments our proposed RGB-D Cube R-CNN outperforms an RGB-only Cube R-CNN baseline by a significant margin on the task of indoor object detection. Additionally we observe a slight performance boost from the RGB-D training when inferring on only one modality which could for example be valuable in robotics applications with a reduced or unreliable sensor set.

@InProceedings{RGB_D_Cube_RCNN_2024_CVPRW,

author = {Piekenbrinck, Jens and Hermans, Alexander and Vaskevicius, Narunas and Linder, Timm and Leibe, Bastian},

title = {{RGB-D Cube R-CNN: 3D Object Detection with Selective Modality Dropout}},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},

year = {2024},

}
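As a rough illustration of the modality dropout idea mentioned in the abstract above, the following sketch randomly blanks out either the RGB or the depth input of a training sample so that a fusion model cannot rely on one modality alone. It is not the authors' implementation: the function name `modality_dropout`, the drop probability `p_drop`, the zero-filling strategy, and the list-of-lists image representation are all assumptions made for this example.

```python
import random
from typing import List, Tuple

# Hypothetical stand-in for one image channel (rows of pixel values).
Image = List[List[float]]


def zeros_like(img: Image) -> Image:
    """Return an all-zero image with the same shape as img."""
    return [[0.0] * len(row) for row in img]


def modality_dropout(
    rgb: Image,
    depth: Image,
    p_drop: float = 0.3,  # assumed total probability of dropping a modality
    rng=random,           # any object exposing random() -> float in [0, 1)
) -> Tuple[Image, Image]:
    """Zero out at most one modality per sample.

    With probability p_drop / 2 the RGB input is dropped, with probability
    p_drop / 2 the depth input is dropped, otherwise both are kept.
    Both modalities are never dropped at the same time.
    """
    u = rng.random()
    if u < p_drop / 2:
        return zeros_like(rgb), depth
    if u < p_drop:
        return rgb, zeros_like(depth)
    return rgb, depth
```

At inference time, the same zero-filling could be applied deliberately to whichever sensor is unavailable, which is one plausible way to read "selective modality dropout"; the paper itself should be consulted for the actual scheme.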

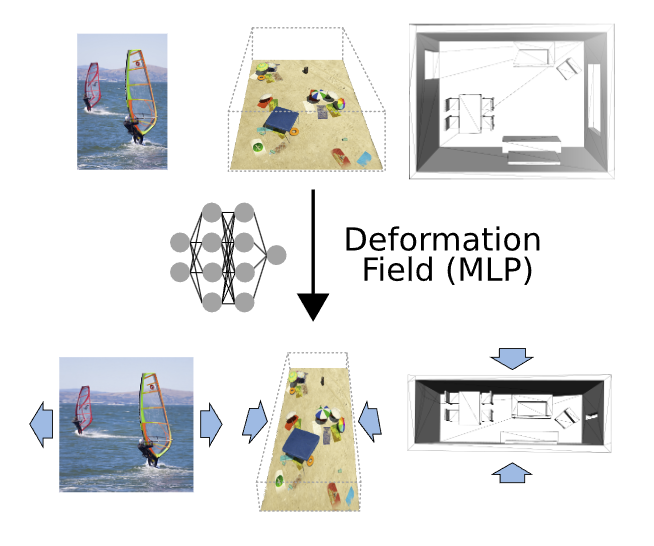

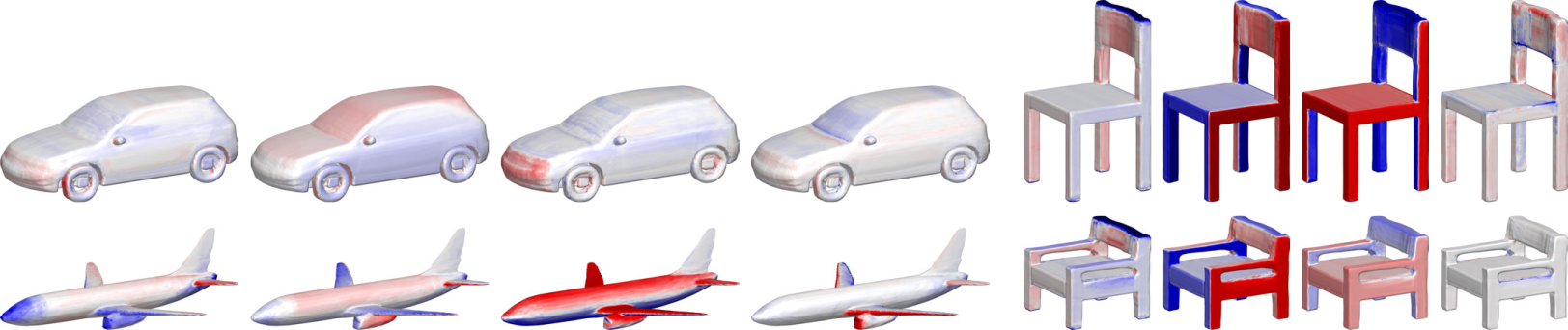

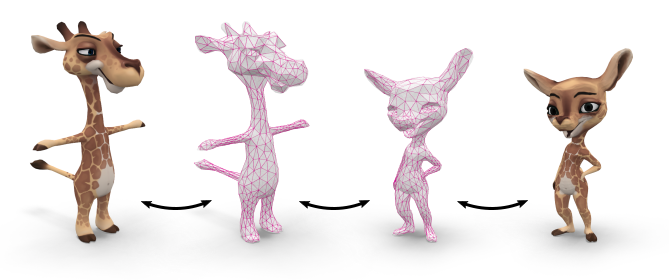

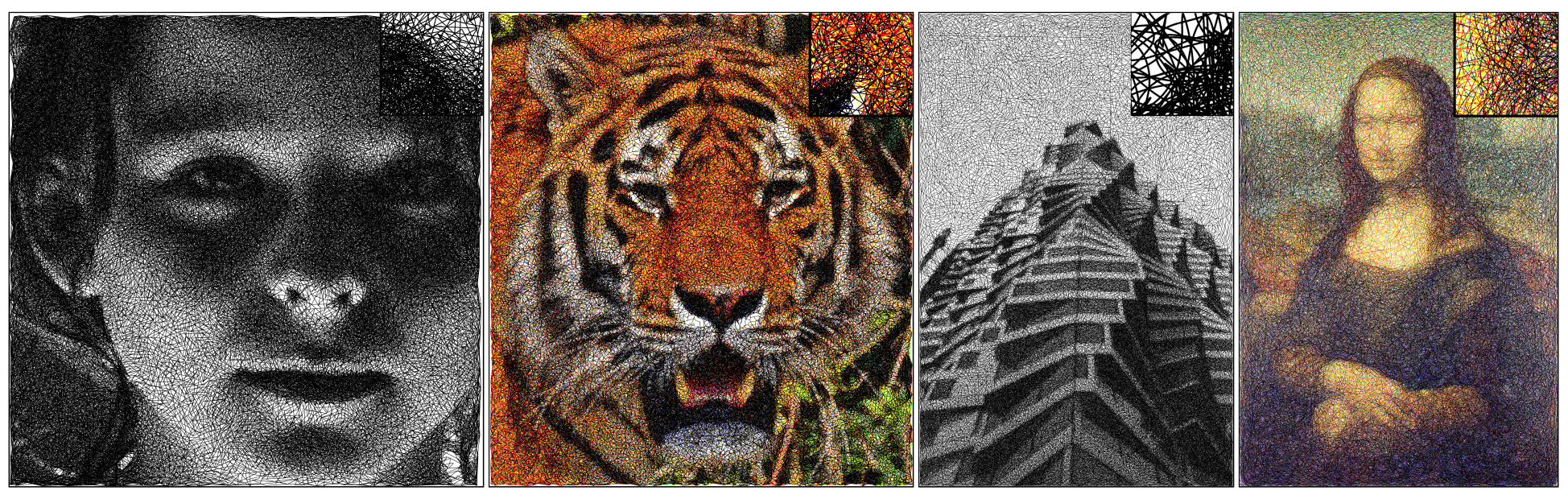

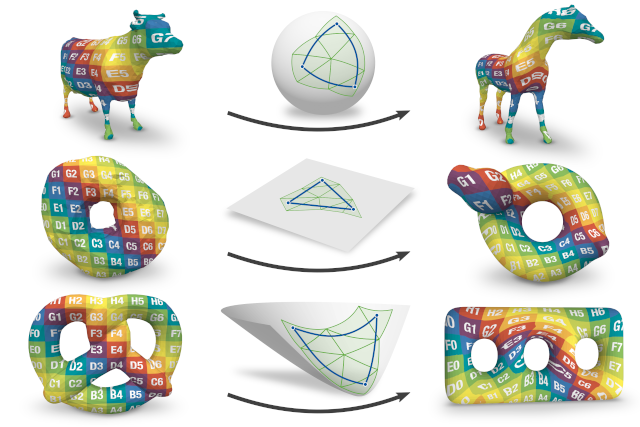

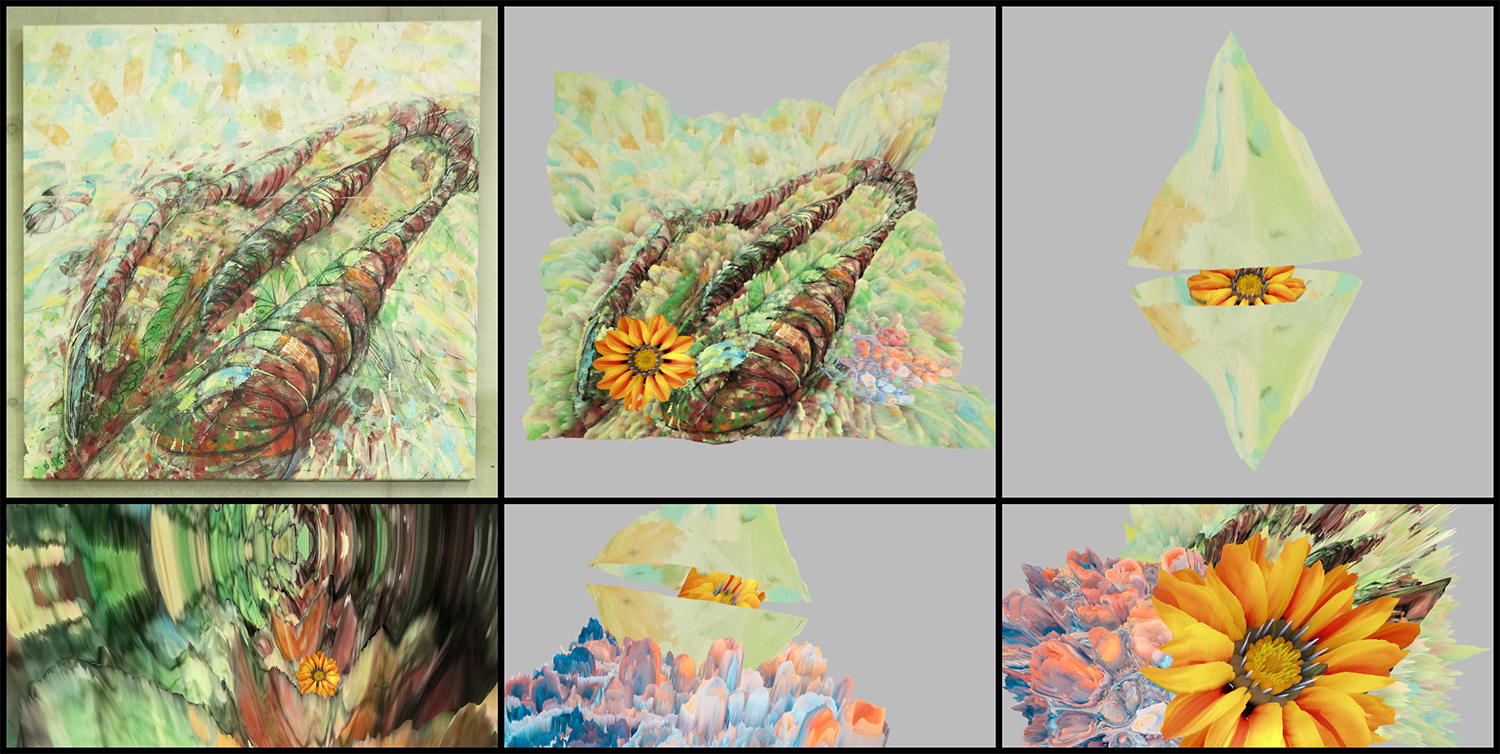

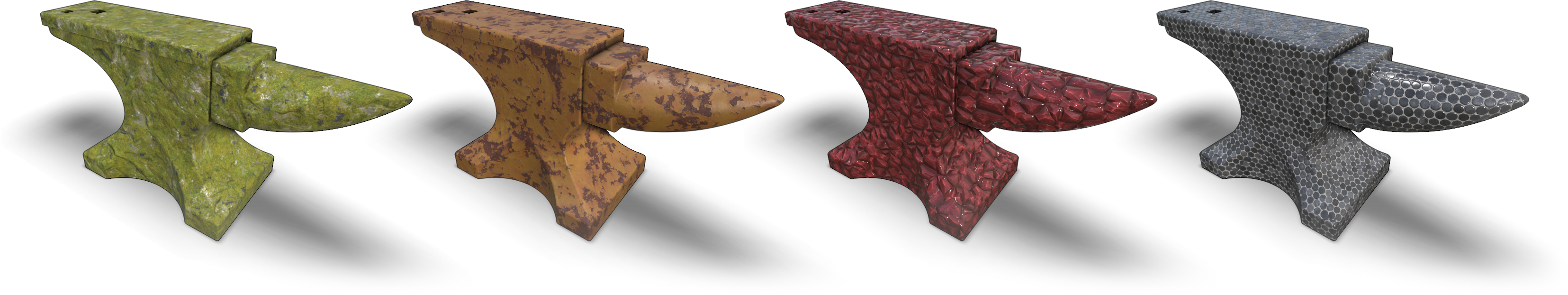

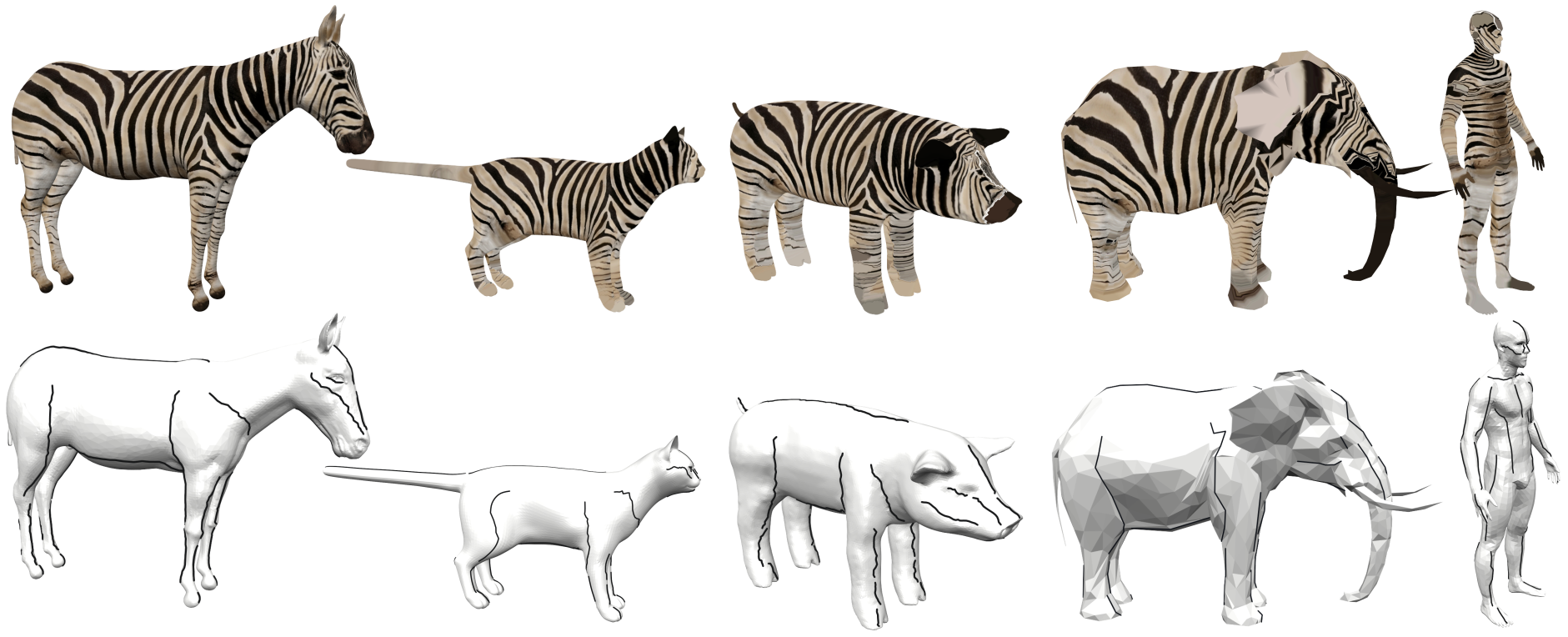

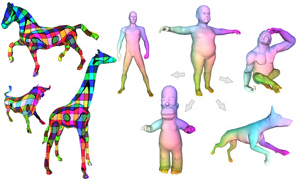

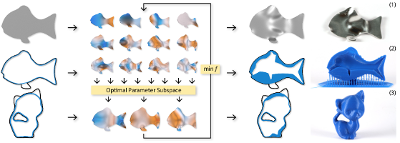

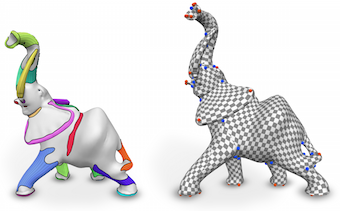

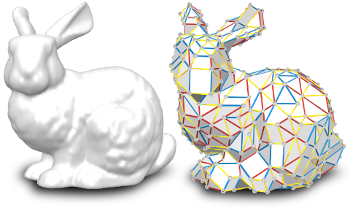

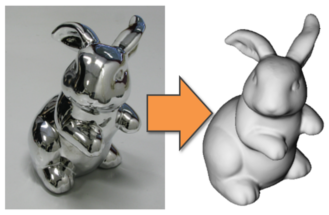

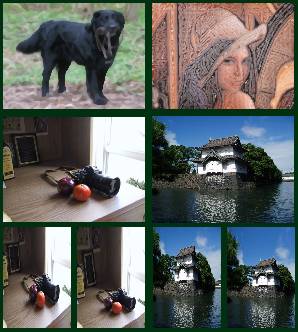

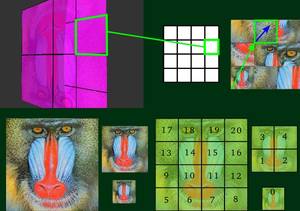

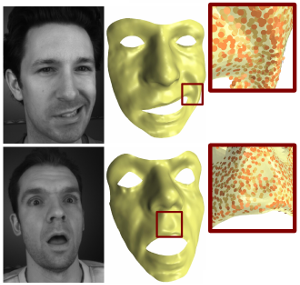

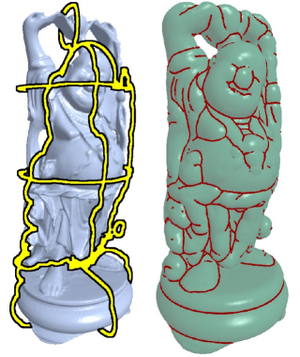

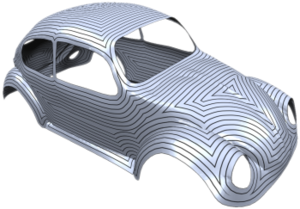

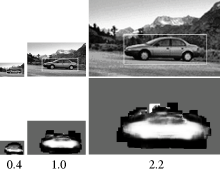

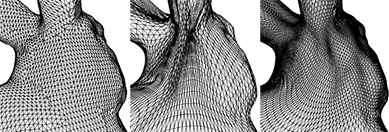

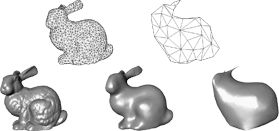

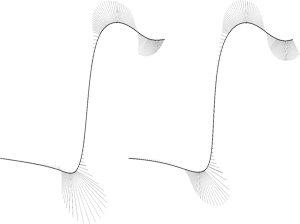

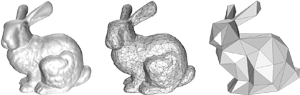

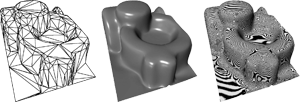

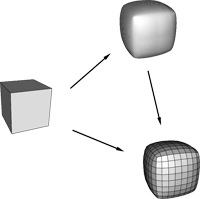

Retargeting Visual Data with Deformation Fields

Seam carving is an image editing method that enables content-aware resizing, including operations like removing objects. However, the seam-finding strategy based on dynamic programming or graph-cut limits its applications to broader visual data formats and degrees of freedom for editing. Our observation is that describing the editing and retargeting of images more generally by a deformation field yields a generalisation of content-aware deformations. We propose to learn a deformation with a neural network that keeps the output plausible while trying to deform it only in places with low information content. This technique applies to different kinds of visual data, including images, 3D scenes given as neural radiance fields, or even polygon meshes. Experiments conducted on different visual data show that our method achieves better content-aware retargeting compared to previous methods.
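For readers unfamiliar with the classical baseline that the abstract contrasts against, the following is a minimal sketch of dynamic-programming seam finding as used in standard seam carving. It illustrates the prior technique only, not the deformation-field method of the paper. The function names and the assumption that a per-pixel energy map is already available as a list of rows are choices made for this example.

```python
from typing import List


def find_vertical_seam(energy: List[List[float]]) -> List[int]:
    """Return, for each row, the column of the minimum-energy vertical seam.

    A seam is a top-to-bottom path in which consecutive rows differ by at
    most one column.
    """
    rows, cols = len(energy), len(energy[0])
    # cost[r][c]: minimal cumulative energy of any seam ending at (r, c).
    cost = [row[:] for row in energy]
    for r in range(1, rows):
        for c in range(cols):
            lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
            cost[r][c] += min(cost[r - 1][lo:hi + 1])

    # Backtrack from the cheapest cell in the last row.
    c = min(range(cols), key=lambda j: cost[rows - 1][j])
    seam = [c]
    for r in range(rows - 1, 0, -1):
        lo, hi = max(c - 1, 0), min(c + 1, cols - 1)
        c = min(range(lo, hi + 1), key=lambda j: cost[r - 1][j])
        seam.append(c)
    seam.reverse()
    return seam


def remove_vertical_seam(image: List[list], seam: List[int]) -> List[list]:
    """Delete one pixel per row along the seam, narrowing the image by one."""
    return [row[:c] + row[c + 1:] for row, c in zip(image, seam)]
```

Because every seam removes exactly one pixel per row along a connected path, this formulation is tied to regular pixel grids, which is the kind of restriction on data formats and editing freedom that the abstract refers to.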

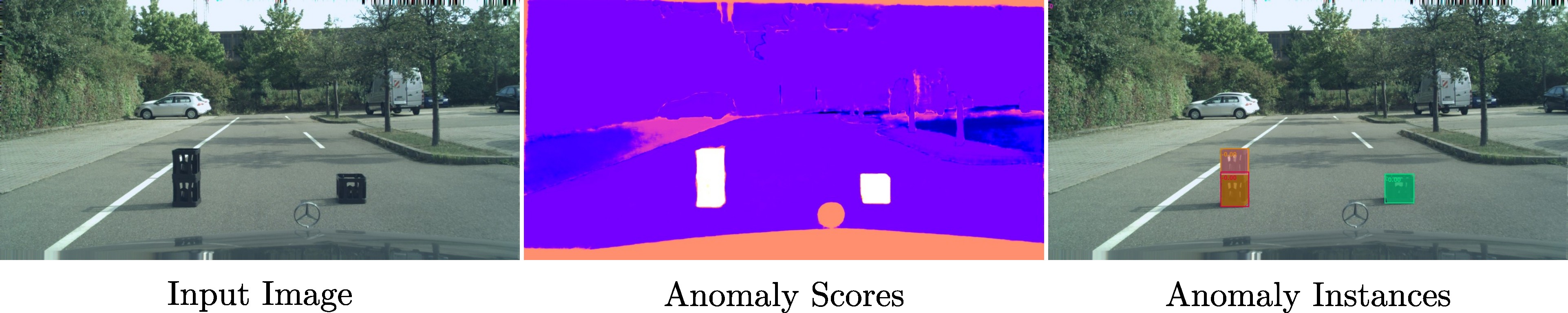

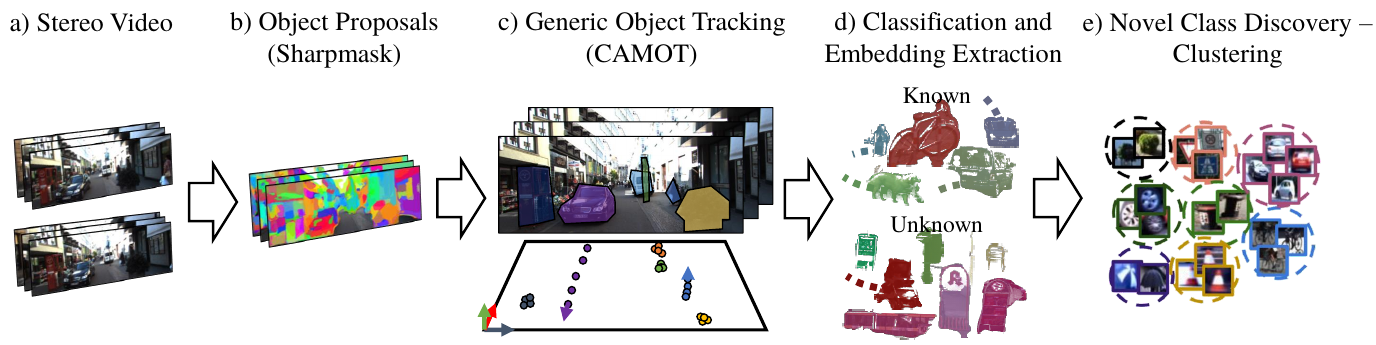

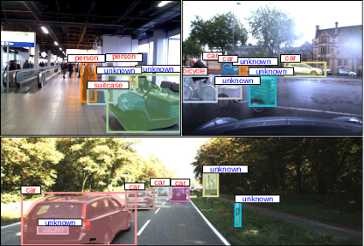

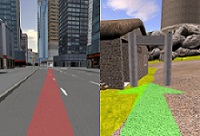

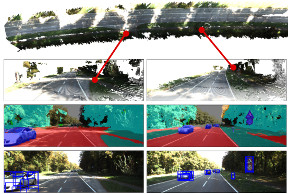

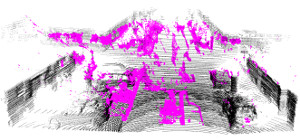

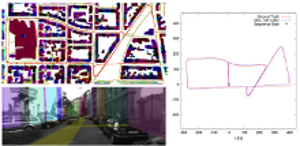

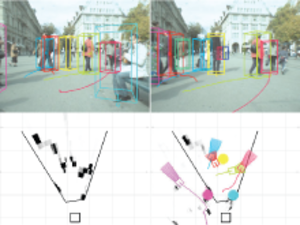

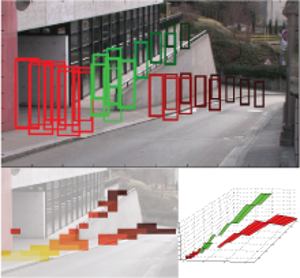

OoDIS: Anomaly Instance Segmentation Benchmark

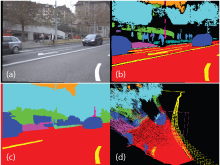

Autonomous vehicles require a precise understanding of their environment to navigate safely. Reliable identification of unknown objects, especially those that are absent during training, such as wild animals, is critical due to their potential to cause serious accidents. Significant progress in semantic segmentation of anomalies has been driven by the availability of out-of-distribution (OOD) benchmarks. However, a comprehensive understanding of scene dynamics requires the segmentation of individual objects, and thus the segmentation of instances is essential. Development in this area has been lagging, largely due to the lack of dedicated benchmarks. To address this gap, we have extended the most commonly used anomaly segmentation benchmarks to include the instance segmentation task. Our evaluation of anomaly instance segmentation methods shows that this challenge remains an unsolved problem. The benchmark website and the competition page can be found at: https://vision.rwth-aachen.de/oodis

@inproceedings{nekrasov2023ugains,

title = {{UGainS: Uncertainty Guided Anomaly Instance Segmentation}},

author = {Nekrasov, Alexey and Hermans, Alexander and Kuhnert, Lars and Leibe, Bastian},

booktitle = {GCPR},

year = {2023}

}

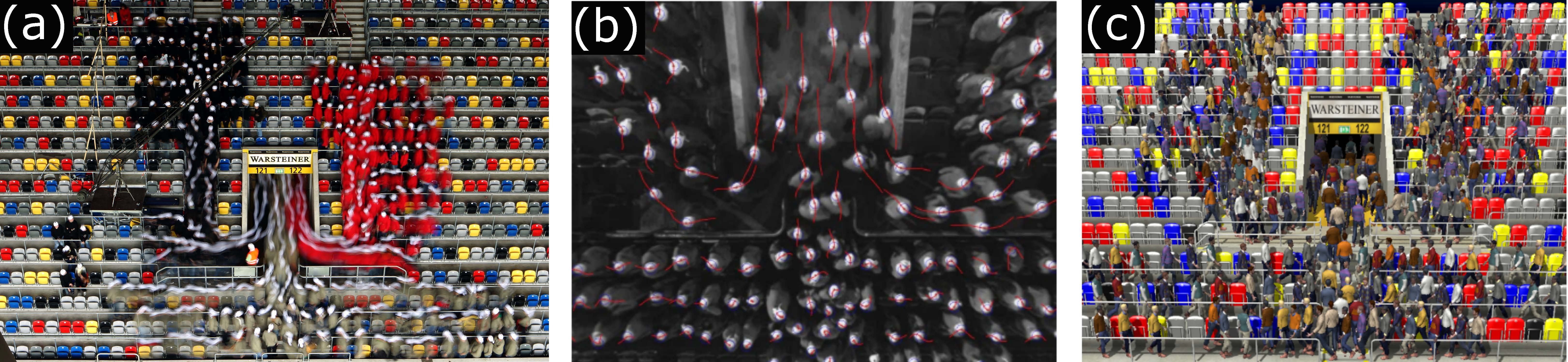

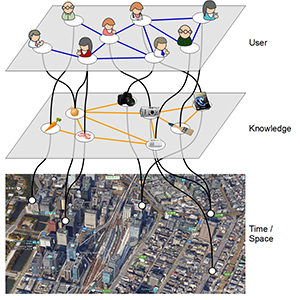

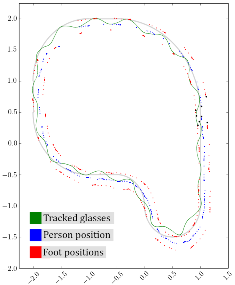

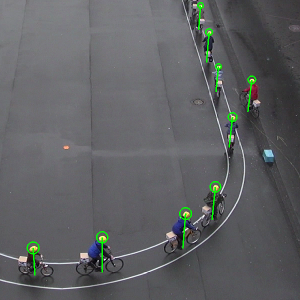

Late-Breaking Report: VR-CrowdCraft: Coupling and Advancing Research in Pedestrian Dynamics and Social Virtual Reality

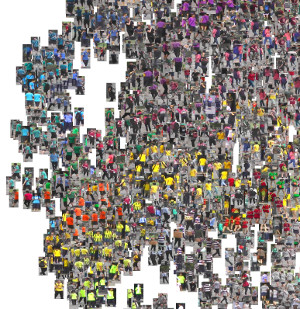

VR-CrowdCraft is a newly formed interdisciplinary initiative, dedicated to the convergence and advancement of two distinct yet interconnected research fields: pedestrian dynamics (PD) and social virtual reality (VR). The initiative aims to establish foundational workflows for a systematic integration of PD data obtained from real-life experiments, encompassing scenarios ranging from smaller clusters of approximately ten individuals to larger groups comprising several hundred pedestrians, into immersive virtual environments (IVEs), addressing the following two crucial goals: (1) Advancing pedestrian dynamic analysis and (2) Advancing virtual pedestrian behavior: authentic populated IVEs and new PD experiments. The LBR presentation will focus on goal 1.

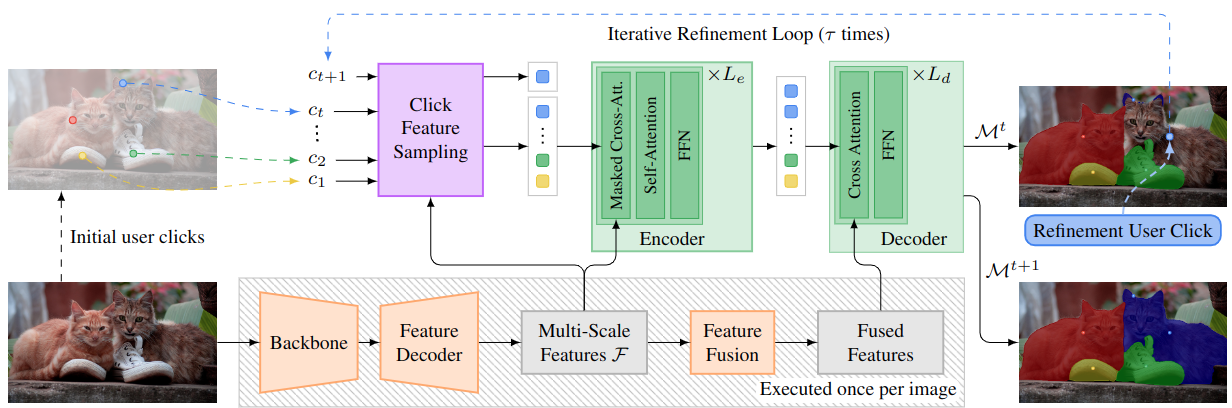

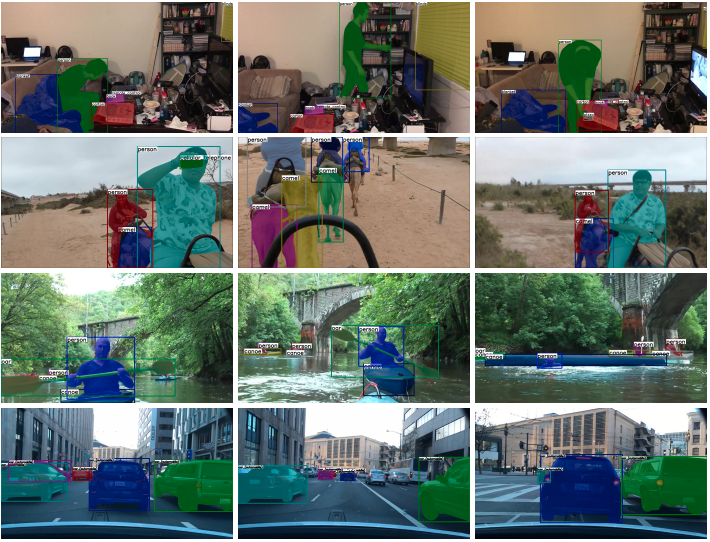

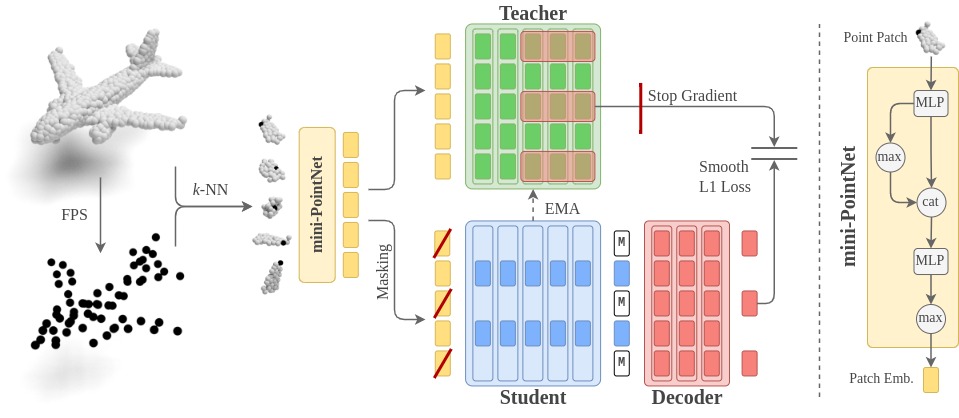

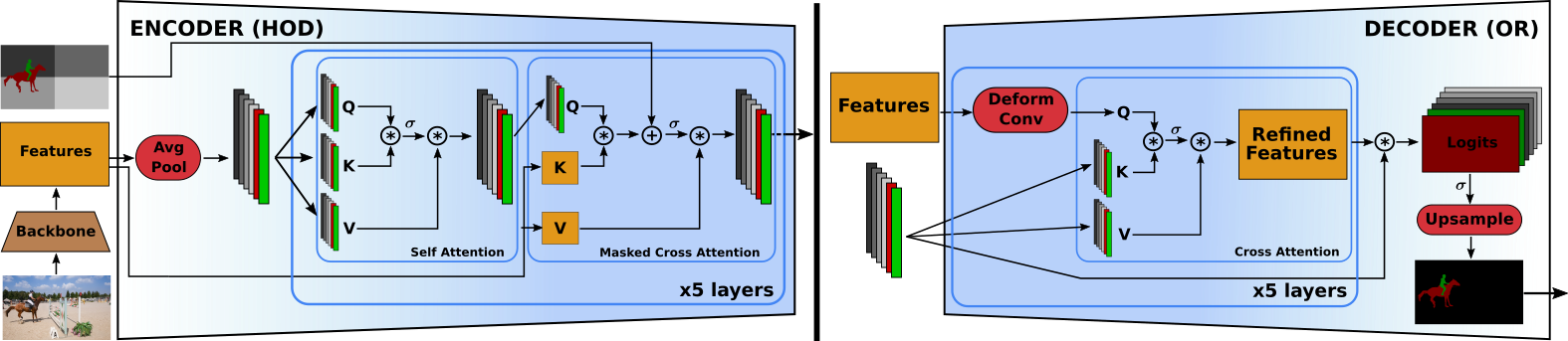

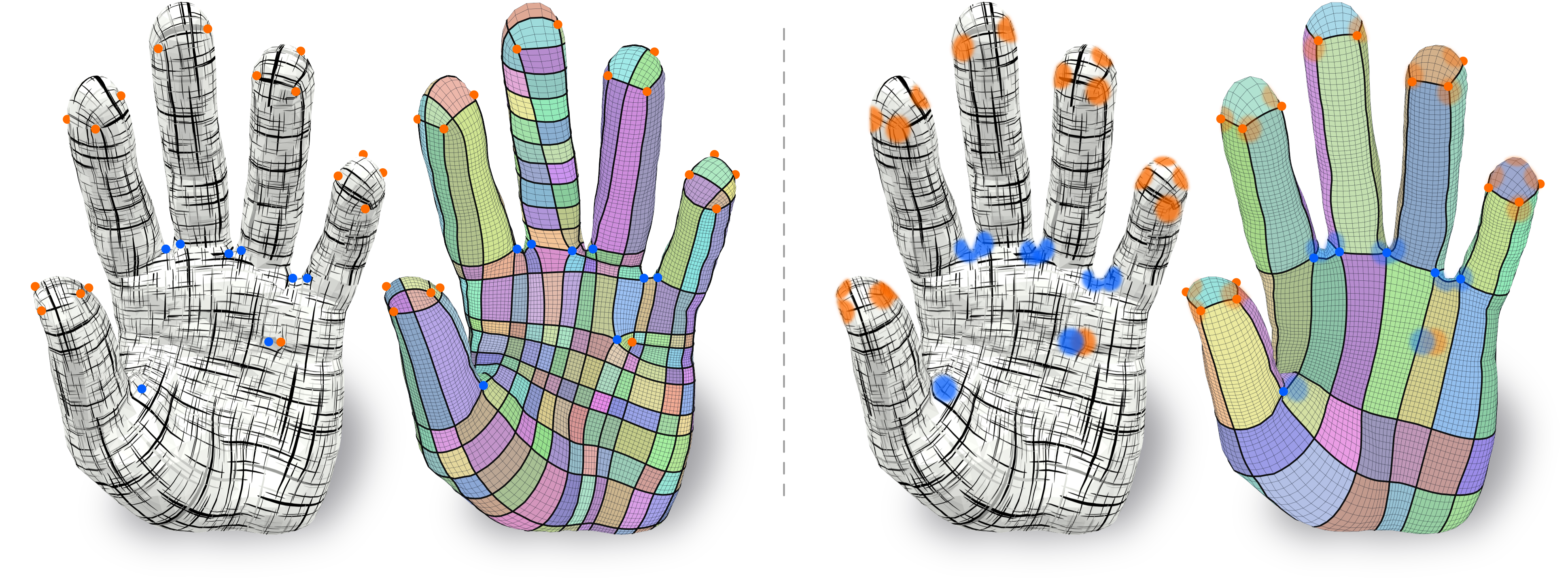

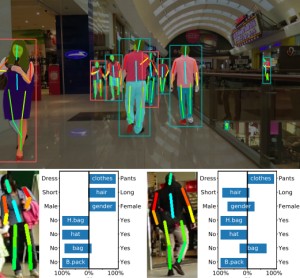

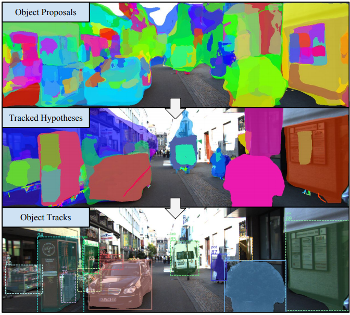

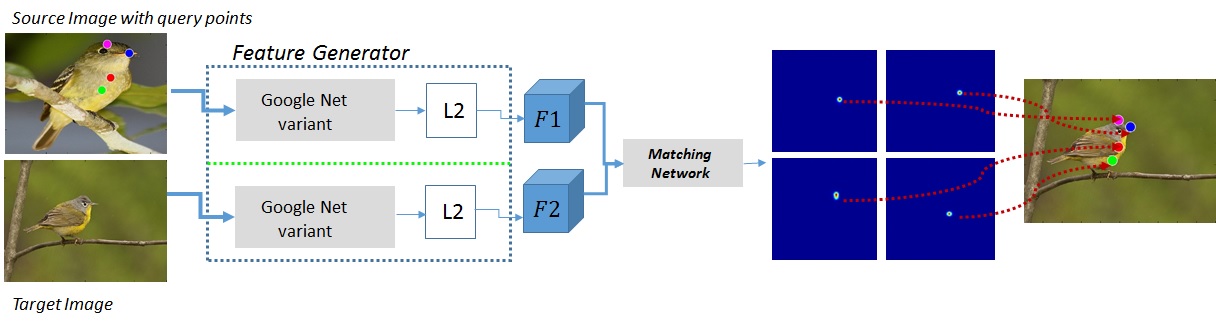

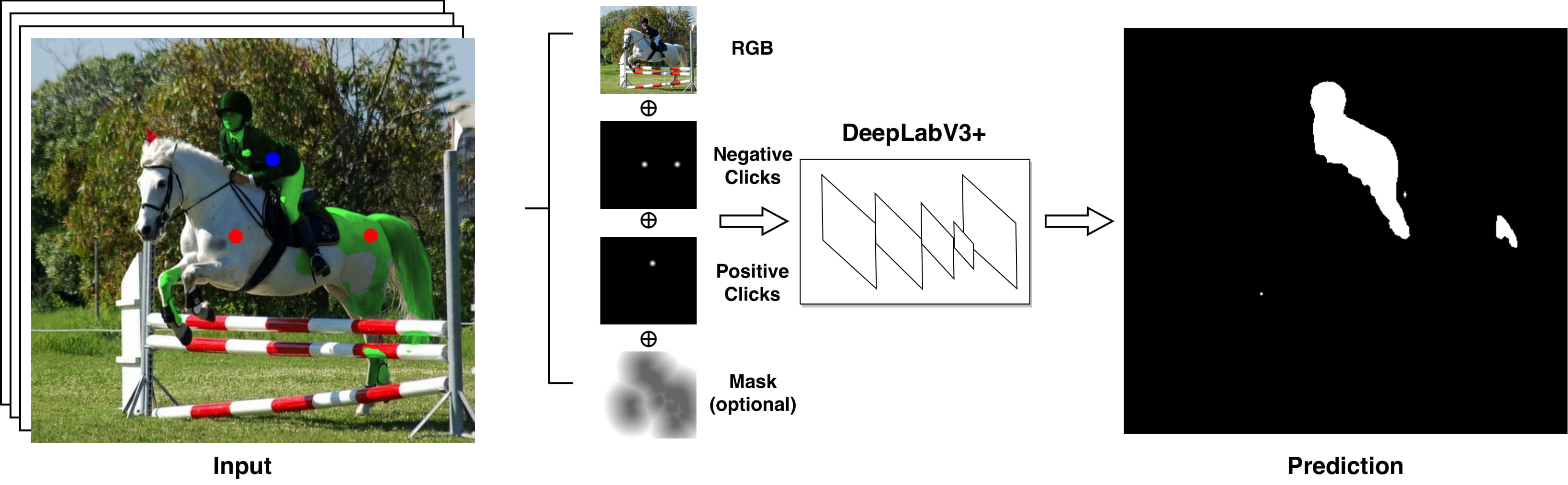

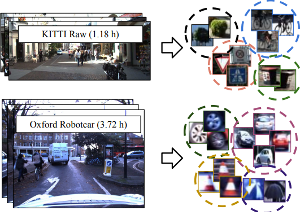

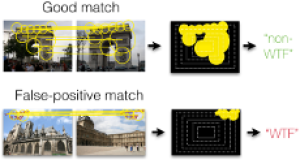

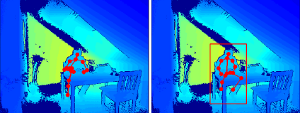

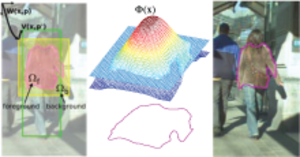

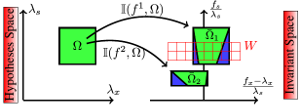

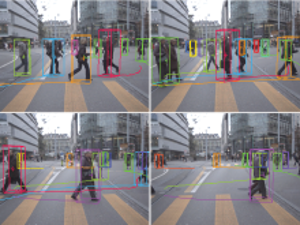

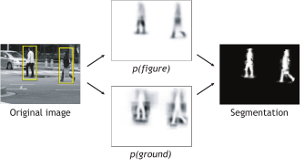

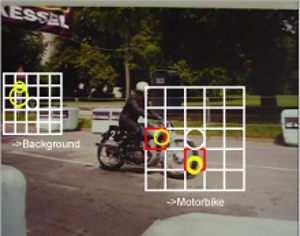

DynaMITe: Dynamic Query Bootstrapping for Multi-object Interactive Segmentation Transformer

Most state-of-the-art instance segmentation methods rely on large amounts of pixel-precise ground-truth annotations for training, which are expensive to create. Interactive segmentation networks help generate such annotations based on an image and the corresponding user interactions such as clicks. Existing methods for this task can only process a single instance at a time and each user interaction requires a full forward pass through the entire deep network. We introduce a more efficient approach, called DynaMITe, in which we represent user interactions as spatio-temporal queries to a Transformer decoder with a potential to segment multiple object instances in a single iteration. Our architecture also alleviates any need to re-compute image features during refinement, and requires fewer interactions for segmenting multiple instances in a single image when compared to other methods. DynaMITe achieves state-of-the-art results on multiple existing interactive segmentation benchmarks, and also on the new multi-instance benchmark that we propose in this paper.

@article{RanaMahadevan23arxiv,

title={DynaMITe: Dynamic Query Bootstrapping for Multi-object Interactive Segmentation Transformer},

author={Rana, Amit and Mahadevan, Sabarinath and Hermans, Alexander and Leibe, Bastian},

journal={arXiv preprint arXiv:2304.06668},

year={2023}

}

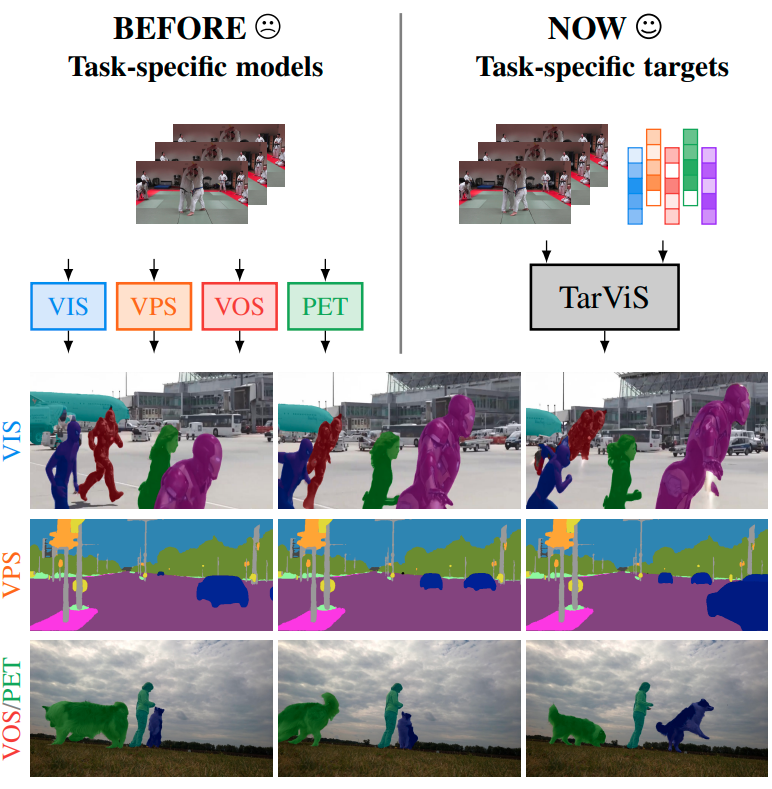

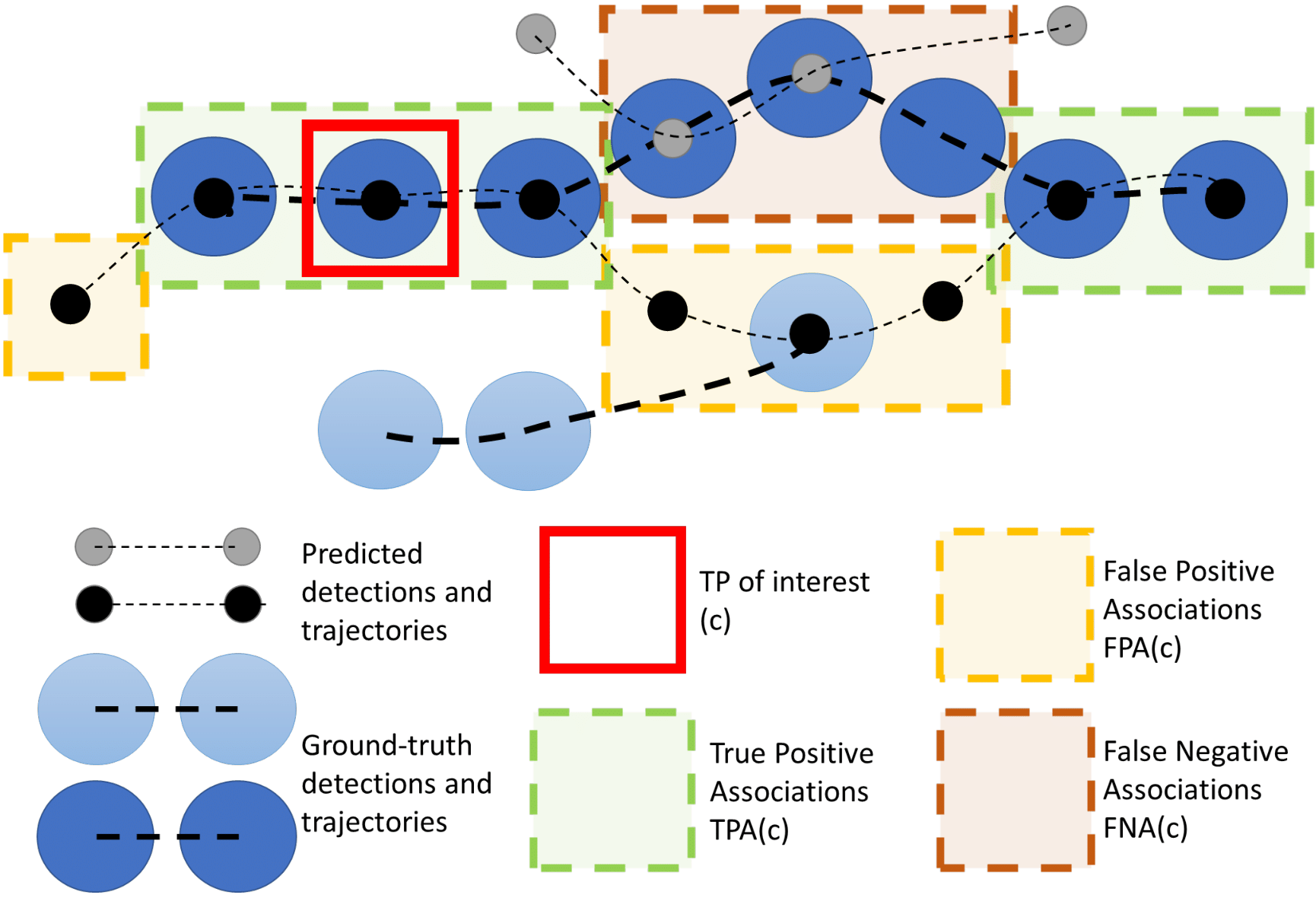

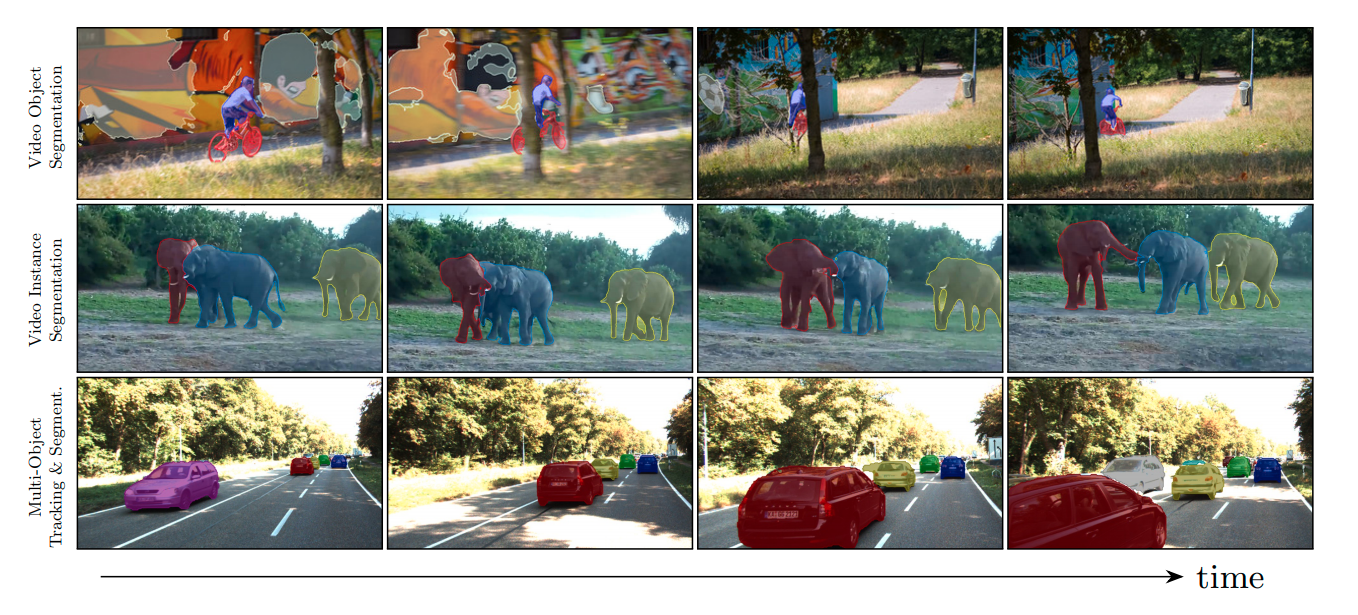

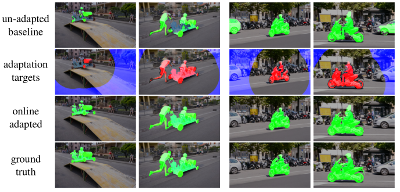

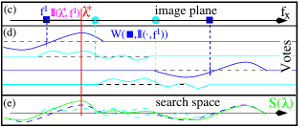

TarViS: A Unified Approach for Target-based Video Segmentation

The general domain of video segmentation is currently fragmented into different tasks spanning multiple benchmarks. Despite rapid progress in the state-of-the-art, current methods are overwhelmingly task-specific and cannot conceptually generalize to other tasks. Inspired by recent approaches with multi-task capability, we propose TarViS: a novel, unified network architecture that can be applied to any task that requires segmenting a set of arbitrarily defined 'targets' in video. Our approach is flexible with respect to how tasks define these targets, since it models the latter as abstract 'queries' which are then used to predict pixel-precise target masks. A single TarViS model can be trained jointly on a collection of datasets spanning different tasks, and can hot-swap between tasks during inference without any task-specific retraining. To demonstrate its effectiveness, we apply TarViS to four different tasks, namely Video Instance Segmentation (VIS), Video Panoptic Segmentation (VPS), Video Object Segmentation (VOS) and Point Exemplar-guided Tracking (PET). Our unified, jointly trained model achieves state-of-the-art performance on 5/7 benchmarks spanning these four tasks, and competitive performance on the remaining two.

@inproceedings{athar2023tarvis,

title={TarViS: A Unified Architecture for Target-based Video Segmentation},

author={Athar, Ali and Hermans, Alexander and Luiten, Jonathon and Ramanan, Deva and Leibe, Bastian},

booktitle={CVPR},

year={2023}

}

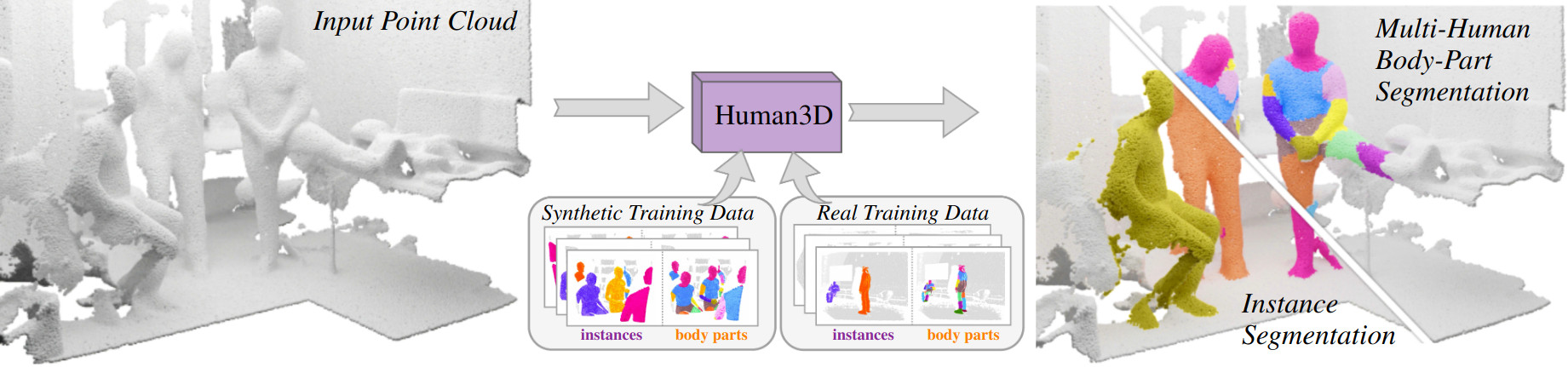

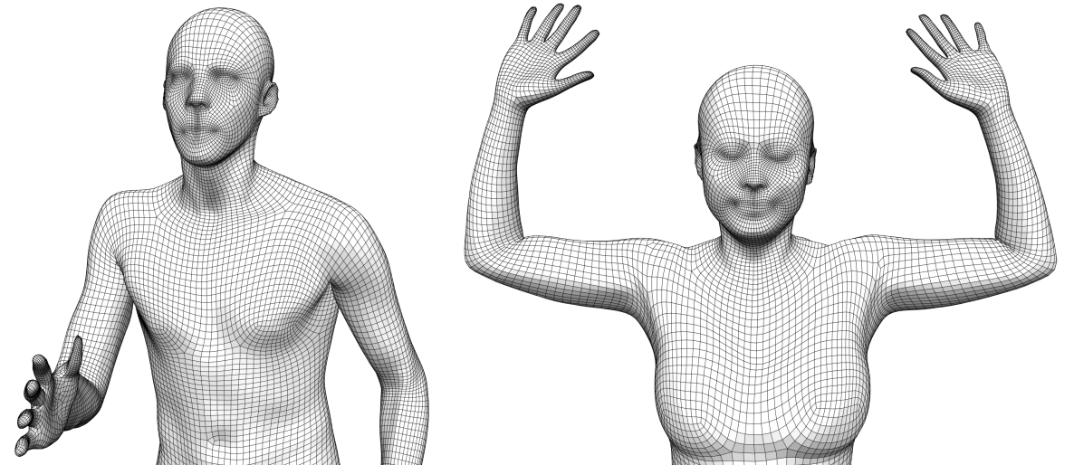

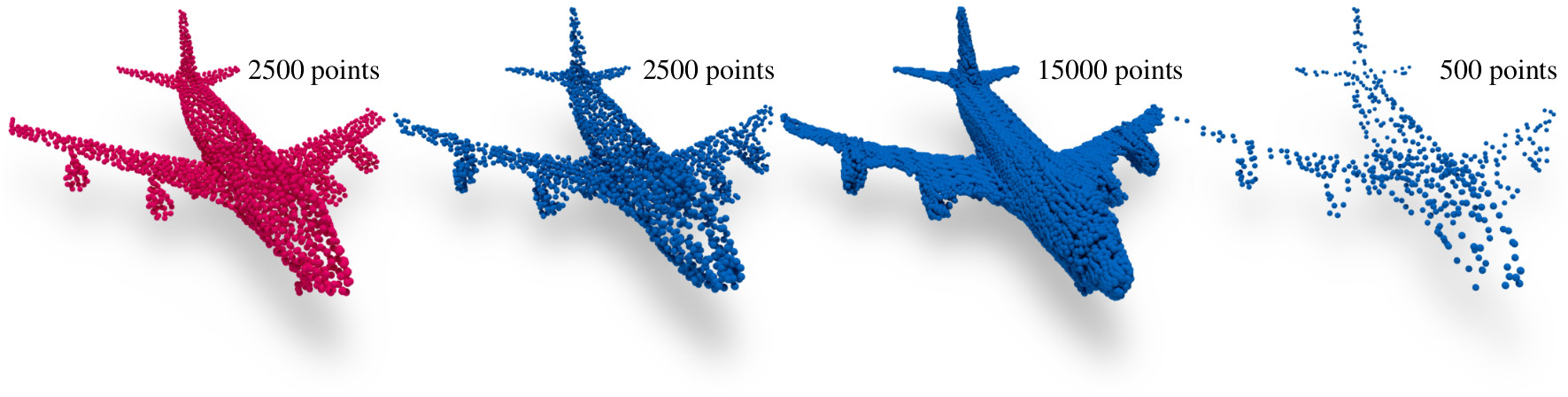

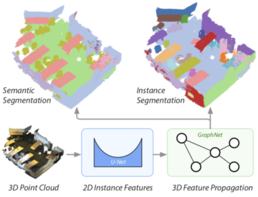

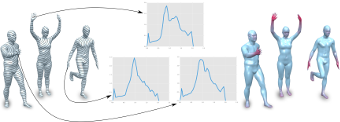

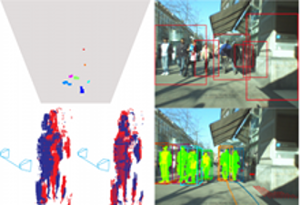

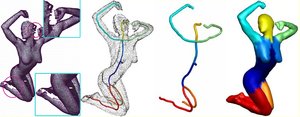

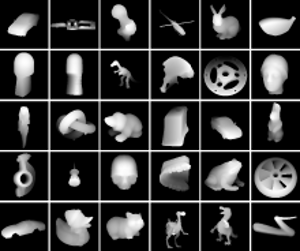

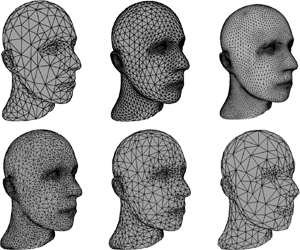

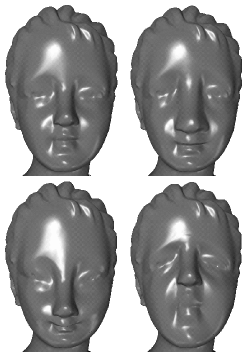

3D Segmentation of Humans in Point Clouds with Synthetic Data

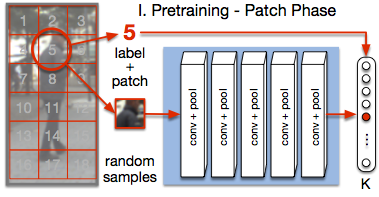

Segmenting humans in 3D indoor scenes has become increasingly important with the rise of human-centered robotics and AR/VR applications. In this direction, we explore the tasks of 3D human semantic-, instance- and multi-human body-part segmentation. Few works have attempted to directly segment humans in point clouds (or depth maps), which is largely due to the lack of training data on humans interacting with 3D scenes. We address this challenge and propose a framework for synthesizing virtual humans in realistic 3D scenes. Synthetic point cloud data is attractive since the domain gap between real and synthetic depth is small compared to images. Our analysis of different training schemes using a combination of synthetic and realistic data shows that synthetic data for pre-training improves performance in a wide variety of segmentation tasks and models. We further propose the first end-to-end model for 3D multi-human body-part segmentation, called Human3D, that performs all the above segmentation tasks in a unified manner. Remarkably, Human3D even outperforms previous task-specific state-of-the-art methods. Finally, we manually annotate humans in test scenes from EgoBody to compare the proposed training schemes and segmentation models.

@inproceedings{Takmaz23,

title = {{3D Segmentation of Humans in Point Clouds with Synthetic Data}},

author = {Takmaz, Ay\c{c}a and Schult, Jonas and Kaftan, Irem and Ak\c{c}ay, Mertcan and Leibe, Bastian and Sumner, Robert and Engelmann, Francis and Tang, Siyu},

booktitle = {{International Conference on Computer Vision (ICCV)}},

year = {2023}

}

Implicit Surface Tension for SPH Fluid Simulation